What the front-end monitoring implements

- Understand business traffic and user distribution

- Monitor the stability of business-defined key nodes (payment, IM messages, etc.)

- Monitor business system exceptions (exception details and the environment in which they occur)

- Monitor business performance (planned)

- Alerts and email tasks (planned)

Foreword

This article summarizes what I learned in practice while building a monitoring platform. Some of the syntax may be used incorrectly. Suggestions are welcome, so we can improve together!

Ideas for implementation

Introduction to Nginx

Nginx is known for its rich feature set. It can serve as an HTTP server, a reverse proxy server, or a mail server; it responds quickly to requests for static pages (HTML); it supports widely used features such as FastCGI, SSL, Virtual Host, URL Rewrite, HTTP Basic Auth, and Gzip; and it can be extended with many third-party modules.

From the book Nginx High Performance Web Server Details

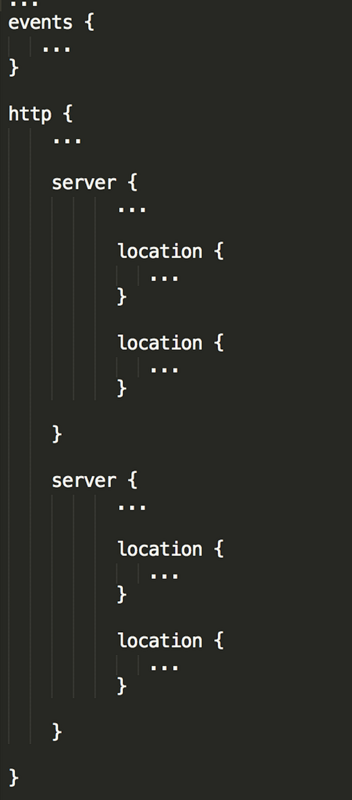

As shown in the picture:

The outermost curly braces divide the content into two parts. Together with the content at the very beginning, indicated by the ellipsis on the first line, nginx.conf consists of three parts: the global block, the events block, and the http block. The http block contains the http global block and multiple server blocks. Each server block can contain a server global block and multiple location blocks. Configuration blocks nested at the same level inside one block have no ordering relationship among them.

From Chapter 2.4 of Nginx High Performance Web Server Details

Ways to split Nginx logs

- Split with a shell script that works on the log directory

- Split based on built-in variables

Split with a shell script that works on the log directory

Run the script periodically through the Linux crontab (not recommended):

```bash
#!/bin/bash
# Nginx per-minute log cutting script

# Log file storage directory
logs_path="/data/nginx_log/logs/demo/"
# Nginx pid file
pid_path="/usr/local/nginx/logs/nginx.pid"

# Rename the current log file
mv "${logs_path}access.log" "${logs_path}access_$(date "+%Y-%m-%d-%H:%M:%S").log"

# Be careful!
# USR1 is also commonly used to tell an application to reload its configuration file.
# For example, sending a USR1 signal to the Apache HTTP server leads to the following steps:
# stop accepting new connections, wait for current connections to finish, reload the
# configuration file, reopen the log files, and restart the server, which gives a
# relatively smooth change without shutdown.
# For Nginx, USR1 makes the master process reopen its log files.
# Details: https://blog.csdn.net/fuming0210sc/article/details/50906372
kill -USR1 "$(cat "${pid_path}")"

echo "Let's start.~~~"
```
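For readers more at home in Node than in shell, the same two steps (rename the log, then signal the process named in the pid file) can be sketched as follows. This is only an illustration: the function names rotateLog and formatStamp and the injectable sendSignal parameter are assumptions made here so the sketch can be tried without a running Nginx.

```javascript
const fs = require('fs');
const path = require('path');

// Format a Date in local time as YYYY-MM-DD-HH:MM:SS,
// the same layout as date "+%Y-%m-%d-%H:%M:%S" in the shell script.
function formatStamp(d) {
  const p = n => String(n).padStart(2, '0');
  return `${d.getFullYear()}-${p(d.getMonth() + 1)}-${p(d.getDate())}-` +
    `${p(d.getHours())}:${p(d.getMinutes())}:${p(d.getSeconds())}`;
}

// Rename access.log, then send SIGUSR1 to the pid stored in the pid file.
// sendSignal is a hypothetical hook; by default it calls process.kill.
function rotateLog(logsPath, pidPath, now = new Date(),
                   sendSignal = (pid, sig) => process.kill(pid, sig)) {
  const rotated = path.join(logsPath, `access_${formatStamp(now)}.log`);
  fs.renameSync(path.join(logsPath, 'access.log'), rotated);
  const pid = Number(fs.readFileSync(pidPath, 'utf8').trim());
  sendSignal(pid, 'SIGUSR1');
  return rotated;
}
```

With the paths from the script above, a call would look like rotateLog('/data/nginx_log/logs/demo/', '/usr/local/nginx/logs/nginx.pid').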

Split based on built-in variables

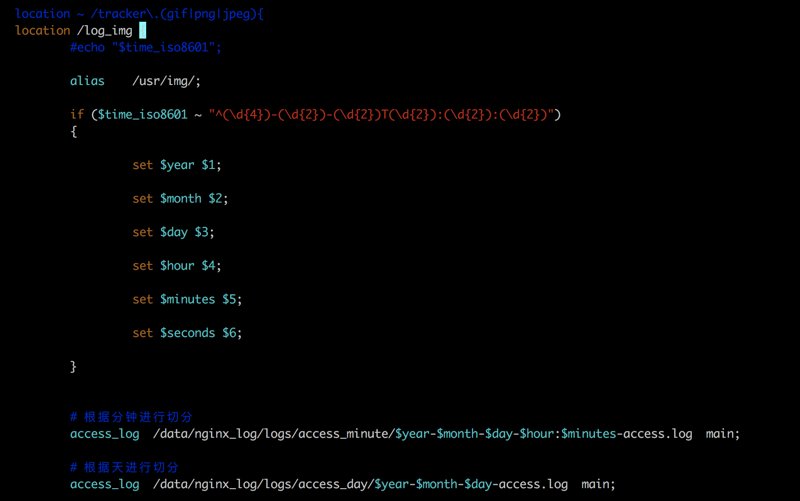

Name the log file inside the location block that matches the request, according to a matching rule (recommended).

Note: set is the Nginx directive that assigns a variable, similar to var and let in JavaScript. The configuration uses a regular expression to extract the components of the current time, assigns them to variables, and then uses those variables to split and name the log.

The regular expression works much like one in JavaScript, as shown in the following figure:
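The same kind of extraction can be sketched in JavaScript. The input below follows the layout of the Nginx built-in variable $time_iso8601. The function name minuteLogName and the resulting file name pattern are assumptions for illustration, not the configuration used in this article.

```javascript
// Extract date and time parts from an ISO 8601 timestamp with capture groups,
// then use them to build a per-minute log file name.
// Assumption: the file name pattern YYYY-MM-DD-HH-mm-access.log is illustrative only.
function minuteLogName(timeIso8601) {
  const m = /^(\d{4})-(\d{2})-(\d{2})T(\d{2}):(\d{2})/.exec(timeIso8601);
  if (!m) return null; // not an ISO 8601 timestamp
  const [, year, month, day, hour, minute] = m;
  return `${year}-${month}-${day}-${hour}-${minute}-access.log`;
}

console.log(minuteLogName('2019-07-18T10:05:33+08:00'));
// prints "2019-07-18-10-05-access.log"
```

Each pair of parentheses in the pattern plays the role of one captured variable, which is the idea behind assigning captured groups with set in the Nginx configuration.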

Read the logs with a shell script and store them in the database

I am not very familiar with advanced shell syntax, so the script is rather verbose. If you spot a mistake or have an optimization, please leave a comment.

```bash
#!/bin/bash
# ACCESSDAY='/data/nginx_log/logs/access_day/2019-07-18-access.log';
# Log folder
ACCESSDAY='/data/nginx_log/logs/access_minute/'
# Current time
This_time=$(date "+%Y-%m-%d %H:%M")
# Current timestamp
This_tamp=$(date -d "$This_time" +"%s")
# Time 5 minutes ago
Past_times=$(date -d "-5 minute" +"%Y-%m-%d %H:%M")
# Timestamp of 5 minutes ago
Past_Minute_tamp=$(date -d "$Past_times" +"%s")
# Yesterday's time
Yester_day=$(date -d "-1 day" +"%Y-%m-%d %H:%M")
# Yesterday's timestamp
Past_tamp=$(date -d "$Yester_day" +"%s")

# Print the timestamps for debugging
#echo ${This_tamp}
#echo ${Past_Minute_tamp}
#echo ${Past_tamp}
#echo $(date -d "$This_time" +"%s")
#echo $(date -d "$Past_times" +"%s")

echo "===============Script start======================"
echo $This_time

# Write to database
Write_Mysqls(){
    USER='xxxx'
    PASSWORD='xxxxx'
    # Log in to mysql; the closing EOF must start at the beginning of its line
    mysql -u "$USER" --password="$PASSWORD" <<EOF
use monitoringLogs;
select "Write to database";
insert into log_table(
    prject_id, user_id, log_type, ua_info, date, key_name,
    key_id, key_self_info, error_type, error_type_info,
    error_stack_info, target_url
) values(
    "${1}", "${2}", "${3}", "${4}", "${5}", "${6}",
    "${7}", "${8}", "${9}", "${10}", "${11}", "${12}"
);
EOF
    echo "=================Write to database completed======================"
    echo $This_time
}

Handle(){
    # Default values for fields that are missing
    prjectId='null'
    userId='null'
    logType='null'
    uaInfo='null'
    Dates='null'
    keyName='null'
    keyId='null'
    keySelfInfo='null'
    errorType='null'
    errorTypeInfo='null'
    errorStackInfo='null'
    targetUrl='null'

    # Take the value after "=" from each "key=value" argument.
    # Positional parameters above 9 need braces: ${10}, not $10.
    _prjectId=$(echo "$1" | awk -F '[=]' '{print $2}')
    _userId=$(echo "$2" | awk -F '[=]' '{print $2}')
    _logType=$(echo "$3" | awk -F '[=]' '{print $2}')
    _uaInfo=$(echo "$4" | awk -F '[=]' '{print $2}')
    _Dates=$(echo "$5" | awk -F '[=]' '{print $2}')
    _keyName=$(echo "$6" | awk -F '[=]' '{print $2}')
    _keyId=$(echo "$7" | awk -F '[=]' '{print $2}')
    _keySelfInfo=$(echo "$8" | awk -F '[=]' '{print $2}')
    _errorType=$(echo "$9" | awk -F '[=]' '{print $2}')
    _errorTypeInfo=$(echo "${10}" | awk -F '[=]' '{print $2}')
    _errorStackInfo=$(echo "${11}" | awk -F '[=]' '{print $2}')
    _targetUrl=$(echo "${12}" | awk -F '[=]' '{print $2}')

    # Replace the defaults with the values that are present
    [ -n "$_logType" ] && logType=$_logType
    [ -n "$_uaInfo" ] && uaInfo=$_uaInfo
    [ -n "$_Dates" ] && Dates=$_Dates
    [ -n "$_keyName" ] && keyName=$_keyName
    [ -n "$_keyId" ] && keyId=$_keyId
    [ -n "$_keySelfInfo" ] && keySelfInfo=$_keySelfInfo
    [ -n "$_errorType" ] && errorType=$_errorType
    [ -n "$_errorTypeInfo" ] && errorTypeInfo=$_errorTypeInfo
    [ -n "$_errorStackInfo" ] && errorStackInfo=$_errorStackInfo
    [ -n "$_targetUrl" ] && targetUrl=$_targetUrl

    # Quote every argument so that values keep their position
    Write_Mysqls "$_prjectId" "$_userId" "$logType" "$uaInfo" "$Dates" "$keyName" \
        "$keyId" "$keySelfInfo" "$errorType" "$errorTypeInfo" "$errorStackInfo" "$targetUrl"
}

# Extract each field from one log line
foreach_value(){
    # Field names defined by the collection side
    arr_log=(
        "prject_id" "user_id" "log_type" "ua_info" "date" "key_name"
        "key_id" "key_self_info" "error_type" "error_type_info"
        "error_stack_info" "target_url"
    )
    # Matching rule: "=" and everything up to (not including) the next "&".
    # Including "=" keeps error_type from also matching error_type_info.
    re="=.*?(?=&)"
    prject_id=$(echo "$1" | grep -oP "${arr_log[0]}${re}")
    user_id=$(echo "$1" | grep -oP "${arr_log[1]}${re}")
    log_type=$(echo "$1" | grep -oP "${arr_log[2]}${re}")
    ua_info=$(echo "$1" | grep -oP "${arr_log[3]}${re}")
    dates=$(echo "$1" | grep -oP "${arr_log[4]}${re}")
    key_name=$(echo "$1" | grep -oP "${arr_log[5]}${re}")
    key_id=$(echo "$1" | grep -oP "${arr_log[6]}${re}")
    key_self_info=$(echo "$1" | grep -oP "${arr_log[7]}${re}")
    error_type=$(echo "$1" | grep -oP "${arr_log[8]}${re}")
    error_type_info=$(echo "$1" | grep -oP "${arr_log[9]}${re}")
    error_stack_info=$(echo "$1" | grep -oP "${arr_log[10]}${re}")
    target_url=$(echo "$1" | grep -oP "${arr_log[11]}${re}")

    # Only continue when the required parameters are present
    if [ -n "$prject_id" ] && [ -n "$user_id" ]; then
        # Process the parameters
        Handle "$prject_id" "$user_id" "$log_type" "$ua_info" "$dates" "$key_name" \
            "$key_id" "$key_self_info" "$error_type" "$error_type_info" "$error_stack_info" "$target_url"
    fi
}

# Go through all files in the log folder
whlie_log(){
    for FLIE in $(ls ${ACCESSDAY})
    do
        # Drop the last 11 characters of the file name to keep the date part
        NEW_FLIE=$(echo $FLIE | awk -F '[-]' '{print substr($0,1,length($0)-11)}')
        # Cut out date and minute, and convert them to a timestamp for comparison
        CUT_TIME=$(echo ${NEW_FLIE} | awk -F "[-]" '{print $1"-"$2"-"$3" "$4$5 }')
        This_Sum_Tamp=$(date -d "$CUT_TIME" +"%s")
        if [ "$This_Sum_Tamp" -gt "$Past_tamp" ]; then
            # Process the file line by line; the trailing "&" lets the rule match the last field
            while IFS= read -r line; do
                foreach_value "${line}&"
            done < "$ACCESSDAY$FLIE"
        fi
    done
}

whlie_log
```
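The file selection step of the script (turn each file name into a timestamp and keep only files newer than a cutoff) can be sketched in JavaScript as below. The file name layout YYYY-MM-DD-HH-mm-access.log is an assumption, since the actual log naming comes from the Nginx configuration. The helper names fileTime and recentFiles are hypothetical.

```javascript
// Parse a name such as "2019-07-18-10-05-access.log" (assumed layout)
// into a local-time Date; return null for any other name.
function fileTime(name) {
  const m = /^(\d{4})-(\d{2})-(\d{2})-(\d{2})-(\d{2})-access\.log$/.exec(name);
  if (!m) return null;
  const [year, month, day, hour, minute] = m.slice(1).map(Number);
  return new Date(year, month - 1, day, hour, minute);
}

// Keep only the files whose timestamp is later than the cutoff.
function recentFiles(names, since) {
  return names.filter(name => {
    const t = fileTime(name);
    return t !== null && t.getTime() > since.getTime();
  });
}

// Example: files from the last 24 hours.
const cutoff = new Date(Date.now() - 24 * 60 * 60 * 1000);
console.log(recentFiles(['2019-07-18-10-05-access.log', 'notes.txt'], cutoff));
```

Matching the whole file name with one anchored pattern also skips unrelated files in the directory, which the substr-based cut in the shell script does not do.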

Read the logs with a Node script and store them in the database

- Install the Node dependencies [mysql, mysql2, sequelize]

- Create the directory structure

- Write the logic

Install the Node dependencies

```bash
npm install mysql
npm install mysql2
npm install sequelize
```

Create the directory structure

Create the directory structure, following MVC principles.

index.js: entry logic

```javascript
const fs = require('fs');
const path = require('path');
const readline = require('readline');
const getLogInfo = require('./models');

// Log directories
const _day = '/data/nginx_log/logs/access_day';       // Daily
const _minute = '/data/nginx_log/logs/access_minute'; // Per minute

// Synchronously list all log files in the directory
const AllJournal = fs.readdirSync(_minute)
AllJournal.map(item => `${_minute}/${item}`).forEach(item => {
    // Read the file stream line by line
    readLines(item)
})

async function readLines(_file){
    // Create a readable stream
    const getData = fs.createReadStream(_file)
    // Read by line
    const rl = readline.createInterface({
        input: getData,     // The readable stream to listen to
        crlfDelay: Infinity // Delay between \r and \n, default is 100
    });
    // Handle each line asynchronously
    for await (const line of rl) {
        getInfo(line)
    }
}

// Split one log line into its fields
function getInfo(url) {
    // Append "&" so that the regular expression can also match the last field
    url = `${url}&`
    const arr_log = [
        "prject_id",
        "user_id",
        "log_type",
        "ua_info",
        "date",
        "key_name",
        "key_id",
        "key_self_info",
        "error_type",
        "error_type_info",
        "error_stack_info",
        "target_url",
    ]
    const reChek = arr_log.map(item => {
        // Include "=" so that error_type does not match error_type_info
        const itemRe = new RegExp(`${item}=.*?(?=&)`, 'g')
        const matched = url.match(itemRe)
        // Skip fields that do not appear in this line
        return matched ? matched[0] : null
    }).filter(Boolean).map(item => {
        // Split on the first "=" only, so values containing "=" stay intact
        const index = item.indexOf('=')
        const _key = item.slice(0, index)
        const _val = item.slice(index + 1)
        return {
            [_key]: _val
        }
    })
    // Merge the fields into one record
    const checkLogList = {}
    reChek.forEach(item => {
        Object.assign(checkLogList, item);
    })
    // Store the data of each line in the database
    getLogInfo(checkLogList)
}
```
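As an alternative to one regular expression per field, the query string can be handed to the built-in URLSearchParams class, which also decodes percent-encoded values. The sketch below assumes that each log line contains the request URL with a "?" followed by key=value pairs, and that the query ends at the first whitespace or double quote. The real layout depends on the log_format in the Nginx configuration, and the function name parseLogLine is hypothetical.

```javascript
const FIELDS = [
  'prject_id', 'user_id', 'log_type', 'ua_info', 'date', 'key_name',
  'key_id', 'key_self_info', 'error_type', 'error_type_info',
  'error_stack_info', 'target_url',
];

// Parse one log line into an object holding exactly the known fields.
// Fields missing from the line are set to null; a line without "?" yields null.
function parseLogLine(line) {
  const start = line.indexOf('?');
  if (start === -1) return null;
  // Assumption: the query string ends at the first whitespace or double quote.
  const query = line.slice(start + 1).split(/[\s"]/)[0];
  const params = new URLSearchParams(query);
  const record = {};
  for (const field of FIELDS) {
    record[field] = params.has(field) ? params.get(field) : null;
  }
  return record;
}

console.log(parseLogLine('GET /log.gif?prject_id=p1&user_id=u1&error_type=2 HTTP/1.1'));
```

Because lookups are by exact parameter name, error_type and error_type_info cannot be confused, and the order of parameters in the URL does not matter.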

config/db.js

```javascript
const Sequelize = require('sequelize');

/**
 * Database configuration item [https://sequelize.org/v5/class/lib/sequelize.js~sequelize.html#instance-constructor-constructor]
 *
 * @typedef {Object} MySql
 * @property {string} host      IP address of the database
 * @property {string} user      Database user name
 * @property {string} password  Database password
 * @property {string} database  Database name
 * @property {string} dialect   Database type, one of mysql, postgres, sqlite and mssql
 * @property {string} port      Database port
 * @property {Object} pool      Connection pool configuration
 */
const sqlConfig = {
    host: "xxxx",
    user: "xxx",
    password: "xxxx",
    database: "xxxx"
};

const sequelize = new Sequelize(sqlConfig.database, sqlConfig.user, sqlConfig.password, {
    host: sqlConfig.host,
    dialect: 'mysql',
    port: '3306',
    pool: {
        max: 100,       // Maximum connections in pool
        min: 10,        // Minimum connections in pool
        acquire: 30000, // The maximum time (in milliseconds) that the pool will attempt to get a connection before throwing an error
        idle: 10000     // The maximum time (in milliseconds) that a connection can be idle before it is released
    }
});

// Export the instance so that config/index.js can use it
module.exports = sequelize;
```

config/index.js

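```javascript
const Db = require('./db');

// Test the database connection on startup
Db
    .authenticate()
    .then(() => {
        console.log('Connected to the database successfully');
    })
    .catch(err => {
        console.error('Connection error:', err);
    });

// Export the instance so that the models can call Config.define
module.exports = Db;
```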

models/index.js

```javascript
const logTable = require('./logTable');

// Store the log record passed in by the caller
const getLogInfo = function (log) {
    return logTable.bulkCreate([log])
}

// logTable.bulkCreate([
//     {
//         prject_id: 'ceshi11111111111',
//         user_id: 'ceshi',
//         log_type: 'ceshi',
//         ua_info: 'ceshi',
//         date: '20180111',
//         key_name: 'ceshi',
//         key_self_info: 'ceshi',
//         error_type: 'ceshi',
//         error_type_info: 'ceshi',
//         error_stack_info: 'ceshi',
//         target_url: 'ceshi',
//         key_id: 'ceshi',
//     },
//     {
//         prject_id: 'hhhhh',
//         user_id: 'ceshi',
//         log_type: 'ceshi',
//         ua_info: 'ceshi',
//         date: '20180111',
//         key_name: 'ceshi',
//         key_self_info: 'ceshi',
//         error_type: 'ceshi',
//         error_type_info: 'ceshi',
//         error_stack_info: 'ceshi',
//         target_url: 'ceshi',
//         key_id: 'ceshi',
//     }
// ])

// ;(async function (){
//     const all = await logTable.findAll();
//     console.log(all)
//     console.log('query multiple statements')
// }())

module.exports = getLogInfo
```

models/logTable.js

Define the model yourself, according to the column types of your database table.

```javascript
const { DataTypes } = require('sequelize');
const Config = require('../config');

const logTable = Config.define('LogTableModel', {
    prject_id: {
        field: 'prject_id',
        type: DataTypes.STRING(255),
        allowNull: false
    },
    user_id: {
        field: 'user_id',
        type: DataTypes.STRING(255),
        allowNull: false
    },
    log_type: {
        field: 'log_type',
        type: DataTypes.INTEGER(64),
        allowNull: false
    },
    ua_info: {
        field: 'ua_info',
        type: DataTypes.STRING(1000),
        allowNull: false
    },
    date: {
        field: 'date',
        type: DataTypes.DATE,
        allowNull: false
    },
    key_name: {
        field: 'key_name',
        type: DataTypes.STRING(255),
        allowNull: false
    },
    key_self_info: {
        field: 'key_self_info',
        type: DataTypes.STRING(1000),
        allowNull: false
    },
    error_type: {
        field: 'error_type',
        type: DataTypes.INTEGER(64),
        allowNull: false
    },
    error_type_info: {
        field: 'error_type_info',
        type: DataTypes.STRING(1000),
        allowNull: false
    },
    error_stack_info: {
        field: 'error_stack_info',
        type: DataTypes.STRING(1000),
        allowNull: false
    },
    target_url: {
        field: 'target_url',
        type: DataTypes.STRING(1000),
        allowNull: false
    },
    key_id: {
        field: 'key_id',
        type: DataTypes.STRING(64),
        allowNull: false
    },
}, {
    timestamps: false,
    tableName: 'log_table'
})

module.exports = logTable;
```
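Every column in the model is declared with allowNull: false, so a record that lacks a field would be rejected when it is inserted. A small check before calling bulkCreate makes such records visible early. The sketch below is one possible way to do it; the helper name missingFields is hypothetical and not part of the project.

```javascript
// Column names taken from the model definition above.
const REQUIRED_FIELDS = [
  'prject_id', 'user_id', 'log_type', 'ua_info', 'date', 'key_name',
  'key_self_info', 'error_type', 'error_type_info', 'error_stack_info',
  'target_url', 'key_id',
];

// Return the names of required fields that are undefined or null in the record.
function missingFields(record) {
  return REQUIRED_FIELDS.filter(
    field => record[field] === undefined || record[field] === null
  );
}

// Example: a record parsed from a log line that only carried two fields.
console.log(missingFields({ prject_id: 'p1', user_id: 'u1' }));
```

A caller could skip or log the record when the returned list is not empty, or fill in defaults such as the 'null' strings used by the shell script.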