The filter is the second of Logstash's three components. It is the most complex and most painful part of the tool and, of course, the most useful one.

1. The grok plug-in

The grok plug-in is very powerful: it can match all kinds of data, but it is often criticized for its performance and resource consumption.

    filter {
      grok {
        # Only the match attribute is used here: it cuts the time out of the
        # message field and assigns it to another field, logdate.
        # First, all text data arrives in Logstash's message field, so message
        # is the data we operate on in the filter.
        # Second, grok is a very resource-intensive plug-in, which is why I only
        # explain the single TIMESTAMP_ISO8601 pattern.
        # Third, grok ships with a great many predefined patterns, far more than
        # can be covered here. You may find the one you need in this excellent article:
        # http://blog.csdn.net/liukuan73/article/details/52318243
        # Still, I don't recommend relying on grok, because other plug-ins can
        # replace it. It is, however, a convenient way to extract the time.
        match => ['message', '%{TIMESTAMP_ISO8601:logdate}']
      }
    }
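To make concrete what the match above does, here is a minimal Ruby sketch that runs outside Logstash. The regular expression `ISO8601_LIKE` is a simplified stand-in written for illustration, not grok's actual TIMESTAMP_ISO8601 definition, and `extract_logdate` and the sample line are hypothetical.

```ruby
# Simplified stand-in for grok's TIMESTAMP_ISO8601 pattern (assumption: the
# real pattern accepts more variants than this one does).
ISO8601_LIKE = /\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}(?::\d{2}(?:[.,]\d+)?)?(?:Z|[+-]\d{2}:?\d{2})?/

# Returns a copy of the event with a 'logdate' field added when the
# 'message' field contains a timestamp; otherwise returns the event unchanged.
def extract_logdate(event)
  match = ISO8601_LIKE.match(event['message'].to_s)
  return event unless match

  event.merge('logdate' => match[0])
end

event = { 'message' => '2017-03-01T10:15:30+08:00 INFO order created' }
puts extract_logdate(event)['logdate']
```

When nothing matches, the sketch simply leaves the event alone. Logstash additionally tags such an event with `_grokparsefailure`, which is worth checking for when a pattern seems to do nothing.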

2. The mutate plug-in

The mutate plug-in is used to process the format of data. You can use it to adjust the format of a time value, or to change a string to a number type (provided the value is legal), and the reverse conversion works as well. The conversion types that can be set include "integer", "float", and "string".

    filter {
      mutate {
        # convert receives an array of the form field, type, field, type, ...
        # Note that the data must be legal for the conversion: you cannot
        # turn the string 'abc' into a number such as 123.
        convert => [
          # Convert the value of request_time to a floating-point number
          "request_time", "float",
          # Convert the value of costTime to an integer
          "costTime", "integer"
        ]
      }
    }
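The warning about legal values can be illustrated in plain Ruby. This is only a sketch of strict conversion built on Ruby's `Integer()` and `Float()`; it is not the mutate plug-in's implementation, and `convert_value` is a hypothetical helper. How mutate itself treats an unparsable value may differ, so verify that against your Logstash version.

```ruby
# Hypothetical helper: strictly convert a raw value to the named type.
# Raises ArgumentError when the value is not legal for that type.
def convert_value(raw, type)
  case type
  when 'integer' then Integer(raw.to_s, 10)
  when 'float'   then Float(raw.to_s)
  when 'string'  then raw.to_s
  else raise ArgumentError, "unsupported type: #{type}"
  end
end

event = { 'request_time' => '0.35', 'costTime' => '12' }
event['request_time'] = convert_value(event['request_time'], 'float')
event['costTime']     = convert_value(event['costTime'], 'integer')
p event

begin
  convert_value('abc', 'integer')
rescue ArgumentError => e
  puts "cannot convert: #{e.message}"
end
```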

3. The ruby plug-in

The official introduction to the ruby plug-in sums it up in one word: everything. The ruby plug-in can use any Ruby syntax, whether logical tests, conditional statements, loops, string operations, or operations on the event object.

    filter {
      ruby {
        # The ruby plug-in has two attributes: init and code.
        # init is used for initialization. Here you can set up a variable of
        # any type, and it is only valid inside this ruby {} block.
        # Here I initialize a hash named field, which can be used in the code
        # attribute below. It is an instance variable so that code can see it.
        init => "@field = {}"
        # The code attribute is delimited by a pair of quotation marks, and any
        # Ruby syntax can be used between them.
        # Next, I process one piece of data.
        # Step 1: get the value of the message field and split it on '|',
        # which yields an array (Ruby string operations).
        # Step 2: loop over the array and check whether each value is data
        # I need (Ruby loop and conditional syntax).
        # Step 3: add the fields I need to the event object.
        # Step 4: compute the MD5 hash of one of the values.
        # What is the event object? event is a Logstash object that you can
        # manipulate in the code attribute of the ruby plug-in: you can add,
        # delete, and modify its fields and perform other operations on them.
        # Computing an MD5 hash requires loading the corresponding library.
        code => "
          require 'digest/md5'
          array = event.get('message').split('|')
          array.each do |value|
            if value.include?('MD5_VALUE')
              md5 = Digest::MD5.hexdigest(value)
              event.set('md5', md5)
            end
            if value.include?('DEFAULT_VALUE')
              event.set('value', value)
            end
          end
        "
        # Finally, the redundant message field is removed. remove_field is an
        # option of the filter, so it belongs outside the code string.
        remove_field => ["message"]
      }
    }
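The logic inside code can be tried outside Logstash before it goes into a pipeline. The sketch below uses a tiny stand-in class, `FakeEvent`, made up here to mimic only the `get`, `set`, and `remove` calls of the event object. The `process` helper and the sample message are invented as well.

```ruby
require 'digest/md5'

# Hypothetical stand-in for the Logstash event: only get/set/remove.
class FakeEvent
  def initialize(fields)
    @fields = fields.dup
  end

  def get(name)
    @fields[name]
  end

  def set(name, value)
    @fields[name] = value
  end

  def remove(name)
    @fields.delete(name)
  end

  def to_hash
    @fields.dup
  end
end

# Same steps as the filter: split, loop, test, set fields, drop message.
def process(event)
  event.get('message').split('|').each do |value|
    event.set('md5', Digest::MD5.hexdigest(value)) if value.include?('MD5_VALUE')
    event.set('value', value) if value.include?('DEFAULT_VALUE')
  end
  event.remove('message')
  event
end

event = process(FakeEvent.new('message' => 'MD5_VALUE=abc|DEFAULT_VALUE=42|other'))
puts event.to_hash.keys.sort.join(', ')
# prints "md5, value"
```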

4. The date plug-in

The date plug-in is used together with the logdate value that the grok plug-in extracted earlier (of course, you may not be using grok).

    filter {
      date {
        # Remember the logdate field extracted by the grok plug-in? This is
        # where it is used. The pattern in match must describe the layout your
        # logdate values actually have, so check your own data.
        # Why parse it?
        # This matters for older data, because you need to set the value of
        # the @timestamp field. If you don't, the time stored in ES is the
        # time at which the event was processed (in the +0 time zone), not
        # the time at which it happened.
        # Once parsed, target writes the result to @timestamp so that the time
        # of your data is accurate, which matters when you build charts later.
        # Finally, the logdate field is of no further value, so we remove it
        # from the event object.
        match => ["logdate", "dd/MMM/yyyy:HH:mm:ss Z"]
        target => "@timestamp"
        remove_field => ["logdate"]
        # Also note: whether or not you modify @timestamp, it should ideally
        # hold a point in time that belongs to your data.
        # For a log, use grok to extract the time. For a database, pick a
        # field to parse, for example: "timeat", "%{TIMESTAMP_ISO8601:logdate}"
        # timeat is a time field in my database.
        # If you have no such field, don't try to modify @timestamp.
      }
    }
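For a feel of what the date match does, the following Ruby sketch parses a value laid out as dd/MMM/yyyy:HH:mm:ss Z and normalizes it to UTC. It relies on Ruby's `Time.strptime` with the roughly equivalent directive string `%d/%b/%Y:%H:%M:%S %z`, so it is an analogy rather than the plug-in's own implementation. `to_timestamp` and the sample value are hypothetical.

```ruby
require 'time'

# Hypothetical helper: parse an access-log style timestamp and return it in
# UTC, which is how @timestamp is stored. Raises ArgumentError on bad input.
def to_timestamp(logdate)
  Time.strptime(logdate, '%d/%b/%Y:%H:%M:%S %z').utc
end

ts = to_timestamp('01/Mar/2017:18:15:30 +0800')
puts ts.iso8601
# prints "2017-03-01T10:15:30Z"
```

The eight-hour shift in the output is the "+0 time zone" effect mentioned in the comments: the stored value is UTC, whatever offset the original log line carried.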

5. The json plug-in

The json plug-in is also very useful. Log information today mostly follows a fixed format, so we can use the json plug-in to parse it and get the value of each field.

    filter {
      # source specifies which field holds the JSON data.
      json {
        source => "value"
      }
      # Note: if your JSON data is multilevel, the parsed result keeps that
      # nesting, including any arrays. You can process it with Ruby syntax
      # and eventually flatten all the data.
    }

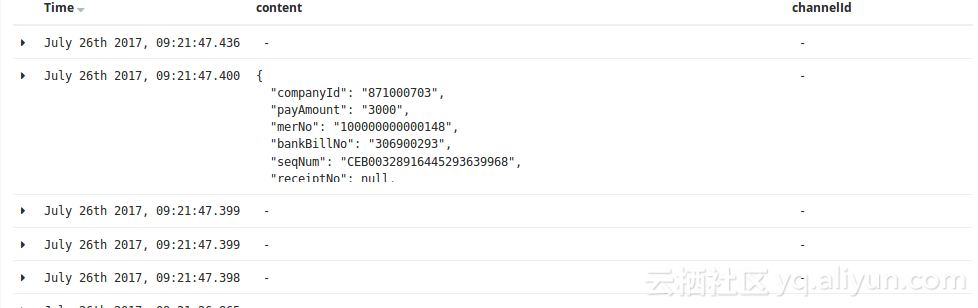

The json plug-in needs some care in how it is used. A multilevel structure has the drawback that the image illustrates:

The corresponding solution is:

    ruby {
      code => "
        kv = event.get('content')[0]
        kv.each do |k, v|
          event.set(k, v)
        end
      "
      remove_field => ['content', 'value', 'receiptNo', 'channelId', 'status']
    }
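The same flattening can be checked in plain Ruby first. In this sketch the JSON document, the keys `amount` and `currency`, and the helper `flatten_content` are invented for illustration. Only the field names content, value, receiptNo, channelId, and status come from the snippet above, and how they nest is an assumption.

```ruby
require 'json'

# Hypothetical helper: parse the JSON held in 'value', lift the keys of the
# first element of 'content' to the top level, then drop the listed fields.
def flatten_content(event, drop)
  parsed = event.merge(JSON.parse(event['value']))
  first = parsed['content'][0]
  parsed.merge(first).reject { |key, _| drop.include?(key) }
end

event = { 'value' => '{"receiptNo":"R1","status":"ok","content":[{"amount":"10","currency":"CNY"}]}' }
drop = %w[content value receiptNo channelId status]
puts flatten_content(event, drop).keys.sort.join(', ')
# prints "amount, currency"
```

Only the first element of content is lifted, exactly as in the filter. If the array can hold more than one element, the remaining ones are discarded, which may or may not be what you want.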

That concludes the plug-ins of the Logstash filter component. There is one more thing you need to understand:

add_field, remove_field, add_tag, and remove_tag are options available on all Logstash filter plug-ins. As their names suggest, they add or remove fields and tags. It is best to try them yourself.