Data Set Introduction

CIFAR-10, like MNIST, is a data set well suited to getting started with machine learning. It contains 50,000 training pictures and 10,000 test pictures, each belonging to one of ten categories. In this article, we build a very simple convolutional neural network (CNN) and train it on this data set.
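The ten categories are stored as integer labels from 0 to 9. The small helper below maps each index to its class name, in the order used by the official CIFAR-10 distribution. It can be handy when inspecting individual pictures or predictions. The names `CLASS_NAMES` and `label_name` are our own and are not part of Keras.

```python
# Hypothetical helper: CIFAR-10 label index -> class name.
# The order follows the official CIFAR-10 label list.
CLASS_NAMES = [
    "airplane", "automobile", "bird", "cat", "deer",
    "dog", "frog", "horse", "ship", "truck",
]

def label_name(index: int) -> str:
    """Return the class name for an integer label in the range 0 to 9."""
    return CLASS_NAMES[index]
```

Because `cifar10.load_data()` returns labels with shape `(N, 1)`, the label of the first training picture would be looked up as `label_name(int(labels[0][0]))`.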

Data Set Import and Processing

```python
import os
os.environ['KERAS_BACKEND'] = 'tensorflow'  # must be set before keras is imported

import numpy as np
import matplotlib.pyplot as plt
import keras
from keras.datasets import cifar10
from keras.layers import Conv2D, MaxPooling2D, Dropout, Dense, Flatten, BatchNormalization
from keras.utils import plot_model
```

The data set is bundled with Keras, so it can be downloaded and loaded with a single call:

```python
(images, labels), (images_test, labels_test) = cifar10.load_data()
```

This loads the data set. Each picture is a 32 x 32 x 3 RGB image stored as 8-bit integers from 0 to 255. First, we normalize the pixel values to the range [0, 1]:

```python
images = images / 255.0
images_test = images_test / 255.0
```
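In NumPy, dividing a `uint8` array by `255.0` produces a `float64` array, so the 50,000 training pictures take roughly 1.2 GB of memory after normalization. A common variation, which is our suggestion rather than what the code above does, is to cast to `float32` first. This halves the memory and matches Keras's default float type. The sketch below uses a small random batch shaped like CIFAR-10 instead of the real data. The function name `normalize` is hypothetical.

```python
import numpy as np

def normalize(images):
    """Scale uint8 pixels in [0, 255] to float32 values in [0, 1]."""
    return images.astype(np.float32) / 255.0

# Synthetic stand-in for CIFAR-10: 8 RGB pictures of 32 x 32 pixels.
rng = np.random.default_rng(0)
batch = rng.integers(0, 256, size=(8, 32, 32, 3), dtype=np.uint8)
scaled = normalize(batch)

print(scaled.shape, scaled.dtype)   # (8, 32, 32, 3) float32
print((batch / 255.0).dtype)        # float64, as produced by the original code

# Memory for the full training set stored as float64 (8 bytes per value).
print(50000 * 32 * 32 * 3 * 8 / 1e9, "GB")
```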

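The labels are integers from 0 to 9, so we also convert them to one-hot encoding: a length-10 vector with a 1 at the class index and 0 elsewhere. This is the format expected by the `categorical_crossentropy` loss used for training.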

```python
labels_onehot = np.zeros([labels.shape[0], 10])
labels_test_onehot = np.zeros([labels_test.shape[0], 10])

# Put a 1 in the column given by each picture's label
for i, j in enumerate(labels):
    labels_onehot[i][j] = 1

for i, j in enumerate(labels_test):
    labels_test_onehot[i][j] = 1
```
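The two loops can also be written in vectorized form. Indexing the 10 x 10 identity matrix with the integer labels selects one one-hot row per picture. Keras also provides `keras.utils.to_categorical(labels, 10)`, which builds the same matrix. The sketch below uses only NumPy and compares the result with the loop above on a few made-up labels shaped like the `(N, 1)` array from `cifar10.load_data()`. The helper names are our own.

```python
import numpy as np

def one_hot(labels, num_classes=10):
    """Vectorized one-hot encoding for integer labels of shape (N,) or (N, 1)."""
    return np.eye(num_classes)[np.asarray(labels).ravel()]

def one_hot_loop(labels, num_classes=10):
    """The loop from the article, wrapped in a function for comparison."""
    out = np.zeros([labels.shape[0], num_classes])
    for i, j in enumerate(labels):
        out[i][j] = 1
    return out

sample_labels = np.array([[6], [9], [9], [4], [1]])  # made-up labels, shape (5, 1)
assert np.array_equal(one_hot(sample_labels), one_hot_loop(sample_labels))
print(one_hot(sample_labels)[0])  # [0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]
```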

Building the CNN

Now we can use Keras to build a simple convolutional neural network:

```python
dropout_rate = 0.3
batch_size = 256
epochs = 50  # the training run reported below uses 50 epochs

model = keras.Sequential()

# Five blocks of Conv2D -> MaxPooling2D -> Dropout -> BatchNormalization.
# The number of filters doubles in each block while pooling halves height and width.
model.add(Conv2D(16, (3, 3), padding='same', input_shape=(32, 32, 3), activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(dropout_rate))
model.add(BatchNormalization())

model.add(Conv2D(32, (3, 3), padding='same', activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(dropout_rate))
model.add(BatchNormalization())

model.add(Conv2D(64, (3, 3), padding='same', activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(dropout_rate))
model.add(BatchNormalization())

model.add(Conv2D(128, (3, 3), padding='same', activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(dropout_rate))
model.add(BatchNormalization())

model.add(Conv2D(256, (3, 3), padding='same', activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(dropout_rate))
model.add(BatchNormalization())

# Classifier head: flatten the feature maps, then two fully connected layers
model.add(Flatten())
model.add(Dense(128, activation='relu'))
model.add(Dropout(dropout_rate))
model.add(Dense(10, activation='softmax'))  # one probability per class

model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['accuracy'])
```
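Every convolution uses `padding='same'` and every pooling layer halves the height and width. The five blocks therefore shrink the 32 x 32 input to 16, 8, 4, 2 and finally 1 pixel, so `Flatten` outputs only 256 values. The pure-Python sketch below walks through the same stack and counts parameters with the standard formulas:

- A 3 x 3 convolution has 3 * 3 * C_in * C_out weights plus C_out biases.
- A `Dense` layer has n_in * n_out + n_out parameters.
- Keras's `BatchNormalization` stores 4 values per channel: 2 trainable, plus 2 non-trainable moving statistics.

`Dropout` and pooling layers have no parameters. Assuming default layer settings, the total should agree with what `model.summary()` reports. The helper names are hypothetical.

```python
def conv_block_stats(in_channels, filters, size):
    """Parameters and output size of Conv2D(3x3, same) + MaxPooling2D(2) + BatchNormalization."""
    conv = 3 * 3 * in_channels * filters + filters  # kernel weights + biases
    bn = 4 * filters                                # gamma, beta, moving mean, moving variance
    return conv + bn, size // 2                     # 'same' padding keeps size; pooling halves it

def count_parameters(filters=(16, 32, 64, 128, 256), dense_units=128, num_classes=10):
    total, channels, size = 0, 3, 32                # RGB input of 32 x 32 pixels
    for f in filters:
        params, size = conv_block_stats(channels, f, size)
        total += params
        channels = f
        print(f"after the {f}-filter block: {size} x {size} x {f}")
    flat = size * size * channels                   # length of the Flatten output
    total += flat * dense_units + dense_units       # Dense(128)
    total += dense_units * num_classes + num_classes  # Dense(10)
    return total

print(count_parameters())  # 428778
```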

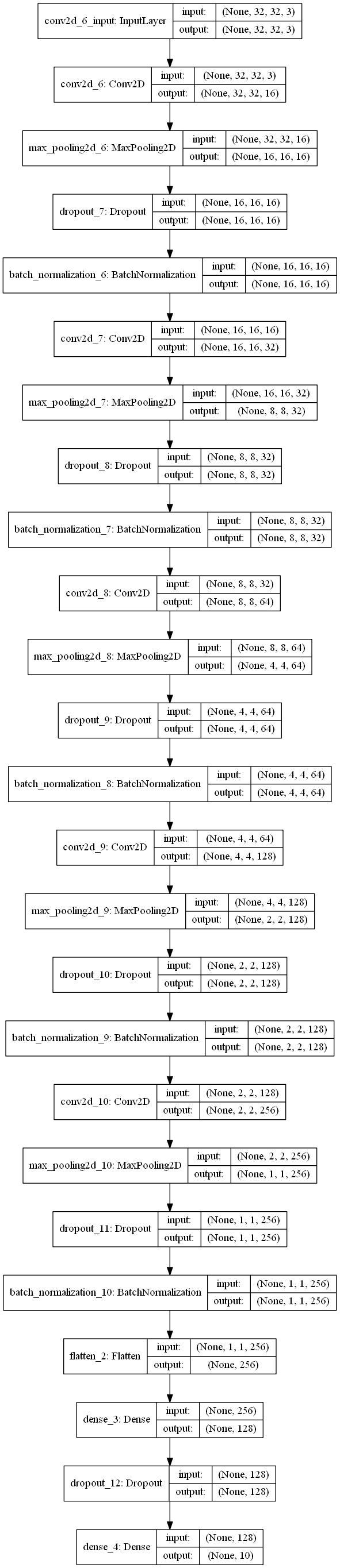

This is a very basic CNN model, we can draw it through keras:

plot_model(model, show_shapes=True)

We get the following images:

Finally, we train the model. The 10,000 test pictures are passed as validation data, so Keras reports the test accuracy after every epoch.

```python
# validation_data is given as a tuple (x, y)
history = model.fit(images, labels_onehot, batch_size=256, epochs=50,
                    validation_data=(images_test, labels_test_onehot))
```
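With `batch_size=256`, Keras splits the 50,000 training pictures into mini-batches and performs one weight update per batch. The quick calculation below, in plain Python rather than any Keras API, shows how many updates that amounts to. The last batch of each epoch is smaller because 50,000 is not a multiple of 256.

```python
import math

num_train, batch_size, epochs = 50_000, 256, 50

steps_per_epoch = math.ceil(num_train / batch_size)          # batches per epoch
last_batch = num_train - (steps_per_epoch - 1) * batch_size  # size of the final, partial batch
total_updates = steps_per_epoch * epochs                     # weight updates over all epochs

print(steps_per_epoch, last_batch, total_updates)  # 196 80 9800
```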

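```python
# Older Keras versions log accuracy as "acc", newer ones as "accuracy"
acc_key = "accuracy" if "accuracy" in history.history else "acc"

plt.plot(history.epoch, history.history["loss"], label="loss")
plt.plot(history.epoch, history.history[acc_key], label="accuracy")
plt.xlabel("epoch")
plt.legend()
plt.show()
```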
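`model.fit` returns a `History` object. Its `history` attribute is a plain dict that maps each metric name to a list with one value per epoch. Older Keras versions, like the one that produced the log in this article, name the validation accuracy `val_acc`, while newer ones use `val_accuracy`. The hypothetical helper below accepts either spelling and returns the epoch with the best validation accuracy, which helps show when further training stops paying off.

```python
def best_epoch(history_dict):
    """Return (epoch_number, val_accuracy) for the best validation accuracy.

    Accepts both the old ('val_acc') and new ('val_accuracy') Keras metric names.
    Epoch numbers are 1-based to match the 'Epoch k/N' lines that Keras prints.
    """
    key = "val_accuracy" if "val_accuracy" in history_dict else "val_acc"
    scores = history_dict[key]
    best = max(range(len(scores)), key=scores.__getitem__)  # first index of the maximum
    return best + 1, scores[best]
```

After training, it would be called as `best_epoch(history.history)`.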

The last line of the training log reads:

```
Epoch 50/50 50000/50000 [==============================] - 70s 1ms/step - loss: 0.7553 - acc: 0.7339 - val_loss: 0.7950 - val_acc: 0.7266
```

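After 50 epochs, this simple CNN reaches 73.4% accuracy on the training set and 72.7% on the 10,000 test pictures. It therefore correctly classifies more than 70% of images it has never seen. The training and test accuracies are close, so the model shows little overfitting at this point.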