1, Video rendering first needs the video frames themselves. Here they are read with AVAssetReader; see https://www.cnblogs.com/czwlinux/p/15779598.html for how the video is acquired. The frames are requested in the kCVPixelFormatType_420YpCbCr8BiPlanarFullRange pixel format.

2, Converting kCVPixelFormatType_420YpCbCr8BiPlanarFullRange frames to RGB

1. For background on the color space conversion, see https://www.jianshu.com/p/5b437c1df48e.

```objc
// BT.601, which is the standard for SDTV (video range).
matrix_float3x3 kColorConversion601Default = (matrix_float3x3){
    (simd_float3){1.164, 1.164, 1.164},
    (simd_float3){0.0, -0.392, 2.017},
    (simd_float3){1.596, -0.813, 0.0},
};

// BT.601 full range (ref: http://www.equasys.de/colorconversion.html)
matrix_float3x3 kColorConversion601FullRangeDefault = (matrix_float3x3){
    (simd_float3){1.0, 1.0, 1.0},
    (simd_float3){0.0, -0.343, 1.765},
    (simd_float3){1.4, -0.711, 0.0},
};

// BT.709, which is the standard for HDTV (video range).
// Declared as a single matrix, not an array, so it matches the two tables above.
matrix_float3x3 kColorConversion709Default = (matrix_float3x3){
    (simd_float3){1.164, 1.164, 1.164},
    (simd_float3){0.0, -0.213, 2.112},
    (simd_float3){1.793, -0.533, 0.0},
};
```
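The coefficients in these tables can be cross-checked by deriving them from the luma weights: (Kr, Kb) = (0.299, 0.114) for BT.601 and (0.2126, 0.0722) for BT.709. For video range, Y is also scaled by 255/219 and Cb/Cr by 255/224.

The sketch below is plain C, which also compiles as Objective-C. `Mat3` and `ycbcr_to_rgb` are hypothetical names. The matrix is stored column by column, like the simd tables.

```c
#include <assert.h>

/* Hypothetical 3x3 matrix stored column by column, like the simd tables:
   m[c][r] is row r (R, G, B) of column c (Y, Cb, Cr). */
typedef struct { float m[3][3]; } Mat3;

/* Builds a YCbCr -> RGB matrix from the luma weights kr and kb.
   video_range != 0 scales Y by 255/219 and Cb/Cr by 255/224
   (8-bit video range: Y in 16...235, Cb/Cr in 16...240). */
Mat3 ycbcr_to_rgb(float kr, float kb, int video_range) {
    float kg = 1.0f - kr - kb;
    assert(kg > 0.0f);
    float ys = video_range ? 255.0f / 219.0f : 1.0f;
    float cs = video_range ? 255.0f / 224.0f : 1.0f;
    Mat3 r;
    /* Column 0: Y contributes equally to R, G and B. */
    r.m[0][0] = ys;
    r.m[0][1] = ys;
    r.m[0][2] = ys;
    /* Column 1: Cb affects G and B only. */
    r.m[1][0] = 0.0f;
    r.m[1][1] = -2.0f * kb * (1.0f - kb) / kg * cs;
    r.m[1][2] = 2.0f * (1.0f - kb) * cs;
    /* Column 2: Cr affects R and G only. */
    r.m[2][0] = 2.0f * (1.0f - kr) * cs;
    r.m[2][1] = -2.0f * kr * (1.0f - kr) / kg * cs;
    r.m[2][2] = 0.0f;
    return r;
}
```

Results, to three decimals:

- `ycbcr_to_rgb(0.299f, 0.114f, 1)` reproduces the BT.601 video-range values (1.164, -0.392, 2.017, 1.596, -0.813).
- `ycbcr_to_rgb(0.2126f, 0.0722f, 1)` reproduces the BT.709 values.
- With `video_range = 0`, BT.601 gives 1.402 / -0.344 / -0.714 / 1.772. These differ slightly from the 1.4 / -0.343 / -0.711 / 1.765 full-range values taken from equasys.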

Following the explanation in the linked article, this project uses the BT.601 full-range matrix. The matrix and its offset are initialized and uploaded to the GPU as follows, and the fragment shader then applies them to convert YUV to RGB:

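```objc
- (void)matrixInit {
    // BT.601 full-range YCbCr -> RGB, column-major (each simd_float3 is one column).
    matrix_float3x3 kColorConversion601FullRangeMatrix = (matrix_float3x3){
        (simd_float3){1.0, 1.0, 1.0},
        (simd_float3){0.0, -0.343, 1.765},
        (simd_float3){1.4, -0.711, 0.0},
    };
    // Offset added to (Y, Cb, Cr) before the matrix is applied.
    // Full-range Y already spans 0...255, so it needs no offset; Cb/Cr are centered
    // at 128 (0.5 normalized). A Y offset of -(16.0/255.0) is only correct for
    // video-range (420v) data together with the 1.164 video-range matrix.
    vector_float3 kColorConversion601FullRangeOffset = (vector_float3){0.0, -0.5, -0.5};
    // YCConvertMatrix is the struct shared with the shader (its definition is not
    // shown here; its fields are `matrix` and `offset`, as used in the shader).
    YCConvertMatrix matrixBuffer = {kColorConversion601FullRangeMatrix, kColorConversion601FullRangeOffset};
    self.convertMatrix = [self.mtkView.device newBufferWithBytes:&matrixBuffer
                                                          length:sizeof(matrixBuffer)
                                                         options:MTLResourceStorageModeShared];
}
```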

```metal
fragment float4 fragmentShader(RasterizerData input [[stage_in]],
                               texture2d<float> textureY [[texture(YCFragmentTextureIndexY)]],
                               texture2d<float> textureUV [[texture(YCFragmentTextureIndexUV)]],
                               constant YCConvertMatrix *matrixBuffer [[buffer(YCFragmentBufferIndexMatrix)]]) {
    constexpr sampler textureSampler(mag_filter::linear, min_filter::linear);
    // Y comes from the R8 texture; Cb and Cr come from the .r and .g channels of the RG8 texture.
    float colorY = textureY.sample(textureSampler, input.textureCoordinate).r;
    float2 colorUV = textureUV.sample(textureSampler, input.textureCoordinate).rg;
    float3 yuv = float3(colorY, colorUV);
    // Apply the offset (centers chroma around 0), then the conversion matrix.
    float3 rgb = matrixBuffer->matrix * (yuv + matrixBuffer->offset);
    return float4(rgb, 1.0);
}
```
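The shader's math can be checked on the CPU with a plain C mirror of `rgb = matrix * (yuv + offset)`. It uses the column-major layout of `matrix_float3x3`.

The names `ColMat3`, `yuv_to_rgb`, `kBT601Full` and `kFullRangeOffset` are hypothetical. The offset assumes full-range input: no Y offset, and chroma centered at 0.5.

```c
#include <assert.h>

/* Column-major 3x3 matrix, matching the layout of simd's matrix_float3x3:
   cols[c][r] is row r of column c. */
typedef struct { float cols[3][3]; } ColMat3;

/* CPU mirror of the shader line: rgb = matrix * (yuv + offset).
   yuv holds normalized samples (Y from the R8 texture, Cb/Cr from RG8),
   so every component is expected to lie in [0, 1]. */
void yuv_to_rgb(const ColMat3 *m, const float offset[3],
                const float yuv[3], float rgb[3]) {
    float v[3];
    for (int i = 0; i < 3; i++) {
        assert(yuv[i] >= 0.0f && yuv[i] <= 1.0f);
        v[i] = yuv[i] + offset[i];
    }
    /* Matrix-vector product: row r is the sum over columns c of cols[c][r] * v[c]. */
    for (int r = 0; r < 3; r++) {
        rgb[r] = 0.0f;
        for (int c = 0; c < 3; c++) {
            rgb[r] += m->cols[c][r] * v[c];
        }
    }
}

/* Same coefficients as the BT.601 full-range table. */
const ColMat3 kBT601Full = {{
    {1.0f, 1.0f, 1.0f},
    {0.0f, -0.343f, 1.765f},
    {1.4f, -0.711f, 0.0f},
}};

/* Full-range data: Y is not offset, Cb/Cr are centered at 0.5. */
const float kFullRangeOffset[3] = {0.0f, -0.5f, -0.5f};
```

For full-range input, (Y, Cb, Cr) = (1.0, 0.5, 0.5) maps to white and (0.0, 0.5, 0.5) maps to black. A Y offset of -(16.0/255.0), which belongs to video-range data, would turn full-range white into about 0.937 on each channel.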

3, Getting the Y and UV textures

```objc
- (void)renderTextures:(id<MTLRenderCommandEncoder>)renderEncoder sampleBuffer:(CMSampleBufferRef)sampleBuffer {
    CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);

    // Plane 0: luma (Y), one 8-bit channel per pixel.
    id<MTLTexture> textureY = nil;
    {
        size_t width = CVPixelBufferGetWidthOfPlane(imageBuffer, 0);
        size_t height = CVPixelBufferGetHeightOfPlane(imageBuffer, 0);
        MTLPixelFormat pixelFormat = MTLPixelFormatR8Unorm;
        CVMetalTextureRef texture = NULL;
        CVReturn status = CVMetalTextureCacheCreateTextureFromImage(NULL, self.metalTextureCache, imageBuffer, NULL, pixelFormat, width, height, 0, &texture);
        if (status == kCVReturnSuccess) {
            textureY = CVMetalTextureGetTexture(texture);
            // Apple's header advises retaining the CVMetalTextureRef until the GPU is done
            // with the image; releasing it immediately may let the buffer be recycled early.
            CFRelease(texture);
        }
    }

    // Plane 1: interleaved chroma (Cb, Cr), two 8-bit channels per texel.
    id<MTLTexture> textureUV = nil;
    {
        size_t width = CVPixelBufferGetWidthOfPlane(imageBuffer, 1);
        size_t height = CVPixelBufferGetHeightOfPlane(imageBuffer, 1);
        MTLPixelFormat pixelFormat = MTLPixelFormatRG8Unorm;
        CVMetalTextureRef texture = NULL;
        CVReturn status = CVMetalTextureCacheCreateTextureFromImage(NULL, self.metalTextureCache, imageBuffer, NULL, pixelFormat, width, height, 1, &texture);
        if (status == kCVReturnSuccess) {
            textureUV = CVMetalTextureGetTexture(texture);
            CFRelease(texture); // same lifetime caveat as above
        }
    }

    if (textureUV && textureY) {
        [renderEncoder setFragmentTexture:textureY atIndex:YCFragmentTextureIndexY];
        [renderEncoder setFragmentTexture:textureUV atIndex:YCFragmentTextureIndexUV];
    }
    // This method takes ownership of sampleBuffer (e.g. from copyNextSampleBuffer).
    CFRelease(sampleBuffer);
}
```

There are three key points in the code above:

1. pixelFormat: Y is read as MTLPixelFormatR8Unorm and UV as MTLPixelFormatRG8Unorm, so the shader reads the chroma pair from .rg. This differs from OpenGL ES, where the UV plane is typically uploaded as GL_LUMINANCE_ALPHA and read from .ra.

2. The planeIndex argument of CVMetalTextureCacheCreateTextureFromImage is 0 for Y and 1 for UV. The official documentation of this function gives the following examples:

```objc
// Mapping a BGRA buffer:
CVMetalTextureCacheCreateTextureFromImage(kCFAllocatorDefault, textureCache, pixelBuffer, NULL, MTLPixelFormatBGRA8Unorm, width, height, 0, &outTexture);

// Mapping the luma plane of a 420v buffer:
CVMetalTextureCacheCreateTextureFromImage(kCFAllocatorDefault, textureCache, pixelBuffer, NULL, MTLPixelFormatR8Unorm, width, height, 0, &outTexture);

// Mapping the chroma plane of a 420v buffer as a source texture:
CVMetalTextureCacheCreateTextureFromImage(kCFAllocatorDefault, textureCache, pixelBuffer, NULL, MTLPixelFormatRG8Unorm, width/2, height/2, 1, &outTexture);

// Mapping a yuvs buffer as a source texture
// (note: yuvs/f and 2vuy are unpacked and resampled -- not colorspace converted):
CVMetalTextureCacheCreateTextureFromImage(kCFAllocatorDefault, textureCache, pixelBuffer, NULL, MTLPixelFormatGBGR422, width, height, 1, &outTexture);
```

The plane index therefore depends on the pixel format and on which plane is being mapped.

3. Note the width and height: in the official example above, the MTLPixelFormatRG8Unorm chroma texture uses width/2 and height/2.

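In my code, however, the values are passed through unchanged, without dividing by 2.

That is because they come from CVPixelBufferGetWidthOfPlane and CVPixelBufferGetHeightOfPlane, which already return the size of the requested plane. For 4:2:0 data the chroma plane is half the luma size in each dimension. CVPixelBufferGetWidth and CVPixelBufferGetHeight return the full frame size, so if those are used, the chroma dimensions must be divided by 2.

Querying the size per plane makes this explicit and is the clearer approach.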
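Here is a plain C sketch of how a 4:2:0 bi-planar frame is laid out in memory:

- Plane 0 holds one Y byte per pixel.
- Plane 1 holds interleaved Cb/Cr byte pairs at half resolution. Each pair is what the RG8Unorm texture exposes as .r and .g.

`BiPlanarFrame`, `chroma_plane_size` and `sample_ycbcr` are hypothetical. Rounding up for odd sizes is an assumption, so real code should keep using CVPixelBufferGetWidthOfPlane and CVPixelBufferGetHeightOfPlane.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical CPU view of a 4:2:0 bi-planar frame (NV12-style layout).
   On a real CVPixelBuffer these values would come from
   CVPixelBufferGetBaseAddressOfPlane, CVPixelBufferGetBytesPerRowOfPlane,
   CVPixelBufferGetWidthOfPlane and CVPixelBufferGetHeightOfPlane. */
typedef struct {
    const uint8_t *luma;   /* plane 0: one Y byte per pixel */
    size_t luma_stride;    /* bytes per row of plane 0 (may include padding) */
    const uint8_t *chroma; /* plane 1: interleaved Cb, Cr byte pairs */
    size_t chroma_stride;  /* bytes per row of plane 1 (may include padding) */
    size_t width, height;  /* size of plane 0, i.e. the full frame */
} BiPlanarFrame;

/* Chroma plane size for 4:2:0: half the luma size in each dimension,
   rounded up for odd sizes (assumed). */
void chroma_plane_size(size_t w, size_t h, size_t *cw, size_t *ch) {
    *cw = (w + 1) / 2;
    *ch = (h + 1) / 2;
}

/* Reads Y, Cb, Cr for pixel (x, y). Each chroma pair is shared by a
   2x2 block of luma pixels. */
void sample_ycbcr(const BiPlanarFrame *f, size_t x, size_t y, uint8_t out[3]) {
    assert(x < f->width && y < f->height);
    out[0] = f->luma[y * f->luma_stride + x];
    const uint8_t *pair = f->chroma + (y / 2) * f->chroma_stride + (x / 2) * 2;
    out[1] = pair[0]; /* Cb, exposed as .r by an RG8Unorm texture */
    out[2] = pair[1]; /* Cr, exposed as .g by an RG8Unorm texture */
}
```

For a 1920x1080 frame, `chroma_plane_size` gives 960x540, so the chroma plane is 960 texels wide but 1920 bytes per row before padding. On a real CVPixelBuffer, call CVPixelBufferLockBaseAddress with kCVPixelBufferLock_ReadOnly before reading the planes on the CPU, and CVPixelBufferUnlockBaseAddress afterwards.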

4, How Metal efficiently creates an MTLTexture from a CMSampleBufferRef

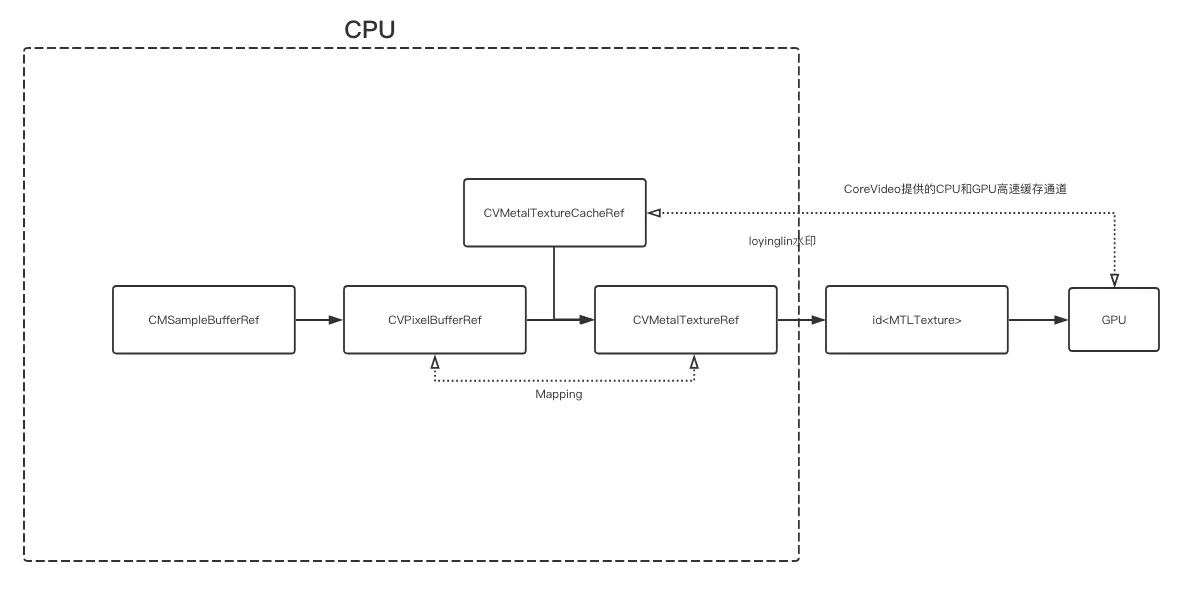

1. To understand this fast path, first look at the figure above.

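CoreVideo provides CVMetalTextureCacheRef, a cache that lets a Metal texture wrap the memory of a CVPixelBuffer, so frame data does not have to be copied between the CPU side and the GPU. The cache is created once with CVMetalTextureCacheCreate and reused for every frame.

As the figure shows, a CVPixelBufferRef is turned into a CVMetalTextureRef through the CVMetalTextureCacheRef. CVMetalTextureGetTexture then returns the MTLTexture used for rendering.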

The relevant code is:

```objc
CVReturn status = CVMetalTextureCacheCreateTextureFromImage(NULL, self.metalTextureCache, imageBuffer, NULL, pixelFormat, width, height, 0, &texture);
if (status == kCVReturnSuccess) {
    textureY = CVMetalTextureGetTexture(texture);
    CFRelease(texture);
}
```

Conclusion: once you understand how YUV works, how YUV maps to RGB, and how Metal uses CoreVideo to create textures without copying frame data, Metal video rendering becomes clear.

References:

https://www.jianshu.com/p/7114536d705a

https://www.jianshu.com/p/5b437c1df48e