Service dependencies

- jdk 1.8

- hadoop 2.5.1

- hbase 1.2.6

- pinpoint 2.3.3

- Windows 7 system

hadoop installation

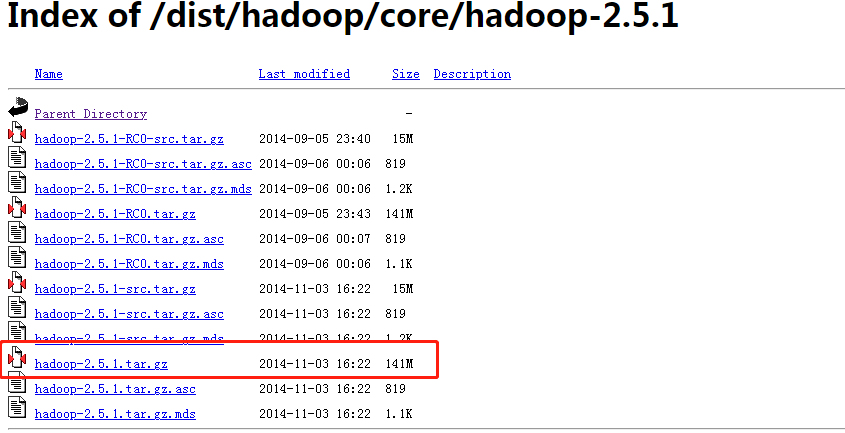

- Download the Hadoop package

Download the version 2.5.1 installation package from http://archive.apache.org/dist/hadoop/core/hadoop-2.5.1/.

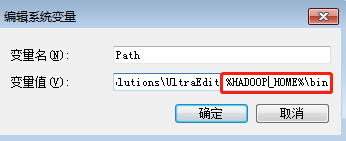

- Unzip the Hadoop package and add environment variables

Unzip the downloaded Hadoop package to a directory (D:\hadoop-2.5.1 in this example) and set the environment variable:

HADOOP_HOME=D:\hadoop-2.5.1

Add %HADOOP_HOME%\bin to the system Path variable.
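The two settings above can be checked with a small script before going further. This is a minimal sketch, not part of the original setup: the function name `check_hadoop_env` is made up for illustration, and it only compares strings, so it does not verify that the directory actually exists.

```python
import ntpath  # Windows path rules, regardless of where the script runs
import os


def check_hadoop_env(env):
    """Return a list of problems found in an environment mapping.

    `env` is any dict-like object with HADOOP_HOME and PATH keys,
    for example os.environ. (Hypothetical helper for illustration.)
    """
    home = env.get("HADOOP_HOME", "").strip()
    if not home:
        return ["HADOOP_HOME is not set"]

    # Normalise case and separators so D:\Hadoop\bin\ matches d:/hadoop/bin.
    expected = ntpath.normcase(ntpath.join(home, "bin")).rstrip("\\")
    entries = {
        ntpath.normcase(entry.strip()).rstrip("\\")
        for entry in env.get("PATH", "").split(";")
        if entry.strip()
    }

    problems = []
    if expected not in entries:
        problems.append("%HADOOP_HOME%\\bin is not on PATH")
    return problems


if __name__ == "__main__":
    for problem in check_hadoop_env(os.environ):
        print(problem)
```

An empty result means both variables look consistent. Open a new command prompt before running it, because already-open prompts keep the old environment.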

- Download the Windows utilities (winutils) for Hadoop

Download address: https://codeload.github.com/gvreddy1210/bin/zip/master. Make sure the version of the tool is compatible with your Hadoop version. After downloading, unzip it and copy its contents over the bin directory of the Hadoop installation, for example D:\hadoop-2.5.1\bin.

- Create the DataNode and NameNode directories

Create a datanode directory and a namenode directory to store HDFS data, such as D:\hadoop-2.5.1\data\datanode and D:\hadoop-2.5.1\data\namenode.
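The same directories can also be created from a script. The following is a minimal sketch; the helper name `create_hdfs_dirs` is hypothetical, and the layout (a data folder containing namenode and datanode) simply mirrors the example paths in this step.

```python
from pathlib import Path


def create_hdfs_dirs(hadoop_home):
    """Create <hadoop_home>/data/namenode and <hadoop_home>/data/datanode.

    Existing directories are left untouched, so the call is safe to repeat.
    (Hypothetical helper for illustration.)
    """
    base = Path(hadoop_home) / "data"
    dirs = [base / "namenode", base / "datanode"]
    for directory in dirs:
        directory.mkdir(parents=True, exist_ok=True)
    return dirs


# Example call on the machine used in this guide (adjust to your own path):
# create_hdfs_dirs(r"D:\hadoop-2.5.1")
```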

- Modify the Hadoop configuration files

Four configuration files need to be modified: core-site.xml, hdfs-site.xml, mapred-site.xml, and yarn-site.xml. All four are located in D:\hadoop-2.5.1\etc\hadoop.

- The complete content of core-site.xml is as follows:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

- The complete content of hdfs-site.xml is as follows:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/D:/hadoop-2.5.1/data/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/D:/hadoop-2.5.1/data/datanode</value>

</property>

</configuration>

- The complete content of mapred-site.xml is as follows:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

- The complete content of yarn-site.xml is as follows:

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>4096</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>2</value>

</property>

</configuration>

Note: change the paths mentioned above to match your own installation.
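Because these files are edited by hand, it can help to parse them before starting Hadoop. The sketch below uses only the Python standard library to read the name/value pairs from a Hadoop-style site file; `read_site_properties` is a hypothetical helper, and the check for a nested `<configuration>` element is an added safeguard against a copy-paste slip, not something Hadoop itself reports in this form.

```python
import xml.etree.ElementTree as ET


def read_site_properties(xml_text):
    """Parse a Hadoop *-site.xml document and return {name: value}.

    Accepts the file content as str or bytes.
    Raises ValueError when the structure is not what Hadoop expects.
    (Hypothetical helper for illustration.)
    """
    root = ET.fromstring(xml_text)
    if root.tag != "configuration":
        raise ValueError("root element must be <configuration>")
    if root.find("configuration") is not None:
        raise ValueError("nested <configuration> element found")

    properties = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name is None:
            raise ValueError("<property> without <name>")
        properties[name.strip()] = (prop.findtext("value") or "").strip()
    return properties


# Example (adjust the path to your own installation):
# from pathlib import Path
# text = Path(r"D:\hadoop-2.5.1\etc\hadoop\core-site.xml").read_bytes()
# print(read_site_properties(text))
```

For the core-site.xml shown above, the returned dictionary would contain the single key fs.defaultFS.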

- Initialize the NameNode

Open a command prompt in the hadoop\bin directory and run: hadoop namenode -format

- Start Hadoop

After initialization completes, you can start Hadoop. Go to the hadoop\sbin directory and run start-all.cmd (the corresponding shutdown command is stop-all.cmd).

After startup, open http://localhost:50070 to check that Hadoop started successfully.
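If no browser is at hand, a quick TCP check shows whether anything is listening on the web UI port. This is a minimal sketch with a hypothetical helper name, `port_open`. It only confirms that the port accepts connections, not that HDFS is healthy.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolved host names.
        return False


# Example: the NameNode web UI port used in this guide.
# print(port_open("localhost", 50070))
```

The same check can be pointed at any other port mentioned in this guide, such as 9000 for fs.defaultFS.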

hbase installation

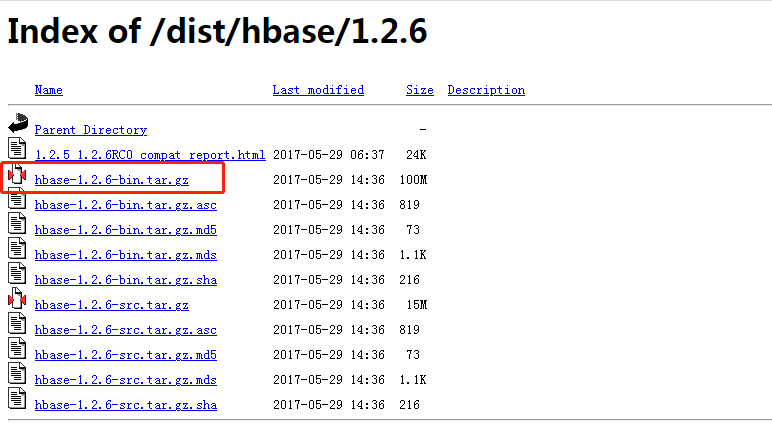

- Download the HBase package

Download address: the Apache archive index page for /dist/hbase/1.2.6

- Extract and modify the configuration

Modify the conf/hbase-env.cmd and conf/hbase-site.xml configuration files.

- In hbase-env.cmd, set JAVA_HOME=D:\java\jdk1.8.0_162 (use your own JDK path). The complete contents are as follows:

@rem/**

@rem * Licensed to the Apache Software Foundation (ASF) under one

@rem * or more contributor license agreements. See the NOTICE file

@rem * distributed with this work for additional information

@rem * regarding copyright ownership. The ASF licenses this file

@rem * to you under the Apache License, Version 2.0 (the

@rem * "License"); you may not use this file except in compliance

@rem * with the License. You may obtain a copy of the License at

@rem *

@rem * http://www.apache.org/licenses/LICENSE-2.0

@rem *

@rem * Unless required by applicable law or agreed to in writing, software

@rem * distributed under the License is distributed on an "AS IS" BASIS,

@rem * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

@rem * See the License for the specific language governing permissions and

@rem * limitations under the License.

@rem */

@rem Set environment variables here.

@rem The java implementation to use. Java 1.7+ required.

set JAVA_HOME=D:\java\jdk1.8.0_162

@rem Extra Java CLASSPATH elements. Optional.

@rem set HBASE_CLASSPATH=

@rem The maximum amount of heap to use. Default is left to JVM default.

@rem set HBASE_HEAPSIZE=1000

@rem Uncomment below if you intend to use off heap cache. For example, to allocate 8G of

@rem offheap, set the value to "8G".

@rem set HBASE_OFFHEAPSIZE=1000

@rem For example, to allocate 8G of offheap, to 8G:

@rem etHBASE_OFFHEAPSIZE=8G

@rem Extra Java runtime options.

@rem Below are what we set by default. May only work with SUN JVM.

@rem For more on why as well as other possible settings,

@rem see http://wiki.apache.org/hadoop/PerformanceTuning

@rem JDK6 on Windows has a known bug for IPv6, use preferIPv4Stack unless JDK7.

@rem @rem See TestIPv6NIOServerSocketChannel.

set HBASE_OPTS="-XX:+UseConcMarkSweepGC" "-Djava.net.preferIPv4Stack=true"

@rem Configure PermSize. Only needed in JDK7. You can safely remove it for JDK8+

set HBASE_MASTER_OPTS=%HBASE_MASTER_OPTS% "-XX:PermSize=128m" "-XX:MaxPermSize=128m"

set HBASE_REGIONSERVER_OPTS=%HBASE_REGIONSERVER_OPTS% "-XX:PermSize=128m" "-XX:MaxPermSize=128m"

@rem Uncomment below to enable java garbage collection logging for the server-side processes

@rem this enables basic gc logging for the server processes to the .out file

@rem set SERVER_GC_OPTS="-verbose:gc" "-XX:+PrintGCDetails" "-XX:+PrintGCDateStamps" %HBASE_GC_OPTS%

@rem this enables gc logging using automatic GC log rolling. Only applies to jdk 1.6.0_34+ and 1.7.0_2+. Either use this set of options or the one above

@rem set SERVER_GC_OPTS="-verbose:gc" "-XX:+PrintGCDetails" "-XX:+PrintGCDateStamps" "-XX:+UseGCLogFileRotation" "-XX:NumberOfGCLogFiles=1" "-XX:GCLogFileSize=512M" %HBASE_GC_OPTS%

@rem Uncomment below to enable java garbage collection logging for the client processes in the .out file.

@rem set CLIENT_GC_OPTS="-verbose:gc" "-XX:+PrintGCDetails" "-XX:+PrintGCDateStamps" %HBASE_GC_OPTS%

@rem Uncomment below (along with above GC logging) to put GC information in its own logfile (will set HBASE_GC_OPTS)

@rem set HBASE_USE_GC_LOGFILE=true

@rem Uncomment and adjust to enable JMX exporting

@rem See jmxremote.password and jmxremote.access in $JRE_HOME/lib/management to configure remote password access.

@rem More details at: http://java.sun.com/javase/6/docs/technotes/guides/management/agent.html

@rem

@rem set HBASE_JMX_BASE="-Dcom.sun.management.jmxremote.ssl=false" "-Dcom.sun.management.jmxremote.authenticate=false"

@rem set HBASE_MASTER_OPTS=%HBASE_JMX_BASE% "-Dcom.sun.management.jmxremote.port=10101"

@rem set HBASE_REGIONSERVER_OPTS=%HBASE_JMX_BASE% "-Dcom.sun.management.jmxremote.port=10102"

@rem set HBASE_THRIFT_OPTS=%HBASE_JMX_BASE% "-Dcom.sun.management.jmxremote.port=10103"

@rem set HBASE_ZOOKEEPER_OPTS=%HBASE_JMX_BASE% -Dcom.sun.management.jmxremote.port=10104"

@rem File naming hosts on which HRegionServers will run. $HBASE_HOME/conf/regionservers by default.

@rem set HBASE_REGIONSERVERS=%HBASE_HOME%\conf\regionservers

@rem Where log files are stored. $HBASE_HOME/logs by default.

@rem set HBASE_LOG_DIR=%HBASE_HOME%\logs

@rem A string representing this instance of hbase. $USER by default.

@rem set HBASE_IDENT_STRING=%USERNAME%

@rem Seconds to sleep between slave commands. Unset by default. This

@rem can be useful in large clusters, where, e.g., slave rsyncs can

@rem otherwise arrive faster than the master can service them.

@rem set HBASE_SLAVE_SLEEP=0.1

@rem Tell HBase whether it should manage it's own instance of Zookeeper or not.

@rem set HBASE_MANAGES_ZK=true

- The complete content of hbase-site.xml is as follows:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

/**

*

* Licensed to the Apache Software Foundation (ASF) under one

* or more contributor license agreements. See the NOTICE file

* distributed with this work for additional information

* regarding copyright ownership. The ASF licenses this file

* to you under the Apache License, Version 2.0 (the

* "License"); you may not use this file except in compliance

* with the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

-->

<configuration>

<property>

<name>hbase.master</name>

<value>localhost</value>

</property>

<!-- Specifies the HBase file storage path.

1. Use a local path:

file:///F:/hbase/hbase-1.2.6/data/root

2. Use HDFS:

hdfs://localhost:9000/hbase

When using an HDFS cluster, copy Hadoop's hdfs-site.xml and core-site.xml into hbase/conf.

If HDFS is a cluster, the value can be set to hdfs://[cluster name]/hbase, for example:

hdfs://ns1/hbase

-->

<property>

<name>hbase.rootdir</name>

<value>hdfs://localhost:9000/hbase</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>localhost</value>

</property>

<!-- Specifies the port of the HBase master web UI (node information page) -->

<property>

<name>hbase.master.info.port</name>

<value>60000</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>false</value>

</property>

</configuration>

- Start HBase

Go to the hbase\bin directory and run: start-hbase.cmd

After startup, open http://localhost:60000/master-status to browse the master node information.

pinpoint installation

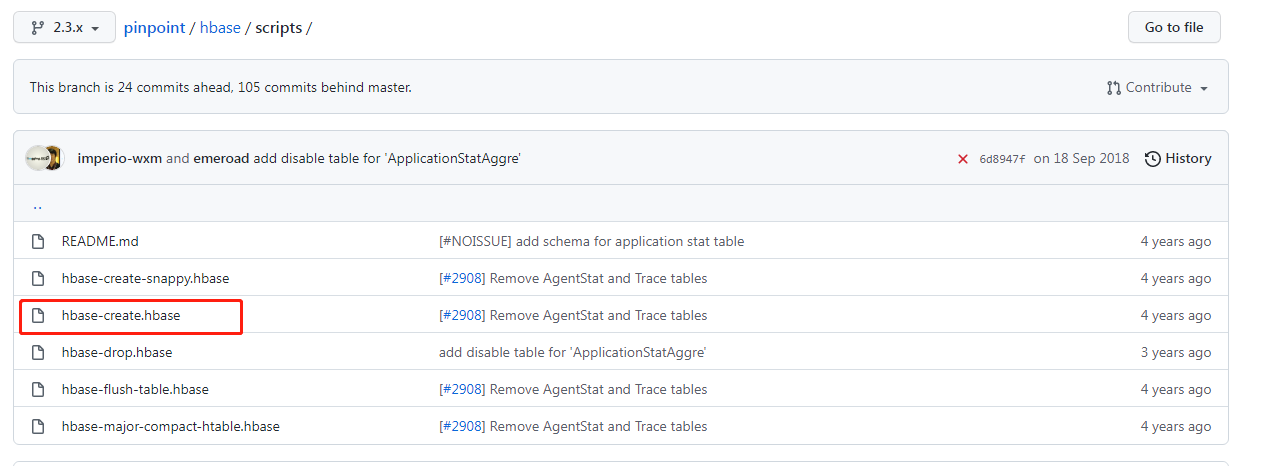

- Run the initialization script

Download the HBase initialization scripts from https://github.com/pinpoint-apm/pinpoint/tree/2.3.x/hbase/scripts

Switch to the bin directory of the HBase installation above and run the following command (in this example, hbase-create.hbase was saved to d:/):

hbase shell d:/hbase-create.hbase

- Download and start services

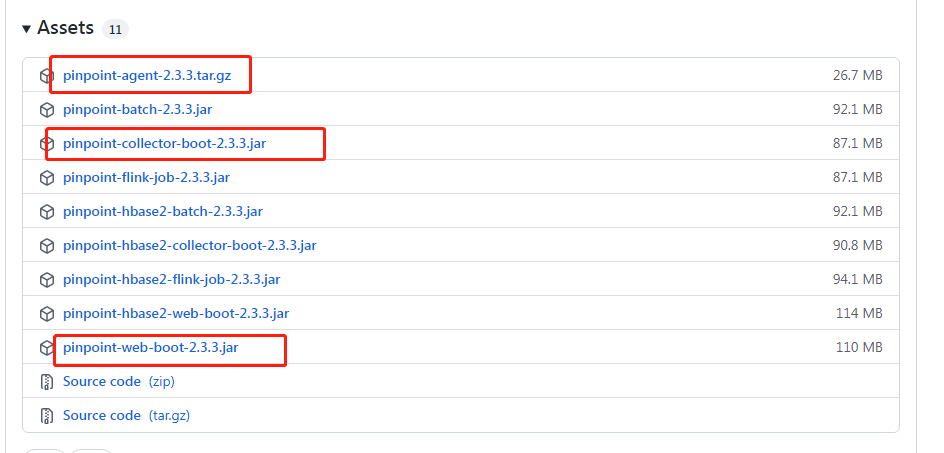

Download pinpoint-collector, pinpoint-web, and pinpoint-agent from https://github.com/pinpoint-apm/pinpoint/releases.

- Start the pinpoint collector

java -jar -Dpinpoint.zookeeper.address=127.0.0.1 pinpoint-collector-boot-2.3.3.jar

- Start pinpoint Web

java -jar -Dpinpoint.zookeeper.address=127.0.0.1 pinpoint-web-boot-2.3.3.jar

After startup, open http://127.0.0.1:8080/ to see the Pinpoint web interface.

- Start the agent

Unzip the pinpoint-agent package and add the agent startup parameters to the startup command of the service to be monitored, for example:

java -jar -javaagent:pinpoint-agent-2.3.3/pinpoint-bootstrap.jar -Dpinpoint.agentId=test-agent -Dpinpoint.applicationName=TESTAPP pinpoint-quickstart-testapp-2.3.3.jar
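When the monitored service is launched from a script, the agent parameters can be assembled programmatically. The following is a minimal sketch; `build_agent_command` is a hypothetical helper, and the argument values in the example are the ones used in the command above. JVM options such as -javaagent and -D must come before the application jar, because everything after the jar name is passed to the application itself.

```python
def build_agent_command(bootstrap_jar, agent_id, application_name, app_jar):
    """Build a java command line that attaches the Pinpoint agent.

    Returns a list suitable for subprocess.run().
    (Hypothetical helper for illustration.)
    """
    return [
        "java",
        f"-javaagent:{bootstrap_jar}",
        f"-Dpinpoint.agentId={agent_id}",
        f"-Dpinpoint.applicationName={application_name}",
        "-jar",
        app_jar,  # anything appended after this goes to the application
    ]


command = build_agent_command(
    "pinpoint-agent-2.3.3/pinpoint-bootstrap.jar",
    "test-agent",
    "TESTAPP",
    "pinpoint-quickstart-testapp-2.3.3.jar",
)
print(" ".join(command))

# To actually start the service:
# import subprocess
# subprocess.run(command, check=True)
```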

Printing the Pinpoint txid in the application log

- Add PtxId and PspanId output to the application's logback configuration file

Before modification

<property name="log_pattern" value="[%-5p][%d{yyyy-MM-dd HH:mm:ss}] [%t] [%X{requestId}] %c - %m%n"/>

After modification

<property name="log_pattern" value="[%-5p][%d{yyyy-MM-dd HH:mm:ss}] [%t] [%X{requestId}] [TxId : %X{PtxId:-0} , SpanId : %X{PspanId:-0}] %c - %m%n"/>

- Modify the agent configuration

Modify the pinpoint.config configuration file under D:\pinpoint-agent-2.3.3\profiles\release. The modified contents are as follows:

profiler.sampling.rate=1

# If logback is used, set this property to true. For another logging framework, set the corresponding profiler.xxx.logging.transactioninfo property instead.

profiler.logback.logging.transactioninfo=true

After the modification, restart the service. The log output then includes the corresponding txid.
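Once the ids appear in the log, they can be pulled out of a line and used to look up the matching transaction in Pinpoint. The sketch below assumes the modified log pattern shown earlier. The sample log line, its id values, and the helper name `extract_trace_ids` are invented for illustration. A line where both ids are 0, the pattern's default, is treated as untraced.

```python
import re

# Matches the "[TxId : ... , SpanId : ...]" segment of the modified pattern.
TRACE_RE = re.compile(r"\[TxId : (?P<txid>.*?) , SpanId : (?P<spanid>-?\d+)\]")


def extract_trace_ids(line):
    """Return (txid, spanid) from a log line, or None if the line is untraced."""
    match = TRACE_RE.search(line)
    if match is None:
        return None
    txid, spanid = match.group("txid"), match.group("spanid")
    if txid == "0" and spanid == "0":
        # %X{PtxId:-0} and %X{PspanId:-0} fall back to 0 when no trace is active.
        return None
    return txid, spanid


# Hypothetical log line written with the modified pattern.
sample = (
    "[INFO ][2022-01-01 12:00:00] [http-nio-8080-exec-1] [] "
    "[TxId : test-agent^1640995200000^1 , SpanId : 1234567890] "
    "com.example.Demo - hello"
)
print(extract_trace_ids(sample))
```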