epoll-related system calls

```c
#include <sys/epoll.h>

int epoll_create(int size);
```

- epoll_create creates an epoll model and returns a file descriptor pointing to the epoll model.

- The size parameter is obsolete (ignored since Linux 2.6.8) and kept only for backward compatibility. It must still be greater than 0, but any positive value works.

- Returns the new file descriptor on success, or -1 on failure (with errno set).
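A minimal sketch of creating the epoll model, assuming Linux with glibc. The helper names CreateEpoll and CreateEpollLegacy are made up for this example. epoll_create1 (Linux 2.6.27 and later) drops the size argument and can set close-on-exec atomically; the legacy epoll_create still rejects a size of 0 with EINVAL.

```cpp
#include <sys/epoll.h>   // epoll_create, epoll_create1, EPOLL_CLOEXEC
#include <unistd.h>      // close
#include <fcntl.h>       // fcntl, F_GETFD, FD_CLOEXEC
#include <cerrno>
#include <cstdio>        // perror
#include <cassert>

// Hypothetical helper: create an epoll model the modern way.
// epoll_create1 has no size argument; EPOLL_CLOEXEC marks the fd close-on-exec.
int CreateEpoll() {
    int epfd = epoll_create1(EPOLL_CLOEXEC);
    if (epfd < 0) {
        perror("epoll_create1");   // errno explains why (EMFILE, ENOMEM, ...)
        return -1;
    }
    return epfd;
}

// Hypothetical helper: the legacy call. size is ignored but must be > 0;
// epoll_create(0) fails with EINVAL.
int CreateEpollLegacy() {
    return epoll_create(1);
}
```

Either descriptor is used the same way afterwards and must be released with close() when the server shuts down.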

```c
int epoll_ctl(int epfd, int op, int fd, struct epoll_event *event);

typedef union epoll_data {
    void     *ptr;
    int       fd;
    uint32_t  u32;
    uint64_t  u64;
} epoll_data_t;

struct epoll_event {
    uint32_t     events; /* Epoll events */
    epoll_data_t data;   /* User data variable */
};
```

- epoll_ctl adds, modifies, or deletes the events monitored for a file descriptor in the epoll model. In other words, epoll_ctl is how the user tells the kernel what to watch.

- The first parameter, epfd, is the file descriptor of the epoll model, i.e., which epoll model you want to modify.

- The second parameter, op, is the operation to perform: EPOLL_CTL_ADD (add), EPOLL_CTL_MOD (modify), or EPOLL_CTL_DEL (delete).

- The third parameter, fd, is the file descriptor you want to monitor.

- The fourth parameter is a pointer to a struct epoll_event that says which events to monitor on that file descriptor.

- The most commonly used events are EPOLLIN (readable) and EPOLLOUT (writable). Others include EPOLLPRI, EPOLLRDHUP, EPOLLET (edge-triggered mode), and EPOLLONESHOT; EPOLLERR and EPOLLHUP are always reported without being requested.

struct epoll_event:

- The first member, events, is a bit mask of events, used the same way as in poll: add events with bitwise OR, and test whether an event occurred with bitwise AND.

- The second member, data, is an epoll_data union. With select and poll, the kernel only tells us which fd is ready; any per-connection state, such as how much of an upper-layer protocol message has been read so far, must be tracked in a separate table. epoll lets us attach that state to the registration itself: the kernel hands data back unchanged with every event.

- We can store the file descriptor itself in data.fd, or store a pointer in data.ptr to a per-connection object (buffer, parse state) to implement more complex protocols. Because data is a union, only one member can be used at a time; if you use ptr, keep the fd inside the object it points to.

- epoll_ctl returns 0 on success and -1 on failure (with errno set).
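The sketch below registers, modifies, and removes an fd while attaching a per-connection object through data.ptr. Conn, Watch, Rewatch, and Unwatch are hypothetical names for this illustration, not part of the epoll API; a pipe stands in for a socket in the test.

```cpp
#include <sys/epoll.h>
#include <unistd.h>
#include <cerrno>
#include <cstddef>
#include <cstdint>
#include <cassert>

// Hypothetical per-connection context, similar in spirit to a per-fd buffer.
struct Conn {
    int fd;                  // kept here because data.ptr occupies the union
    size_t bytes_read = 0;   // e.g. how much of the current message has arrived
};

// EPOLL_CTL_ADD: insert fd with the given event mask and attach c via data.ptr.
int Watch(int epfd, Conn* c, uint32_t events) {
    struct epoll_event ev{};   // zero-initialize, including the data union
    ev.events = events;        // events are combined with bitwise OR
    ev.data.ptr = c;           // returned unchanged by epoll_wait
    return epoll_ctl(epfd, EPOLL_CTL_ADD, c->fd, &ev);
}

// EPOLL_CTL_MOD: replace the event mask. MOD also replaces data, so set ptr again.
int Rewatch(int epfd, Conn* c, uint32_t events) {
    struct epoll_event ev{};
    ev.events = events;
    ev.data.ptr = c;
    return epoll_ctl(epfd, EPOLL_CTL_MOD, c->fd, &ev);
}

// EPOLL_CTL_DEL: the event argument may be NULL since Linux 2.6.9.
int Unwatch(int epfd, int fd) {
    return epoll_ctl(epfd, EPOLL_CTL_DEL, fd, nullptr);
}
```

Setting data.fd as well would overwrite the pointer, which is why the fd lives inside Conn.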

```c
int epoll_wait(int epfd, struct epoll_event *events,
               int maxevents, int timeout);
```

- epoll_wait is how the kernel tells the user which events are ready.

- The first parameter is the epoll model you want to wait on.

- The second parameter, events, is an output array of epoll_event: epoll_wait copies the ready events into it. (events cannot be a null pointer. The kernel only copies data into the array; it does not allocate user-space memory for us.)

- maxevents is the capacity of the events array, i.e., the maximum number of events one call may return; it must be greater than 0. (This is like the size argument of read, which gives the size of your buffer.)

- The last parameter, timeout, works like poll's timeout and is in milliseconds: -1 blocks indefinitely, 0 returns immediately, and a positive value waits at most that long.

- Return value: as with poll, the number of ready events (0 on timeout, -1 on error with errno set). However, epoll fills the array starting at index 0 with no gaps! So we only need to traverse the first ret elements of the array to find all ready events.
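A sketch of the usual wait-and-traverse step, assuming each fd was registered with data.fd set. WaitReady and the buffer size of 64 are illustrative choices, not anything epoll requires. The loop retries when a signal interrupts the wait (which restarts the full timeout, acceptable for a sketch) and only looks at the first n entries.

```cpp
#include <sys/epoll.h>
#include <unistd.h>
#include <cerrno>
#include <cassert>
#include <vector>

// Hypothetical helper: wait once and return the fds that are ready.
std::vector<int> WaitReady(int epfd, int timeout_ms) {
    const int kMaxEvents = 64;           // capacity of our output buffer
    struct epoll_event ev[kMaxEvents];   // user-space memory owned by the caller
    std::vector<int> ready;
    int n;
    do {
        n = epoll_wait(epfd, ev, kMaxEvents, timeout_ms);
    } while (n < 0 && errno == EINTR);   // interrupted by a signal: retry
    // n == 0 means timeout, n < 0 an error; either way the loop body never runs.
    for (int i = 0; i < n; ++i) {        // ready events are packed at ev[0..n-1]
        ready.push_back(ev[i].data.fd);
    }
    return ready;
}
```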

How epoll works internally

epoll model:

-

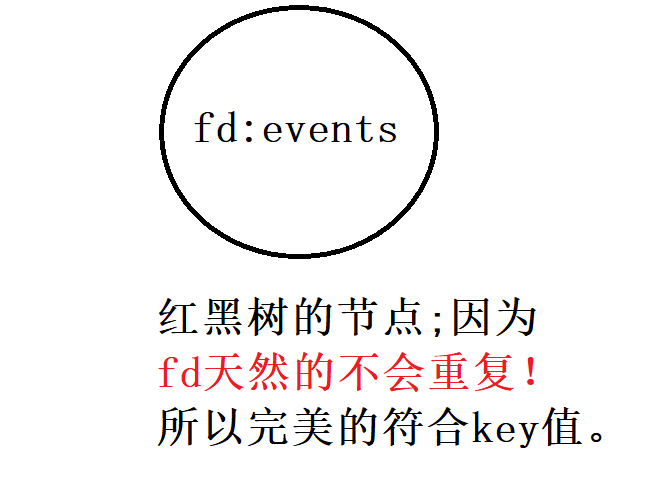

We're in epoll_ When creating, the bottom layer will create an epoll model for us. And this model is an empty red black tree! When we insert, modify and delete file descriptors, the bottom layer will help us create a node. The key of this node is the file descriptor and the value is events.

-

When we add an event, it is equivalent to adding a node to the red black tree; Modifying is equivalent to finding the node first, and then modifying its value through the key value; Deleting fd is equivalent to finding the corresponding node and deleting it.

-

The search efficiency of red black tree is very high!
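The key-based behavior of the tree is visible in epoll_ctl's documented error codes: adding a key that is already present fails with EEXIST, and modifying or deleting a key that is absent fails with ENOENT. CtlErrno below is a hypothetical helper used only to observe this, and pipes stand in for sockets.

```cpp
#include <sys/epoll.h>
#include <unistd.h>
#include <cerrno>
#include <cstdint>
#include <cassert>

// Hypothetical helper: run one epoll_ctl operation and return 0 on success,
// or the errno value it failed with.
int CtlErrno(int epfd, int op, int fd, uint32_t events) {
    struct epoll_event ev{};
    ev.events = events;
    ev.data.fd = fd;
    return epoll_ctl(epfd, op, fd, &ev) == 0 ? 0 : errno;
}
```

In the test, MOD before ADD reports ENOENT, a second ADD reports EEXIST, and a second DEL reports ENOENT, matching "find the node by key first".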

epoll callback mechanism:

- select and poll rely on polling at the bottom layer: on every call the operating system actively walks through every file descriptor passed in and checks which events are ready. The cost of each call grows linearly with the number of file descriptors, which is why they cannot handle a large number of file descriptors well.

- epoll uses a callback mechanism instead. When epoll_create is called, the operating system creates not only the red-black tree but also the structures this callback mechanism needs (including the ready queue described below).

- Then, when we call epoll_ctl to add an fd, the system not only creates a node and inserts it into the red-black tree, but also registers a callback with the underlying driver or protocol stack (on the file's wait queue). When the fd becomes ready, the callback notifies epoll that the event is ready.

- The operating system thus learns about readiness passively instead of scanning. The cost of a wait depends on how many fds are ready, not on how many are monitored, so efficiency does not drop as the number of file descriptors grows.

An analogy:

There are two ways for a teacher to check homework.

- Method 1: the teacher checks actively, asking the students to leave their homework on their desks and going to each student's seat in turn to check it. This is extremely inefficient. (select && poll)

- Method 2: the teacher announces: "Whoever has finished, bring your homework to me; once it has been checked, you may leave." The teacher now receives homework passively, and only the students who have finished come up. (epoll)

Ready queue for epoll:

- Another improvement over poll is the ready queue.

- poll only returns how many fds are ready; to find out which ones, we still have to scan the whole pollfd array and check each revents field. epoll adds a ready queue to avoid this.

- When an event becomes ready, the callback adds the corresponding node to the ready queue, so epoll_wait can simply copy the entries of the ready queue back to the user.

- The ready queue is created by epoll_create together with the tree. The return value of epoll_wait is the number of entries copied from the ready queue (at most maxevents). To know whether any event is ready, the kernel only needs to check whether the ready queue is empty; no polling over all fds is needed, which saves a lot of time.
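To see the ready queue at work, this sketch (assuming Linux; CountReady is a hypothetical name) registers many pipes, makes only a few of them readable, and checks that one epoll_wait reports exactly those few, with no per-fd scanning on the user side.

```cpp
#include <sys/epoll.h>
#include <unistd.h>
#include <cstdint>
#include <cassert>
#include <vector>

// Hypothetical helper: register `count` pipes, write one byte into the pipes
// whose indices (each < count) appear in `writers`, and return what a single
// non-blocking epoll_wait reports. Returns -1 on setup failure.
int CountReady(int count, const std::vector<int>& writers) {
    if (count <= 0) return -1;
    int epfd = epoll_create1(0);
    if (epfd < 0) return -1;

    std::vector<int> rd, wr;   // read and write ends of each pipe
    bool ok = true;
    for (int i = 0; i < count; ++i) {
        int p[2];
        if (pipe(p) != 0) { ok = false; break; }
        rd.push_back(p[0]);
        wr.push_back(p[1]);
        struct epoll_event ev{};
        ev.events = EPOLLIN;
        ev.data.u32 = static_cast<uint32_t>(i);   // remember which pipe this is
        if (epoll_ctl(epfd, EPOLL_CTL_ADD, p[0], &ev) != 0) { ok = false; break; }
    }

    int result = -1;
    if (ok) {
        for (int i : writers) {
            ssize_t w = write(wr[i], "x", 1);     // data arrival fires the callback
            (void)w;
        }
        std::vector<struct epoll_event> out(count);
        result = epoll_wait(epfd, out.data(), count, 0);   // timeout 0: don't block
    }
    for (int fd : rd) close(fd);
    for (int fd : wr) close(fd);
    close(epfd);
    return result;
}
```

With 100 registered pipes and 2 written, the call reports 2 events, not 100 entries to scan.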

Advantages of epoll

- Easy-to-use interface: although it is split into three functions, it is more convenient and efficient to use. You do not need to reset the set of file descriptors of interest on every loop iteration, and input and output parameters are kept separate.

- Lightweight data copying: a file descriptor's event structure is copied into the kernel only when epoll_ctl is called with EPOLL_CTL_ADD (or EPOLL_CTL_MOD), which is infrequent, whereas select/poll copy the whole set into the kernel on every call.

- Event callback mechanism: instead of traversing all fds, callback functions add ready file descriptors to the ready queue.

- When epoll_wait returns, the ready file descriptors are read directly from the ready queue. Checking whether anything is ready is O(1), and collecting the results costs time proportional to the number of ready fds, so a large number of monitored but idle file descriptors does not hurt efficiency.

- No fixed limit on the number of file descriptors: unlike select, which is capped by FD_SETSIZE (typically 1024), epoll is bounded only by system resources such as the per-process open-file limit and /proc/sys/fs/epoll/max_user_watches. Performance does not degrade as the number of idle file descriptors grows.
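A small sketch for checking the practical limits mentioned above on a Linux machine. The helper names are hypothetical. EpollMaxUserWatches reads the per-user cap on epoll registrations and returns -1 if that file cannot be read.

```cpp
#include <sys/resource.h>  // getrlimit, RLIMIT_NOFILE
#include <sys/select.h>    // FD_SETSIZE, the fixed cap select() works with
#include <fstream>
#include <cassert>

// Soft limit on open file descriptors for this process (what `ulimit -n` shows);
// this bounds how many fds one process can register with epoll.
long OpenFileLimit() {
    struct rlimit rl;
    if (getrlimit(RLIMIT_NOFILE, &rl) != 0) return -1;
    return static_cast<long>(rl.rlim_cur);
}

// Per-user limit on the total number of fds registered across all epoll
// instances, or -1 if the file is unavailable.
long EpollMaxUserWatches() {
    std::ifstream in("/proc/sys/fs/epoll/max_user_watches");
    long value = -1;
    if (!(in >> value)) return -1;
    return value;
}

// select() cannot watch fds numbered FD_SETSIZE or above, whatever the rlimit.
long SelectFdCap() { return FD_SETSIZE; }
```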

epoll code

```cpp
/* sock.hpp */
#pragma once

#include <iostream>
#include <string>
#include <cstdlib>       // exit
#include <cstring>       // memset, memmove
#include <unistd.h>
#include <sys/types.h>
#include <sys/socket.h>
#include <netinet/in.h>
#include <arpa/inet.h>   // htons, htonl
#include <sys/epoll.h>

#define BACKLOG 5        // length of the pending-connection queue passed to listen()

using namespace std;

class Sock { // This class wraps the socket API
public:
    static int Socket() {
        int sockfd = socket(AF_INET, SOCK_STREAM, 0);
        if (sockfd < 0) {
            cerr << "socket error" << endl;
            exit(1);
        }
        return sockfd;
    }
    static void Bind(int sockfd, int port) {
        struct sockaddr_in local;
        memset(&local, 0, sizeof(local)); // clear sin_zero and padding
        local.sin_family = AF_INET;
        local.sin_port = htons(port);
        local.sin_addr.s_addr = htonl(INADDR_ANY);
        if (bind(sockfd, (struct sockaddr*)&local, sizeof(local)) < 0) {
            cerr << "bind error" << endl;
            exit(2);
        }
    }
    static void Listen(int sockfd) {
        if (listen(sockfd, BACKLOG) < 0) {
            cerr << "listen error" << endl;
            exit(3);
        }
    }
    static int Accept(int sockfd) {
        struct sockaddr_in peer;
        socklen_t len = sizeof(peer);
        int sock = accept(sockfd, (struct sockaddr*)&peer, &len);
        if (sock < 0) {
            cerr << "accept error" << endl;
            return -1; // a failed accept is not fatal: the server keeps running
        }
        return sock;
    }
    static void Setsockopt(int lsock) {
        int opt = 1;
        // Allow restarting on the same port while old connections are in TIME_WAIT
        setsockopt(lsock, SOL_SOCKET, SO_REUSEADDR, &opt, sizeof(opt));
    }
};
```

epoll.hpp

```cpp
/* epoll.hpp */
#pragma once
#include <cerrno>
#include "sock.hpp"

#define SIZE 64

struct bucket { // Per-connection state, attached to epoll_event.data.ptr
    int fd;
    int pos;             // number of bytes currently stored in buf
    char buf[10] = {0};  // deliberately tiny so the "buffer full" path is easy to hit

    bucket(int _fd) : fd(_fd), pos(0) {}
};

class EpollServer {
private:
    int port;
    int lsock;
    int epfd; // file descriptor of the epoll model

public:
    EpollServer(int _port = 8080)
        : port(_port)
        , lsock(-1)
        , epfd(-1) {}

    void Init() {
        lsock = Sock::Socket();
        Sock::Setsockopt(lsock);
        Sock::Bind(lsock, port);
        Sock::Listen(lsock);
        epfd = epoll_create(10); // size is ignored, but must be greater than 0
        if (epfd < 0) {
            cerr << "epoll_create error" << endl;
            exit(6);
        }
    }

    void Start() {
        // Add lsock to the epoll model: a read event on it means a new connection
        AddFd2Epoll(lsock, EPOLLIN);

        struct epoll_event ee[SIZE];
        while (true) { // main server loop
            int ret = epoll_wait(epfd, ee, SIZE, 1000);
            if (ret > 0) {
                HandleEvents(ee, ret);
            } else if (ret == 0) {
                cout << "timeout..." << endl;
            } else if (errno != EINTR) { // interruption by a signal is not fatal
                cerr << "epoll_wait error" << endl;
                exit(7);
            }
        }
    }

    ~EpollServer() {
        if (lsock >= 0) close(lsock);
        if (epfd >= 0) close(epfd);
    }

private: // helpers used only inside the class
    void HandleEvents(struct epoll_event* pEvents, int num) {
        for (int i = 0; i < num; ++i) { // only the first num entries are valid
            bucket* b_ptr = static_cast<bucket*>(pEvents[i].data.ptr);
            uint32_t ev = pEvents[i].events;

            if (b_ptr->fd == lsock) { // listening socket is readable: accept
                int sock = Sock::Accept(lsock);
                if (sock >= 0) AddFd2Epoll(sock, EPOLLIN);
            } else if (ev & EPOLLIN) { // read event ready
                // Read into the free tail of buf, keeping one byte for '\0'
                ssize_t rest = sizeof(b_ptr->buf) - b_ptr->pos - 1;
                ssize_t ss = recv(b_ptr->fd, b_ptr->buf + b_ptr->pos, rest, 0);
                if (ss > 0) {
                    b_ptr->pos += ss;             // append instead of overwriting
                    b_ptr->buf[b_ptr->pos] = 0;
                    cout << b_ptr->buf << endl;
                    if (ss == rest) { // buf is full: switch to write mode and echo it
                        struct epoll_event temp; // epoll_ctl copies this into the kernel
                        temp.events = EPOLLOUT;
                        temp.data.ptr = b_ptr;
                        epoll_ctl(epfd, EPOLL_CTL_MOD, b_ptr->fd, &temp);
                    } // otherwise keep reading on the next EPOLLIN
                } else {
                    if (ss == 0) cout << "client quit..." << endl;
                    else cerr << "recv error" << endl; // drop this client only
                    CloseConn(b_ptr);
                }
            } else if (ev & EPOLLOUT) { // write event ready
                ssize_t ss = send(b_ptr->fd, b_ptr->buf, b_ptr->pos, 0);
                if (ss < 0) {
                    cerr << "send error" << endl;
                    CloseConn(b_ptr);
                    continue;
                }
                b_ptr->pos -= ss;
                memmove(b_ptr->buf, b_ptr->buf + ss, b_ptr->pos); // keep the unsent tail
                b_ptr->buf[b_ptr->pos] = 0;
                if (b_ptr->pos == 0) CloseConn(b_ptr); // everything echoed: close
            } else { // EPOLLERR / EPOLLHUP without IN or OUT
                CloseConn(b_ptr);
            }
        }
    }

    void AddFd2Epoll(int fd, uint32_t events) {
        struct epoll_event event;
        event.events = events;
        event.data.ptr = new bucket(fd); // freed in CloseConn
        if (epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &event) < 0) {
            cerr << "epoll_ctl add error" << endl;
            delete static_cast<bucket*>(event.data.ptr);
            if (fd != lsock) close(fd);
        }
    }

    void DelFdFromEpoll(int fd) {
        epoll_ctl(epfd, EPOLL_CTL_DEL, fd, nullptr);
    }

    void CloseConn(bucket* b_ptr) {
        DelFdFromEpoll(b_ptr->fd);
        close(b_ptr->fd);
        delete b_ptr;
    }
};
```

epoll.cc

```cpp
#include "epoll.hpp"

int main() {
    EpollServer es(8080);
    es.Init();
    es.Start(); // runs forever; the destructor closes lsock and epfd
    return 0;
}
```

epoll's working modes

- Zhang San and Li Si are both couriers.

- One day, Zhang San has a package for Xiao Ming. He goes downstairs to Xiao Ming's building and calls him. Xiao Ming is playing League of Legends and his base is about to fall, so he tells Zhang San to wait a minute. After a while, Zhang San calls Xiao Ming again to come get the package. Xiao Ming still hasn't finished, so Zhang San waits a bit longer, and then keeps calling...

- Li Si calls once and never calls again, unless a new package arrives for Xiao Ming.

Level-triggered (LT)

- Zhang San's way of delivering packages is level-triggered, LT (Level Triggered).

- epoll works in LT mode by default.

- When epoll detects that an event on a socket is ready, the program does not have to handle it immediately, and it may handle only part of it.

- For example, the socket receives 2 KB of data and the first read takes only 1 KB, so 1 KB remains in the buffer. When epoll_wait is called the second time, it still returns immediately and reports that the socket's read event is ready.

- epoll_wait keeps returning immediately for that socket until all the data in the buffer has been processed.

- LT supports both blocking and non-blocking reads and writes.

To put it simply: as long as the underlying data is ready, epoll keeps notifying the upper layer. If the data has not all been taken, it keeps sending notifications.
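The 2 KB / 1 KB example can be reproduced with a pipe standing in for the socket, which is also the scenario the epoll(7) man page describes. This sketch assumes Linux, and LtPartialRead is a hypothetical name. Because no EPOLLET flag is set, the second epoll_wait still reports the pipe while 1 KB remains unread.

```cpp
#include <sys/epoll.h>
#include <unistd.h>
#include <cassert>
#include <utility>

// Hypothetical demo: returns {events from 1st wait, events from 2nd wait}.
std::pair<int, int> LtPartialRead() {
    int p[2];
    if (pipe(p) != 0) return {-1, -1};
    int epfd = epoll_create1(0);

    struct epoll_event ev{};
    ev.events = EPOLLIN;                        // no EPOLLET: level-triggered (default)
    ev.data.fd = p[0];
    epoll_ctl(epfd, EPOLL_CTL_ADD, p[0], &ev);

    char buf[2048] = {0};
    ssize_t w = write(p[1], buf, sizeof(buf));  // 2 KB arrive at once
    struct epoll_event out[1];
    int first = epoll_wait(epfd, out, 1, 0);    // timeout 0: check without blocking
    ssize_t r = read(p[0], buf, 1024);          // consume only 1 KB; 1 KB stays
    int second = epoll_wait(epfd, out, 1, 0);   // LT: the fd is reported again
    (void)w;
    (void)r;

    close(p[0]);
    close(p[1]);
    close(epfd);
    return {first, second};
}
```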

Edge-triggered (ET)

- Li Si's working mode is ET (Edge Triggered).

- To put it simply: when new data arrives at the bottom layer, epoll notifies the upper layer once that data is ready and should be fetched quickly. If it is not fetched, or not all fetched in one go, epoll_wait will not tell you again unless new data arrives.

- When epoll detects that an event on the socket is ready, it must be handled immediately.

- In the example above, if only 1 KB is read and 1 KB remains in the buffer, the second call to epoll_wait will not return for this socket.

- In other words, in ET mode, once an event on a file descriptor becomes ready, there is only one chance to process it.

- ET is generally more efficient than LT because epoll_wait returns far fewer times. Nginx uses epoll in ET mode by default.

- ET only works correctly with non-blocking reads and writes.
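The same pipe experiment with EPOLLET added shows the "only one chance" behavior. As before this assumes Linux, and EtPartialRead is a hypothetical name. The first wait reports the pipe; after a partial read, no new data arrives, so the second wait reports nothing even though 1 KB is still buffered.

```cpp
#include <sys/epoll.h>
#include <unistd.h>
#include <cassert>
#include <utility>

// Hypothetical demo: returns {events from 1st wait, events from 2nd wait}.
std::pair<int, int> EtPartialRead() {
    int p[2];
    if (pipe(p) != 0) return {-1, -1};
    int epfd = epoll_create1(0);

    struct epoll_event ev{};
    ev.events = EPOLLIN | EPOLLET;              // edge-triggered
    ev.data.fd = p[0];
    epoll_ctl(epfd, EPOLL_CTL_ADD, p[0], &ev);

    char buf[2048] = {0};
    ssize_t w = write(p[1], buf, sizeof(buf));  // 2 KB arrive: one edge
    struct epoll_event out[1];
    int first = epoll_wait(epfd, out, 1, 0);    // reported once
    ssize_t r = read(p[0], buf, 1024);          // partial read leaves 1 KB
    int second = epoll_wait(epfd, out, 1, 0);   // ET: no new edge, nothing reported
    (void)w;
    (void)r;

    close(p[0]);
    close(p[1]);
    close(epfd);
    return {first, second};
}
```

With a blocking wait (timeout -1) instead of 0, the second call would hang even though unread data is still in the buffer.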

Other notes:

- To put a socket's events in ET mode, bitwise-OR EPOLLET into the events field when registering it (for example, EPOLLIN | EPOLLET).

- Because epoll_wait returns less often in ET mode, the program has to meet higher requirements: each time it is notified, it should read all available data at once. Otherwise the remaining data stays in the buffer with no further notification and may never be processed.

How do we know that all the data has been read?

- An analogy: you ask your father for money, 100 at a time. If he gives you 100, he may still have money left. If he gives you only 30 (less than 100), he has run out.

- Similarly, we read in a loop, 512 bytes at a time. When a read returns fewer than 512 bytes, the data has been fully read.

- But if the socket holds exactly 1024 bytes, both reads return a full 512, so we cannot tell whether more data is coming and have to read a third time. On a blocking socket, that third read gets stuck because the buffer is now empty!

- Our multiplexing server is a single process and must never get stuck like this. Therefore file descriptors in ET mode must be non-blocking: the third read then fails immediately with errno set to EAGAIN (or EWOULDBLOCK), which tells us the buffer has been drained.
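A sketch of the non-blocking read loop described above, assuming Linux/POSIX. SetNonBlocking, Drain, and DrainResult are hypothetical names. Rather than relying only on "fewer than 512 bytes", it keeps reading until read fails with EAGAIN/EWOULDBLOCK, which also handles the exact-multiple case: with 1024 bytes buffered, two full reads are followed by EAGAIN instead of a blocked third read.

```cpp
#include <fcntl.h>
#include <unistd.h>
#include <cerrno>
#include <cstddef>
#include <cassert>
#include <string>

// Switch fd to non-blocking mode, preserving its other status flags.
bool SetNonBlocking(int fd) {
    int flags = fcntl(fd, F_GETFL);
    if (flags < 0) return false;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) == 0;
}

enum class DrainResult { kDrained, kEof, kError };

// Read everything currently available from a non-blocking fd, 512 bytes at a
// time, appending it to *out.
DrainResult Drain(int fd, std::string* out) {
    char buf[512];
    for (;;) {
        ssize_t n = read(fd, buf, sizeof(buf));
        if (n > 0) {
            out->append(buf, static_cast<size_t>(n));
            continue;                                    // there may be more
        }
        if (n == 0) return DrainResult::kEof;            // peer closed its end
        if (errno == EINTR) continue;                    // interrupted: retry
        if (errno == EAGAIN || errno == EWOULDBLOCK)
            return DrainResult::kDrained;                // buffer empty for now
        return DrainResult::kError;                      // a real error
    }
}
```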

LT vs ET

- LT is the default behavior of epoll. Using ET reduces the number of times epoll reports events, but the price is that the programmer is forced to process all the data in a single notification.

- With ET, once a file descriptor is ready it is not reported as ready again, which is why ET looks more efficient than LT: its notifications never repeat. However, if an LT program always processes a ready file descriptor completely as soon as it is notified, LT has nothing to repeat either, and the performance is essentially the same.

- On the other hand, ET code is more complex (there is no way around it: when the bottom layer is kept simple, the cost is complexity in the upper layer).

- In practice, we can combine the two working modes and choose a different mode for each socket.
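Mixing modes simply means choosing the flags per fd at registration time. In this sketch AddFd is a hypothetical helper: an fd registered with edge_triggered = true is first made non-blocking, since ET handlers must read until EAGAIN, while LT fds are left unchanged. Which sockets get which mode is a design choice, not something epoll dictates.

```cpp
#include <sys/epoll.h>
#include <fcntl.h>
#include <unistd.h>
#include <cstdint>
#include <cassert>

// Hypothetical helper: register fd for read events in LT or ET mode.
int AddFd(int epfd, int fd, bool edge_triggered) {
    uint32_t events = EPOLLIN;
    if (edge_triggered) {
        // ET handlers drain until EAGAIN, so a blocking fd would stall the loop.
        int flags = fcntl(fd, F_GETFL);
        if (flags < 0 || fcntl(fd, F_SETFL, flags | O_NONBLOCK) < 0) return -1;
        events |= EPOLLET;          // opt only this fd into edge-triggered mode
    }
    struct epoll_event ev{};
    ev.events = events;
    ev.data.fd = fd;
    return epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev);
}
```

For example, keeping a listening socket in LT is convenient: if several connections arrive together and the loop accepts only one per notification, LT reports the socket again on the next epoll_wait.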