L2 cache principle

1. L2 cache

1.1 definitions

The L2 (second-level) cache is also called the application-level cache. Unlike the L1 cache, its scope is the whole application, so it can be shared across threads and sessions. This gives it a higher hit rate, and it is best suited to data that is read often but rarely modified.

Before going further into the L2 cache, a brief recap of the L1 cache is useful, because the L1 cache is what everyday MyBatis usage relies on by default.

While an application runs, the same SQL with identical query conditions may be executed many times within one database session. MyBatis provides the first-level cache to optimize this case: if the SQL statement and its parameters are the same, the first-level cache is checked first, which avoids querying the database again and improves performance.

Each SqlSession holds an Executor, and each Executor has a LocalCache. When the user issues a query, MyBatis resolves the MappedStatement for the statement being executed and looks the query up in the LocalCache. On a hit, the cached result is returned directly. On a miss, the database is queried, the result is written to the LocalCache, and then the result is returned to the user.
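The hit/miss flow described above can be sketched in a few lines. This is a simplified illustration and not the MyBatis implementation: the class `LocalCacheSketch`, the key made of statement ID plus parameter, and the `database` function standing in for a real query are all assumptions made for the example.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical session-scoped cache; names are illustrative only.
class LocalCacheSketch {
    private final Map<List<Object>, Object> localCache = new HashMap<>();
    private int databaseCalls = 0;

    // 'database' stands in for the real query against the database.
    Object query(String statementId, Object parameter, Function<Object, Object> database) {
        List<Object> key = Arrays.asList(statementId, parameter);
        if (localCache.containsKey(key)) {
            return localCache.get(key);            // hit: no database call
        }
        databaseCalls++;
        Object result = database.apply(parameter); // miss: query the database
        localCache.put(key, result);               // fill the cache for next time
        return result;
    }

    int getDatabaseCalls() {
        return databaseCalls;
    }
}
```

Calling `query` twice with the same statement ID and parameter reaches the stand-in database only once. A different parameter produces a different key and therefore a miss.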

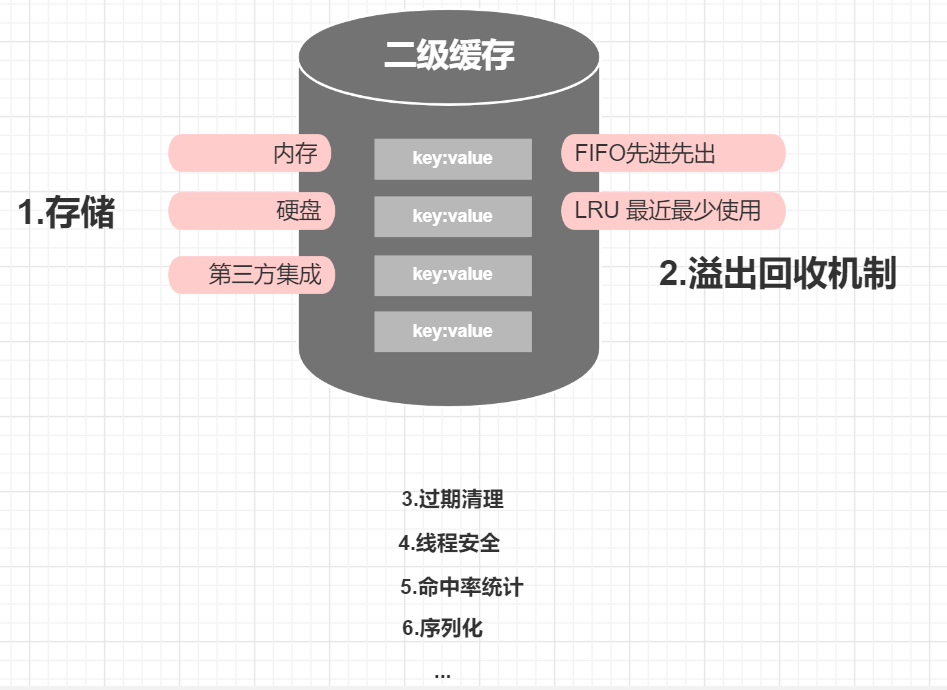

1.2 scalability requirements

The L2 cache lives as long as the application, so its capacity must be bounded. MyBatis handles this with eviction policies (such as LRU and FIFO) that remove entries when the cache overflows. The L1 cache is session-scoped and short-lived, so it does not need these features. In that respect, the L2 cache mechanism is the more complete of the two.

1.3 structure

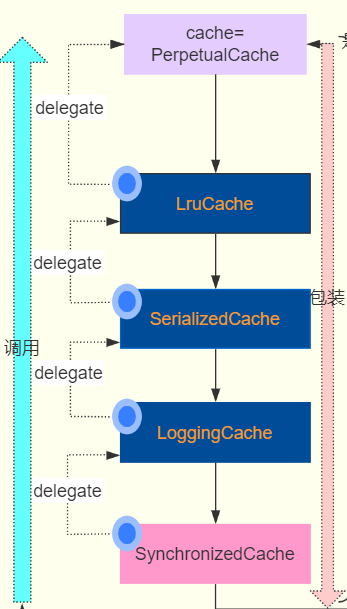

The L2 cache is structured as a chain of decorators, combining the decorator pattern with a chain of responsibility.

How does the L2 cache assemble these decorators?

CacheBuilder is the builder class for the L2 cache. It holds the properties of the decorators shown in the figure above, and its build() method combines the behavior of these decorators.

    public Cache build() {
        // Fall back to PerpetualCache + LruCache when nothing was configured
        this.setDefaultImplementations();
        Cache cache = this.newBaseCacheInstance(this.implementation, this.id);
        this.setCacheProperties(cache);
        if (PerpetualCache.class.equals(cache.getClass())) {
            // Built-in base cache: apply the configured decorators, then the standard ones
            for (Class<? extends Cache> decorator : this.decorators) {
                cache = this.newCacheDecoratorInstance(decorator, cache);
                this.setCacheProperties(cache);
            }
            cache = this.setStandardDecorators(cache);
        } else if (!LoggingCache.class.isAssignableFrom(cache.getClass())) {
            // Custom implementation: only logging is added
            cache = new LoggingCache(cache);
        }
        return cache;
    }

    private void setDefaultImplementations() {
        if (this.implementation == null) {
            this.implementation = PerpetualCache.class;
            if (this.decorators.isEmpty()) {
                this.decorators.add(LruCache.class);
            }
        }
    }

    private Cache setStandardDecorators(Cache cache) {
        try {
            MetaObject metaCache = SystemMetaObject.forObject(cache);
            if (this.size != null && metaCache.hasSetter("size")) {
                metaCache.setValue("size", this.size);
            }
            if (this.clearInterval != null) {
                // Periodic clearing, only when an interval is configured
                cache = new ScheduledCache(cache);
                ((ScheduledCache) cache).setClearInterval(this.clearInterval);
            }
            if (this.readWrite) {
                // Serialize values so each caller gets its own copy
                cache = new SerializedCache(cache);
            }
            cache = new LoggingCache(cache);
            cache = new SynchronizedCache(cache);
            if (this.blocking) {
                // BlockingCache, when enabled, becomes the outermost decorator
                cache = new BlockingCache(cache);
            }
            return cache;
        } catch (Exception e) {
            throw new CacheException("Error building standard cache decorators. Cause: " + e, e);
        }
    }
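To make the wrapping order easier to see, here is a minimal, self-contained sketch of the same idea. It is an illustration under assumptions, not MyBatis code: `SimpleCache`, `MapCache`, `CountingCache`, `LockingCache` and `describe()` are hypothetical names invented for this example. Each decorator wraps the previous one, so the decorator added last ends up outermost.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical minimal cache contract for the sketch.
interface SimpleCache {
    void put(Object key, Object value);
    Object get(Object key);
    String describe();
}

// Innermost cache: a plain map, playing the role of the base cache.
class MapCache implements SimpleCache {
    private final Map<Object, Object> store = new HashMap<>();
    public void put(Object key, Object value) { store.put(key, value); }
    public Object get(Object key) { return store.get(key); }
    public String describe() { return "MapCache"; }
}

// Decorator that counts lookups, then delegates.
class CountingCache implements SimpleCache {
    private final SimpleCache delegate;
    int requests = 0;
    CountingCache(SimpleCache delegate) { this.delegate = delegate; }
    public void put(Object key, Object value) { delegate.put(key, value); }
    public Object get(Object key) { requests++; return delegate.get(key); }
    public String describe() { return "Counting(" + delegate.describe() + ")"; }
}

// Decorator that serializes access with an intrinsic lock, then delegates.
class LockingCache implements SimpleCache {
    private final SimpleCache delegate;
    LockingCache(SimpleCache delegate) { this.delegate = delegate; }
    public synchronized void put(Object key, Object value) { delegate.put(key, value); }
    public synchronized Object get(Object key) { return delegate.get(key); }
    public String describe() { return "Locking(" + delegate.describe() + ")"; }
}

class DecoratorChainSketch {
    // Wrap step by step; the last wrapper is the first to receive a call.
    static SimpleCache build() {
        SimpleCache cache = new MapCache();
        cache = new CountingCache(cache);
        cache = new LockingCache(cache);
        return cache;
    }
}
```

A call to `get` on the result of `build()` enters `LockingCache` first, passes through `CountingCache`, and finally reaches `MapCache`.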

1.4 SynchronizedCache: thread-synchronized cache

SynchronizedCache provides thread synchronization and, together with SerializedCache, keeps the L2 cache thread-safe. When blocking=false (blocking disabled), SynchronizedCache is at the front of the responsibility chain. When blocking is enabled, BlockingCache wraps SynchronizedCache and sits at the front of the chain instead. Either way, every call passes through a synchronizing decorator first, so the whole chain is thread-synchronized.

1.5 LoggingCache: hit-rate statistics and logging

    public class LoggingCache implements Cache {
        private final Log log;
        private final Cache delegate;
        protected int requests = 0;
        protected int hits = 0;

        public LoggingCache(Cache delegate) {
            this.delegate = delegate;
            this.log = LogFactory.getLog(this.getId());
        }

        // Excerpt: the remaining Cache methods are omitted here

        public Object getObject(Object key) {
            ++this.requests; // every lookup counts as one request
            Object value = this.delegate.getObject(key); // look up the wrapped cache
            if (value != null) {
                ++this.hits; // a non-null value counts as one hit
            }
            if (this.log.isDebugEnabled()) { // only logged when debug logging is on
                this.log.debug("Cache Hit Ratio [" + this.getId() + "]: " + this.getHitRatio());
            }
            return value;
        }

        // Hit ratio = number of hits / number of requests
        private double getHitRatio() {
            return (double) this.hits / (double) this.requests;
        }
    }
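As a small worked example of the ratio computed above: 3 hits out of 4 requests give 3 / 4 = 0.75. The class below is a standalone sketch with hypothetical names (`HitRatioSketch`, `record`), meant only to show the arithmetic and why the operands are cast to double before dividing.

```java
// Hypothetical counter mirroring the requests/hits bookkeeping.
class HitRatioSketch {
    private int requests = 0;
    private int hits = 0;

    // Record one lookup; a non-null value is treated as a hit.
    void record(Object cachedValue) {
        requests++;
        if (cachedValue != null) {
            hits++;
        }
    }

    // Cast before dividing: integer division would truncate 3 / 4 to 0.
    double hitRatio() {
        return (double) hits / (double) requests;
    }
}
```

Because the request counter is incremented before the ratio is read, the divisor is never zero as long as `hitRatio()` is called after at least one `record`.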

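1.6 ScheduledCache: periodic cache clearing

The flushInterval attribute is given in milliseconds, so @CacheNamespace(flushInterval=100L) sets the clearing interval to 100 ms. A ScheduledCache created without an explicit interval defaults to 1 hour, as the constructor below shows. If flushInterval is left at 0, no periodic clearing is configured and the cache is never cleared on a timer.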

    public class ScheduledCache implements Cache {
        private final Cache delegate;
        protected long clearInterval;
        protected long lastClear;

        public ScheduledCache(Cache delegate) {
            this.delegate = delegate;
            this.clearInterval = 3600000L; // default interval: 1 hour, in milliseconds
            this.lastClear = System.currentTimeMillis();
        }

        // Excerpt: the remaining Cache methods are omitted here

        public void clear() {
            this.lastClear = System.currentTimeMillis();
            this.delegate.clear();
        }

        private boolean clearWhenStale() {
            // Has more time passed since the last clear than the configured interval?
            if (System.currentTimeMillis() - this.lastClear > this.clearInterval) {
                this.clear(); // clearing is all-or-nothing: the entire cache is emptied
                return true;
            } else {
                return false;
            }
        }
    }
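The excerpt shows clearWhenStale() but not where it is called from. The sketch below assumes the check runs lazily on each access rather than on a background thread. That is an assumption of this example, as are the names `ExpiringCacheSketch` and the injectable `clock`, which is there only so that the passage of time can be simulated.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

// Hypothetical lazily expiring cache; the whole cache is cleared at once.
class ExpiringCacheSketch {
    private final Map<Object, Object> store = new HashMap<>();
    private final LongSupplier clock;       // assumed time source, in milliseconds
    private final long clearIntervalMillis;
    private long lastClear;

    ExpiringCacheSketch(long clearIntervalMillis, LongSupplier clock) {
        this.clearIntervalMillis = clearIntervalMillis;
        this.clock = clock;
        this.lastClear = clock.getAsLong();
    }

    void put(Object key, Object value) {
        clearWhenStale();
        store.put(key, value);
    }

    // A stale cache is cleared first, so the lookup reports a miss.
    Object get(Object key) {
        return clearWhenStale() ? null : store.get(key);
    }

    private boolean clearWhenStale() {
        if (clock.getAsLong() - lastClear > clearIntervalMillis) {
            lastClear = clock.getAsLong();
            store.clear();
            return true;
        }
        return false;
    }
}
```

With an interval of 100 ms, an entry written at time 0 is still returned at time 50, but a lookup at time 101 clears everything and returns null.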

1.7 LruCache: least-recently-used eviction

Internally, LruCache keeps its keys in an access-ordered LinkedHashMap and evicts the least recently used entry when the cache overflows.

    public void setSize(final int size) {
        // accessOrder=true: iteration order runs from least to most recently accessed
        this.keyMap = new LinkedHashMap<Object, Object>(size, 0.75F, true) {
            private static final long serialVersionUID = 4267176411845948333L;

            protected boolean removeEldestEntry(Map.Entry<Object, Object> eldest) {
                boolean tooBig = this.size() > size;
                if (tooBig) {
                    // Remember the key so it can also be removed from the wrapped cache
                    LruCache.this.eldestKey = eldest.getKey();
                }
                return tooBig;
            }
        };
    }

    // Each access touches the key in keyMap, which moves it to the end of the linked list
    public Object getObject(Object key) {
        this.keyMap.get(key);
        return this.delegate.getObject(key);
    }
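The access-order behavior of LinkedHashMap that this relies on can be tried in isolation. The following is a minimal sketch, with `LruSketch` as a hypothetical name, that stores values directly in the map instead of keeping a separate key map and delegate.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical bounded map that evicts the least recently used entry.
class LruSketch<K, V> extends LinkedHashMap<K, V> {
    private final int capacity;

    LruSketch(int capacity) {
        super(16, 0.75f, true); // true = order entries by access, not by insertion
        this.capacity = capacity;
    }

    // Called by LinkedHashMap after each insertion; returning true drops the eldest entry.
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > capacity;
    }
}
```

With a capacity of 2, inserting `a` and `b`, reading `a`, and then inserting `c` evicts `b`, because the read made `a` the more recently used of the two.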

1.8 FifoCache: first-in-first-out eviction

Internally, FifoCache keeps a queue of keys and uses it to evict entries in first-in-first-out order when the cache overflows.

    public class FifoCache implements Cache {
        private final Cache delegate;
        private final Deque<Object> keyList;
        private int size;

        public FifoCache(Cache delegate) {
            this.delegate = delegate;
            this.keyList = new LinkedList<>();
            this.size = 1024; // default capacity
        }

        // Excerpt: the remaining Cache methods are omitted here

        public void putObject(Object key, Object value) {
            this.cycleKeyList(key);
            this.delegate.putObject(key, value);
        }

        public Object getObject(Object key) {
            return this.delegate.getObject(key);
        }

        private void cycleKeyList(Object key) {
            this.keyList.addLast(key);
            if (this.keyList.size() > this.size) { // more keys queued than the configured size?
                Object oldestKey = this.keyList.removeFirst(); // take the key at the head of the queue
                this.delegate.removeObject(oldestKey); // remove the matching entry from the cache
            }
        }
    }
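One consequence worth seeing in action is that, unlike the LRU policy in section 1.7, reading an entry does not protect it from eviction under FIFO. The sketch below is a simplified stand-in with hypothetical names (`FifoSketch`, `capacity`), not the MyBatis class.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical bounded cache that evicts strictly in insertion order.
class FifoSketch {
    private final Map<Object, Object> store = new HashMap<>();
    private final Deque<Object> keys = new ArrayDeque<>();
    private final int capacity;

    FifoSketch(int capacity) {
        this.capacity = capacity;
    }

    void put(Object key, Object value) {
        keys.addLast(key);
        if (keys.size() > capacity) {
            store.remove(keys.removeFirst()); // drop the oldest inserted key
        }
        store.put(key, value);
    }

    // Reads do not touch the queue, so they never change the eviction order.
    Object get(Object key) {
        return store.get(key);
    }
}
```

With a capacity of 2, inserting `a` and `b`, reading `a`, and then inserting `c` still evicts `a`, since it was inserted first.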

1.9 L2 cache usage (hit condition)

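- The session that loaded the data has been committed

- The SQL statement and parameters are the same

- Same statementID

- Same RowBounds

Turning on auto-commit for a session is not enough to hit the L2 cache: query results are published to the L2 cache only when the session is explicitly committed or closed.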
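The matching conditions in the list amount to comparing a composite key. As a rough sketch of that idea, the class below builds a key from the statement ID, the SQL text, the parameters and the row bounds. `QueryKeySketch` and its fields are hypothetical and only illustrate the comparison; MyBatis uses its own CacheKey class for this.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;

// Hypothetical composite key: two queries match only if every part is equal.
final class QueryKeySketch {
    private final String statementId;
    private final String sql;
    private final List<Object> parameters;
    private final int offset; // row bounds: first row
    private final int limit;  // row bounds: maximum number of rows

    QueryKeySketch(String statementId, String sql, List<Object> parameters, int offset, int limit) {
        this.statementId = statementId;
        this.sql = sql;
        this.parameters = new ArrayList<>(parameters); // defensive copy
        this.offset = offset;
        this.limit = limit;
    }

    @Override
    public boolean equals(Object other) {
        if (this == other) return true;
        if (!(other instanceof QueryKeySketch)) return false;
        QueryKeySketch that = (QueryKeySketch) other;
        return offset == that.offset
                && limit == that.limit
                && statementId.equals(that.statementId)
                && sql.equals(that.sql)
                && parameters.equals(that.parameters);
    }

    @Override
    public int hashCode() {
        return Objects.hash(statementId, sql, parameters, offset, limit);
    }
}
```

Used as a map key, two instances built from the same values find the same entry, while changing any single part, such as the row limit, produces a miss.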

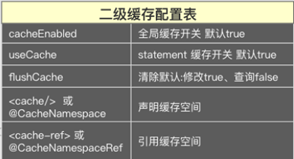

2. L2 cache configuration

2.1 configuration

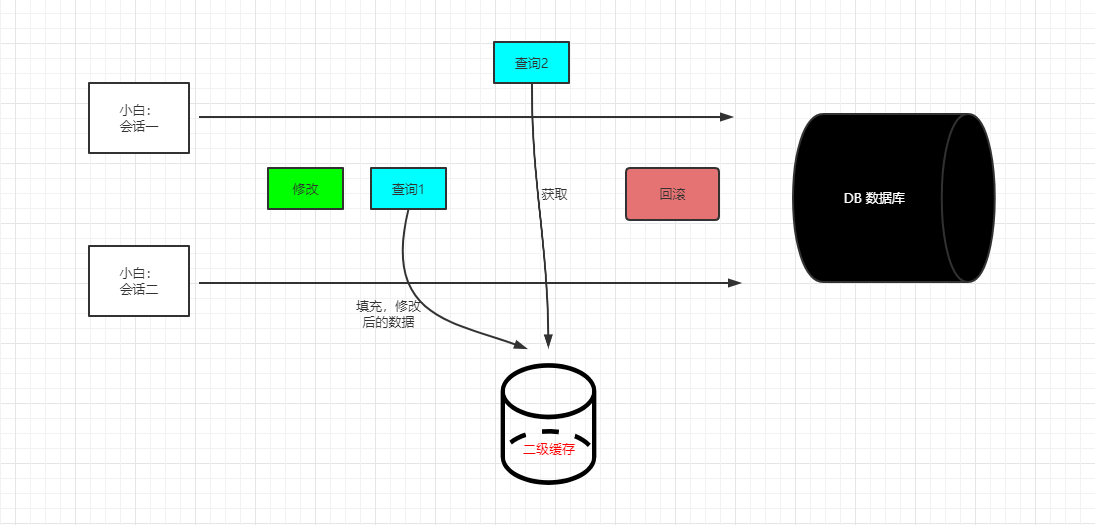

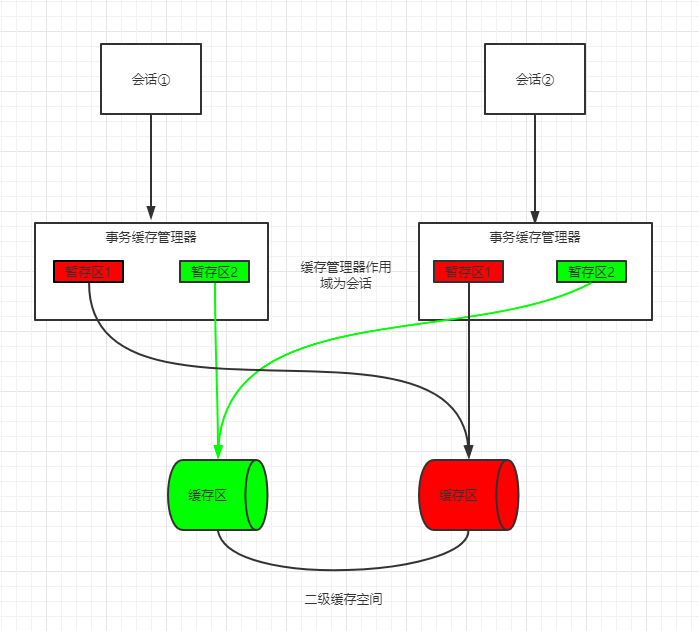

2.2 why the L2 cache can only be hit after a commit

Session 1 and session 2 are two isolated transactions, but a shared L2 cache would make their uncommitted changes visible to each other and allow dirty reads. Suppose the modification made by session 2 were written straight into the L2 cache: session 1 would then find that data in the cache and get it back directly. If session 2 then rolled back, the data read by session 1 would be dirty. To solve this problem, the MyBatis L2 cache mechanism introduces a transactional cache manager with staging areas. All changed data is first held in a staging area of the transactional cache manager. Only after commit is executed is the data actually moved from the staging area into the L2 cache.

- Session : transactional cache manager : staging area = 1 : 1 : N

- Staging area : cache area = 1 : 1 (each staging area corresponds to exactly one cache area)

- When the session is closed, its transactional cache manager is closed and the staging areas are emptied

- One transactional cache manager manages multiple staging areas

- The number of staging areas depends on how many Mapper files are accessed (each cache is keyed by the full path ID of the Mapper file)
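The staging idea can be sketched independently of MyBatis. In the example below, each session gets its own staging area in front of a shared map. Writes stay in the staging area until commit, and rollback simply discards them. All names (`StagingAreaSketch`, `sharedCache`, `pending`) are hypothetical, and the sketch ignores thread safety for brevity.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical per-session staging area in front of a shared cache.
class StagingAreaSketch {
    private final Map<Object, Object> sharedCache;             // visible to all sessions
    private final Map<Object, Object> pending = new HashMap<>(); // visible to nobody until commit

    StagingAreaSketch(Map<Object, Object> sharedCache) {
        this.sharedCache = sharedCache;
    }

    // Writes are staged, not published.
    void put(Object key, Object value) {
        pending.put(key, value);
    }

    // Reads see only what has been committed to the shared cache.
    Object get(Object key) {
        return sharedCache.get(key);
    }

    // Commit publishes the staged entries to the shared cache.
    void commit() {
        sharedCache.putAll(pending);
        pending.clear();
    }

    // Rollback drops the staged entries; the shared cache is untouched.
    void rollback() {
        pending.clear();
    }
}
```

If session 2 stages a value and rolls back, session 1 never sees it. Only a value that session 2 commits becomes visible through the shared cache.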

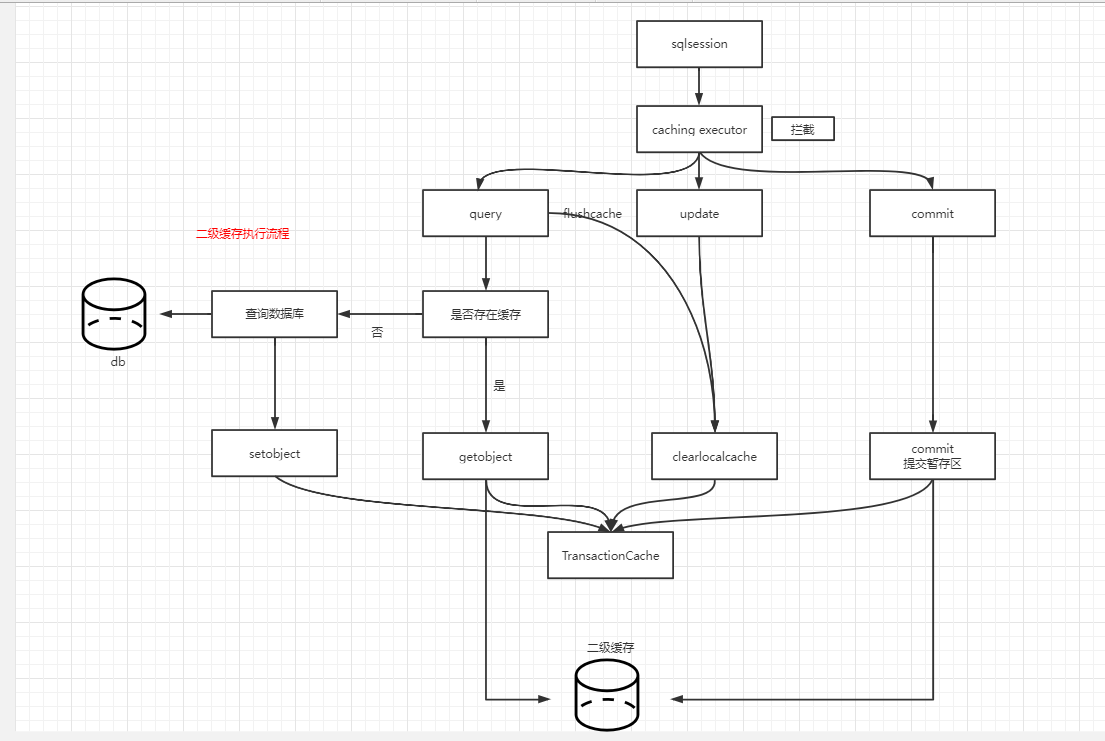

2.3 L2 cache execution process

- Queries read the L2 cache directly, in real time.

- All changes to the L2 cache go through the staging area.

- Clearing only sets a clear flag on the staging area; the data in the L2 cache is not removed at that point. It is removed only when commit is executed.

- Queries read the cache in real time, but if the staging area's clear flag is true, null is returned even when the cache still holds the data, and the database is queried again. This prevents dirty reads: the cached data is about to be discarded and may no longer be valid, so returning null and re-querying the database yields the correct data.
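The clear-flag behavior in the last two points can be sketched as follows. This is a simplified model under assumptions, not the MyBatis source: `ClearOnCommitSketch` and its method names are hypothetical, and the staging of new entries is left out so that only the flag is shown.

```java
import java.util.Map;

// Hypothetical view of a shared cache with a deferred clear.
class ClearOnCommitSketch {
    private final Map<Object, Object> sharedCache;
    private boolean clearOnCommit = false;

    ClearOnCommitSketch(Map<Object, Object> sharedCache) {
        this.sharedCache = sharedCache;
    }

    // Clearing only raises the flag; the shared cache keeps its data for now.
    void clear() {
        clearOnCommit = true;
    }

    // While the flag is raised, every lookup reports a miss.
    Object get(Object key) {
        Object value = sharedCache.get(key);
        return clearOnCommit ? null : value;
    }

    // Commit performs the deferred clear and resets the flag.
    void commit() {
        if (clearOnCommit) {
            sharedCache.clear();
        }
        clearOnCommit = false;
    }

    // Rollback forgets the pending clear; cached data becomes visible again.
    void rollback() {
        clearOnCommit = false;
    }
}
```

Between `clear()` and `commit()`, this session gets null and falls back to the database, while the shared cache itself still holds the old data until the commit actually removes it.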

When the L2 cache is enabled, queries go through the query method of the CachingExecutor class:

    public <E> List<E> query(MappedStatement ms, Object parameterObject, RowBounds rowBounds, ResultHandler resultHandler, CacheKey key, BoundSql boundSql) throws SQLException {
        Cache cache = ms.getCache(); // the L2 cache configured for this statement, if any
        if (cache != null) {
            // If the statement is configured with flushCache=true, clear the staging area
            this.flushCacheIfRequired(ms);
            if (ms.isUseCache() && resultHandler == null) {
                this.ensureNoOutParams(ms, boundSql);
                List<E> list = (List<E>) this.tcm.getObject(cache, key); // look up the L2 cache
                if (list == null) {
                    // Miss: query the database and put the result into the staging area
                    list = this.delegate.query(ms, parameterObject, rowBounds, resultHandler, key, boundSql);
                    this.tcm.putObject(cache, key, list);
                }
                return list;
            }
        }
        // No L2 cache applies to this statement: delegate directly
        return this.delegate.query(ms, parameterObject, rowBounds, resultHandler, key, boundSql);
    }

The tcm.getObject(cache, key) call in the previous step queries the L2 cache through the staging area's getObject method:

    public Object getObject(Object key) {
        Object object = this.delegate.getObject(key); // query the L2 cache
        if (object == null) {
            // Cache miss: record the key; a null placeholder is stored for it on commit
            this.entriesMissedInCache.add(key);
        }
        // If the staging area's clear flag is set, return null so the caller
        // re-queries the database instead of risking a dirty read
        return this.clearOnCommit ? null : object;
    }