background

When the business party migrates, the total data size is only more than 300 M, but the MySQL server prompts that the packet exceeds max_allowed_packet, but there is no problem with 10G pressure test data during test migration?

The final solution to the problem is very simple, adjust the appropriate max_ allowed_ The size of the packet and create a new connection,

However, we can take this opportunity to learn more about MySQL packets, such as where MySQL limits the packet size and so on

net_buffer_length

| edition | mysqldump | mysql client | mysql server |

|---|---|---|---|

| 5.7 | default: 1MB max: 16MB | default: 16KB | default: 16KB max: 1MB |

| 8.0 | default: 1MB | default: 16KB | default: 16KB |

max_allowed_packet

max allowed packet sets the maximum size of any single message between MySQL Server and Client, including replica (master-slave or MGR)

| edition | mysqldump | mysql client | mysql server |

|---|---|---|---|

| 5.5 | default: 24MB | default: 16MB | default: 1MB |

| 5.7 | default: 24MB | default: 16MB | default: 4MB |

| 8.0 | default: 24MB | default: 16MB | default: 64MB |

A MySQL API function that needs to be mentioned here: mysql_stmt_send_long_data()

This function is used (when using the preprocessing function) to allow parameter data to be sent to the server in blocks for multiple times. For example, when the size of blob or text exceeds the size of max_allowed_packet. The premise is that the column type must be text or BLOB data type

effect

The functions of the above two parameters are: each packet sent to or read from the network will be saved in net - > buff first, and will not be sent to the opposite end through socket until Net - > buff is full or a command is completed. Net - > buff has an initial size (net - > max_packet), which will expand with the increase of read data until max_ allowed_ Size of packet

Related source code

MySQL Packet structure

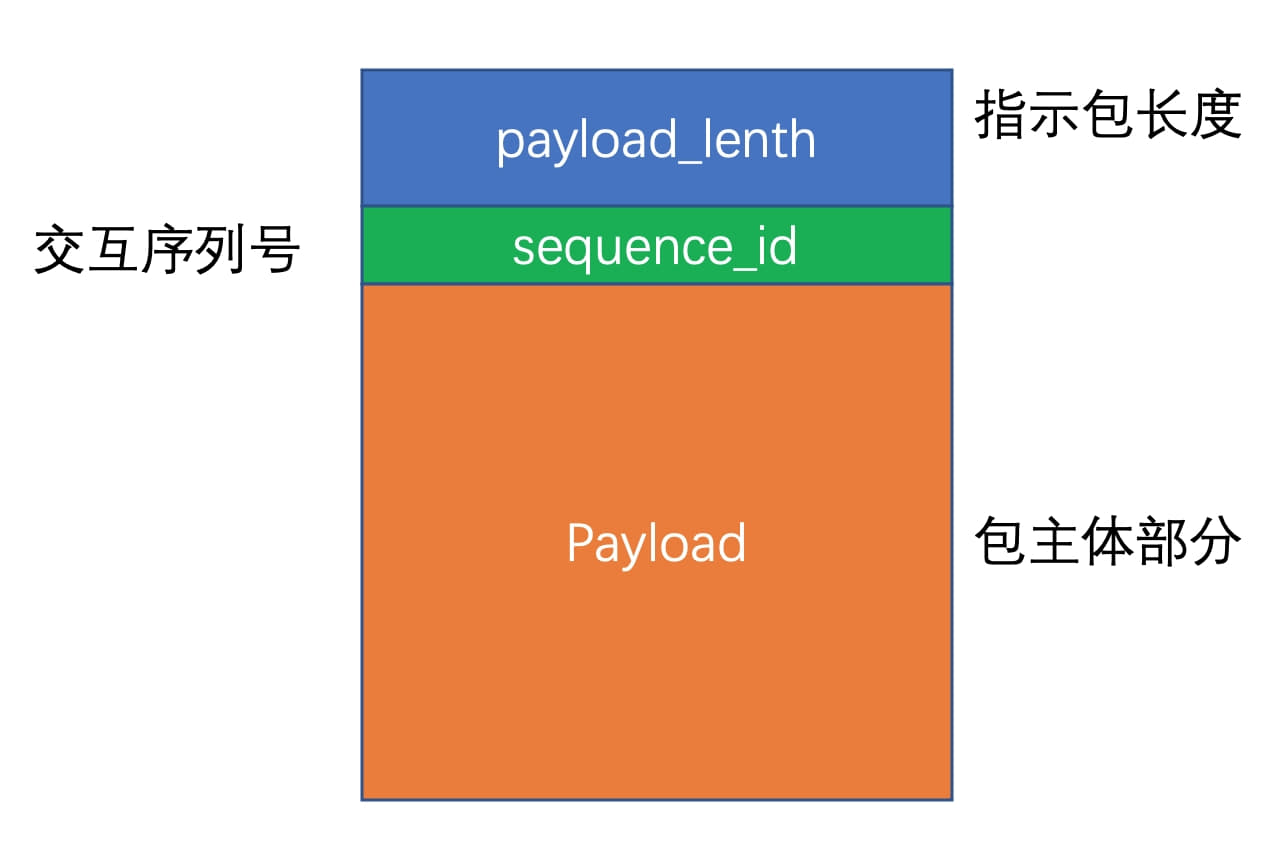

The interaction between MySQL client and server is conducted in packets. The size and length of each packet is limited to 2 ^ 24 − 1 bytes (i.e. 16MB). If the packet length is too large, the client needs to fragment the packet itself so that the length of each segment is below the maximum length of MySQL Packet. MySQL Packet is composed of Header and Body,.

The Header contains two fields: payload_length and sequence_id. the body is the main part of the package, and its length is determined by the payload in the Header_ The length field indicates.

Including payload_length takes up 3 bytes, sequence_id occupies 1 byte.

Therefore, the maximum length of a Pakcet in MySQL is 16M + 4 bytes, which is greater than max_ packet_ The data of size (2 ^ 24-1 bytes) will be unpacked and sent

Receiver

my_net_read()

For large package processing, net will be called circularly in the end_ read_ Packet() until the exit condition is met

ulong my_net_read(NET *net) {

size_t len;

/* turn off non blocking operations */

if (!vio_is_blocking(net->vio)) vio_set_blocking_flag(net->vio, true);

// Enable compression

if (net->compress)

net_read_compressed_packet(net, len);

else

net_read_uncompressed_packet(net, len);

return static_cast<ulong>(len);

}

Similar to the two, let's look at the process of reading uncompressed packet s

net_read_uncompressed_packet()

Read a packet into net - > buff + Net - > where_ b. If it is the first message of multi packet message (represented by [16M] by the length of data packet = 0xffffff), read and splice all sub packets.

static void net_read_uncompressed_packet(NET *net, size_t &len) {

size_t complen;

assert(!net->compress);

// Read the packet for the first time and return the packet length

len = net_read_packet(net, &complen);

// When the packet length is 0xffff, the subsequent packets are read circularly

// Until the subsequent packet length is not 0xffff

if (len == MAX_PACKET_LENGTH) {

/* First packet of a multi-packet. Concatenate the packets */

ulong save_pos = net->where_b;

size_t total_length = 0;

do {

net->where_b += len;

total_length += len;

len = net_read_packet(net, &complen);

} while (len == MAX_PACKET_LENGTH);

if (len != packet_error) len += total_length;

net->where_b = save_pos;

}

net->read_pos = net->buff + net->where_b;

if (len != packet_error)

net->read_pos[len] = 0; /* Safeguard for mysql_use_result */

}

net_read_packet()

Read the packet data into the buffer and return the length of the currently received packet

/*

@return The length of the packet, or @c packet_error on error.

*/

static size_t net_read_packet(NET *net, size_t *complen) {

size_t pkt_len, pkt_data_len;

...

/* Retrieve packet length and number. */

if (net_read_packet_header(net)) goto error;

...

/* Read the first three bytes of the packet and get the payload of the currently received packet_ length */

pkt_len = uint3korr(net->buff + net->where_b);

...

/* The calculation includes the total packet data length obtained before */

pkt_data_len = max(pkt_len, *complen) + net->where_b;

...

/*

Through net_realloc() capacity expansion net_buffer

If the total packet length exceeds max_packet(16M), but not more than

max_allowed_packet The capacity expansion is performed normally_ Buffer and read in payload data

*/

if ((pkt_data_len >= net->max_packet) && net_realloc(net, pkt_data_len))

goto error;

/* Read the packet data (payload). */

if (net_read_raw_loop(net, pkt_len)) goto error;

...

}

#define uint3korr(A) (uint32_t) (((uint32_t) ((uint8_t) (A)[0])) +\

(((uint32_t) ((uint8_t) (A)[1])) << 8) +\

(((uint32_t) ((uint8_t) (A)[2])) << 16))

net_realloc()

net_ The buffer is dynamically expanded. The total buffer length cannot exceed max_allowed_packet size

bool net_realloc(NET *net, size_t length) {

uchar *buff;

size_t pkt_length;

DBUG_TRACE;

DBUG_PRINT("enter", ("length: %lu", (ulong)length));

// When the total packet length has exceeded max_allowed_packet size

// Record error, return true

// You can see ER in the mysql server log_ NET_ PACKET_ TOO_ Large error

if (length >= net->max_packet_size) {

DBUG_PRINT("error",

("Packet too large. Max size: %lu", net->max_packet_size));

/* Error, but no need to stop using the socket. */

net->error = NET_ERROR_SOCKET_RECOVERABLE;

net->last_errno = ER_NET_PACKET_TOO_LARGE;

#ifdef MYSQL_SERVER

my_error(ER_NET_PACKET_TOO_LARGE, MYF(0));

#endif

return true;

}

pkt_length = (length + IO_SIZE - 1) & ~(IO_SIZE - 1);

...

#ifdef MYSQL_SERVER

net->buff = net->write_pos = buff;

#else

size_t cur_pos_offset = NET_ASYNC_DATA(net)->cur_pos - net->buff;

net->buff = net->write_pos = buff;

NET_ASYNC_DATA(net)->cur_pos = net->buff + cur_pos_offset;

#endif

net->buff_end = buff + (net->max_packet = (ulong)pkt_length);

return false;

}

Sender

The sending process is easy to understand. Take the go MySQL driver package often used in go as an example

// Write packet buffer 'data'

func (mc *mysqlConn) writePacket(data []byte) error {

pktLen := len(data) - 4

// The maximum value of the package is exceeded (can be modified), and the default values are different for different versions

if pktLen > mc.maxAllowedPacket {

return ErrPktTooLarge

}

// Perform a stale connection check. We only perform this check for

// the first query on a connection that has been checked out of the

// connection pool: a fresh connection from the pool is more likely

// to be stale, and it has not performed any previous writes that

// could cause data corruption, so it's safe to return ErrBadConn

// if the check fails.

if mc.reset {

mc.reset = false

conn := mc.netConn

if mc.rawConn != nil {

conn = mc.rawConn

}

var err error

// If this connection has a ReadTimeout which we've been setting on

// reads, reset it to its default value before we attempt a non-blocking

// read, otherwise the scheduler will just time us out before we can read

if mc.cfg.ReadTimeout != 0 {

err = conn.SetReadDeadline(time.Time{}) // Set timeout

}

if err == nil {

err = connCheck(conn) // Check connection

}

if err != nil {

errLog.Print("closing bad idle connection: ", err)

mc.Close()

return driver.ErrBadConn

}

}

for {

var size int

// If it is greater than 1 < < 24 - 1 bytes (16M, hard coded, and cannot be modified, as is the case with mysql server), unpack and send it

if pktLen >= maxPacketSize {

// When the receiver reads this header, it will know that there are 'follow-up packages'

data[0] = 0xff

data[1] = 0xff

data[2] = 0xff

size = maxPacketSize

} else {

data[0] = byte(pktLen)

data[1] = byte(pktLen >> 8)

data[2] = byte(pktLen >> 16)

size = pktLen

}

data[3] = mc.sequence // Package number

// Write packet

if mc.writeTimeout > 0 { // Write timeout

if err := mc.netConn.SetWriteDeadline(time.Now().Add(mc.writeTimeout)); err != nil {

return err

}

}

n, err := mc.netConn.Write(data[:4+size]) // send data

if err == nil && n == 4+size {

mc.sequence++

if size != maxPacketSize {

return nil

}

pktLen -= size

data = data[size:]

continue // Continue sending 'data of remaining packets'

}

// Handle error

if err == nil { // n != len(data)

mc.cleanup()

errLog.Print(ErrMalformPkt)

} else {

if cerr := mc.canceled.Value(); cerr != nil {

return cerr

}

if n == 0 && pktLen == len(data)-4 {

// only for the first loop iteration when nothing was written yet

return errBadConnNoWrite

}

mc.cleanup()

errLog.Print(err)

}

return ErrInvalidConn

}

}

matters needing attention

- Statement merging of mysqldump

It should be noted that when exporting mysqldump, if – net buffer length is not specified, but – opt or – extended insert, - E (create multi line insert statement), the default size of a single insert statement is 1MB

Assuming that the single insert statement here does not reach 1MB,

insert into t1 values(1,2);

Then mysqldump will help you splice multiple insert s until the size reaches 1MB, as shown below

insert into t1 values(1,2),(3,4),(5,6),(7,8).....(n,n);

If a single insert exceeds 1MB, mysqldump will not be spliced

- max_allowed_packet variable setting problem

- This system variable needs to be in a new MySQL connection to take effect

- This parameter needs to be adjusted at both ends of the transmission, otherwise overflow at either end will cause data transmission failure

- Consider max_ allowed_ The packet setting is too large and the instance memory is insufficient

summary

The above notes explain why the total data size is only more than 300 M when the business party migrates, but the mysql server prompts that the packet exceeds Max during migration_ allowed_ Packet, but during the test migration, 10G pressure test data did not have any problems: