catalogue

1, MySQL master-slave replication

1.1 master slave replication architecture and principle

1.1.1 service performance expansion mode

1.2.1 what is read-write separation?

1.2.2 why is read-write separation necessary

1.2.3 when should I separate reading from writing

1.2.4 master slave copy and read / write separation

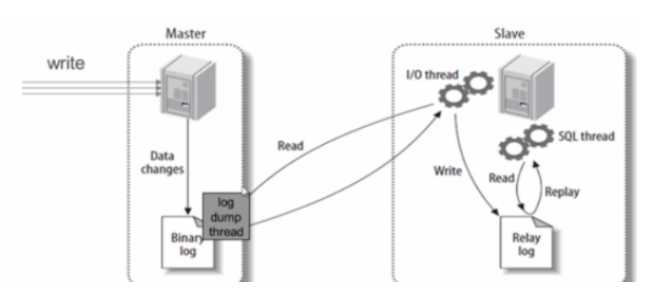

1.5 master slave replication principle

1.5.1 master slave replication related threads

1.5.2 documents related to copy function

1.5.3 MySQL master-slave replication delay

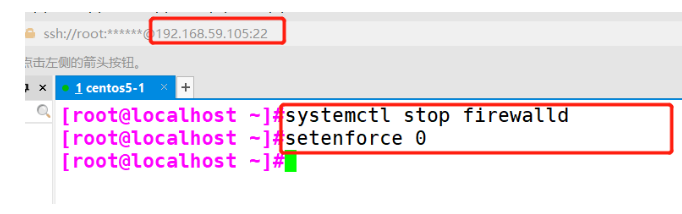

2.2 initial environmental preparation

2.3 build mysql master-slave replication

2.3.1 setup time synchronization (main server: 192.168.59.118)

2.3.2 setup time synchronization (slave servers: 192.168.59.111, 192.168.59.112)

2.3.3 configure the master server (192.168.59.118)

2.3.4 configuring slave servers (192.168.59.111, 192.168.59.112)

2.3.5 verifying master-slave synchronization

2.4 build Amoeba to realize read-write separation (192.168.59.113)

2.4.1 installing the Java environment

2.4.3 testing at the client (192.168.59.105)

1, MySQL master-slave replication

1.1 master slave replication architecture and principle

1.1.1 service performance expansion mode

-

Vertical expansion

-

Scale out

1.2 MySQL extension

-

Read write separation

-

Replication: each node has the same data set, which expands outward and is one-way replication based on binary logs

1.2.1 what is read-write separation?

1. Basic principle of read-write separation

The basic principle is to let the primary database handle transactional add, change and DELETE operations (INSERT, UPDATE and DELETE), while the secondary database handles SELECT query operations. Database replication is used to synchronize changes caused by transactional operations to slave databases in the cluster.

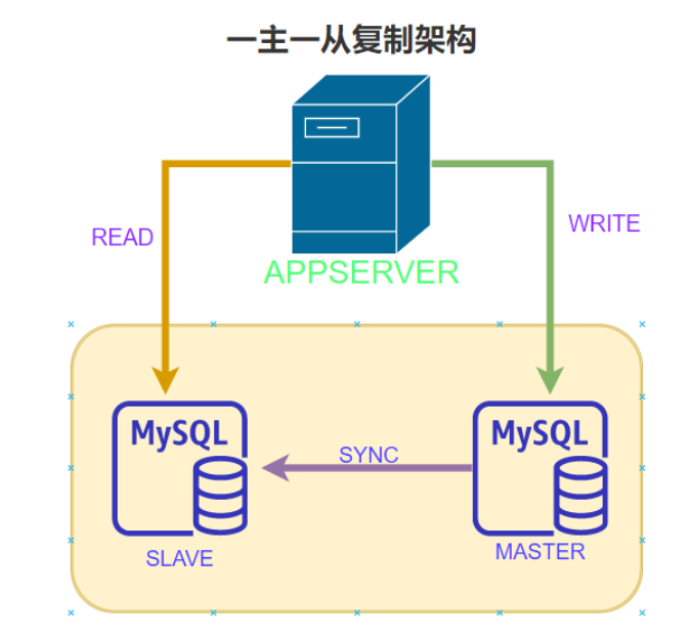

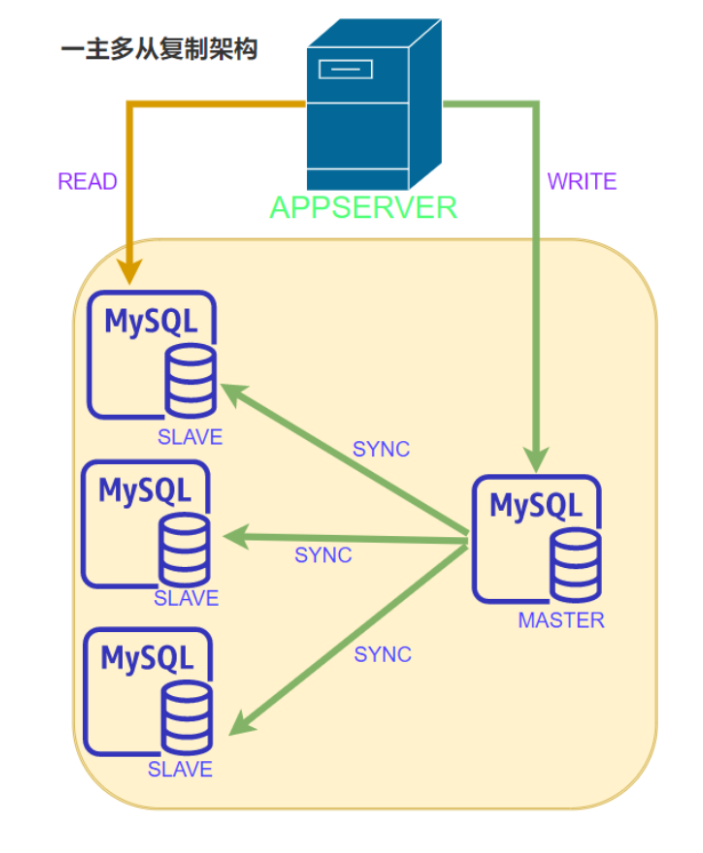

2.MySQL read-write separation principle

Read write separation is to write only on the master server and read only on the slave server. The basic principle is to let the master database handle transactional operations and the select query from the database. Database replication is used to synchronize changes caused by transactional operations on the master database to the slave database in the cluster.

-

At present, the common MySQL read-write separation is divided into the following two types: 1) based on the internal implementation of program code

In the code, routing is classified according to select and insert. This kind of method is also the most widely used in production environment. The advantage is better performance, because it is implemented in program code, and there is no need to add additional equipment for hardware expenditure; The disadvantage is that it needs developers to implement it, and the operation and maintenance personnel have no way to start. However, not all applications are suitable for realizing read-write separation in program code. For example, some large and complex Java applications, if reading-write separation is realized in program code, the code will be greatly changed.

2) Implementation based on intermediate agent layer

The proxy is generally located between the client and the server. After receiving the client request, the proxy server forwards it to the back-end database through judgment. There are the following representative programs.

(1)MySQL-Proxy. MySQL proxy is an open source MySQL project, and SQL judgment is performed through its own lua script. (2)Atlas. It is a data middle tier project based on MySQL protocol developed and maintained by the infrastructure team of Qihoo 360's Web Platform Department. Based on MySQL proxy version 0.8.2, it optimizes it and adds some new features. The MySQL service run by atlas in 360 carries billions of read and write requests every day. Support things and stored procedures. (3)Amoeba. Developed by Chen Siru, the author once worked for Alibaba. The program is developed by the Java language and Alibaba uses it in the production environment. However, it does not support transactions and stored procedures.

-

Because a large number of Lua scripts need to be written to use MySQL Proxy, these Luas are not ready-made, but need to be written by themselves. This is very difficult for people who are not familiar with MySQL Proxy built-in variables and MySQL Protocol.

-

Amoeba is a very easy to use and portable software. Therefore, it is widely used in the agent layer of database in production environment.

1.2.2 why is read-write separation necessary

-

Because the "write" (writing 10000 pieces of data may take 3 minutes) operation of the database is time-consuming.

-

However, the "read" of the database (it may take only 5 seconds to read 10000 pieces of data).

-

Therefore, the separation of reading and writing solves the problem that the writing of the database affects the efficiency of query.

1.2.3 when should I separate reading from writing

-

The database does not have to be read-write separated. If the program uses more databases, less updates and more queries, it will be considered.

-

The use of database master-slave synchronization and read-write separation can share the pressure of the database and improve the performance.

1.2.4 master slave copy and read / write separation

-

In the actual production environment, the reading and writing of the database are in the same database server, which can not meet the actual needs. Whether in security, high availability or high concurrency, it can not meet the actual needs.

-

Therefore, master-slave replication is used to synchronize data, and then read-write separation is used to improve the concurrent load capacity of the database. It is somewhat similar to rsync, but the difference is that rsync backs up disk files, while mysql master-slave replication backs up data and statements in the database.

1.3 functions of replication

-

data distribution

-

Load balanced read operation

-

backups

-

High availability and failover

-

MySQL upgrade test

1.4 replication architecture

1.5 master slave replication principle

-

Generate two threads from the library, one I/O thread and one SQL thread;

-

The i/o thread requests the binlog of the main database and writes the binlog log to the relay log file;

-

The master library will generate a log dump thread to transfer binlog to the slave library i/o thread;

-

The SQL thread will read the logs in the relay log file and parse them into specific operations to achieve consistent master-slave operations and consistent final data;

Note:

● the relay log is usually located in the OS cache, so the overhead of the relay log is very small.

● there is a very important limitation in the replication process, that is, the replication is serialized on the Slave, that is, the parallel update operation on the Master cannot be operated in parallel on the Slave

1.5.1 master slave replication related threads

Master node:

-

dump Thread: start a dump Thread for each Slave I/O Thread to send binary log events

Slave node:

-

I/O Thread: request binary log events from the Master and save them in the relay log

-

SQL Thread: read the log events from the relay log and complete the replay locally

1.5.2 documents related to copy function

-

master.info: used to save relevant information when slave connects to master, such as account, password, server address, etc

-

relay-log.info: saves the correspondence between the copied current binary log and the local relay log on the current slave node

-

mariadb-relay-bin.00000#: a relay log, which stores binary logs copied from the primary node. It is essentially a binary log

1.5.3 MySQL master-slave replication delay

-

The master server is highly concurrent, forming a large number of transactions

-

Network delay

-

The master-slave hardware device causes cpu master frequency, memory io and hard disk io

-

It is not synchronous replication, but asynchronous replication. Optimize Mysql parameters from the library. For example, increase innodb_buffer_pool_size to allow more operations to be completed in Mysql memory and reduce disk operations. Use high-performance hosts from the library. Including strong cpu and increased memory. Avoid using virtual virtual hosts and use physical hosts, which improves the i/o complexity. The slave library uses SSD disk network optimization to avoid synchronization across machine rooms

2, Actual operation

2.1 environment configuration

master Server: 192.168.59.118 mysql5.7 slave1 Server: 192.168.59.111 mysql5.7 slave1 Server: 192.168.59.112 Amoeba Server: 192.168.59.113 jdk1.6,Amoeba Client server: 192.168.59.105 mysql

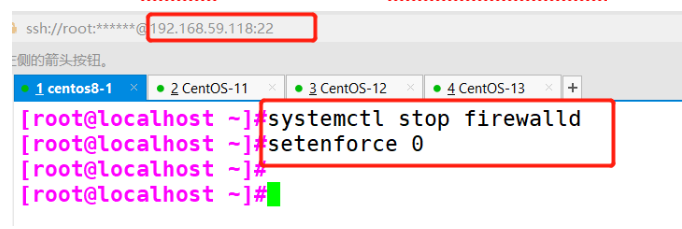

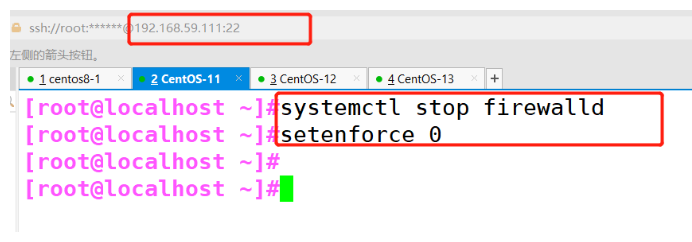

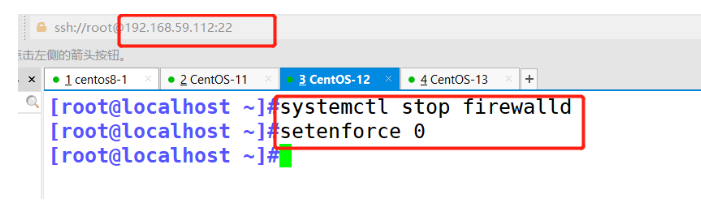

2.2 initial environmental preparation

#Initialized on all five servers systemctl stop firewalld setenforce 0

2.3 build mysql master-slave replication

2.3.1 setup time synchronization (main server: 192.168.59.118)

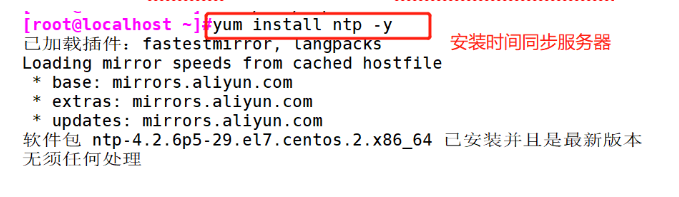

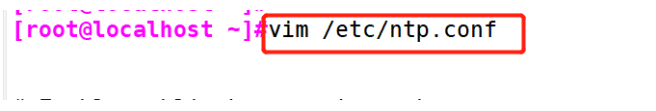

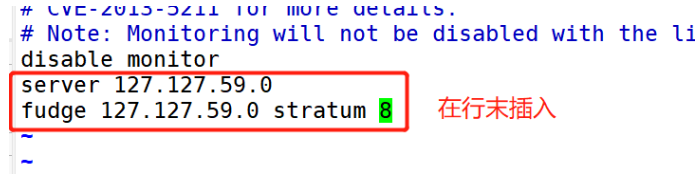

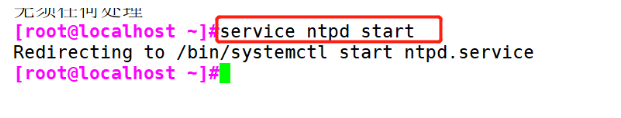

1.#Install time synchronization server yum install ntp -y 2.#Modify profile vim /etc/ntp.conf server 127.127.59.0 #Set local clock source fudge 127.127.59.0 stratum 8 #Set the time level to 8 and limit it to 15 3.#Open service service ntpd start

1. # install time synchronization server

2. Modify the configuration file

3. # turn on the service

2.3.2 setup time synchronization (slave servers: 192.168.59.111, 192.168.59.112)

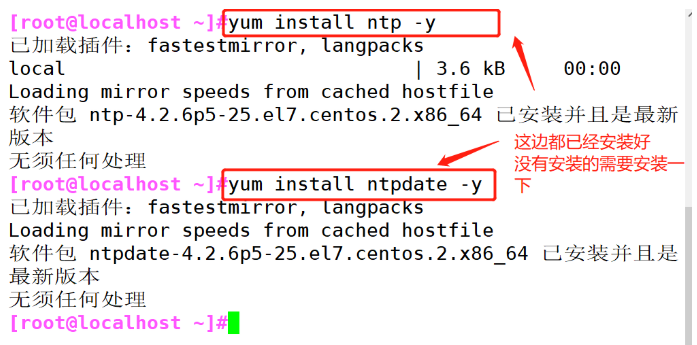

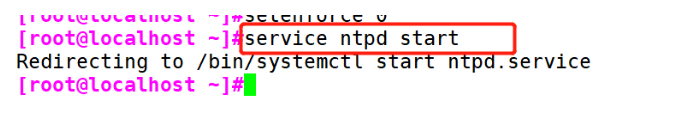

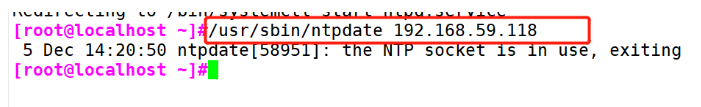

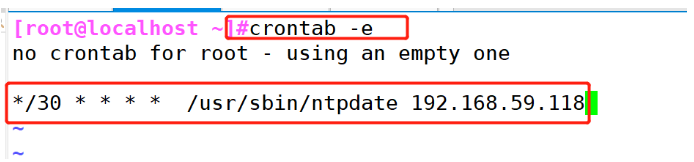

1.#Install time synchronization server and synchronization service yum install ntp -y yum install ntpdate -y 2. #Open service service ntpd start 3. #Perform synchronization /usr/sbin/ntpdate 192.168.59.118 4.#Scheduled tasks crontab -e */30 * * * * /usr/sbin/ntpdate 192.168.59.118 ###########slave2:192.168.59.112 Same as above######

1. # install time synchronization server and synchronization service

2. Start service

3. # perform synchronization

4. # scheduled tasks

2.3.3 configure the master server (192.168.59.118)

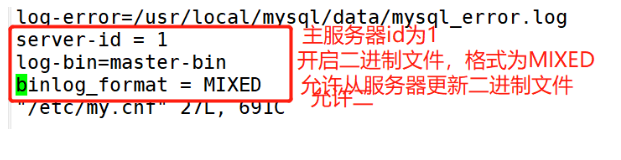

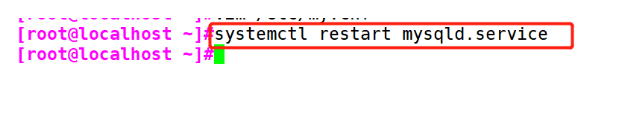

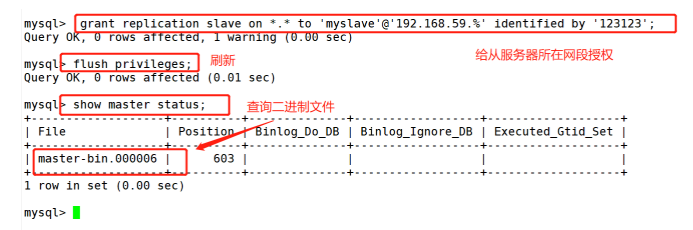

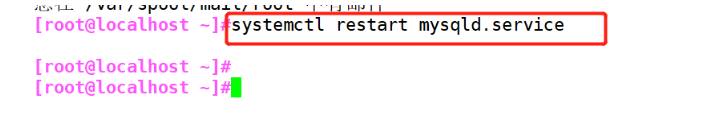

1. #Open binary log vim /etc/my.cnf log-bin=master-bin #Open binary log binlog_format=MIXED #Binary log format log-slave-updates=true #Turn on synchronization from server 2. #Restart service systemctl restart mysqld.service 3. #Log in to mysql and authorize the slave server in the network segment mysql -uroot -p123123 grant replication slave on *.* to 'myslave'@'192.168.59.%' identified by '123123'; #Refresh database flush privileges; #View master server binaries show master status; Open binary log

1. # enable binary log

2. # restart the service

3. # log in to mysql and authorize the slave server in the network segment

2.3.4 configuring slave servers (192.168.59.111, 192.168.59.112)

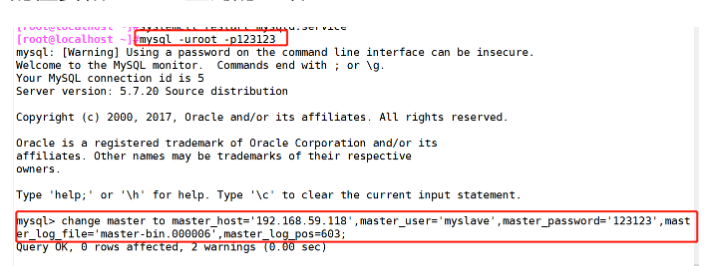

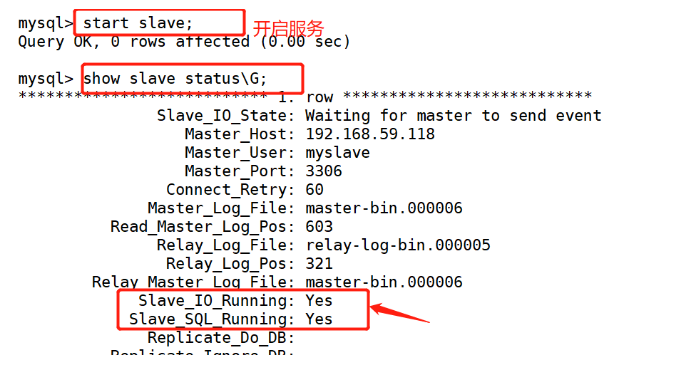

1.#Open binary log vim /etc/my.cnf server-id = 11 #The IDs of slave1 and slave2 cannot be the same. I set slave2 to 22 relay-log=relay-log-bin relay-log-index=slave-relay-bin.index 2.#Restart service systemctl restart mysqld.service 3. #Log in to mysql and configure the synchronization master_log_file and master_ log_ The value of POS should be consistent with that of master query mysql -uroot -p123123 change master to master_host='192.168.59.118',master_user='myslave',master_password='123123',master_log_file='master-bin.000004',master_log_pos=603; 4.#Start synchronization. If an error is reported, execute restart slave start slave; show slave status\G; ##The following two must be YES #Slave_IO_Running: Yes #Slave_SQL_Running: Yes #########slave2: 192.168.59.112 Same as above######

1. # enable binary log

2. # restart the service

3. # log in to mysql and configure the synchronization master_log_file and master_ log_ The value of POS should be consistent with that of master query

3. # log in to mysql and configure the synchronization master_log_file and master_ log_ The value of POS should be consistent with that of master query

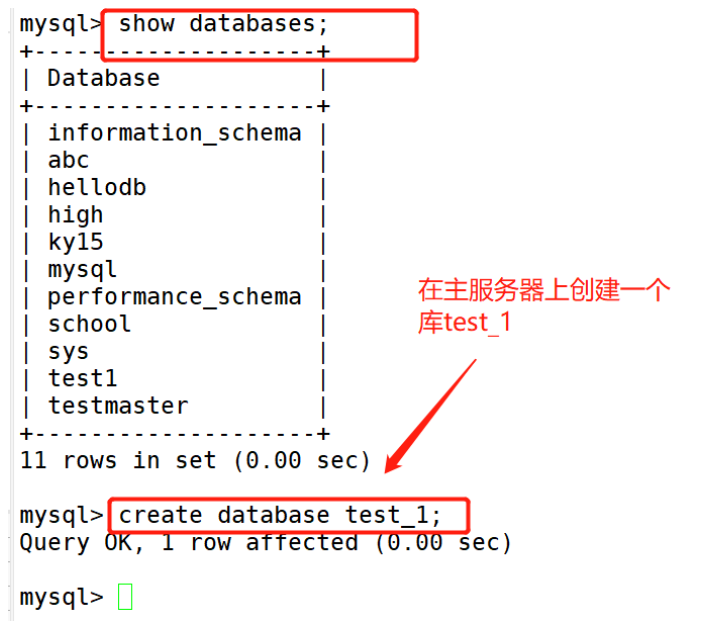

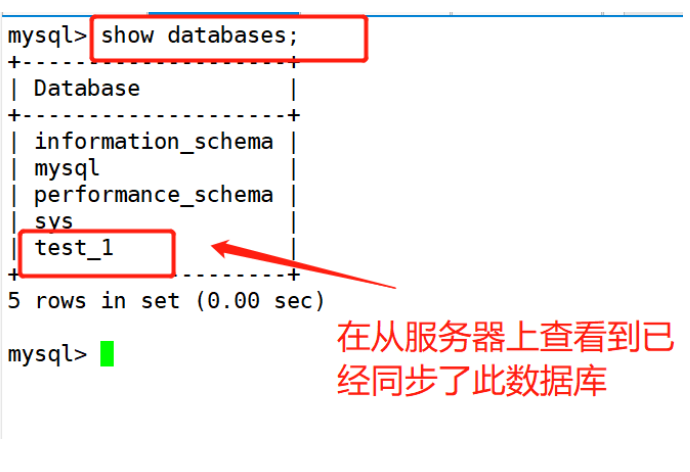

2.3.5 verifying master-slave synchronization

#Create a library on the primary server create database test_1; #View from server show databases;

2.4 build Amoeba to realize read-write separation (192.168.59.113)

2.4.1 installing the Java environment

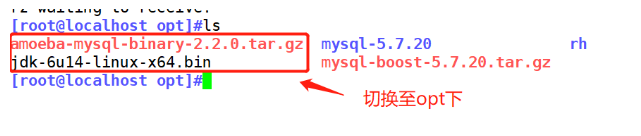

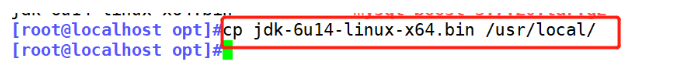

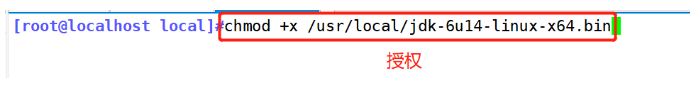

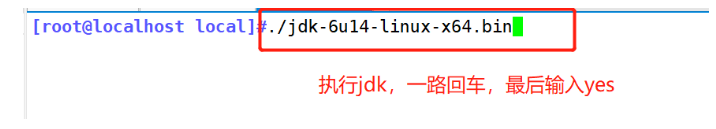

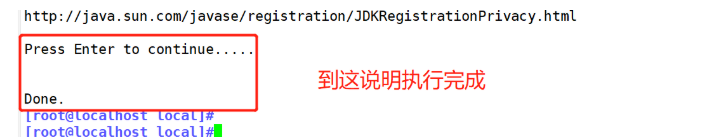

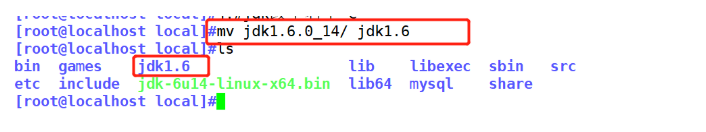

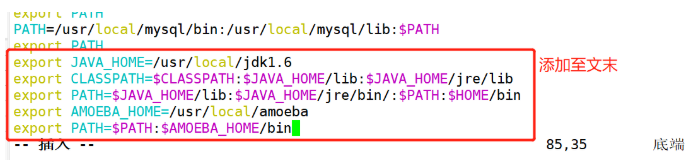

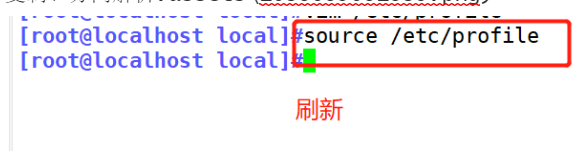

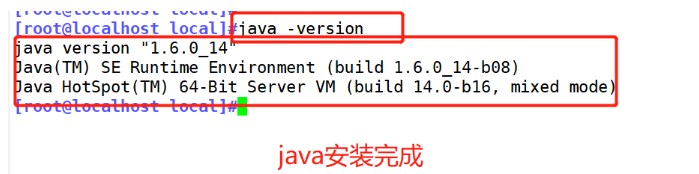

################install Java environment############### 1.#Download the installation package: jdk-6u14-linux-x64.bin, amoeba-mysql-binary-2.2.0.tar.gz cd /opt 2.#Copy the jdk to / usr/local cp jdk-6u14-linux-x64.bin /usr/local/ 3.#Grant jdk permission and execute chmod +x /usr/local/jdk-6u14-linux-x64.bin cd /usr/local/ ./jdk-6u14-linux-x64.bin #Press enter all the way to the end, and finally enter yes for automatic installation 4.#Change the name of jdk mv jdk1.6.0_14/ jdk1.6 5.#Configure environment and refresh vim /etc/profile export JAVA_HOME=/usr/local/jdk1.6 export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib export PATH=$JAVA_HOME/lib:$JAVA_HOME/jre/bin/:$PATH:$HOME/bin export AMOEBA_HOME=/usr/local/amoeba export PATH=$PATH:$AMOEBA_HOME/bin source /etc/profile #Refresh profile

1. # download the installation package

2. # copy the jdk to / usr/local

3. # grant jdk permission and execute

4.#jdk change your name

5. # configuration environment

2.4.2 configuring amoeba

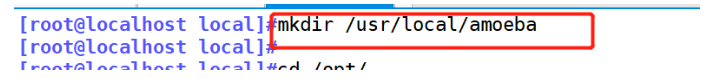

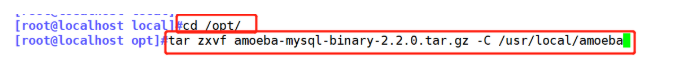

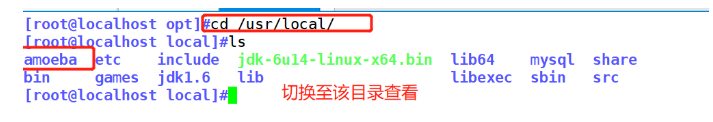

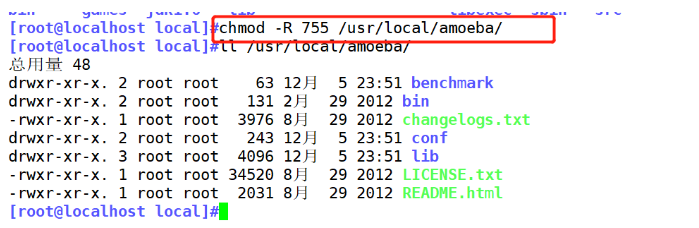

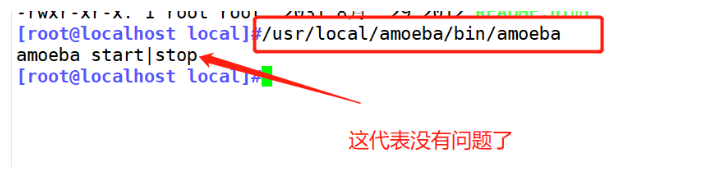

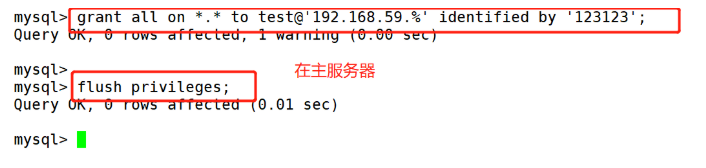

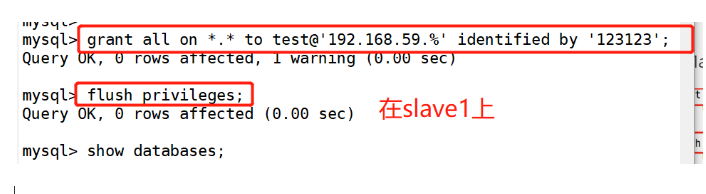

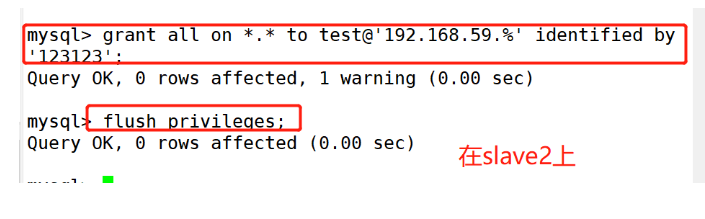

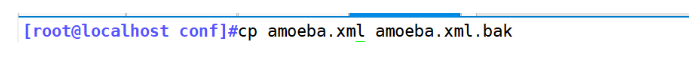

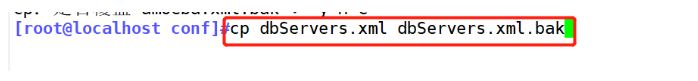

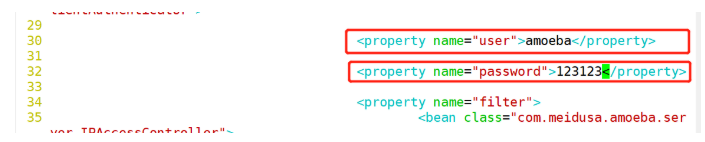

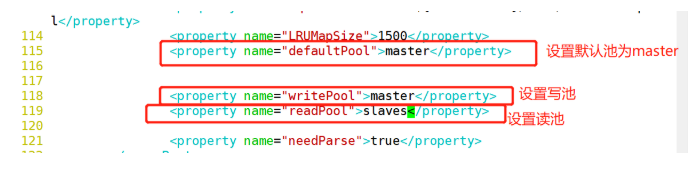

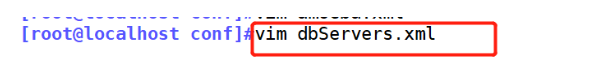

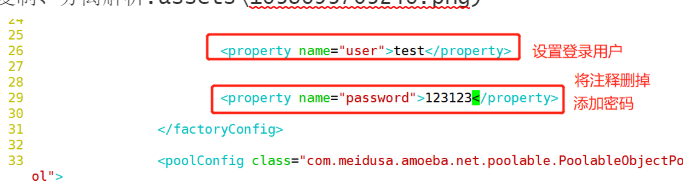

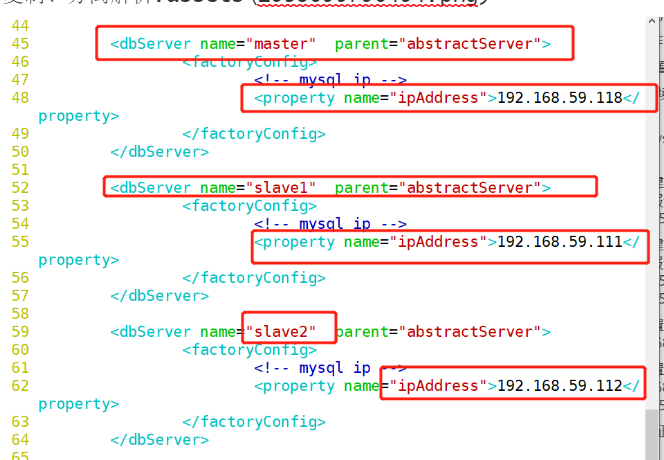

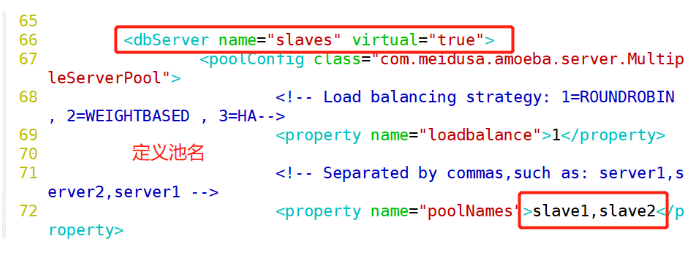

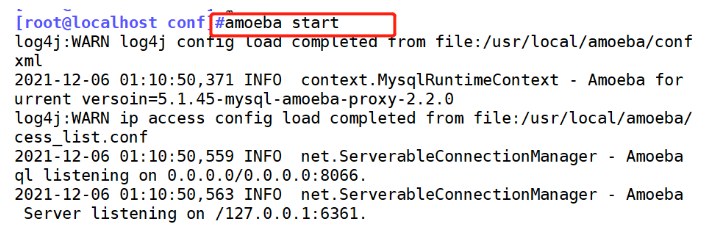

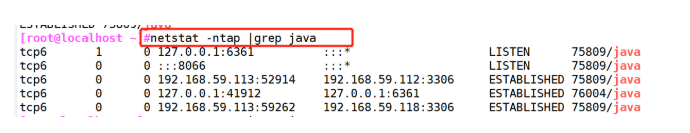

############## install amoeba ########### 1.#Create the amoeba directory in the / usr/local directory mkdir /usr/local/amoeba 2.#Switch to opt to decompress amoeba cd /opt/ tar zxvf amoeba-mysql-binary-2.2.0.tar.gz -C /usr/local/amoeba cd /usr/local/ Switch to directory view 3.#Give the directory / usr/local/amoeba execution permission chmod -R 755 /usr/local/amoeba/ 4.#Run amoeba /usr/local/amoeba/bin/amoeba ###########to configure Amoeba Read write separation #### 5.#First, open permissions on the Master and Slave1mysql to Amoeba grant all on *.* to test@'192.168.59.%' identified by '123123'; flush privileges; 6.#Backup amoeba configuration cd /usr/local/amoeba/conf/ cp amoeba.xml amoeba.xml.bak cp dbserver.dtd dbserver.dtd.bak 7.#Modify amoeba configuration vim amoeba.xml 30 <property name="user">amoeba</property> #Set login user name 32<property name="password">123123</property> #Set password 115<property name="defaultPool">master</property> #Set the default pool to master 118<property name="writePool">master</property> #Set write pool 119<property name="readPool">slaves</property> #Set read pool vim dbServers.xml 23 <!-- <property name="schema">test</property> --> #Line 23 comments 26<property name="user">test</property> #Set login user 28 <!-- mysql password --> #delete 29<property name="password">123123</property> #Solve 28 comments and add password 45<dbServer name="master" parent="abstractServer"> #Service pool name 48<property name="ipAddress">192.168.59.118</property> #Add address 52<dbServer name="slave1" parent="abstractServer"> 55<property name="ipAddress">192.168.59.111</property> Copy 6 rows and add another slave node 59<dbServer name="slave2" parent="abstractServer"> 62<property name="ipAddress">192.168.59.112</property> 66<dbServer name="slaves" virtual="true"> #Define pool name 72<property name="poolNames">slave1,slave2</property> #Write the slave node name 8.#Start amoeba and test amoeba start netstat -ntap |grep java

1. # create amoeba directory under / usr/local directory

2. # switch to opt to decompress amoeba

3. # give execution permission to the directory / usr/local/amoeba

4. # run amoeba

5. # first open the permissions on the Master and Slave1mysql to Amoeba

6. # backup amoeba configuration

7. # modify configuration file

8. # start amoeba

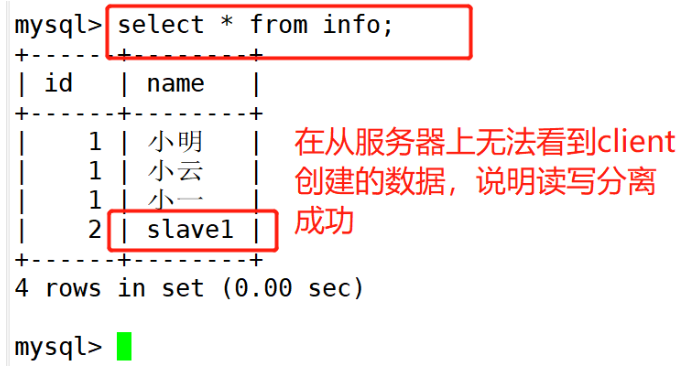

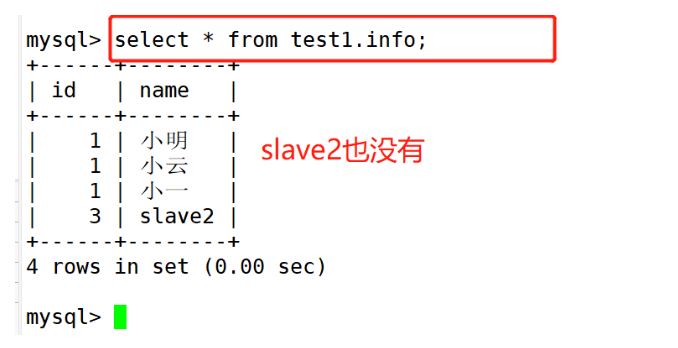

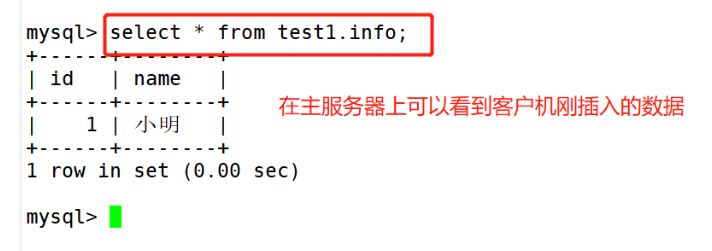

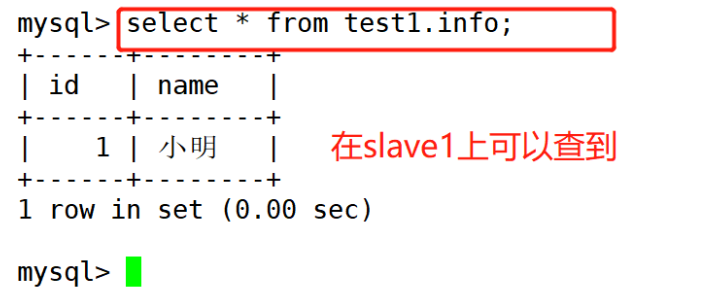

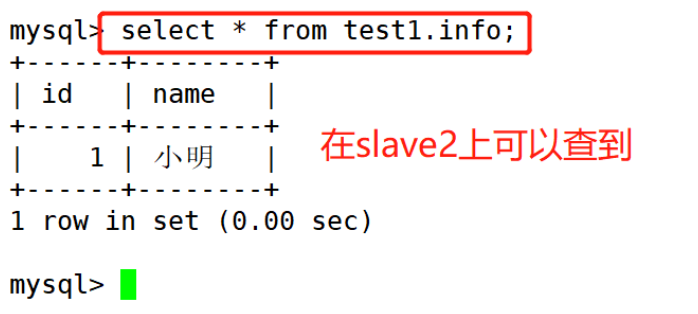

2.4.3 testing at the client (192.168.59.105)

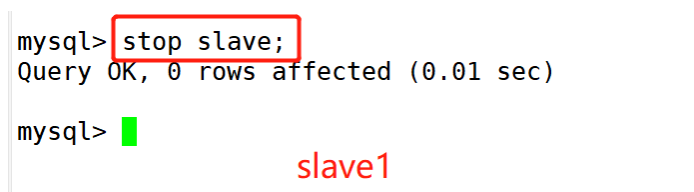

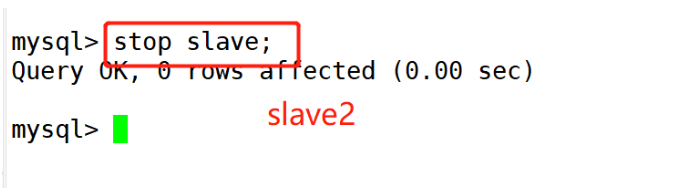

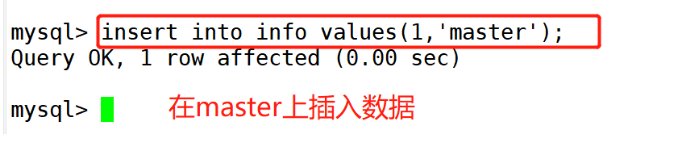

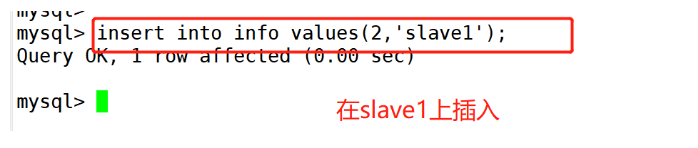

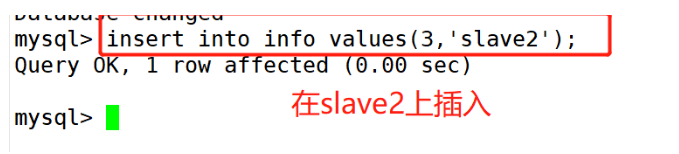

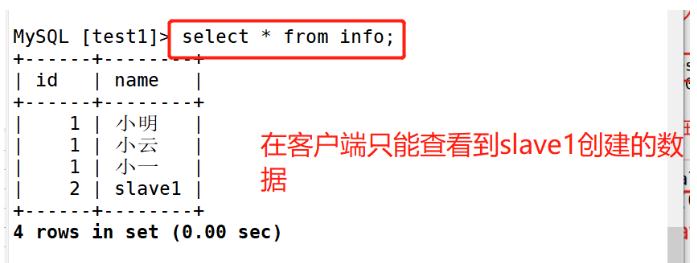

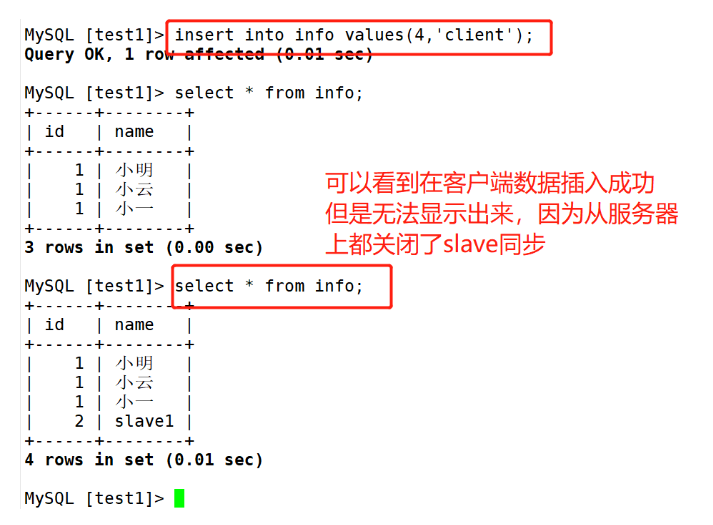

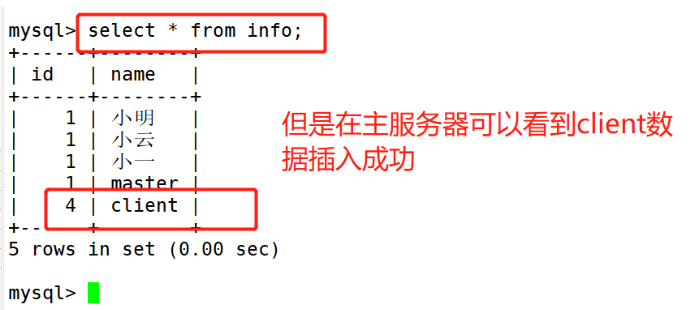

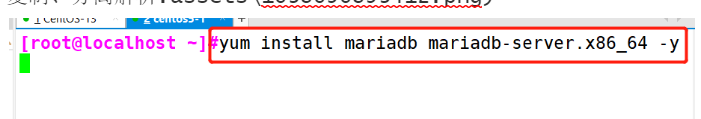

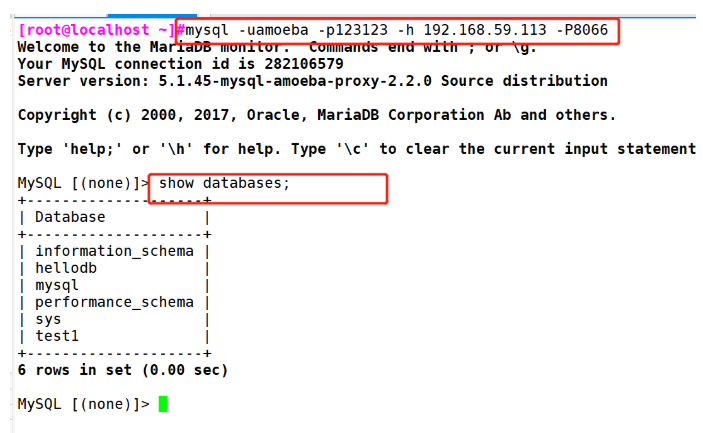

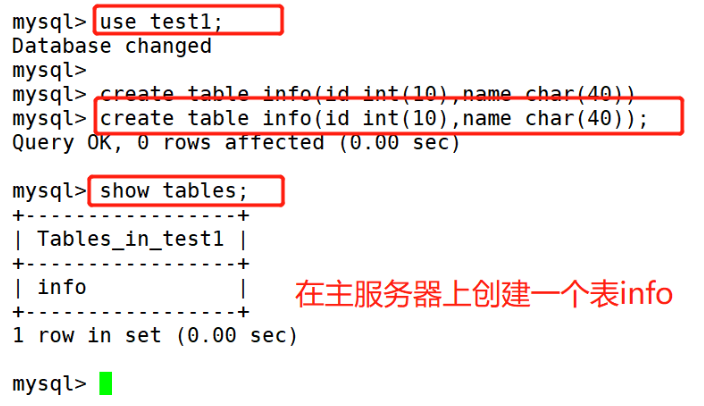

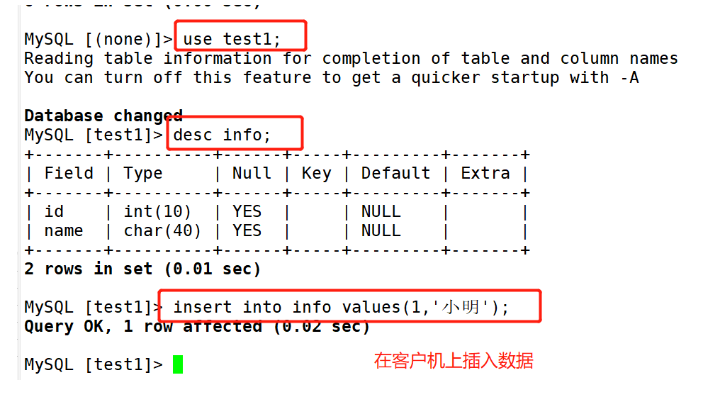

1.#Installing mariadb yum install mariadb mariadb-server.x86_64 -y 2.#Log in and view the database mysql -uamoeba -p123123 -h 192.168.59.113 -P8066 3.#Test synchronization ##Create a new table on the primary server use test1; create table info(id int(10),name char(40)); show tables; ##On the client, the inserted data is synchronized to all databases use test1; insert into info values(1,'Xiao Ming'); ##View on master-slave server 4.#Test read write separation #Stop the slave synchronization function of slave1 and slave2 stop slave; #Insert data on master, slave1 and slave2

1. # install mariadb

2. # log in and view the database

3. # test

#Create a new table on the primary server

#On the client, the inserted data is synchronized to all databases

##View on master-slave server

4. # test read-write separation