genetic algorithm

Intelligent optimization algorithm

Several concepts:

Population: a group of many individuals

Individual (chromosome): equivalent to a solution of the optimization problem to be solved

Genes: components of each solution

Several operators:

Selection: select better individuals from the current population to give them the opportunity to inherit their genes to the next generation.

Crossover: pairing from selected individuals and calling genes in some way to produce the next generation.

Variation: variation with a certain probability for some individuals in the population

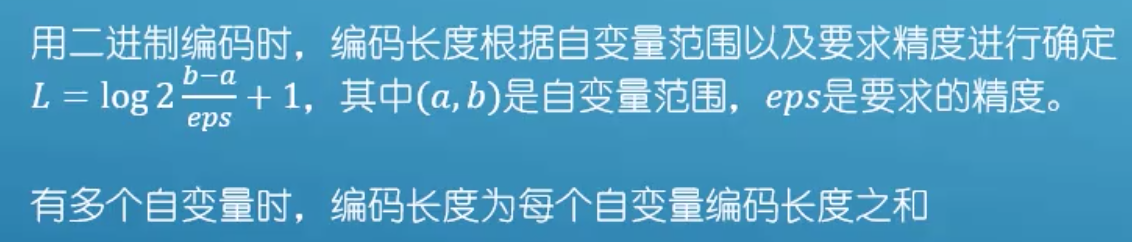

code:

Binary coding

Real value coding

Symbol coding

decode:

Convert binary data to decimal data

x

=

s

+

(

b

−

a

)

∗

X

/

(

2

L

−

1

)

x=s+(b-a)*X/(2^L - 1)

x=s+(b−a)∗X/(2L−1)

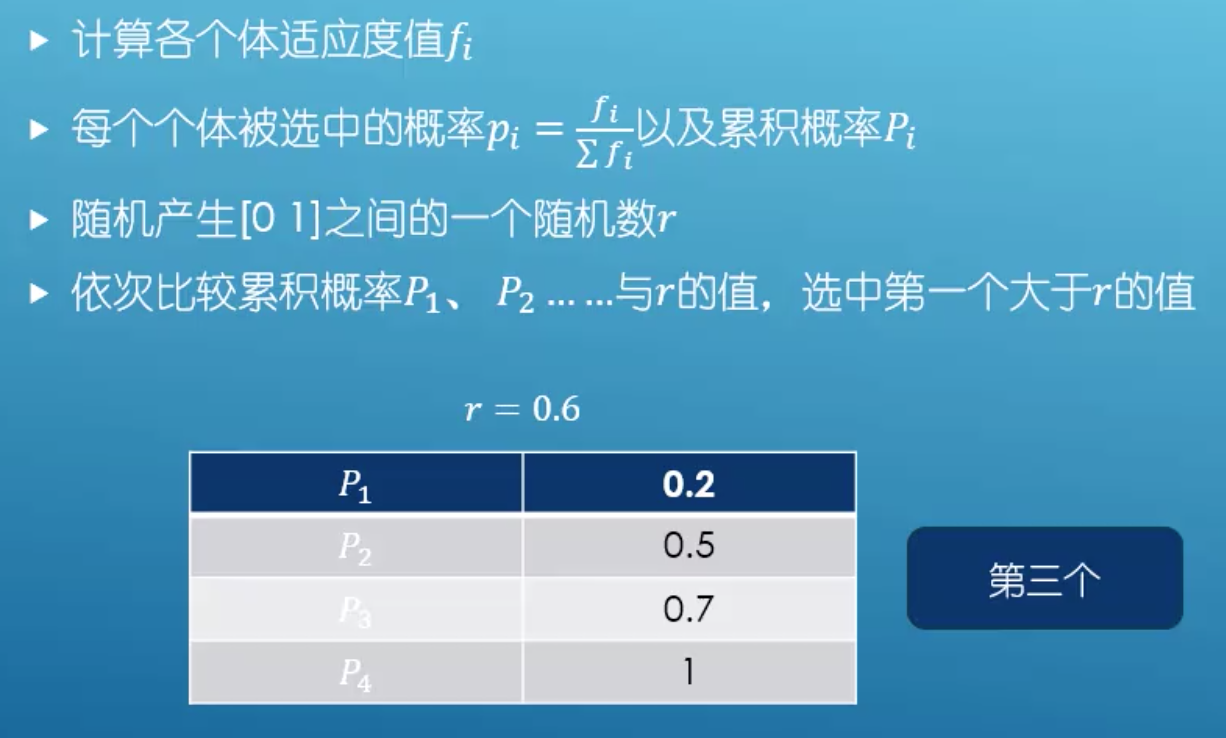

Choice: roulette selection method

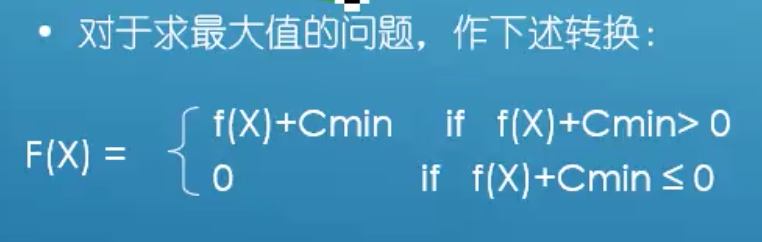

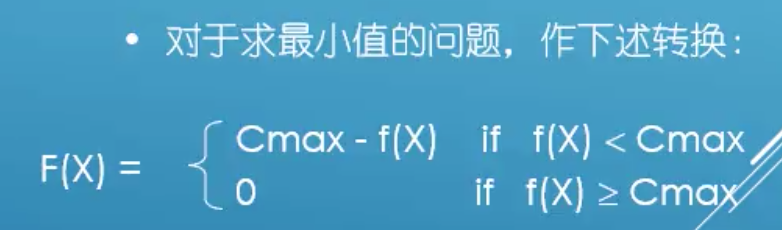

Fitness function scale transformation:

The selection of fitness function directly affects the convergence speed of genetic algorithm and whether it can find the optimal solution, because genetic algorithm basically does not use external information in evolutionary search, only uses the fitness of each individual in the population based on the fitness function

Optimization problems can be divided into two categories: one is to find the global maximum of the objective function, and the other is to find the global minimum of the objective function. For these two types of optimization problems, the conversion method from the objective function value f (x) to the fitness function value f (x) of the corresponding individual in the search space

Crossover:

Binary encoding,

Single point

Two o'clock

Variation:

Specified variation probability Pm

If the length is 10, it is a random integer of [1, 10]. If R < PM, then 0 becomes 1 and 1 becomes 0

Coding - > initialize population - > calculate fitness - > selection - > crossover - > mutation - > new species population - > whether conditions are met - > decoding

#Matlab

Example

The objective function becomes a fitness function

objfun.m file (objective function)

function fvl = objfun(x) fval = 11*sin(6*x) + 7*cos(5*x); //Find the maximum value

fitnessfun.m file (fitness function)

function fitvalue = fitnessfun(x) Cmin = 0.01; [row, ~] = size(x); for i = 1:row fval = objfun(x(i,:)); if fval + Cmin > 0 fitvalue(i) = fval + Cmin; else fitvalue(i) = 0; end fitvalue(i) = 0; end end

decode.m function file (binary to decimal)

function real = decode(pop,lb,ub) //pop population //Number of varnum variables [~,col] = size(pop); for j = col:-1:1 temp(j) = 2^(j-1)*pop(j); end temp = sum(temp); real = lb + temp * (ub-lb)/(2^col-1); end

selection.m selection function

function [dad,mom] = selection(pop,fitvalue) //Calculate cumulative probability PP = cumsum(fitvalue ./ sum(fitvalue)); [row,~] = size(pop); //Select row individuals to play roulette for i = 1:row for j = 1:row r = rand; if r <= PP(j) dad(i,:) = pop(j,:); break; end end mom(i,:) = pop(randi([1,row]),:); end

crossover.m cross function

function newpop = crossover(dad,mom,pc) [row,col] = size(dad); for i = 1:row if rand < pc cpoint = randi([1,col-1]); newpop(i,:) = [dad(i,1:cpoint) mom(i,cpoint+1:end)]; else newpop(i,:) = dad(i,:); end end

mutation.m Variogram

function newpop = mutation(pop,pm) [row,col] = size(pop); newpop = zeros(row,col); for i = 1:row mpoint = randi([1,col]); if rand < pm newpop(i,:) = ~pop(i,mpoint); else newpop(i,:) = pop(i,:); end end

GeneticAlgorithm.m file

Number of varnum variables

eps accuracy

lb ub variable range

n population size

Crossover probability pc

Variation probability pm

Dynamic look ahead transform M

f = @(x) 11*sin(6*x) + 7*cos(5*x); ezplot(f) hold on h = plot(0,0,'*') //Draw a function varnum = 1; n = 200; lb = -pi; ub = pi; eps = 1e-2; pc = 0.9; pm = 0.01; maxgen = 200; //Variable initialization //Initialize population for i = 1:varnum L(i) = cell(log2((ub(i)-lb(i)) / esp + 1)); end LS = sum(L); //Total length pop = randi([0,1],n,LS); //Generating random population is either 0 or 1 spoint = cumsum([0,L]); //Split binary code for iter = 1:maxgen //Convert binary to decimal for i = 1:n for j = 1:varnum startpoint = spoint(j)+1; endpoint = spoint(j+1); real(i,j) = decode(pop(i,startpoint:endpoint),lb(j),ub(j)); end end //Calculate fitness value fitvalue = fitnessfun(real); fval = objfun(real); h.XData = real; h.VData = fval; pause(0.05) //choice [dad,mom] = selection(pop,fitvalue); //overlapping newpop = crossover(dad,mom,pc); //variation newpop = mutation(newpop,pm); pop = newpop; end for i = 1:n for j = 1:varnum startpoint = spoint(j)+1; endpoint = spoint(j+1); real(i,j) = decode(pop(i,startpoint:endpoint),lb(j),ub(j)); end end fitvalue = fitnessfun(real);