1. Construct the neural network

We use a simple CNN as the example.

Note: because I freeze the parameters of the output layer in step 2, I named that layer "output" when defining the network so that it can be told apart from the other layers. If a layer is not given a name through the name attribute, Keras assigns one automatically, and that name is what appears in the summary.

    import os

    # Make only the first GPU visible; set this before the backend starts up
    os.environ["CUDA_VISIBLE_DEVICES"] = "0"

    import keras
    from keras.models import Sequential
    from keras.layers import Conv2D, Dense, Dropout, Flatten, MaxPool2D

    def cnn():
        """Build a small CNN for 28x28 grayscale images with 10 classes."""
        model = Sequential()
        model.add(Conv2D(input_shape=(28, 28, 1), filters=16, kernel_size=(3, 3), padding='same', activation='relu'))
        model.add(Conv2D(filters=32, kernel_size=(3, 3), padding='same', activation='relu'))
        model.add(Conv2D(filters=64, kernel_size=(3, 3), padding='same', activation='relu'))
        model.add(MaxPool2D())
        model.add(Dropout(rate=0.3))
        model.add(Conv2D(filters=128, kernel_size=(3, 3), padding='same', activation='relu'))
        model.add(Conv2D(filters=256, kernel_size=(3, 3), padding='same', activation='relu'))
        model.add(MaxPool2D())
        model.add(Dropout(rate=0.3))
        model.add(Flatten())
        model.add(Dense(512, activation='relu', name='teacher_feature'))
        # Named explicitly so the layer can be found and frozen in step 2
        model.add(Dense(10, activation='softmax', name='output'))
        return model

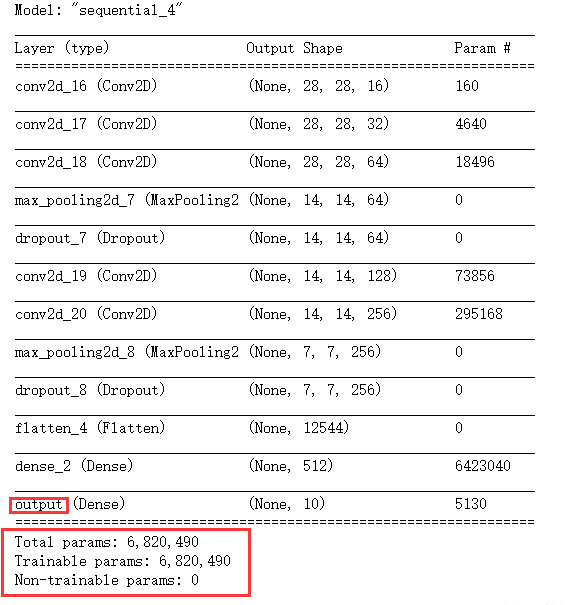

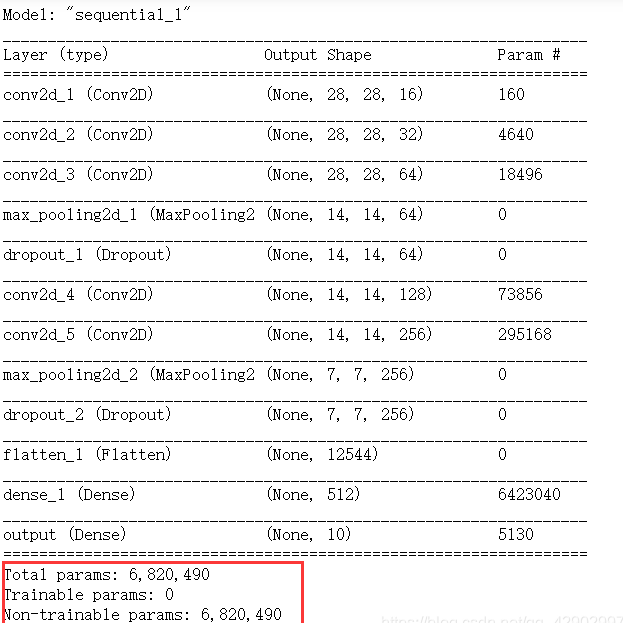

[View the network structure]

    cnn_net = cnn()
    cnn_net.summary()

The summary shows that every weight in the network is trainable.
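The parameter counts that the summary reports can also be checked by hand. The following is only an illustrative sketch: the helper names conv2d_params and dense_params are made up for this example, and the shapes are read off the cnn() definition above (3x3 kernels, 'same' padding, and two default 2x2 poolings that shrink 28x28 to 7x7).

```python
def conv2d_params(in_channels, filters, kernel=(3, 3)):
    """Kernel weights plus one bias per filter."""
    return kernel[0] * kernel[1] * in_channels * filters + filters


def dense_params(in_units, out_units):
    """Weight matrix plus one bias per output unit."""
    return in_units * out_units + out_units


# Channel counts through the five Conv2D layers, starting from the 1-channel input
conv_channels = [1, 16, 32, 64, 128, 256]
conv_total = sum(
    conv2d_params(c_in, c_out)
    for c_in, c_out in zip(conv_channels, conv_channels[1:])
)

flat_units = 7 * 7 * 256                  # Flatten output after two 2x2 poolings
teacher_feature = dense_params(flat_units, 512)
output = dense_params(512, 10)

total = conv_total + teacher_feature + output
print(conv_total, teacher_feature, output, total)
# → 392320 6423040 5130 6820490
```

Pooling, dropout and flatten layers have no weights, so they do not appear in the sum.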

2. Freeze the weights of a specific layer

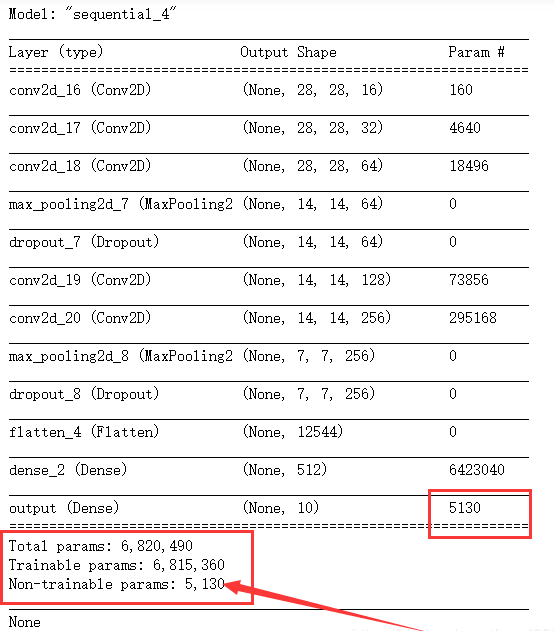

Here we freeze the parameters of the output layer as an example and look at what the summary reports:

    # The optimizer and loss are needed for compile(), so define them first
    opt = keras.optimizers.Adam(learning_rate=0.001)
    loss = keras.losses.categorical_crossentropy

    for layer in cnn_net.layers:
        if layer.name == 'output':
            layer.trainable = False

    # Recompile so that the change to trainable takes effect
    cnn_net.compile(optimizer=opt, loss=loss, metrics=['accuracy'])
    cnn_net.summary()

The summary confirms that the parameters of the last layer are now frozen.

Next, let's see how training a network with some parameters frozen differs from normal training.

Note: after changing a layer's trainable attribute, recompile the model. Without a recompile the change may not take effect in some scenarios; compiling again makes sure it does.
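To check a freeze programmatically instead of reading the summary, one option is to count the weights the model reports as trainable. This is a minimal sketch under assumptions: it uses a tiny stand-in model rather than cnn() so that it runs quickly, and the helper count_params is a name invented here.

```python
import math

import keras
from keras import layers

# Tiny stand-in model (not the cnn() above), with a named output layer
model = keras.Sequential([
    keras.Input(shape=(8,)),
    layers.Dense(4, activation="relu", name="hidden"),
    layers.Dense(2, activation="softmax", name="output"),
])


def count_params(weights):
    """Total number of scalars in a list of weight variables."""
    return sum(math.prod(tuple(w.shape)) for w in weights)


before = count_params(model.trainable_weights)

# Freeze the layer by name, then recompile so training uses the new flags
model.get_layer("output").trainable = False
model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])

after = count_params(model.trainable_weights)
frozen = count_params(model.non_trainable_weights)
print(before, after, frozen)
```

The frozen layer's kernel and bias move from trainable_weights to non_trainable_weights; the total stays the same.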

3. Comparing training with and without freezing

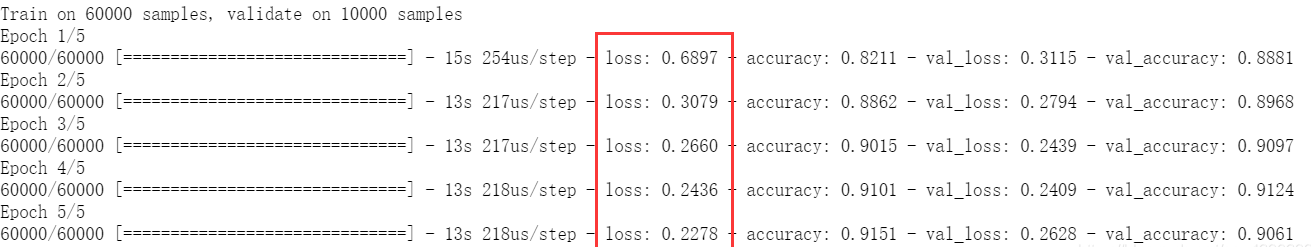

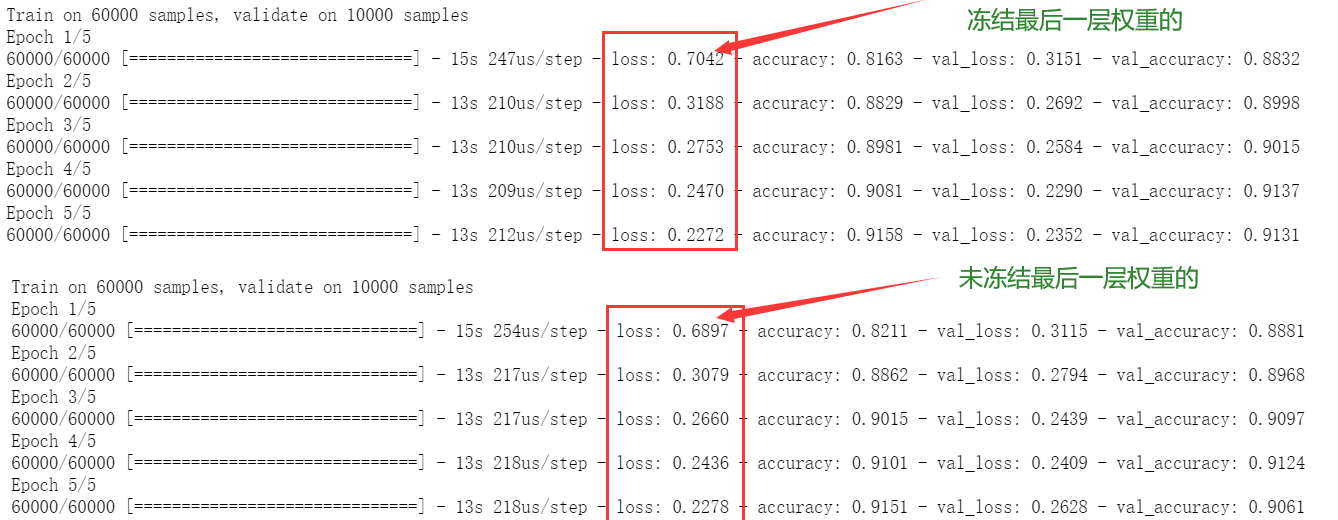

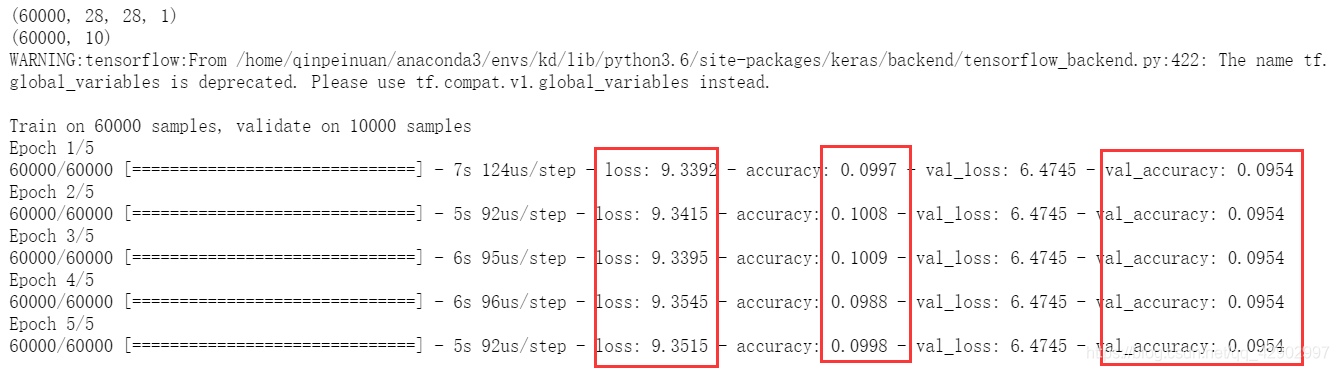

3.1 Training results with the output layer frozen

    import keras
    from keras.datasets import fashion_mnist
    from keras.utils import to_categorical

    # Fashion-MNIST: 60,000 training and 10,000 test images of 28x28 pixels
    (x_train, y_train), (x_test, y_test) = fashion_mnist.load_data()

    # Add the channel dimension that Conv2D expects
    x_train = x_train.reshape(60000, 28, 28, 1)
    x_test = x_test.reshape(10000, 28, 28, 1)

    # One-hot encode the labels for categorical cross-entropy
    y_train = to_categorical(y_train)
    y_test = to_categorical(y_test)

    print(x_train.shape)
    print(y_train.shape)

    opt = keras.optimizers.Adam(learning_rate=0.001)
    loss = keras.losses.categorical_crossentropy
    cnn_net.compile(optimizer=opt, loss=loss, metrics=['accuracy'])

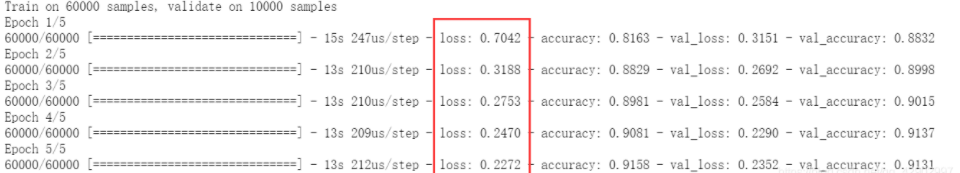

    cnn_net_history = cnn_net.fit(x_train, y_train, batch_size=64, epochs=5, shuffle=True, validation_data=(x_test, y_test))

[Unfreeze the frozen layer]

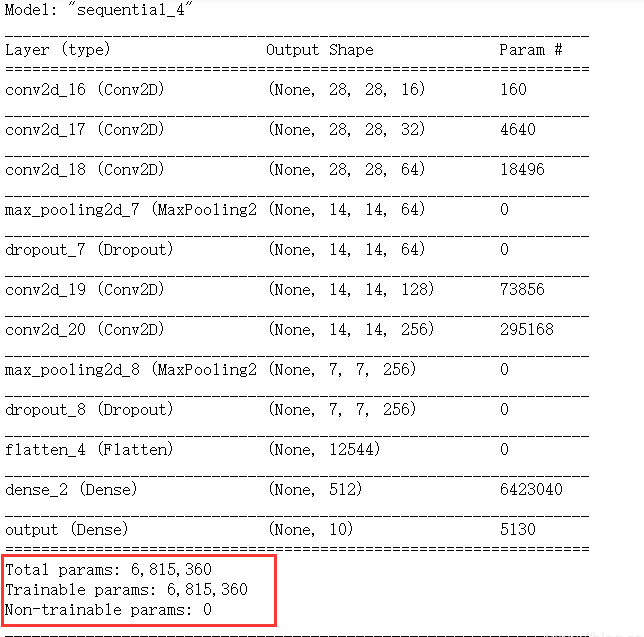

    for layer in cnn_net.layers:
        layer.trainable = True
    cnn_net.summary()

3.2 Training results after unfreezing

    # Recompile with a fresh optimizer so the unfrozen layer is trained again
    opt = keras.optimizers.Adam(learning_rate=0.001)
    loss = keras.losses.categorical_crossentropy
    cnn_net.compile(optimizer=opt, loss=loss, metrics=['accuracy'])
    cnn_net_history = cnn_net.fit(x_train, y_train, batch_size=64, epochs=5, shuffle=True, validation_data=(x_test, y_test))

3.3 Conclusion

I originally assumed, naively, that once the last layer was frozen, the network's final output would take the shape of the penultimate layer, (None, 512). That turned out to be wrong.

Once the network structure is built, its output dimensions are determined by the number of neurons in the layers you designed, whether or not their parameters are updated.

In this experiment, the loss with the last layer frozen shows no difference from training without freezing it. That is not surprising: only the 5,130 parameters of a single layer were frozen, which is negligible next to the millions of parameters in the network as a whole.
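Why the output shape cannot change becomes clearer when freezing is viewed as "skip the update step, keep the forward pass". The sketch below is plain Python rather than Keras and is only an illustration of that idea; the functions dense and sgd_update and all the numbers in it are made up for the example.

```python
def dense(x, w, b):
    """Forward pass of a dense layer: one output per bias entry."""
    return [sum(xi * row[j] for xi, row in zip(x, w)) + b[j] for j in range(len(b))]


def sgd_update(values, grads, lr, trainable):
    """One SGD step; a frozen parameter list is returned unchanged."""
    if not trainable:
        return list(values)
    return [v - lr * g for v, g in zip(values, grads)]


x = [1.0, 2.0]                            # 2 input features
w = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]    # 2 inputs -> 3 units
b = [0.0, 0.0, 0.0]
grads_b = [1.0, 1.0, 1.0]                 # made-up gradient values

frozen_b = sgd_update(b, grads_b, lr=0.5, trainable=False)
trained_b = sgd_update(b, grads_b, lr=0.5, trainable=True)

# The layer still produces 3 outputs either way; only the values of b differ
print(len(dense(x, w, frozen_b)), len(dense(x, w, trained_b)))  # → 3 3
print(frozen_b, trained_b)  # → [0.0, 0.0, 0.0] [-0.5, -0.5, -0.5]
```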

--------

[So what is freezing parameters good for?]

Freezing a layer lets us study the role it plays in training, and it reduces the number of parameters to train when networks are spliced together. For example, suppose your network is complex: its front end is a VGG-16 classification network, and a functional network you wrote yourself is attached behind it. After defining the VGG-16 architecture, you can download pretrained VGG-16 weights, load them into your network, freeze the VGG-16 layers, and train only the parameters of the small network you wrote. This saves a lot of computing resources and time.

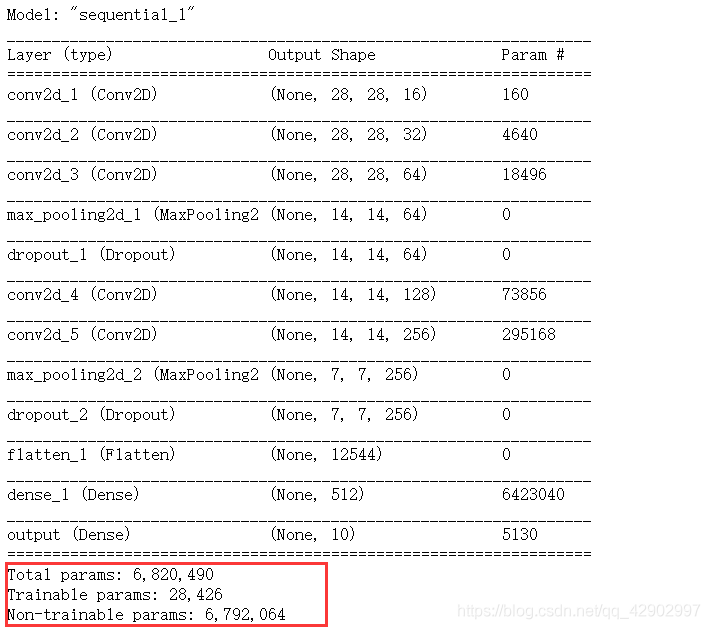

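4. Freeze more parameters to see whether accuracy drops

Using the same network, we freeze the layers from the sixth through the penultimate:

    for layer in cnn_net.layers[5:-1]:
        layer.trainable = False

    # Recompile so that the newly frozen layers are respected during training
    cnn_net.compile(optimizer=keras.optimizers.Adam(learning_rate=0.001), loss=keras.losses.categorical_crossentropy, metrics=['accuracy'])
    cnn_net.summary()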

Now the vast majority of the parameters are frozen, and only 28,426 take part in training. The training results show the following:

By the last two epochs the network has converged and the accuracy no longer rises. The accuracy is 5% lower than that of the unfrozen network, but keep in mind that we are training only about 1/240 of the unfrozen network's parameters (28,426 of 6,820,490).

In other words, for the Fashion-MNIST data set, a few tens of thousands of trainable parameters are enough to train the network reasonably well.
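The trainable share after this freeze can be worked out from the slice alone. In this sketch the layer labels are invented for readability (Keras assigns its own names to unnamed layers), and the per-layer counts follow from the layer shapes defined in step 1.

```python
# (label, parameter count) in the order the layers were added in cnn()
layers = [
    ("conv_16", 160), ("conv_32", 4640), ("conv_64", 18496),
    ("pool_1", 0), ("dropout_1", 0),
    ("conv_128", 73856), ("conv_256", 295168),
    ("pool_2", 0), ("dropout_2", 0), ("flatten", 0),
    ("teacher_feature", 6423040), ("output", 5130),
]

# Same slice as in the freezing loop: index 5 up to, but excluding, the last layer
frozen_names = {name for name, _ in layers[5:-1]}

trainable = sum(n for name, n in layers if name not in frozen_names)
total = sum(n for _, n in layers)

print(trainable, total, round(total / trainable))
# → 28426 6820490 240
```

What remains trainable is the first three convolutions plus the output layer, since the slice stops before the last element.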

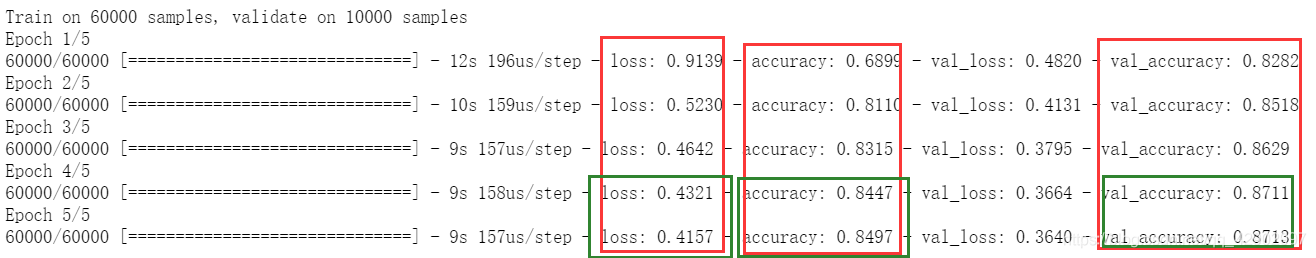

5. Freeze all parameters of the whole network to see what happens

    for layer in cnn_net.layers:
        layer.trainable = False
    cnn_net.summary()

[Training results]

Not surprisingly, freezing layers can reduce accuracy: with every layer frozen, no weights are updated, so training cannot improve the model.