This is a free course series, published as an illustrated text version and a video version. You are reading the text version.

The NGINX course series has three parts: basics, advanced, and enterprise practice. You are reading the basics part.

The video version is published in my own community. If you prefer video, you can watch it there after logging in by scanning a WeChat QR code or through WeChat authorization.

Preface

Goal of the basics part: understand NGINX, operate it hands-on, independently complete a load-balancing configuration, bind a domain name, and access back-end services through that domain name.

Goal of the whole NGINX series: understand NGINX, configure load balancing independently, build a highly available enterprise production environment, and monitor NGINX.

Readers who followed the video lesson have already completed the load-balancing configuration on their own and submitted their homework.

Over this half year we will publish many open classes, in both text and video versions. Importantly, they are all free, and anyone can join. The course list is as follows.

In the list, green marks content that has been released and red marks content whose material is still being prepared.

NGINX basics: text version

OK, let's get to the point.

If you usually have little contact with the back end or with servers, you may ask: what is NGINX?

For what NGINX is, we can refer to the official website and Baidu Encyclopedia: NGINX is a high-performance HTTP server and reverse proxy server. Besides HTTP, it also supports mail protocols, TCP/UDP, and more.

What can it do?

In my opinion, it is essentially a gateway. Role 1: request forwarding; role 2: rate limiting; role 3: authentication; role 4: load balancing. The reverse proxy mentioned above falls under request forwarding.

Forward proxy vs. reverse proxy?

We won't go into the underlying theory here. You can read an explanation of this topic on other platforms: https://zhuanlan.zhihu.com/p/...

In brief: a forward proxy acts on behalf of the client, while a reverse proxy acts on behalf of the server.

If you write crawlers, the IP proxies you usually use are forward proxies: the crawler sends its request through the proxy to the target server. The NGINX reverse proxy discussed here receives the client's request and forwards it to the back end. The article mentioned above has pictures that illustrate this.

Are there many companies using NGINX?

Most companies use NGINX, from Google, Meta (Facebook), Amazon, Alibaba, Tencent, and Huawei to an estimated 70%+ of Internet companies worldwide (my guess; the real figure may be higher). Our community uses NGINX as well.

Installing NGINX

We install on a cloud server running Ubuntu 20.04. In the basics part we use the quick package installation so that we can start operating and learning right away; compiling and installing from source is covered in the advanced part.

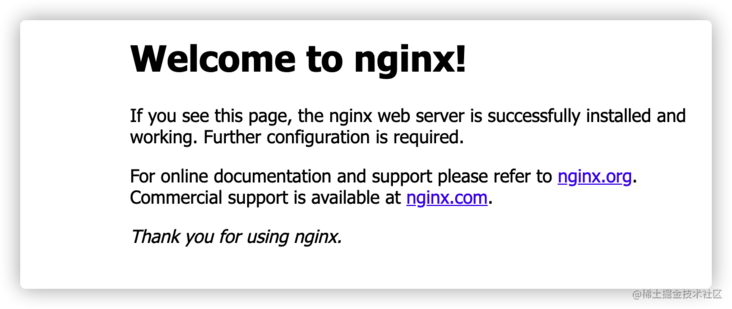

Open a terminal, run sudo apt install nginx -y, and wait for the installation to complete. NGINX starts automatically once installed, and you can then visit your server's address. For example, my server IP is 101.42.137.185, so I visit http://101.42.137.185

If the page displays Welcome to nginx, the service is working. If not, check the error messages printed in the terminal during installation, or check your firewall, security group policy, and so on. (If this is unfamiliar or something goes wrong, you can learn from the Linux ECS open class previously published by the community.)
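The browser check can also be scripted. The following is a minimal sketch, assuming the default page contains the text "Welcome to nginx" as described here; the function names `looks_like_welcome_page` and `fetch_page` are illustrative and not part of any NGINX tooling.

```python
from urllib.request import urlopen


def looks_like_welcome_page(html: str) -> bool:
    """Return True if the page text contains the default NGINX greeting."""
    return "welcome to nginx" in html.lower()


def fetch_page(url: str, timeout: float = 5.0) -> str:
    """Download a page and decode it as UTF-8 (an assumed encoding)."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Calling `looks_like_welcome_page(fetch_page("http://101.42.137.185"))` with your own server address in place of the example IP should return `True` when the service, firewall, and security group are all in order.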

Basic working principle and module relationship of NGINX

NGINX has one master process and multiple worker processes. The master process maintains NGINX itself: reading and parsing the configuration, managing worker processes, reloading the configuration, and so on. The worker processes are the ones that actually handle requests.

The number of worker processes can be adjusted in the configuration file.

NGINX is made up of modules, which are controlled by the settings in the configuration file; in other words, the configuration file determines how NGINX works.

Again I will point to another article instead of writing everything out. For the principles and architecture of NGINX, see https://zhuanlan.zhihu.com/p/... At this early stage there is only one thing to pay attention to: modules. A general understanding is enough; there is no need to go deep.

NGINX signal

A signal here means a control signal: a way to control the running state of NGINX. The syntax is

nginx -s signal

Common signals are

stop    Fast shutdown
quit    Graceful shutdown
reload  Reload the configuration
reopen  Reopen the log files

The proper way to shut down NGINX is nginx -s quit, which lets NGINX exit after it finishes the work already in progress.
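If you drive NGINX from a deployment script, the signal syntax can be wrapped in a small helper. This is a sketch under stated assumptions: `build_signal_command` and `send_signal` are hypothetical names, `nginx` is assumed to be on the PATH, and `sudo` is assumed to be needed, as in the commands used in this tutorial.

```python
import subprocess
from typing import List

# The four signals listed in this section.
SIGNALS = ("stop", "quit", "reload", "reopen")


def build_signal_command(signal: str, use_sudo: bool = True) -> List[str]:
    """Build the argument list for `nginx -s <signal>`."""
    if signal not in SIGNALS:
        raise ValueError(f"unsupported signal: {signal!r}")
    command = ["nginx", "-s", signal]
    return ["sudo"] + command if use_sudo else command


def send_signal(signal: str) -> int:
    """Run the command and return its exit code (0 means success)."""
    return subprocess.run(build_signal_command(signal)).returncode
```

Rejecting unknown names up front means a typo such as `restart` fails in the script rather than reaching NGINX.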

NGINX configuration description

Building on the previous community open class, let's look at how NGINX is managed as a service before formally discussing its configuration. The status command shows where the NGINX service (systemd unit) file is located.

> systemctl status nginx

View the NGINX service configuration

[Unit]
Description=A high performance web server and a reverse proxy server
Documentation=man:nginx(8)
After=network.target

[Service]
Type=forking
PIDFile=/run/nginx.pid
ExecStartPre=/usr/sbin/nginx -t -q -g 'daemon on; master_process on;'
ExecStart=/usr/sbin/nginx -g 'daemon on; master_process on;'
ExecReload=/usr/sbin/nginx -g 'daemon on; master_process on;' -s reload
ExecStop=-/sbin/start-stop-daemon --quiet --stop --retry QUIT/5 --pidfile /run/nginx.pid
TimeoutStopSec=5
KillMode=mixed

[Install]
WantedBy=multi-user.target

From the ExecStart option you can confirm that the NGINX binary is installed at /usr/sbin/nginx. This service file ties in with what we covered in the earlier Linux ECS open class, so it is worth mentioning here.
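Reading the binary path out of ExecStart can be automated. The sketch below is only an illustration: it treats an excerpt of the service file as INI text, which works for this simple file but is not a full systemd parser, and `exec_start_binary` is a hypothetical helper name.

```python
import configparser
import shlex

# Excerpt of the service file shown in this section.
UNIT_TEXT = """\
[Unit]
Description=A high performance web server and a reverse proxy server
After=network.target

[Service]
Type=forking
PIDFile=/run/nginx.pid
ExecStart=/usr/sbin/nginx -g 'daemon on; master_process on;'
"""


def exec_start_binary(unit_text: str) -> str:
    """Return the executable named in the [Service] ExecStart line."""
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None)
    parser.optionxform = str  # keep key names case-sensitive
    parser.read_string(unit_text)
    command = parser["Service"]["ExecStart"]
    # shlex keeps the quoted -g argument together as one token.
    return shlex.split(command)[0]
```

With the excerpt above, `exec_start_binary(UNIT_TEXT)` yields `/usr/sbin/nginx`, the same path read off by eye.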

Finding the default main configuration file

The configuration file section officially begins.

NGINX has a main configuration file and auxiliary configuration files. The main configuration file is named nginx.conf and is stored at /etc/nginx/nginx.conf by default. The paths of the auxiliary configuration files are set in the main configuration file; their names and locations can be changed, and the file names usually end with .conf.

After installation, if you don't know where the main configuration file is, you can look in the default path or use the find command.

> sudo find / -name nginx.conf
/etc/nginx/nginx.conf

Basic structure and function of the main configuration file. Use cat /etc/nginx/nginx.conf to print the contents of the file. If this is unfamiliar, the Linux ECS open class previously published by the community covers the Linux commands for viewing files.

user www-data; # User that the worker processes run as
worker_processes auto; # Number of worker processes
pid /run/nginx.pid; # PID file
include /etc/nginx/modules-enabled/*.conf; # Dynamic module configuration

events {
    worker_connections 768; # Maximum number of simultaneous connections per worker process
    # multi_accept on;
}

http {
    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;
    types_hash_max_size 2048;

    ssl_protocols TLSv1 TLSv1.1 TLSv1.2 TLSv1.3; # Dropping SSLv3, ref: POODLE
    ssl_prefer_server_ciphers on;

    access_log /var/log/nginx/access.log;
    error_log /var/log/nginx/error.log;

    include /etc/nginx/conf.d/*.conf; # Auxiliary configuration file path
    include /etc/nginx/sites-enabled/*;
}

# Example
#mail {
...
#}

I have trimmed the configuration file shown here: most commented-out content is deleted and the effective content is kept. The meaning of the important items is noted in comments.

You are probably a little confused by this configuration. What does it all mean? Next, let's learn the basic syntax of NGINX configuration files.

NGINX configuration file basic syntax

The configuration items in an NGINX configuration file are called directives. They are divided into simple directives and block directives. A simple directive consists of a name and parameters, separated by spaces and terminated by a semicolon, for example

worker_processes auto;

Here worker_processes is a directive that sets the number of worker processes, and auto is its parameter. The value can be either a number or auto, in which case NGINX tries to detect the number of available CPU cores and starts that many worker processes.
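To make the two kinds of value concrete, here is a rough Python analogy. NGINX documents auto as an attempt to autodetect the number of available CPU cores; the sketch approximates that with `os.cpu_count()`, which is an assumption and may differ from what NGINX detects (for example inside containers). The function name `resolve_worker_processes` is hypothetical.

```python
import os


def resolve_worker_processes(value: str) -> int:
    """Turn a worker_processes value into a concrete process count."""
    if value == "auto":
        # os.cpu_count() can return None; fall back to a single process.
        return os.cpu_count() or 1
    count = int(value)
    if count < 1:
        raise ValueError("worker_processes must be at least 1")
    return count
```

On a 4-core server, `resolve_worker_processes("auto")` would typically give the same result as writing `worker_processes 4;` by hand.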

A block directive has a similar syntax, but instead of ending with a semicolon it wraps further directives in curly braces, for example

http {
    server {
        ...
    }
}

Context

If a block directive contains other directives, it is called a context. Common contexts include

events, http, server, location

There is also an implicit context, main. It does not need to be declared explicitly: the outermost level of the configuration, outside every block, is the main context. main serves as the reference point for the other contexts. For example, events and http must be in main, server must be in http, and location must be in server. These rules are fixed; a directive placed in the wrong context prevents NGINX from running, and the error message can be seen in the log.
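The nesting rules can be expressed as a small checker. This is a simplified sketch that covers only the four rules stated here; real NGINX has more contexts and exceptions, and the parsing ignores quoted strings. `ALLOWED_PARENT` and `check_nesting` are illustrative names, and running nginx -t remains the authoritative check.

```python
import re
from typing import List

# Required parent for each context, as stated in this section (simplified).
ALLOWED_PARENT = {
    "events": "main",
    "http": "main",
    "server": "http",
    "location": "server",
}


def check_nesting(config: str) -> List[str]:
    """Return a list of nesting errors found in a configuration string."""
    errors = []
    stack = ["main"]  # main is the implicit outermost context
    text = re.sub(r"#[^\n]*", "", config)  # drop comments
    # Walk every statement, each ending in '{', '}' or ';'.
    for match in re.finditer(r"([^{};]*)([{};])", text):
        words, terminator = match.group(1).split(), match.group(2)
        if terminator == "{":
            name = words[0] if words else ""
            parent = ALLOWED_PARENT.get(name)
            if parent is not None and stack[-1] != parent:
                errors.append(f"{name} is not allowed in {stack[-1]}")
            stack.append(name)
        elif terminator == "}":
            if len(stack) > 1:
                stack.pop()
            else:
                errors.append("unexpected }")
    if len(stack) > 1:
        errors.append("missing }")
    return errors
```

A server block written at the top level is reported as `server is not allowed in main`, while a server nested inside http passes.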

After so much theory, you must be tired. Let's get hands-on!

Configuring a proxy for a back-end application with NGINX

A simple web service, such as the following Flask application

from flask import Flask
from flask_restful import Resource, Api

app = Flask(__name__)
api = Api(app)


class HelloWorld(Resource):
    def get(self):
        # Log each request, then return a small JSON document.
        app.logger.info("receive a request, and response 'Armored technology community'")
        return {'message': 'Armored technology community', "address": "https://chuanjiabing.com"}


api.add_resource(HelloWorld, '/')

if __name__ == '__main__':
    app.run(debug=True, host="127.0.0.1", port=6789)

Write the content to a file on the server, such as /home/ubuntu/ke.py.

Remember to install the required Python library before starting: pip3 install flask-restful

Ubuntu 20.04 ships with Python 3 by default, so there is no need to worry about the environment. Start the web back-end service with python3 /home/ubuntu/ke.py

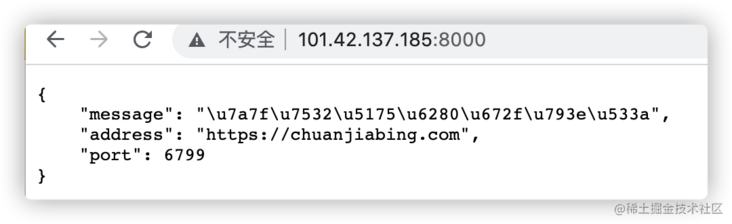

After the back end is started, let's configure NGINX.

From the main configuration file we saw earlier, the auxiliary configuration directory is /etc/nginx/conf.d. Now we add a configuration file in that directory.

> sudo vim /etc/nginx/conf.d/ke.conf

server {
    listen 8000;
    server_name localhost;

    location / {
        proxy_pass http://localhost:6789;
    }
}

Check whether the configuration file is correct

> sudo nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful
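In a deployment script it is safer to reload only when this test passes. The following is a minimal sketch, assuming the success messages look like the output shown here; `config_test_passed` and `run_config_test` are hypothetical helper names.

```python
import subprocess


def config_test_passed(output: str) -> bool:
    """Return True if `nginx -t` output reports a successful test."""
    return "syntax is ok" in output and "test is successful" in output


def run_config_test() -> bool:
    """Run `sudo nginx -t` and check both the exit code and the report."""
    result = subprocess.run(
        ["sudo", "nginx", "-t"], capture_output=True, text=True
    )
    # The report may arrive on stderr, so both streams are checked.
    return result.returncode == 0 and config_test_passed(
        result.stdout + result.stderr
    )
```

A script can then call `run_config_test()` and skip the reload when it returns `False`, which keeps a broken file from being applied.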

Reload the configuration

> sudo nginx -s reload

Visit http://ip:port in a browser. For example, for my server: http://101.42.137.185:8000/

You can see the output returned by the back end.

NGINX log file

By default, logs are divided into an access log and an error log. The log paths can be set in the main configuration file.

/var/log/nginx/access.log
/var/log/nginx/error.log

View the access log

> cat /var/log/nginx/access.log
117.183.211.177 - - [19/Nov/2021:20:18:46 +0800] "GET / HTTP/1.1" 200 107 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.71 Safari/537.36"
117.183.211.177 - - [19/Nov/2021:20:18:48 +0800] "GET /favicon.ico HTTP/1.1" 404 209 "http://101.42.137.185:8000/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.71 Safari/537.36"

Official documentation on log formats: http://nginx.org/en/docs/http...

Example log format from the official documentation (when no format is specified, NGINX uses its predefined combined format)

log_format compression '$remote_addr - $remote_user [$time_local] '
                       '"$request" $status $bytes_sent '
                       '"$http_referer" "$http_user_agent" "$gzip_ratio"';

The log format can be configured in the main configuration file. Refer to the official documentation for the available variables.
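Access log lines like the two shown in this section are easy to parse in a script. The sketch below assumes the lines follow the predefined combined layout, which the samples appear to match; `LINE_PATTERN` and `parse_access_line` are illustrative names, and a customized log_format would need a different pattern.

```python
import re
from typing import Dict, Optional

# One named group per variable of the combined log format.
LINE_PATTERN = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) '
    r'\[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)"'
)


def parse_access_line(line: str) -> Optional[Dict[str, str]]:
    """Split one access log line into fields, or return None if it does not match."""
    match = LINE_PATTERN.match(line)
    return match.groupdict() if match else None
```

For the first sample line, the parsed `status` is `"200"` and `request` is `"GET / HTTP/1.1"`, which is enough to count errors or requests per path.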

Serving a front-end application with NGINX

A simple HTML document

> vim /home/ubuntu/index.html
<html>
<head><meta charset="utf-8"/><title>Armored technology community</title></head>
<body>
<div><p>Armored technology community</p><a>https://chuanjiabing.com</a></div>
</body>
</html>

Whether a front-end project is large or small, it is generally built into HTML documents, which are then served by an application such as NGINX.

Note: some Vue/React projects are deployed with server-side rendering, but most are still built into HTML. In terms of NGINX configuration, the simple example here is no different from those engineered front-end projects. It is only an example; what matters is learning NGINX.

> sudo vim /etc/nginx/conf.d/page.conf

server {
    listen 1235;
    server_name localhost;
    charset utf-8;

    location / {
        root /home/ubuntu/;
        index index.html index.htm;
    }
}

Load balancing based on NGINX

Imagine a scenario: the back-end service on your server formats timestamps, many crawlers call it, and the service must stay stable and available.

Extended scenario: suppose you have reverse-engineered a JS algorithm, and every crawler must call it to generate a sign value that is sent with each request. If you embed the JS code in Python/Golang code and execute it locally, you have to change and redeploy every crawler whenever the algorithm changes. If you turn it into a web service, you only need to change and restart that service.

Running a single back-end service has two obvious disadvantages:

1. Insufficient performance: too many requests make the program stall and respond slowly, which hurts overall efficiency;

2. Poor stability: once the process exits or the server crashes, the service becomes inaccessible;

Benefits of using load balancing

1. Start multiple back-end services, configure load balancing, and forward requests to the different back ends as needed (for example, in turn), so that more work can be handled;

2. One NGINX fronts multiple back-end services, so when one or several of them exit, the others keep working;
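The two benefits can be illustrated with a toy model. This is only a sketch of the idea of handing out requests in turn and skipping back ends that are down; it is not NGINX's actual algorithm, and the class name `RoundRobinBalancer` and the `down` parameter are hypothetical.

```python
from typing import List, Set


class RoundRobinBalancer:
    """Toy model: hand out back ends in turn, skipping unavailable ones."""

    def __init__(self, backends: List[str]) -> None:
        self.backends = backends
        self.position = 0  # index of the next back end to try

    def pick(self, down: Set[str]) -> str:
        """Return the next available back end, or raise if none is up."""
        for _ in range(len(self.backends)):
            candidate = self.backends[self.position]
            self.position = (self.position + 1) % len(self.backends)
            if candidate not in down:
                return candidate
        raise RuntimeError("no back end available")
```

With two back ends and nothing down, successive calls to `pick(set())` alternate between them; if one is marked as down, every request goes to the other, and only when both are down does the service fail.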

NGINX only needs the proxy_pass directive and a corresponding upstream context to achieve load balancing. A simple load-balancing configuration follows the note below.

⚠️ Before the experiment, start multiple back-end programs. You can copy the Flask code from earlier to another file (e.g. /home/ubuntu/main.py), remembering to change the port number inside; 6799 is recommended, as in this tutorial. If you want to see the load-balancing effect in the web page, include 6789 / 6799 in the response content so that you can tell which back end answered.
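If you would rather not copy and edit the Flask file, two distinguishable back ends can also be started from one script. The sketch below swaps Flask for Python's standard library `http.server`, purely so that it has no extra dependencies; `build_payload`, `make_handler`, and `start_backend` are hypothetical names, and the `port` field in the response is added so that the two back ends can be told apart.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def build_payload(port: int) -> bytes:
    """JSON body that names the port, so responses can be told apart."""
    data = {"message": "Armored technology community", "port": port}
    return json.dumps(data).encode("utf-8")


def make_handler(port: int):
    """Create a request handler class bound to one port number."""

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            body = build_payload(port)
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return Handler


def start_backend(port: int) -> ThreadingHTTPServer:
    """Start one back end on 127.0.0.1 in a daemon thread and return it."""
    server = ThreadingHTTPServer(("127.0.0.1", port), make_handler(port))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

To use it, call `start_backend(6789)` and `start_backend(6799)`, then keep the main thread alive, for example with `threading.Event().wait()`.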

# Change the content of /etc/nginx/conf.d/ke.conf to
upstream backend {
    server localhost:6789;
    server localhost:6799;
}

server {
    listen 8000;
    server_name localhost;

    location / {
        proxy_pass http://backend;
    }
}

After saving, reload the configuration

> sudo nginx -s reload

Visit http://101.42.137.185:8000/ several times and you will see the two back-end services, 6789 and 6799, return information alternately, which shows that the load-balancing configuration works.

Domain name resolution and configuration practice

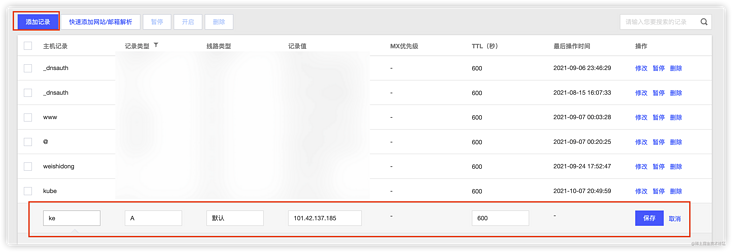

Open the cloud provider's console (Tencent Cloud is used as the example below, because a Tencent Cloud lightweight server was used when recording the tutorial). Other providers' interfaces differ, so adapt the steps to your situation.

Search for domain name resolution in the search box (Tencent's service is DNSPOD)

Find the domain name to be resolved (this assumes you have bought a domain name and completed its ICP filing; if not, just watch how I do it) and click Resolve

Click Add record

Enter the subdomain (e.g. ke) in the host record field, enter the server IP address in the record value field, and click Save. Leave the other options at their defaults.

After this is set in the cloud console, the application on our server still cannot be reached through the domain name

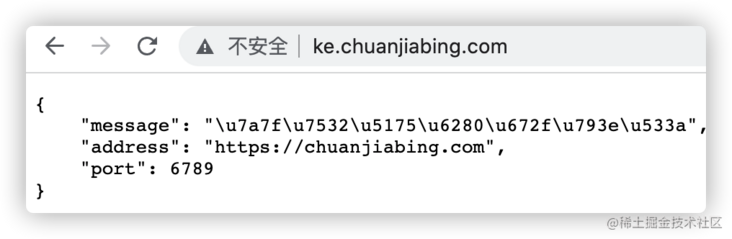

On the server, edit the NGINX auxiliary configuration file to change the port and bind the domain name

> sudo vim /etc/nginx/conf.d/ke.conf
# Change listen and server_name in the server context
listen 80;
server_name ke.chuanjiabing.com;

Remember to reload the configuration

> sudo nginx -s reload

Then you can access the service through the domain name http://ke.chuanjiabing.com/

Homework: post 3 screenshots of your NGINX load-balancing configuration for the back-end program under the course post in the community. One is a screenshot of the configuration; the other two show the load balancing taking effect when accessed from a browser.

The syllabus of the follow-up advanced and enterprise practice parts is as follows. The goal of the follow-up courses is to apply NGINX well at work and to complete the deployment and monitoring of an enterprise production environment.

NGINX advanced level chapter

NGINX load balancing strategy theory

Compile and install NGINX

Implementing authentication with NGINX

Implementing rate limiting with NGINX

Simple anti-crawler measures with NGINX

Implementing zero-downtime updates with NGINX

NGINX enterprise level practice

HTTPS configuration practice of NGINX

NGINX plug-in installation

NGINX data monitoring practice

High availability deployment practice in NGINX production environment