Contents

4. Asynchronous I/O in Node.js

5. The Node.js main thread is single-threaded

6. Node.js application scenarios

7. Implementing an API service with Node.js

9. Common Node.js global variables

10. The process global variable

1. The built-in path module (for handling file and directory paths)

5. Large file read/write operations

6. Custom implementation of file copy

7. Directory operation APIs in fs

8. Synchronous implementation of directory creation

9. Asynchronous implementation of directory creation

10. Asynchronous implementation of directory deletion

14. Module classification and loading process

15. Module loading source code analysis

16. The built-in vm module

17. Simulated implementation of module loading

19. The event loop in the browser

1, Node.js fundamentals

1. Course overview

What can Node.js do?

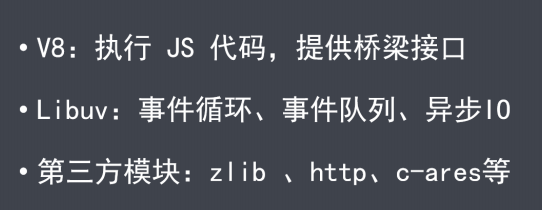

2. Node.js architecture

- Native modules

- Built-in modules (the "glue layer")

- Underlying layer (V8, libuv, and other low-level dependencies)

3. Why Node.js?

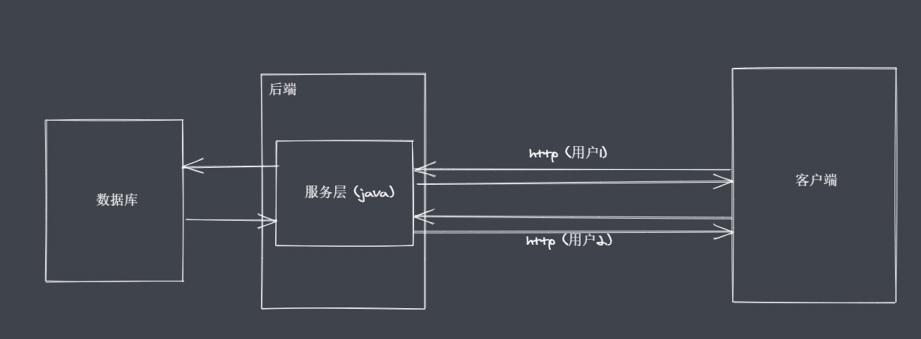

Node.js gradually evolved into a server-side "language"

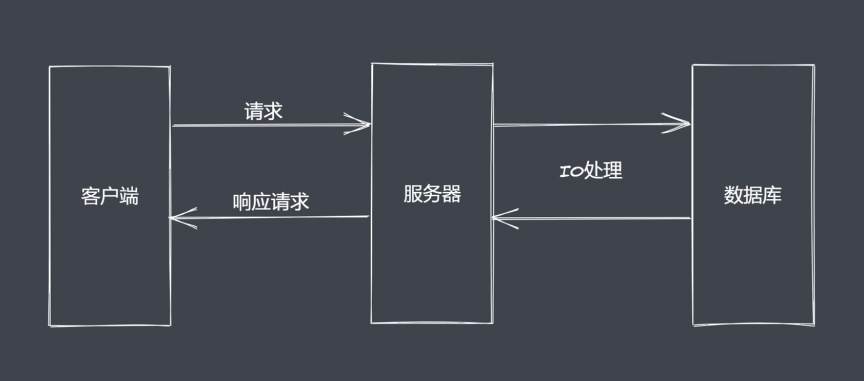

I/O is the slowest part of a computer's operations

The Reactor pattern lets a single thread do work that would otherwise take multiple threads

Node.js implements asynchronous I/O and event-driven execution with the Reactor pattern

Node.js is best suited to I/O-intensive, highly concurrent requests

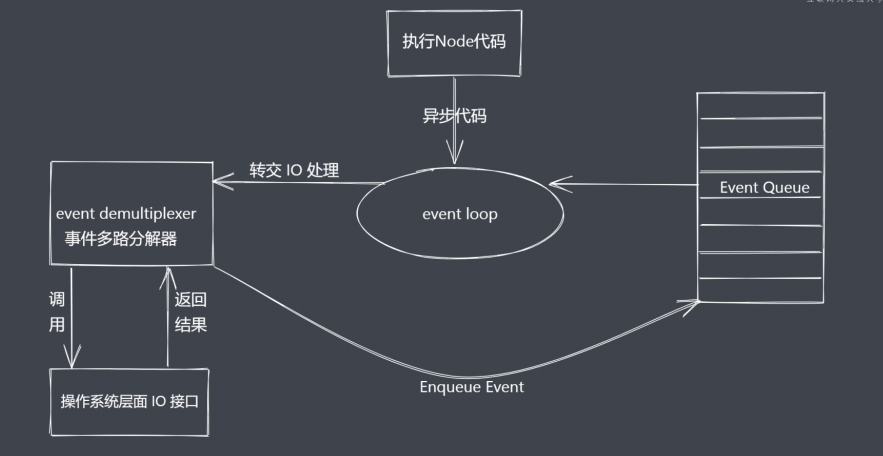

4. Asynchronous I/O in Node.js

I/O is divided into blocking I/O and non-blocking I/O

With non-blocking I/O, the caller repeatedly invokes the I/O operation to check whether it has finished (polling)

Common polling techniques: read, select, poll, kqueue, event ports

The ideal is non-blocking I/O that does not require the caller to actively check for completion

Asynchronous I/O summary

- I/O is the bottleneck of an application

- Asynchronous I/O improves performance because the program does not have to wait in place for the result to return

- I/O operations belong to the operating system level, and every platform has a corresponding implementation

- Single-threaded Node.js implements asynchronous I/O with an event-driven architecture and libuv
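The "no waiting in place" point can be seen in a minimal sketch, assuming only the built-in fs module. The `order` array is a hypothetical name used for illustration: the directory read is handed off, the main thread carries on, and the callback runs later.

```javascript
const fs = require('fs')

// Records the order in which things happen.
const order = []

// Start an asynchronous directory read. The callback is queued and runs
// only after the current synchronous code has finished.
fs.readdir(process.cwd(), (err, files) => {
  if (err) {
    console.error(err)
    return
  }
  order.push('directory listed')
  console.log(order) // 'directory listed' is pushed after 'main thread continues'
})

// This line runs immediately, without waiting for the read above.
order.push('main thread continues')
```

At the moment the last line has run, `order` holds only the main-thread entry; the I/O result arrives on a later turn of the event loop.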

5. The Node.js main thread is single-threaded

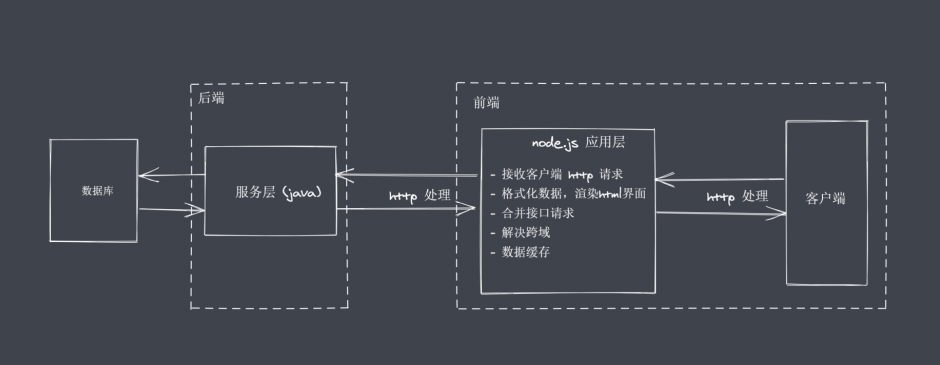

6. Node.js application scenarios

I/O-intensive, highly concurrent requests

Node.js as a middle layer

Operating on a database to provide API services

Real-time chat applications

Node.js is best suited to I/O-intensive tasks

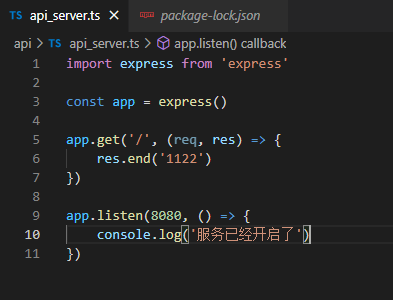

7. Implementing an API service with Node.js

(1) npm init -y

(2) npm i typescript -g

(3) tsc --init

(4) npm i ts-node -D // used to run TS scripts

(5) npm i express

(6) npm i @types/express -D // type declarations so that TypeScript recognizes Express's types

(7) Write api_server.ts

(8) Start the file: ts-node .\api_server.ts

8. The Node.js global object

- It is not exactly the same as window on the browser platform

- Many properties are mounted on the Node.js global object

Global objects are special objects in JavaScript

The global object in Node.js is global

The fundamental role of global is to act as a host

The global object can be regarded as the host of global variables

9. Common Node.js global variables

- __filename: returns the absolute path of the executing script file

- __dirname: returns the directory where the executing script is located

- timer functions: their execution order is tied to the event loop

- process: provides an interface for interacting with the current process

- require: implements module loading

- module, exports: handle module exports

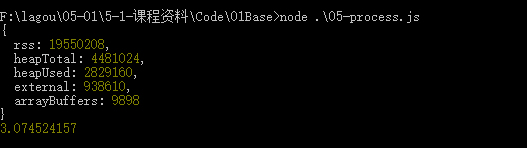

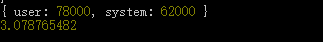

10. The process global variable

- It can be used directly, with no need to require it

- Get process information

- Perform operations on the process

(1) Resources: CPU and memory

console.log(process.memoryUsage())

console.log(process.cpuUsage())

(2) Operating environment

- process.cwd() // current working directory

- process.version // Node.js version

- process.versions // detailed versions of Node.js and its dependencies

- process.arch // CPU architecture

- process.env.NODE_ENV // Node environment

- process.env.PATH // PATH environment variable

- process.env.USERPROFILE // user home directory on Windows (HOME on macOS)

- process.platform // operating system platform

(3) Running status

- process.argv // startup arguments

- process.argv0 // the original first startup argument

- process.pid // process ID

- process.uptime() // how long the process has been running, in seconds

(4) Events

process.on('beforeExit', (code) => {

console.log('before exit' + code)

})

process.on('exit', (code) => { // Exit program

console.log('exit' + code)

})

console.log('Code execution finished')

process.exit() // an explicit exit: 'beforeExit' is not emitted, only 'exit'

// Code execution finished

// exit0

(5) Standard output, input, and error

console.log = function(data) {

process.stdout.write('---' + data + '\n')

}

console.log(11)

console.log(22)

// 11

// 22

const fs = require('fs')

fs.createReadStream('test.txt') //Read file contents

.pipe(process.stdout)

// Hook Education

process.stdin.pipe(process.stdout) // Custom input / output

// 123

// 123

process.stdin.setEncoding('utf-8') // Custom text format input and output

process.stdin.on('readable', () => {

let chunk = process.stdin.read()

if (chunk !== null) {

process.stdout.write('data' + chunk)

}

})

// 123

// data123

2, Core modules

1. The built-in path module (for handling file and directory paths)

Common APIs in the path module

- basename() gets the base name in a path

const path = require('path')

console.log(__filename); // F:\lagou-01-1 - course materials\code\01base\06-path.js

// 1 get the base name in the path

// 01 returns the last part of the path it receives

// 02 the second parameter is the extension; if it is not set, the complete file name including the suffix is returned

// 03 if the second parameter does not match the suffix of the current path, it is ignored

// 04 when handling a directory path, a trailing path separator is also ignored

console.log(path.basename(__filename)); // 06-path.js

console.log(path.basename(__filename, '.js')); // 06-path

console.log(path.basename(__filename, '.css')); // 06-path.js

console.log(path.basename('a/b/c')); // c

console.log(path.basename('a/b/c/')); // c

- dirname() gets the directory name in the path

// 2. Get directory name (path)

// 01 returns the path of the directory that contains the last part of the path

console.log(path.dirname(__filename)) // F:\lagou-01-1 - course materials\code\01base

console.log(path.dirname('a/b/c')) // a/b

console.log(path.dirname('a/b/c/')) // a/b

- extname() gets the extension name in the path

// 3. Gets the extension of the path

// 01 returns the suffix of the corresponding file in the path

// 02 if there are multiple points in the path, it matches the content from the last point to the end

console.log(path.extname(__filename)); // .js

console.log(path.extname('a/b')); // empty

console.log(path.extname('a/b/index.html.js.css')); // .css

console.log(path.extname('a/b/index.js.')); // .

- isAbsolute() checks whether the path is absolute

// 6. Judge whether the current path is absolute

console.log(path.isAbsolute('foo')); // false

console.log(path.isAbsolute('/foo')); // true

console.log(path.isAbsolute('///foo')); // true

console.log(path.isAbsolute('')); // false

console.log(path.isAbsolute('.')); // false

console.log(path.isAbsolute('../bar')); // false

- join() joins multiple path fragments

// 7. Splicing path

console.log(path.join('a/b', 'c', 'index.html')); // a\b\c\index.html

console.log(path.join('/a/b', 'c', 'index.html')); // \a\b\c\index.html

console.log(path.join('/a/b', 'c', '../', 'index.html')); // \a\b\index.html

console.log(path.join('a/b', 'c', './', 'index.html')); // a\b\c\index.html

console.log(path.join('a/b', 'c', '', 'index.html')); // a\b\c\index.html

console.log(path.join('')); // .

- resolve() returns the absolute path

// 9. Absolute path

// console.log(path.resolve());

// resolve([from], to)

console.log(path.resolve('/a', '../b')); // \b (the '../' cancels out the 'a' segment)

console.log(path.resolve('index.html')); // F:\lagou-01-1 - course materials\code\01base\index.html

- parse() parses a path

// 4. Parse a path

// 01 accepts a path and returns an object containing its different parts

// 02 root dir base ext name

const obj1 = path.parse('a/b/c/index.html')

// {

// root: '',

// dir: 'a/b/c',

// base: 'index.html',

// ext: '.html',

// name: 'index'

// }

const obj2 = path.parse('a/b/c/')

// { root: '', dir: 'a/b', base: 'c', ext: '', name: 'c' }

const obj3 = path.parse('./a/b/c/')

// { root: '', dir: './a/b', base: 'c', ext: '', name: 'c' }

console.log(obj3)

- format() serializes a path object

// 5. Serialize a path

const obj = path.parse('./a/b/c/')

console.log(path.format(obj)) // ./a/b\c

- normalize() normalizes a path

// 8. Normalize a path

console.log(path.normalize('')); // .

console.log(path.normalize('a/b/c/d')); // a\b\c\d

console.log(path.normalize('a///b/c../d')); // a\b\c..\d

console.log(path.normalize('a//\\/b/c\\/d')); // a\b\c\d

console.log(path.normalize('a//\b/c\\/d')); // a\c\d ('\b' inside a string literal is a backspace character, not a backslash followed by b)

2. The Buffer global

Buffer: a buffer

Buffer lets JavaScript operate on binary data

The JavaScript language originally served the browser platform

On the Node.js platform, JavaScript can perform I/O

I/O operates on binary data

Stream operations were not invented by Node.js

Streams work together with pipes to transfer data in segments

End-to-end data transfer involves a producer and a consumer

Production and consumption often involve waiting

Where is the data stored while waiting? A Buffer in Node.js is a piece of memory space

(1) Buffer summary

- A global that needs no require

- Implements binary data operations on the Node.js platform

- Its memory does not count against V8's heap size limit

- Its memory usage is controlled by Node.js and reclaimed by the V8 GC

- It is generally used together with Stream, acting as a data buffer

(2) Creating a Buffer

- alloc: creates a buffer of the specified size in bytes

- allocUnsafe: creates a buffer of the specified size without zero-filling it (unsafe)

- from: receives data and creates a buffer

const b1 = Buffer.alloc(10) // <Buffer 00 00 00 00 00 00 00 00 00 00>

const b2 = Buffer.allocUnsafe(10) // contents are uninitialized, e.g. <Buffer ff ff ff ff ff ff ff ff 00 00>

console.log(b1);

// from

const b3 = Buffer.from('1')

console.log(b3); // <Buffer 31>

const b4 = Buffer.from('中')

console.log(b4); // <Buffer e4 b8 ad>

const b5 = Buffer.from([1, 2, '中'], 'utf8')

console.log(b5); // <Buffer 01 02 00>

const b6 = Buffer.from([0xe4, 0xb8, 0xad]) // When passing an array, put numeric byte values in it rather than non-numeric content

console.log(b6); // <Buffer e4 b8 ad>

console.log(b6.toString()); // 中

const b7 = Buffer.alloc(3)

const b8 = Buffer.from(b7)

console.log(b7) // <Buffer 00 00 00>

console.log(b8) // <Buffer 00 00 00>

// Test whether b7 and b8 share memory: the result shows that they do not

b7[0] = 1

console.log(b7) // <Buffer 01 00 00>

console.log(b8) // <Buffer 00 00 00>

(3) Buffer instance methods

- Fill: fill the buffer with data

let buf = Buffer.alloc(6)

// There are three parameters (value, start position and end position) in fill

buf.fill('123')

console.log(buf); // <Buffer 31 32 33 31 32 33>

console.log(buf.toString()); // 123123- write: writes data to the buffer

// write

buf.write('123', 1, 4)

console.log(buf); // <Buffer 00 31 32 33 00 00>

console.log(buf.toString()); // 123- toString: extract data from buffer

// toString()

buf = Buffer.from('Hook Education')

console.log(buf) // < buffer E6 8b 89 E9 92 A9 E6 95 99 E8 82 B2 > 12 bytes

console.log(buf.toString('utf-8', 3, 9)) // Hook teaching obtains the third to ninth bytes, and a Chinese character accounts for 3 bytes- slice: intercept buffer

// slice

buf = Buffer.from('Hook Education')

let b1 = buf.slice(-3)

console.log(b1) // <Buffer e8 82 b2>

console.log(b1.toString()); // Nurture- indexOf: find data in} buffer

// indexOf

buf = Buffer.from('ace Love front-end, love hook education, love everyone')

console.log(buf); // <Buffer 61 63 65 e7 88 b1 e5 89 8d e7 ab af ef bc 8c e7 88 b1 e6 8b 89 e9 92 a9 e6 95 99 e8 82 b2 ef bc 8c e7 88 b1 e5 a4 a7 e5 ae b6>

console.log(buf.indexOf('love', 4)); // 15- Copy: copy the data in} buffer

// copy

let b1 = Buffer.alloc(6)

let b2 = Buffer.from('Draw hook')

b2.copy(b1, 3, 3, 6) // The following parameters are read from the third byte and then from the third byte

console.log(b1.toString()); // hook

console.log(b2.toString()); // Draw hook(3) Buffer static method

- concat: concatenate multiple buffers into a new buffer

let b1 = Buffer.from('Draw hook')

let b2 = Buffer.from('education')

let b = Buffer.concat([b1, b2], 9)

console.log(b); // <Buffer e6 8b 89 e9 92 a9 e6 95 99>

console.log(b.toString()); // Hook Teaching- isBuffer: determines whether the current data is buffer

let b1 = '123' console.log(Buffer.isBuffer(b1)); // false let b2 = Buffer.alloc(123) console.log(Buffer.isBuffer(b2)); // true

(4) A custom split for Buffer

Buffer.prototype.split = function (sep) {

  let len = Buffer.from(sep).length

  let ret = []

  let start = 0

  let offset = 0

  // The parentheses matter: assign the index first, then compare it with -1

  while ((offset = this.indexOf(sep, start)) !== -1) {

    ret.push(this.slice(start, offset))

    start = offset + len

  }

  ret.push(this.slice(start))

  return ret

}

let buf = Buffer.from('ace Eat steamed bread and noodles. I eat delicious food')

let bufArr = buf.split('eat')

console.log(bufArr.map(item => item.toString())); // [ 'ace Eat steamed bread and noodles. I ', ' delicious food' ]

3. FS module

- Two concepts recur throughout fs: Buffer is the buffer and Stream is the data stream

- fs is a built-in core module that provides APIs for file system operations

- fs basic operation classes

- fs common APIs

- Permission bits, flags, file descriptors

- Permission bits: the operation permissions a user has on a file

- A flag in Node.js indicates how a file is to be operated on

- Common flags

- r: readable

- w: writable

- s: synchronous

- +: also perform the opposite operation (r+ can also write, w+ can also read)

- x: exclusive operation

- a: append

- fd is the identifier the operating system assigns to an open file

fs introduction summary

- fs is a built-in core module in Node.js

- At the code level, fs is divided into basic operation classes and common APIs

- Permission bits, flags, file descriptors

4. Opening and closing files

- fs.open(path, flags, [mode], [callback(err, fd)])

- path: the file path

- flags can be one of the following values

- 'r' - open the file for reading

- 'r+' - open the file for reading and writing

- 'rs' - open the file for reading in synchronous mode, instructing the operating system to bypass the local file system cache

- 'rs+' - open the file for reading and writing in synchronous mode

- Note: this does not turn fs.open into a blocking call. If you want a synchronous call, use fs.openSync().

- 'w' - open the file for writing; the file is created if it does not exist

- 'wx' - like 'w', but fails if the file already exists

- 'w+' - open the file for reading and writing; the file is created if it does not exist

- 'wx+' - like 'w+', but fails if the file already exists

- 'a' - open the file for appending; the file is created if it does not exist

- 'ax' - like 'a', but fails if the file already exists

- 'a+' - open the file for reading and appending; the file is created if it does not exist

- 'ax+' - like 'a+', but fails if the file already exists

- mode sets the file's permissions when the file is created; the default is 0o666

- The callback receives an error err and a file descriptor fd
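A few of the flags above can be compared in a small sketch. It assumes the synchronous variants (fs.openSync and friends) purely to keep the example short, and works in a temporary directory; the variable names are hypothetical.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')

// Work in a fresh temporary directory so nothing real is touched.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'flags-demo-'))
const file = path.join(dir, 'data.txt')

// 'w' creates the file (or truncates it if it already exists).
let fd = fs.openSync(file, 'w')
fs.writeSync(fd, 'hello')
fs.closeSync(fd)

// 'a' appends to the end instead of truncating.
fd = fs.openSync(file, 'a')
fs.writeSync(fd, ' world')
fs.closeSync(fd)

// 'wx' refuses to open a file that already exists.
let exclusiveError = null
try {
  fs.openSync(file, 'wx')
} catch (err) {
  exclusiveError = err.code // 'EEXIST'
}

const content = fs.readFileSync(file, 'utf-8')
console.log(content, exclusiveError) // hello world EEXIST
```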

const fs = require('fs')

const path = require('path')

// open

fs.open(path.resolve('data.txt'), 'r', (err, fd) => {

console.log(fd);

})

// 3

// close

fs.open('data.txt', 'r', (err, fd) => {

console.log(fd);

fs.close(fd, err => {

console.log('Closed successfully');

})

})

// 3

// Closed successfully

5. Large file read / write operation

To copy the contents of file A to file B, the contents must be held temporarily in memory. Reading and writing in chunks through a Buffer avoids the memory overflow that copying the whole file at once can cause.
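The read-a-chunk, write-a-chunk idea can be sketched synchronously as follows. This is an illustration only, not the callback-based version these notes build: `copyInChunks` and the 4-byte chunk size are hypothetical choices, and the files live in a temporary directory.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')

// Copy src to dest through a small fixed-size buffer (hypothetical helper).
function copyInChunks (src, dest, chunkSize = 4) {
  const buf = Buffer.alloc(chunkSize)
  const rfd = fs.openSync(src, 'r')
  const wfd = fs.openSync(dest, 'w')
  try {
    let bytesRead
    // readSync returns 0 at end of file; a null position means
    // "continue from the current file position".
    while ((bytesRead = fs.readSync(rfd, buf, 0, chunkSize, null)) > 0) {
      // Write only the bytes that were actually read in this round.
      fs.writeSync(wfd, buf, 0, bytesRead)
    }
  } finally {
    fs.closeSync(rfd)
    fs.closeSync(wfd)
  }
}

const tmpDir = fs.mkdtempSync(path.join(os.tmpdir(), 'copy-demo-'))
const srcFile = path.join(tmpDir, 'a.txt')
const destFile = path.join(tmpDir, 'b.txt')
fs.writeFileSync(srcFile, '1234567890')

copyInChunks(srcFile, destFile)
const copied = fs.readFileSync(destFile, 'utf-8')
console.log(copied) // 1234567890
```

Only `chunkSize` bytes are held in memory at any time, whatever the size of the source file.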

const fs = require('fs')

// Read: the so-called read operation is to write data from the disk file to the buffer

let buff = Buffer.alloc(10)

/**

* fs.read parameters:

* fd (rfd here) File descriptor that identifies the currently open file

* buf The buffer that receives the data

* offset The position in buf at which writing starts

* length The number of bytes to read

* position The position in the file at which reading starts

*/

fs.open('data.txt', 'r', (err, rfd) => {

console.log(rfd); // 3

fs.read(rfd, buff, 0, 4, 3, (err, readBytes, data) => {

console.log(readBytes); // 4

console.log(data); // <Buffer 34 35 36 37 00 00 00 00 00 00>

console.log(data.toString()); // 4567

})

})

const buf = Buffer.from('1234567890')

fs.open('b1.txt', 'w', (err, wfd) => {

/**

* wfd File identifier

* buf Cache for storing data

* 1 The offset in the buffer from which data is taken

* 4 The number of bytes to write

* 0 The position in the file at which writing starts, usually 0

*/

fs.write(wfd, buf, 1, 4, 0, (err, written, buffer) => {

console.log(written, '----'); // 4 ----

fs.close(wfd) // Remember to close the file so that its descriptor is released

})

})

// This generates a b1.txt file with the content 2345

6. Custom implementation of file copy

const fs = require('fs')

/**

* 01 Open the a file and save the data in the buffer using read for temporary storage

* 02 Open the b file and use write to write the data in the buffer to the b file

*/

let buf = Buffer.alloc(10)

// 01 open the specified file and copy 10 bytes

fs.open('a.txt', 'r', (err, rfd) => {

// 03 open the B file for data writing

fs.open('b.txt', 'w', (err, wfd) => {

// 02 read data from the opened file

fs.read(rfd, buf, 0, 10, 0, (err, readBytes) => {

// 04 write the data in buffer to b.txt

fs.write(wfd, buf, 0, 10, 0, (err, writter) =>{

console.log('Write successful');

})

})

})

})

// 02 full copy of data

// fs.open('a.txt', 'r', (err, rfd) => {

// fs.open('b.txt', 'w', (err, wfd) => {

// fs.read(rfd, buf, 0, 10, 0, (err, readBytes) => {

// fs.write(wfd, buf, 0, 10, 0, (err, writter) =>{

// fs.read(rfd, buf, 0, 5, 10, (err, readBytes) => {

// fs.write(wfd, buf, 0, 5, 10, (err,writter) => {

// console.log('write succeeded ')

// })

// })

// })

// })

// })

// })

const BUFFER_SIZE = buf.length

let readOffset = 0

fs.open('a.txt', 'r', (err, rfd) => {

fs.open('b.txt', 'w', (err, wfd) => {

function next() {

fs.read(rfd, buf, 0, BUFFER_SIZE, readOffset, (err, readBytes) => {

if (!readBytes) {

// If the condition is true, the content has been read

fs.close(rfd, () => {})

fs.close(wfd, () => {})

console.log('copy complete')

return

}

readOffset += readBytes

fs.write(wfd, buf, 0, readBytes, (err, writter) => {

next()

})

})

}

next()

})

})

// Generates a b.txt file with the content of a.txt

7. Directory operation APIs in fs

Common directory operation API

- access: judge whether the file or directory has operation permission

const fs = require('fs')

// 1, access

fs.access('a.txt', (err) => {

if (err) {

console.log(err);

} else {

console.log('Have operation authority');

}

})

// Have operation authority

- stat: gets directory and file information

// 2, stat

fs.stat('a.txt', (err, statObj) => {

console.log(statObj.size); // 108

console.log(statObj.isFile()); // true

console.log(statObj.isDirectory()); // false

})

- mkdir: creates a directory

// 3, mkdir recursive operation

fs.mkdir('a/b/c', {recursive: true}, (err) => {

if (!err) {

console.log('Created successfully');

} else {

console.log(err);

}

})

- rmdir: deletes a directory

// 4, rmdir

fs.rmdir('a', {recursive: true}, (err) => {

if (!err) {

console.log('Delete succeeded');

} else {

console.log(err);

}

})

- readdir: reads the contents of a directory

// 5, readdir

fs.readdir('a', (err, files) => {

console.log(files); // [ 'a.txt', 'b' ]

})

- unlink: deletes the specified file

// 6, unlink

fs.unlink('a/a.txt', (err) => {

if (!err) {

console.log('Delete succeeded');

} else {

console.log(err);

}

})

8. Synchronous implementation of directory creation

const fs = require('fs')

const path = require('path')

/**

* 01 Callers pass in a path such as a/b/c, with the parts joined by the path separator

* 02 Split the path on the separator and keep the parts in an array: ['a', 'b', 'c']

* 03 Traverse the array, joining each item onto the items before it with the separator

* 04 Check whether the joined path is accessible. If it is, it already exists; otherwise it needs to be created

*/

function makeDirSync (dirPath) {

let items = dirPath.split(path.sep)

for(let i = 1; i <= items.length; i++) {

let dir = items.slice(0, i).join(path.sep)

try {

fs.accessSync(dir)

} catch (err) {

fs.mkdirSync(dir)

}

}

}

makeDirSync('a\\b\\c') // path.sep is \ on Windows

9. Asynchronous implementation of directory creation

const fs = require('fs')

const path = require('path')

function mkDir (dirPath, cb) {

let parts = dirPath.split('/')

let index = 1

function next () {

if (index > parts.length) return cb && cb()

let current = parts.slice(0, index++).join('/')

fs.access(current, (err) => {

if (err) {

fs.mkdir(current, next)

} else {

next()

}

})

}

next()

}

mkDir('a/b/c', () => {

console.log('Created successfully');

})

10. Asynchronous implementation of directory deletion

const fs = require('fs')

const path = require('path')

/**

* Requirements: customize a function, accept a path, and then delete it

* 01 Judge whether the current incoming path is a file, and directly delete the current file

* 02 If a directory is currently passed in, we need to continue reading the contents of the directory before deleting it

* 03 The deletion behavior is defined as a function, and then reused recursively

* 04 Splices the current name into a path that can be used when deleting

*/

function myRmdir (dirPath, cb) {

// Determine the type of current dirPath

fs.stat(dirPath, (err, statObj) => {

if (statObj.isDirectory()) {

// Directory -- > continue reading

fs.readdir(dirPath, (err, files) => {

let dirs = files.map(item => {

return path.join(dirPath, item)

})

let index = 0

function next () {

if (index === dirs.length) return fs.rmdir(dirPath, cb)

let current = dirs[index++]

myRmdir(current, next)

}

next ()

})

} else {

// File -- > delete directly

fs.unlink(dirPath, cb)

}

})

}

myRmdir('tmp', () => {

console.log('Delete succeeded');

})

11. The evolution of modularization

(1) Why does front-end development need modularization?

Component-based front-end development

a. Common problems in traditional development

- Naming conflicts and pollution

- Redundant code and unnecessary requests

- Complex dependencies between files

b. Projects are hard to maintain and code is inconvenient to reuse

c. A module is a small, focused code fragment that is easy to maintain

d. Early on, functions, objects, and self-executing functions were used to split code into blocks

(2) Common modular specifications

- Commonjs specification

- AMD specification

- CMD specification

- ES modules specification

(3) Modular specification

- Modularization is an important part of the front-end towards engineering

- There was no modular specification at the early JavaScript language level

- Commonjs, AMD and CMD are modular specifications

- Modularity is incorporated into the standard specification in ES6

- At present, the common specifications are CommonJS and ESM

12. CommonJS specification

The CommonJS specification is mainly applied to Node.js

CommonJS is a language-level specification

- Module reference

- Module definition

- Module identification

(1) Node.js and CommonJS

- Any file is a module with its own independent scope

- Import other modules using require

- Pass the module ID into require to locate the target module

(2) module attributes

- Any js file is a module, and the module object can be used in it directly

- id: returns the module identifier, usually an absolute path

- filename: returns the absolute path of the file module

- loaded: returns a Boolean value indicating whether the module has finished loading

- parent: returns the module object that required the current module

- children: returns an array of the other modules required by the current module

- exports: returns the content that the current module exposes

- paths: returns an array of the node_modules locations in different directories

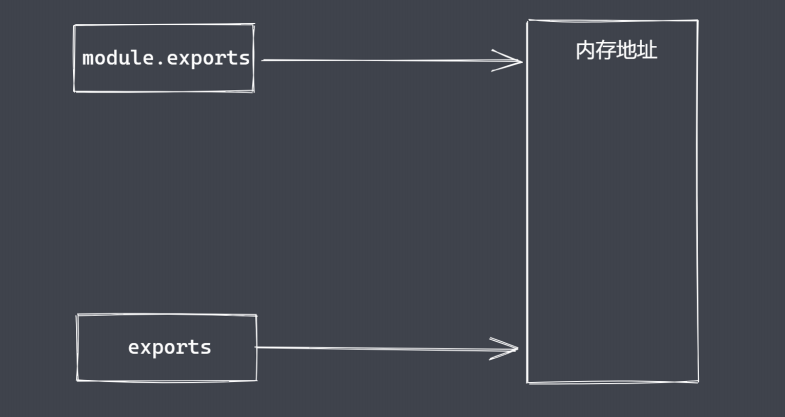

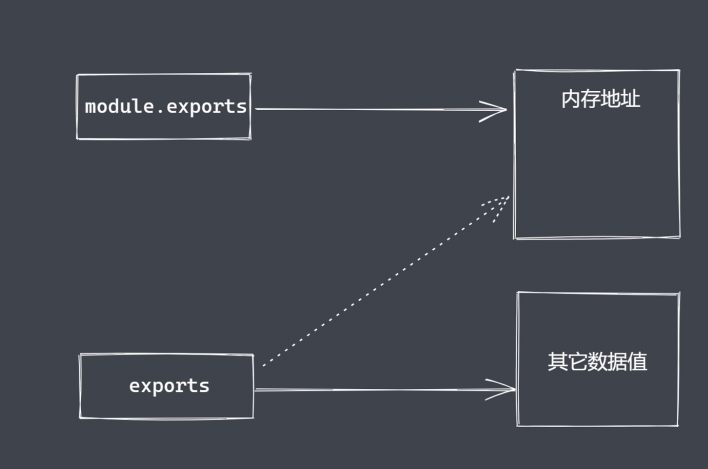

(3) What is the difference between module.exports and exports?

(4) require attribute

- The basic function is to read in and execute a module file

- resolve: returns the absolute path of the module file

- extensions: performs parsing operations based on different suffixes

- main: returns the main module object

(5) CommonJS specification

- The CommonJS specification makes up for the JS language's lack of modularity

- CommonJS is a language-level specification, currently used mainly in Node.js

- CommonJS divides modularity into three parts: reference, definition, and identifier

- module can be used directly in any module and contains the module's information

- require receives an identifier and loads the target module

- exports and module.exports can both export module data

- The CommonJS specification defines module loading as synchronous

13. Node.js and CommonJS

- Use module.exports and require to implement module import and export

// File 01.js

// 1, Import

let obj = require('./m')

console.log(obj); // { age: 20, agefn: [Function: ageFn] }

// File m.js

// Module import and export

const age = 20

const ageFn = (x, y) =>{

return x + y

}

module.exports = {

age: age,

agefn:ageFn

}

- The module attribute and commonly used information on it

// File 01.js

// 2, module import

let obj = require('./m')

console.log(obj);

// File m.js

// 2, module export

module.exports = 111

console.log(module);

// Module {

// id: '.',

// path: 'F:\\lagou\\05-01\\5-1 - course materials\\Code\\04FS\\05Modules',

// exports: 111,

// parent: null,

// filename: 'F:\\lagou\\05-01\\5-1 - course materials\\Code\\04FS\\05Modules\\m.js',

// loaded: false,

// children: [],

// paths: [

// 'F:\\lagou\\05-01\\5-1 - course materials\\Code\\04FS\\05Modules\\node_modules',

// 'F:\\lagou\\05-01\\5-1 - course materials\\Code\\04FS\\node_modules',

// 'F:\\lagou\\05-01\\5-1 - course materials\\Code\\node_modules',

// 'F:\\lagou\\05-01\\5-1 - course materials\\node_modules',

// 'F:\\lagou\\05-01\\node_modules',

// 'F:\\lagou\\node_modules',

// 'F:\\node_modules'

// ]

// }

- Exporting data with exports, and how it differs from module.exports (you cannot assign a new value to exports directly)

// File 01.js

// 3, exports

let obj = require('./m')

console.log(obj); // { name: 'ace' }

// File m.js

// 3, exports

exports.name = 'ace'

- Module synchronous loading under CommonJS specification

// File 01.js

// 4, Synchronous loading

let obj = require('./m')

console.log('01.js code was executed')

// m.js was loaded and imported

// 01.js code was executed

// Checking require.main (a separate run of 01.js)

let obj = require('./m')

console.log(require.main == module); // true

// false

// true

// File m.js

// 4, Synchronous loading

let name = 'lg'

let iTime = new Date()

while(new Date() - iTime < 4000) {} // block for 4 seconds to show that loading is synchronous

module.exports = name

console.log('m.js was loaded and imported');

console.log(require.main == module); // false

14. Module classification and loading process

(1) Module classification

- Built in module

- File module

(2) Module loading speed

- Core modules: compiled into the Node.js binary when the Node source code is built, so they load fastest

- File module: dynamically loaded when the code is running

(3) Loading process

- Path analysis: determine the module location according to the identifier

- File location: determine the specific files and file types in the target module

- Compilation and execution: complete the compilation and execution of files in a corresponding way

(4) Identifier of path analysis

- Path identifier

- Non path identifier (common in core modules)

(5) File location

(6) compilation and execution

- Compile and execute a specific type of file in the corresponding way

- Create a new object, load it according to the path, and complete the compilation and execution

(7) Compilation and execution of JS files

(8) JSON file compilation execution

The content that was read is parsed with JSON.parse()

(9) cache optimization principle

- Increase module loading speed

- If the current module does not exist, it will go through a complete loading process

- After the module is loaded, the path is used as the index for caching
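The caching behaviour can be observed with a throwaway module. This sketch assumes a temporary file named counter.js and a hypothetical global counter `__loadCount`; require.resolve and require.cache are part of Node's CommonJS require.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')

// Write a throwaway module that counts how often its body runs.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'cache-demo-'))
const file = path.join(dir, 'counter.js')
fs.writeFileSync(
  file,
  'global.__loadCount = (global.__loadCount || 0) + 1\n' +
  'module.exports = { loaded: true }\n'
)

const first = require(file)  // full load: locate, compile, execute
const second = require(file) // served from the cache, body not run again

// The cache is keyed by the resolved absolute path of the module.
const resolved = require.resolve(file)
const isCached = resolved in require.cache
const loadCount = global.__loadCount

console.log(first === second, isCached, loadCount) // true true 1
```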

(10) Load process summary

- Path analysis: determine the location of the target module

- File location: determine the specific files in the target module

- Compilation execution: compile the module content and return the available exports object

15. Module loading source code analysis

Use the VS Code debugger to step through the source code behind require

16. The built-in vm module

Creates a separate sandbox environment

// text.txt

var age = 19
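As a minimal sketch of the sandbox idea, the following uses vm.runInNewContext, which is a different entry point from the vm.runInThisContext call used in these notes. The `sandbox` object and its `base` property are hypothetical names.

```javascript
const vm = require('vm')

const age = 33 // a local variable in the calling module

// The sandbox object becomes the global object of the new context.
const sandbox = { base: 10 }
const result = vm.runInNewContext('var age = 19; base + age', sandbox)

console.log(result)      // 29
console.log(sandbox.age) // 19: the script's var landed on the sandbox
console.log(age)         // 33: the outer variable was not touched

// Code in the new context cannot see the caller's locals or Node globals.
const seesProcess = vm.runInNewContext('typeof process', {})
console.log(seesProcess) // undefined
```

The script sees only what the sandbox object provides, which is what makes vm useful for running module code in isolation from the caller's scope.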

// vm.js

const fs = require('fs')

const vm = require('vm')

let age = 33

let content = fs.readFileSync('text.txt', 'utf-8')

// eval

eval(content) // throws a SyntaxError here because age is already declared with let above; without that declaration, age would be 19

console.log(age);

// new Function

console.log(age); // 33

let fn = new Function('age', 'return age + 1')

console.log(fn(age)); // 34

// vm

vm.runInThisContext(content) // runs in the global context and cannot see the local age (33); it defines a global age of 19

age = 33

vm.runInThisContext('age += 10') // 29: the global age, 19 + 10

console.log(age)

17. Simulated implementation of module loading

Core logic

- path analysis

- Cache priority

- File location

- Compilation execution

// v.json file

{

"age":18

}

// Or v.js

const age = 18

module.exports = { age }

// Simulation module loading implementation

const fs = require('fs')

const path = require('path')

const vm = require('vm')

function Module (id) {

this.id = id

this.exports = {}

console.log(1111)

}

Module._resolveFilename = function (filename) {

// Convert filename to absolute Path using Path

let absPath = path.resolve(__dirname, filename)

// Judge whether the content corresponding to the current path exists ()

if (fs.existsSync(absPath)) {

// If the condition is true, the content corresponding to absPath exists

return absPath

} else {

// File location

let suffix = Object.keys(Module._extensions)

for(var i=0; i<suffix.length; i++) {

let newPath = absPath + suffix[i]

if (fs.existsSync(newPath)) {

return newPath

}

}

}

throw new Error(`${filename} does not exist`)

}

Module._extensions = {

'.js'(module) {

// read

let content = fs.readFileSync(module.id, 'utf-8')

// packing

content = Module.wrapper[0] + content + Module.wrapper[1]

// VM

let compileFn = vm.runInThisContext(content)

// Prepare values for parameters

let exports = module.exports

let dirname = path.dirname(module.id)

let filename = module.id

// call

compileFn.call(exports, exports, myRequire, module, filename, dirname)

},

'.json'(module) {

let content = JSON.parse(fs.readFileSync(module.id, 'utf-8'))

module.exports = content

}

}

Module.wrapper = [

"(function (exports, require, module, __filename, __dirname) {",

"})"

]

Module._cache = {}

Module.prototype.load = function () {

let extname = path.extname(this.id)

Module._extensions[extname](this)

}

function myRequire (filename) {

// 1 absolute path

let mPath = Module._resolveFilename(filename)

// 2 cache priority

let cacheModule = Module._cache[mPath]

if (cacheModule) return cacheModule.exports

// 3 create an empty object and load the target module

let module = new Module(mPath)

// 4 cache loaded modules

Module._cache[mPath] = module

// 5 execute loading (compile execution)

module.load()

// 6 return data

return module.exports

}

let obj = myRequire('./v')

console.log(obj.age)
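The wrap-and-compile step is the core of the loader above, so it may help to see it in isolation. The following is a minimal sketch, not the loader itself: the module source string, the `fakeModule` object, and the `/virtual` paths are hypothetical stand-ins, and no file is read from disk.

```javascript
const vm = require('vm')

// Hypothetical module source, used only for this illustration
const source = 'const age = 18\nmodule.exports = { age }'

// Wrap the source so that it becomes a function expression
const wrapped =
  '(function (exports, require, module, __filename, __dirname) {' +
  source +
  '\n})'

// runInThisContext evaluates the string and returns the resulting function
const compiledFn = vm.runInThisContext(wrapped)

// A plain object stands in for a Module instance (assumption for this sketch)
const fakeModule = { id: '/virtual/demo.js', exports: {} }

// Call the function with the five module-scope arguments
compiledFn.call(
  fakeModule.exports,
  fakeModule.exports,
  require,
  fakeModule,
  fakeModule.id,
  '/virtual'
)

console.log(fakeModule.exports.age) // 18
```

Because the module body runs inside a function, names such as `module` and `exports` are ordinary parameters, which is why assigning to `module.exports` is visible to the caller afterwards.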

18. Events of core module

(1) Unified event management through EventEmitter class

(2) events and EventEmitter

- Node.js is an event-driven asynchronous architecture with a built-in events module

- The events module provides the EventEmitter class

- Many built-in core modules in Node.js inherit from EventEmitter
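As a quick check of the last point, the sketch below tests a few built-in objects with `instanceof`. The choice of a readable stream, a TCP server, and `process` is only illustrative; other core modules could be checked the same way.

```javascript
const EventEmitter = require('events')
const { Readable } = require('stream')
const net = require('net')

// A readable stream with a no-op read, created only for the check
const stream = new Readable({ read () {} })

// A TCP server that is never started, so it does not keep the process alive
const server = net.createServer()

console.log(stream instanceof EventEmitter) // true
console.log(server instanceof EventEmitter) // true
console.log(process instanceof EventEmitter) // true
```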

(3) EventEmitter common API

- on: adds a callback function that is called whenever the event is triggered

- emit: triggers the event and calls each listener synchronously, in registration order

const EventEmitter = require('events')

const ev = new EventEmitter()

// on

ev.on('Event 1', () => {

console.log('Event 1 was executed');

})

ev.on('Event 1', () => {

console.log('Event 1 was executed---2');

})

// emit

// ev.emit('Event 1')

// ev.emit('Event 1')

// Event 1 was executed

// Event 1 was executed---2

// Event 1 was executed

// Event 1 was executed---2

- once: adds a callback function that is called only the first time the event is triggered after registration

// once

ev.once('Event 1', () => {

console.log('Event 1 was executed');

})

ev.once('Event 1', () => {

console.log('Event 1 was executed---2');

})

// emit

ev.emit('Event 1')

ev.emit('Event 1')

// Event 1 was executed

// Event 1 was executed---2

- off: removes a specific listener

// off

let cbFn = (...args) => {

console.log(args); // [ 1, 2, 3 ]

}

ev.on('Event 1', cbFn)

// ev.off('event 1', cbFn)

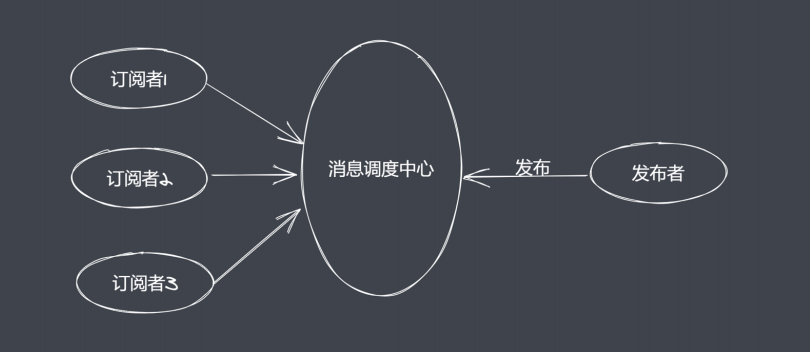

ev.emit('Event 1', 1, 2, 3)18. Publish and subscribe

(1) Defines a one-to-many relationship between objects

(2) What problems can publish and subscribe solve?

(3) Elements of publish and subscribe

- A cache queue that stores subscriber information

- The ability to add and remove subscriptions

- Notification of all subscribers when the state changes

(4) Publish and subscribe has a dispatch center between publishers and subscribers

(5) When the state changes, the publisher does not notify subscribers itself; the dispatch center does

(6) EventEmitter simulation

function MyEvent () {

// Prepare a data structure for caching subscriber information

this._events = Object.create(null)

}

MyEvent.prototype.on = function (type, callback) {

// If listeners already exist for this event, append to the list; otherwise create it

if (this._events[type]) {

this._events[type].push(callback)

} else {

this._events[type] = [callback]

}

}

MyEvent.prototype.emit = function (type, ...args) {

// Guard against event types that have no listeners registered

if (this._events && this._events[type] && this._events[type].length) {

this._events[type].forEach((callback) => {

callback.call(this, ...args)

})

}

}

MyEvent.prototype.off = function (type, callback) {

// If listeners exist for this type, remove the given one (item.link matches wrappers created by once)

if (this._events && this._events[type]) {

this._events[type] = this._events[type].filter((item) => {

return item !== callback && item.link !== callback

})

}

}

MyEvent.prototype.once = function (type, callback) {

let foo = function (...args) {

callback.call(this, ...args)

this.off(type, foo)

}

foo.link = callback

this.on(type, foo)

}

let ev = new MyEvent()

let fn = function (...data) {

console.log('Event 1 was executed', data);

}

// ev.on('Event 1', fn)

// ev.on('Event 1', () => {

// console.log('Event 1 --- 2');

// })

// ev.emit('Event 1', 1, 2)

// ev.emit('Event 1', 1, 2)

// Event 1 was executed [ 1, 2 ]

// Event 1 --- 2

// Event 1 was executed [ 1, 2 ]

// Event 1 --- 2

// ev.on('Event 1', fn)

// ev.emit('Event 1', 'before')

// ev.off('Event 1', fn)

// ev.emit('Event 1', 'after')

// Event 1 was executed [ 'before' ]

ev.once('Event 1', fn)

ev.off('Event 1', fn)

// ev.emit('Event 1', 'before')

ev.emit('Event 1', 'after')

// No output: off() matches the once() wrapper through its link property and removes it before emit runs

19. Event loop in the browser

setTimeout(() => {

console.log('s1');

Promise.resolve().then(() => {

console.log('p1');

})

Promise.resolve().then(() => {

console.log('p2');

})

})

setTimeout(() => {

console.log('s2');

Promise.resolve().then(() => {

console.log('p3');

})

Promise.resolve().then(() => {

console.log('p4');

})

})

// s1 p1 p2 s2 p3 p4

Complete event loop execution sequence

- Execute all synchronous code from top to bottom

- Add the macrotasks and microtasks encountered during execution to their respective queues

- After the synchronous code has run, execute the eligible microtask callbacks

- After the microtask queue is empty, execute the eligible macrotask callbacks one at a time

- Repeat the loop

Note: the microtask queue is checked immediately after each macrotask is executed
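The sequence above can be observed by recording each step in an array. This is a small sketch assuming a browser or Node.js 11 or later; the `order` array and the 20 ms reporting timer are illustrative choices, not part of the original examples.

```javascript
const order = []

// Macrotask 1 queues a microtask while it runs
setTimeout(() => {
  order.push('macro 1')
  Promise.resolve().then(() => order.push('micro inside macro 1'))
})

// Macrotask 2 is queued right behind macrotask 1
setTimeout(() => {
  order.push('macro 2')
})

// Microtask queued during the synchronous pass
Promise.resolve().then(() => order.push('micro 1'))

// Synchronous code runs first
order.push('sync')

// Report once everything above has had time to run
setTimeout(() => console.log(order.join(' -> ')), 20)
// sync -> micro 1 -> macro 1 -> micro inside macro 1 -> macro 2
```

The microtask queued inside macro 1 runs before macro 2, even though macro 2 was queued earlier, because the microtask queue is drained after every macrotask.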

setTimeout(() => {

console.log('s1');

Promise.resolve().then(() => {

console.log('p2');

})

Promise.resolve().then(() => {

console.log('p3');

})

})

Promise.resolve().then(() => {

console.log('p1');

setTimeout(() => {

console.log('s2');

})

setTimeout(() => {

console.log('s3');

})

})

// p1 s1 p2 p3 s2 s3

/**

* Execution sequence

* (1) The synchronous code runs from top to bottom. setTimeout is encountered first and its callback is queued as macrotask s1 (its body does not run yet). Next, the Promise callback is queued as microtask p1 (its body does not run yet either). The first pass of synchronous code ends here.

* The microtask queue is then checked and p1 is found.

* Output: p1

* (2) While p1 runs, it registers two timers, which are queued as macrotasks s2 and s3. The macrotask queue is now s1, s2, s3.

* p1 finishes and the microtask queue is empty.

* (3) The loop switches to the macrotask queue. It is first in, first out, so s1 runs first.

* Output: s1

* (4) While s1 runs, it queues microtasks p2 and p3, and then s1 finishes.

* The microtask queue is checked immediately after each macrotask completes.

* Output: p2 p3, after which the microtask queue is empty.

* (5) The loop switches back to the macrotask queue, finds s2 and s3, and runs them in order.

* Output: s2 s3

* Final output: p1 s1 p2 p3 s2 s3

*/

20. Event loop in Node.js

(1) Node.js event loop mechanism

Queue description:

- timers: executes setTimeout and setInterval callbacks

- pending callbacks: executes callbacks for system-level operations, such as TCP and UDP

- idle, prepare: used only internally

- poll: executes I/O related callbacks

- check: executes setImmediate callbacks

- close callbacks: executes the callbacks of close events

(2) Complete Node.js event loop

- Execute the synchronous code and add the different tasks to their corresponding queues

- After all synchronous code has run, execute the eligible microtasks

- After all macrotasks in the timers queue have run, move through the remaining queues in order

Note: the microtask queue is emptied before the loop switches to the next queue. In Node.js 11 and later it is also emptied after each individual macrotask callback, which matches the browser.
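The queue order can be made visible by scheduling work from inside an I/O callback, which runs in the poll queue. The sketch below is one way to do that; using `fs.stat` on the current working directory is just a convenient I/O call chosen for illustration, and the `order` array is a helper introduced here.

```javascript
const fs = require('fs')

const order = []

// The fs.stat callback is an I/O callback, so it runs in the poll queue
fs.stat(process.cwd(), () => {
  order.push('poll: I/O callback')

  // Timers are only reached again on the next loop iteration
  setTimeout(() => {
    order.push('timers: setTimeout')
    console.log(order.join(' -> '))
  }, 0)

  // check comes right after poll in the same iteration
  setImmediate(() => order.push('check: setImmediate'))

  // Microtasks run before the loop switches to the next queue
  Promise.resolve().then(() => order.push('microtask'))
})
// poll: I/O callback -> microtask -> check: setImmediate -> timers: setTimeout
```

Scheduled from the poll queue, setImmediate fires before setTimeout because check is the next queue after poll, while timers has to wait for the following iteration.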

See the next post: Node.js advanced programming [2]