Node.js application scenarios

Front-end engineering

- Bundling: webpack, Vite, esbuild, Parcel

- Minification (uglify): UglifyJS

- Syntax transpilation: Babel, TypeScript

- Tools written in other languages have joined the competition: esbuild (Go), Parcel (Rust), Prisma

- Current status: Node.js is still hard to replace in front-end engineering

Web server applications

- The learning curve is gentle and development efficiency is high

- Runtime performance is close to that of common compiled languages

- Rich community ecosystem and mature toolchain (npm, V8 inspector)

- Advantageous in scenarios that integrate with the front end (server-side rendering, SSR)

- Current status: competition is fierce, but Node.js has its own unique advantages

Electron cross-platform desktop applications

- Commercial applications: VS Code, Slack, Discord, Zoom

- Efficiency tools inside large companies

- Current status: worth considering in most scenarios when choosing a technology

Other application scenarios

- BFF (Backends For Frontends) application: acts as a middle layer that can freely process the back-end interfaces, so that front-end and back-end development are more decoupled

- SSR (Server-Side Rendering) application: the server renders components or pages to HTML strings and then sends them to the browser
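
As a rough illustration of the BFF idea, the sketch below merges two back-end responses into one payload shaped for a page. The functions `fetchUser` and `fetchOrders` are hypothetical stand-ins for real back-end calls, and all field names are assumptions.

```javascript
// Hypothetical back-end calls; a real BFF would issue HTTP or RPC requests here.
async function fetchUser(id) {
  return { id, name: 'Ada', internalFlags: ['beta'] };
}

async function fetchOrders(userId) {
  return [
    { orderId: 1, userId, total: 20 },
    { orderId: 2, userId, total: 35 },
  ];
}

// BFF layer: call both back ends in parallel and return only what the page needs.
async function getProfilePage(userId) {
  const [user, orders] = await Promise.all([fetchUser(userId), fetchOrders(userId)]);
  return {
    name: user.name,
    orderCount: orders.length,
    totalSpent: orders.reduce((sum, order) => sum + order.total, 0),
  };
}
```

The front end then makes a single request and never sees internal fields such as `internalFlags`.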

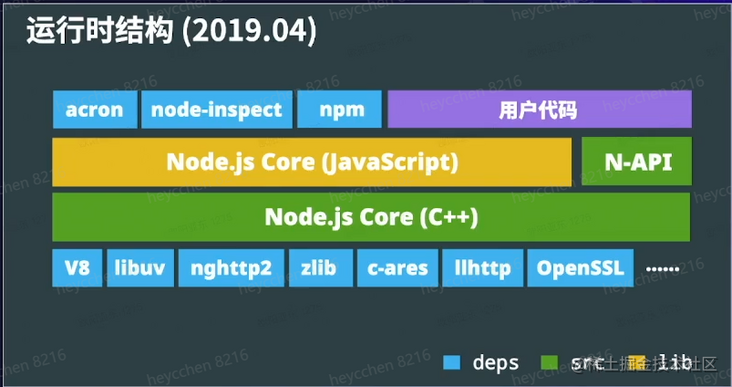

Node.js runtime structure

Node.js composition

- V8: JavaScript Runtime, diagnostic debugging tool (inspector)

- libuv: event loop, system calls

- Node.js running example (taking node-fetch as an example):

Node.js runtime structure features

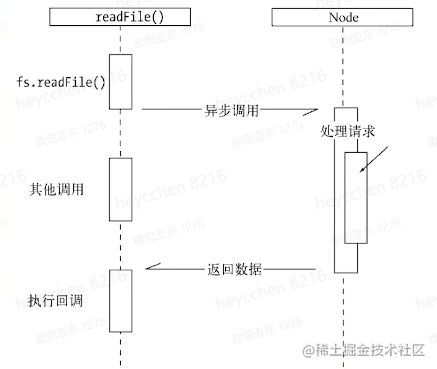

Asynchronous I/O

- When Node.js performs an I/O operation, it resumes the work after the response returns, instead of blocking the thread and occupying extra memory while waiting
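
A small sketch of this behaviour, assuming the promise-based `fs/promises` API: the file operations are started, the main thread keeps going, and the work resumes once the I/O completes. The scratch file name and the `order` array are only there for illustration.

```javascript
const fs = require('fs/promises');
const os = require('os');
const path = require('path');

const order = [];

async function writeThenRead() {
  // Create a scratch directory so the example does not depend on existing files.
  const dir = await fs.mkdtemp(path.join(os.tmpdir(), 'async-io-'));
  const file = path.join(dir, 'demo.txt');
  await fs.writeFile(file, 'hello');
  order.push('read started');
  const text = await fs.readFile(file, 'utf-8');
  order.push('read finished');
  await fs.rm(dir, { recursive: true, force: true });
  return text;
}

const pending = writeThenRead();
// This line runs before any of the file operations complete.
order.push('main thread continues');

pending.then((text) => console.log(order, text));
```

The first entry recorded in `order` is `'main thread continues'`, because the function yields at its first `await` and control returns to the caller.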

Single thread

- JavaScript is single-threaded (there is only one main thread), but the actual Node.js environment is: JavaScript main thread + libuv thread pool + V8 task thread pool + V8 inspector thread

- For example, when reading a file (a common I/O operation), the task is handed to the libuv thread pool, and the JS main thread can carry on with other tasks

- The V8 inspector runs in its own thread: for example, if you write an infinite loop that cannot be debugged under normal circumstances, the V8 inspector thread can still be used to debug it

- Advantages: there is no need to consider multi-threaded state synchronization, so no locks are needed, and system resources can be used more efficiently

- Disadvantages: blocking the main thread has a larger negative impact. Solution: multiple processes or multiple threads
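
One way to apply the multi-thread solution is the built-in `worker_threads` module. The sketch below moves a CPU-heavy calculation off the main thread; the helper name `fibInWorker` and the inline worker source are assumptions made for the example, not part of the original notes.

```javascript
const { Worker } = require('worker_threads');

// Source of the worker, passed as a string so the example fits in one file.
const workerSource = `
  const { parentPort, workerData } = require('worker_threads');
  const fib = (n) => (n < 2 ? n : fib(n - 1) + fib(n - 2));
  parentPort.postMessage(fib(workerData));
`;

// Hypothetical helper: compute fib(n) in a worker thread and resolve with the result.
function fibInWorker(n) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: n });
    worker.once('message', resolve);
    worker.once('error', reject);
  });
}

fibInWorker(20).then((value) => console.log('fib(20) =', value));
// The main thread is free to handle other events while the worker computes.
```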

Cross platform

- Node.js is cross-platform + JS needs no compilation environment + the Web is cross-platform + the diagnostic tools are cross-platform

- Advantages: low development cost and low overall learning cost

Write HTTP Server

Write a simple server so that opening port 3000 of the host in a browser shows hello!nodejs!

```javascript
const http = require('http');

const port = 3000;

const server = http.createServer((req, res) => {
  res.end('hello!nodejs!');
});

server.listen(port, () => {
  console.log('success!', port);
});
```

Parse the JSON data in the user's request and return a JSON response to the browser

```javascript
const http = require('http');

const port = 3000;

const server = http.createServer((req, res) => {
  // Collect the request body chunk by chunk
  const bufs = [];
  req.on('data', (buf) => {
    bufs.push(buf);
  });
  req.on('end', () => {
    const buf = Buffer.concat(bufs).toString('utf-8');
    let msg = 'hello!';
    try {
      const ret = JSON.parse(buf);
      msg = ret.msg;
    } catch (err) {
      // The request data is invalid; it is not handled for now, so msg keeps its default value
    }

    // Build the response
    const responseJson = {
      msg: `receive : ${msg}`,
    };
    // Set Content-Type in the response header
    res.setHeader('Content-Type', 'application/json');
    res.end(JSON.stringify(responseJson));
  });
});

server.listen(port, () => {
  console.log('success!', port);
});
```

Build a client that can send POST requests to the server

```javascript
const http = require('http');

const port = 3000;

const body = JSON.stringify({
  msg: 'Hello from my client',
});

const req = http.request(`http://127.0.0.1:${port}`, {
  method: 'POST',
  headers: {
    'Content-Type': 'application/json',
  },
}, (res) => {
  // After receiving the response, parse it as JSON and print it to the console
  const bufs = [];
  res.on('data', (buf) => {
    bufs.push(buf);
  });
  res.on('end', () => {
    const buf = Buffer.concat(bufs).toString('utf-8');
    // Use a separate name so the outer res is not shadowed
    const json = JSON.parse(buf);
    console.log('json.msg is : ', json.msg);
  });
});

// Send the request
req.end(body);
```

Rewrite these two examples with promise + async/await

```javascript
const http = require('http');

const port = 3000;

const server = http.createServer(async (req, res) => {
  let msg;
  try {
    // Receive the body from the client
    msg = await new Promise((resolve, reject) => {
      const bufs = [];
      req.on('data', (buf) => {
        bufs.push(buf);
      });
      req.on('error', (err) => {
        reject(err);
      });
      req.on('end', () => {
        const buf = Buffer.concat(bufs).toString('utf-8');
        let msg = 'hello!nodejs!';
        try {
          const ret = JSON.parse(buf);
          msg = ret.msg;
        } catch (err) {
          // Invalid JSON: keep the default message
        }
        resolve(msg);
      });
    });
  } catch (err) {
    // Handle the rejection here so it does not become an unhandled rejection
    res.statusCode = 400;
    res.end();
    return;
  }

  // Response
  const responseJson = {
    msg: `receive : ${msg}`,
  };
  res.setHeader('Content-Type', 'application/json');
  res.end(JSON.stringify(responseJson));
});

server.listen(port, () => {
  console.log('success!', port);
});
```

Build a server that can read static resources on the server

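```javascript
const http = require('http');
const fs = require('fs');
const url = require('url');
const path = require('path');

// Folder that holds the static files
const folderPath = path.resolve(__dirname, './static_server');

const server = http.createServer((req, res) => {
  // Expected request: http://127.0.0.1:3000/index.html?abc=10
  const info = url.parse(req.url);

  // Use pathname (not path) so the query string is not part of the file name
  const filepath = path.resolve(folderPath, './' + info.pathname);

  // Reject paths that escape the static folder (for example via "..")
  if (!filepath.startsWith(folderPath + path.sep)) {
    res.statusCode = 403;
    res.end('forbidden');
    return;
  }

  // Stream API: pipe the file to the response instead of buffering it in memory
  const filestream = fs.createReadStream(filepath);
  filestream.on('error', () => {
    res.statusCode = 404;
    res.end('not found');
  });
  filestream.pipe(res);
});

const port = 3000;

server.listen(port, () => {
  console.log('listening on : ', port);
});
```

- Compared with this example, a high-performance, reliable static file server also needs caching, acceleration, and distributed caching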
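
The caching mentioned above can start with HTTP conditional requests. The following is a minimal sketch, not a complete cache layer: `makeEtag` and `cacheDecision` are hypothetical helpers, and the `max-age` value is an arbitrary assumption.

```javascript
// Build a weak validator from the file size and modification time.
function makeEtag(stat) {
  return `W/"${stat.size.toString(16)}-${Math.floor(stat.mtimeMs).toString(16)}"`;
}

// Decide between a full response and "304 Not Modified".
// requestHeaders is req.headers (Node lower-cases header names);
// stat is the result of fs.stat for the requested file.
function cacheDecision(requestHeaders, stat) {
  const etag = makeEtag(stat);
  if (requestHeaders['if-none-match'] === etag) {
    return { status: 304, headers: { ETag: etag } };
  }
  return {
    status: 200,
    headers: { ETag: etag, 'Cache-Control': 'public, max-age=60' },
  };
}
```

A static server would call `cacheDecision(req.headers, stat)` before opening the read stream and skip the stream entirely when the status is 304.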

Characteristics of SSR (server-side rendering)

- Compared with a traditional HTML template engine, it avoids writing the same code twice

- Compared with an SPA (single-page application), first-screen rendering is faster and it is friendlier to SEO (Search Engine Optimization)

- Disadvantages: QPS (queries per second) is generally low, and server-side rendering has to be taken into account when writing the front-end code

Write a React SSR HTTP server

```javascript
const React = require('react');
const ReactDOMServer = require('react-dom/server');
const http = require('http');

const App = (props) => {
  // Use React.createElement instead of JSX so that no compile step is needed
  return React.createElement('div', {}, props.children || 'Hello!');
};

const port = 3000;

const server = http.createServer((req, res) => {
  res.end(`
    <!DOCTYPE html>
    <html lang="en">
      <head>
        <meta charset="UTF-8">
        <meta http-equiv="X-UA-Compatible" content="IE=edge">
        <meta name="viewport" content="width=device-width, initial-scale=1.0">
        <title>my application</title>
      </head>
      <body>
        ${ReactDOMServer.renderToString(
          React.createElement(App, {}, 'my_content')
        )}
        <script>
          init......
        </script>
      </body>
    </html>
  `);
});

server.listen(port, () => {
  console.log('success!', port);
});
```

SSR difficulties:

- Need to process packaged code

- You need to think about the logic of front-end code running on the server

- Remove side effects that are meaningless to the server or reset the environment
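
A common way to handle the last point is to guard browser-only code with an environment check. This is a minimal sketch; `getViewportWidth` and `runInBrowser` are hypothetical helper names, and the fallback width is an assumption.

```javascript
// True only when browser globals exist; false when rendering on the server.
const isBrowser = typeof window !== 'undefined' && typeof document !== 'undefined';

// Hypothetical helper: read the viewport width, with a fallback for the server.
function getViewportWidth(fallback = 1024) {
  return isBrowser ? window.innerWidth : fallback;
}

// Hypothetical helper: run a browser-only side effect and skip it on the server.
// Returns whether the effect actually ran.
function runInBrowser(effect) {
  if (!isBrowser) return false;
  effect();
  return true;
}
```

Under Node.js both helpers take the server branch, so component code that calls them can run unchanged during server-side rendering.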

HTTP Server - Debug

- When running the JS file, pass the --inspect flag before the file name, then open the debugging address in the browser to view the JSON information and jump to the front-end debugging interface. Taking static_file_server as an example, open 127.0.0.1:9229/json

- In a real running environment, breakpoint debugging is dangerous because a paused process stops serving requests. You can use a logpoint instead

- Memory panel: you can take a snapshot to view memory usage in the JavaScript main thread

- Profile panel: you can record a profile to see what the CPU was doing over a period of time and which functions were called. For example, if an application is stuck in an infinite loop, the profile shows which code is running during the loop

- You can also observe the code performance through the above data to judge whether the function running time meets the expectation
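
A profile like the one recorded in the Profile panel can also be captured from code with Node's built-in `inspector` module. The sketch below is one possible way to do it; `post`, `profileSync`, and `busy` are hypothetical helper names.

```javascript
const inspector = require('inspector');

// Promise wrapper around session.post (hypothetical helper).
function post(session, method, params = {}) {
  return new Promise((resolve, reject) => {
    session.post(method, params, (err, result) => (err ? reject(err) : resolve(result)));
  });
}

// Record a CPU profile while the synchronous function fn runs.
async function profileSync(fn) {
  const session = new inspector.Session();
  session.connect();
  try {
    await post(session, 'Profiler.enable');
    await post(session, 'Profiler.start');
    fn();
    const { profile } = await post(session, 'Profiler.stop');
    return profile;
  } finally {
    session.disconnect();
  }
}

// Some CPU-bound work to profile.
function busy() {
  let total = 0;
  for (let i = 0; i < 1e6; i += 1) total += Math.sqrt(i);
  return total;
}

profileSync(busy).then((profile) => {
  console.log('call-tree nodes recorded:', profile.nodes.length);
});
```

Saving `JSON.stringify(profile)` to a `.cpuprofile` file lets DevTools load it for inspection.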

HTTP Server - Deployment

Problems to be solved in deployment

- Daemon: restart the process when it exits

- Multi-process: the cluster module makes it convenient to use multiple processes

- Record process status for diagnostics
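
The first two points can be sketched with the built-in `cluster` module: the primary process forks one worker per CPU core and forks a replacement whenever a worker exits unexpectedly. The function names and the port are assumptions, and `cluster.isPrimary` requires Node.js 16 or later (older versions use `cluster.isMaster`).

```javascript
const cluster = require('cluster');
const http = require('http');
const os = require('os');

// Primary process: fork the workers and restart any that crash.
function startPrimary(workerCount = os.cpus().length) {
  for (let i = 0; i < workerCount; i += 1) {
    cluster.fork();
  }
  cluster.on('exit', (worker, code, signal) => {
    // exitedAfterDisconnect is true for intentional shutdowns; skip those.
    if (!worker.exitedAfterDisconnect) {
      console.log(`worker ${worker.process.pid} exited (${signal || code}), restarting`);
      cluster.fork();
    }
  });
}

// Worker process: run the HTTP server; workers share the listening port.
function startWorker(port = 3000) {
  return http
    .createServer((req, res) => {
      res.end(`handled by pid ${process.pid}`);
    })
    .listen(port);
}
```

An entry file would finish with `if (cluster.isPrimary) startPrimary(); else startWorker();`. In practice a process manager often takes over the daemon role, so treat this as an illustration of the mechanism only.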

Container environment

- A health check mechanism is usually already provided, so you only need to consider how to make use of multiple CPU cores
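
For the platform's health check to work, the application usually exposes an endpoint it can probe. A minimal sketch follows; the `/healthz` path and the response fields are assumptions, so use whatever the container platform is configured to call.

```javascript
const http = require('http');

// Hypothetical server factory with a health endpoint next to the normal handler.
function createAppServer() {
  return http.createServer((req, res) => {
    if (req.url === '/healthz') {
      // Report liveness plus a little process status for diagnostics.
      res.setHeader('Content-Type', 'application/json');
      res.end(JSON.stringify({ status: 'ok', uptime: process.uptime() }));
      return;
    }
    res.end('hello!nodejs!');
  });
}
```

Calling `createAppServer().listen(3000)` starts the server; the platform then restarts the container when the probe stops succeeding.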