1 Overview

Reading and writing data can be regarded as a special case of the event pattern. The continuously sent data blocks are like events: reading data is a read event, writing data is a write event, and the data block is the information attached to the event. Node provides a dedicated interface, Stream, for such situations.

A stream is a way of handling the system buffer. The operating system reads data in chunks and stores each chunk in a buffer as it arrives. A Node application can handle this buffer in two ways:

1. Wait until all the data has been received, then read it from the buffer in one go (the traditional way). Advantage: it is intuitive and the flow is natural. Disadvantage: with a large file, it takes a long time before processing can start, and the whole file has to fit in memory.

2. Use a "data stream": read each piece of data as soon as it is received. Advantage: processing starts earlier and memory use stays low, which improves the program's performance.
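The two approaches can be contrasted in a small sketch. It assumes a throwaway file in the OS temp directory (the name `stream-demo.txt` is hypothetical) and sets a 16 KB `highWaterMark` so that the 100,000-character file arrives in several chunks instead of one.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Hypothetical demo file written to the OS temp directory
const file = path.join(os.tmpdir(), "stream-demo.txt");
fs.writeFileSync(file, "x".repeat(100000));

// Way 1: wait until the whole file is in memory, then process it
async function readAllAtOnce() {
  const content = await fs.promises.readFile(file, "utf8");
  return content.length;
}

// Way 2: process each chunk as soon as it arrives
function readInChunks() {
  return new Promise((resolve, reject) => {
    let chunks = 0;
    let total = 0;
    fs.createReadStream(file, { encoding: "utf8", highWaterMark: 16 * 1024 })
      .on("data", (chunk) => {
        chunks += 1; // work on this chunk could start right here
        total += chunk.length;
      })
      .on("end", () => resolve({ chunks, total }))
      .on("error", reject);
  });
}

readAllAtOnce().then((len) => console.log("readFile:", len, "characters"));
readInChunks().then(({ chunks, total }) =>
  console.log("stream:", total, "characters in", chunks, "chunks"));
```

Both report the same total; the difference is that the streaming version can act on the first chunk while the rest of the file is still being read.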

2 What is a stream

A stream is an abstract interface for reading data from a source or writing data to a destination continuously. In Node.js, there are four types of streams:

- Readable: used for read operations.

- Writable: used for write operations.

- Duplex: used for both read and write operations.

- Transform: a Duplex stream whose output is computed from its input.
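A minimal sketch of how the types fit together, using the built-in `stream` module. The helper `upperCaseViaStreams` is hypothetical: it feeds an in-memory Readable through an upper-casing Transform into a collecting Writable.

```javascript
const { Readable, Writable, Duplex, Transform } = require("stream");

// Hypothetical helper: Readable -> Transform -> Writable, resolves with the collected text
function upperCaseViaStreams(parts) {
  return new Promise((resolve, reject) => {
    // Readable: produces data (here from an in-memory array)
    const source = Readable.from(parts);

    // Transform: its output is computed from its input
    const upper = new Transform({
      transform(chunk, encoding, callback) {
        callback(null, chunk.toString().toUpperCase());
      },
    });

    // Writable: consumes data and stores it
    const received = [];
    const sink = new Writable({
      write(chunk, encoding, callback) {
        received.push(chunk.toString());
        callback();
      },
    });

    source.on("error", reject);
    upper.on("error", reject);
    sink.on("error", reject);
    sink.on("finish", () => resolve(received.join("")));
    source.pipe(upper).pipe(sink);
  });
}

// A Transform is a special kind of Duplex: it can be written to and read from
console.log(new Transform() instanceof Duplex); // true

upperCaseViaStreams(["orange ", "cat"]).then(console.log); // ORANGE CAT
```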

Every stream is an instance of EventEmitter: while it is working, it emits events. Some common events are:

| Event | Description |
|---|---|
| data | Emitted when there is data in the stream that can be read |
| end | Emitted when there is no more data in the stream to read |
| error | Emitted when an error occurs while reading or writing data |
| finish | Emitted when all data has been flushed to the underlying system |

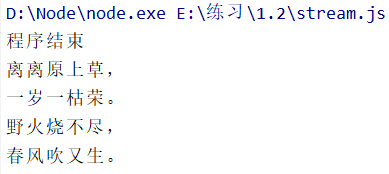
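The order in which these events fire can be traced with in-memory streams. This is a sketch: the function `traceEvents` and the deliberately broken stream are hypothetical, and `destroy(err)` is used only to trigger an `error` event on purpose.

```javascript
const { Readable, Writable } = require("stream");

// Records the events from the table, in the order they fire
function traceEvents() {
  return new Promise((resolve) => {
    const log = [];
    const reader = Readable.from(["a", "b"]);
    const writer = new Writable({
      write(chunk, encoding, callback) {
        callback(); // discard the data, we only care about events
      },
    });

    reader.on("data", (chunk) => {
      log.push(`data:${chunk}`);
      writer.write(chunk);
    });
    reader.on("end", () => {
      log.push("end");
      writer.end(); // no more writes; "finish" follows once flushed
    });
    writer.on("finish", () => {
      log.push("finish");
      // Hypothetical failing stream, destroyed with an error on purpose
      const broken = new Readable({ read() {} });
      broken.on("error", (err) => {
        log.push(`error:${err.message}`);
        resolve(log);
      });
      broken.destroy(new Error("boom"));
    });
  });
}

traceEvents().then((log) => console.log(log.join(" -> ")));
// data:a -> data:b -> end -> finish -> error:boom
```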

3 Reading from a stream

The createReadStream method of the fs module creates a readable stream for reading data from a file.

Example code:

```javascript
const fs = require("fs");

let data = "";

// Create a readable stream for the file
const readerStream = fs.createReadStream("./out.txt");

// Decode chunks as UTF-8 strings instead of Buffers
readerStream.setEncoding("utf8");

// Handle the stream events: data, end and error
readerStream.on("data", function (chunk) {
  data += chunk;
});

readerStream.on("end", function () {
  console.log(data);
});

readerStream.on("error", function (err) {
  console.log(err.stack);
});

// Printed first, because the file is read asynchronously
console.log("Program end");
```

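The program above reads the contents of out.txt and prints them. Note that "Program end" is printed first, because the file is read asynchronously.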

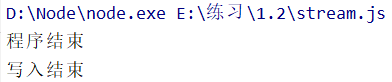
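In recent Node.js versions, readable streams are also async iterable, so the same read can be written with `for await...of`. A sketch, assuming a temp file (`out-demo.txt` is a hypothetical stand-in for ./out.txt); the function name `readWholeStream` is also hypothetical.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Hypothetical demo file standing in for ./out.txt
const file = path.join(os.tmpdir(), "out-demo.txt");
fs.writeFileSync(file, "Orange cat is not fat");

async function readWholeStream(filePath) {
  let data = "";
  const readerStream = fs.createReadStream(filePath, { encoding: "utf8" });
  // Each iteration receives one chunk; a stream error is thrown here
  for await (const chunk of readerStream) {
    data += chunk;
  }
  return data; // the loop exits when the stream ends
}

readWholeStream(file)
  .then((text) => console.log(text))
  .catch((err) => console.error(err.stack));
console.log("Program end");
```

Errors arrive as a rejected promise instead of an `error` event, so one `try/catch` or `.catch()` covers the whole read.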

4 Writing to a stream

The createWriteStream method of the fs module creates a writable stream for writing data to a file.

Example code:

```javascript
const fs = require("fs");

const data = "Orange cat is not fat";

// Create a writable stream (the file is created or overwritten)
const writerStream = fs.createWriteStream("./out.txt");

// Handle the stream events: finish and error
writerStream.on("finish", function () {
  console.log("Write end");
});

writerStream.on("error", function (err) {
  console.log(err.stack);
});

// Write the data, encoded as UTF-8
writerStream.write(data, "utf8");

// Mark the end of the stream; "finish" fires once everything is flushed
writerStream.end();

console.log("Program end");
```

After this program runs, the content of out.txt is replaced with "Orange cat is not fat", and the ancient poem the file originally contained is gone, because createWriteStream opens the file in "w" (truncate) mode by default.

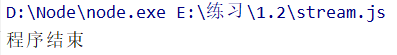
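When writing a lot of data, the return value of `write()` matters: it returns `false` once the stream's internal buffer is full, and the writer should wait for the `drain` event before writing more. A minimal sketch of this backpressure handling, assuming a hypothetical slow in-memory sink (`createSlowSink`, `receivedBytes` and `writeLines` are illustrative names, not Node APIs) with a tiny `highWaterMark` so the buffer fills quickly.

```javascript
const { Writable } = require("stream");

// Hypothetical slow destination that counts the bytes it receives
function createSlowSink() {
  let received = 0;
  const sink = new Writable({
    highWaterMark: 16, // tiny buffer so write() returns false quickly
    write(chunk, encoding, callback) {
      received += chunk.length;
      setImmediate(callback); // simulate slow I/O
    },
  });
  sink.receivedBytes = () => received; // hypothetical helper for reporting
  return sink;
}

// Writes `count` lines, pausing whenever write() reports a full buffer
function writeLines(stream, count) {
  return new Promise((resolve, reject) => {
    let i = 0;
    let pauses = 0;
    stream.on("error", reject);
    stream.on("finish", () => resolve(pauses));

    function writeMore() {
      while (i < count) {
        const ok = stream.write(`line ${i}\n`);
        i += 1;
        if (!ok) {
          pauses += 1;
          stream.once("drain", writeMore); // resume when the buffer empties
          return;
        }
      }
      stream.end();
    }
    writeMore();
  });
}

const sink = createSlowSink();
writeLines(sink, 100).then((pauses) =>
  console.log(`wrote ${sink.receivedBytes()} bytes, paused ${pauses} times`));
```

Ignoring the `false` return value still works, but the unwritten data then piles up in memory, which defeats the purpose of streaming.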

5 Piping streams

Piping is a mechanism that uses the output of one stream as the input of another. It is usually used to take data from one stream and pass it on to another stream.

Example: use a pipe to read from one file and write to another

```javascript
const fs = require("fs");

// Create a readable stream
const readerStream = fs.createReadStream("./out.txt");

// Create a writable stream
const writerStream = fs.createWriteStream("./output.txt");

// Read out.txt and write its data to output.txt through a pipe
readerStream.pipe(writerStream);

console.log("Program end");
```

The original out.txt contains "Orange cat is not fat". After the program runs, output.txt contains the same text (here output.txt already existed and was empty; createWriteStream would also create it if it did not exist).

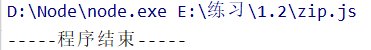
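One limitation of `pipe()` is that it does not forward errors: if the read side fails, the write side is not told. The `pipeline()` function from the `stream` module connects the same streams, reports an error from any of them to one callback, and cleans all of them up. A sketch, assuming temp files (`pipe-in.txt` and `pipe-out.txt` are hypothetical stand-ins for out.txt and output.txt); `copyWithPipeline` is a hypothetical helper name.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");
const { pipeline } = require("stream");

// Hypothetical files standing in for ./out.txt and ./output.txt
const src = path.join(os.tmpdir(), "pipe-in.txt");
const dest = path.join(os.tmpdir(), "pipe-out.txt");
fs.writeFileSync(src, "Orange cat is not fat");

function copyWithPipeline(from, to, done) {
  // An error in either stream reaches `done`, and both streams are destroyed
  pipeline(fs.createReadStream(from), fs.createWriteStream(to), done);
}

copyWithPipeline(src, dest, (err) => {
  if (err) {
    console.error("Pipeline failed:", err.message);
  } else {
    console.log("Copied:", fs.readFileSync(dest, "utf8"));
  }
});
```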

6 Chaining streams

Chaining connects the output of one stream to another stream, forming a chain of several stream operations. It is usually used together with piping.

Example: use pipes and chaining to compress a file and then decompress it

zlib module: provides compression and decompression, for example in the gzip format

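Example code: compress a file

```javascript
const fs = require("fs");
const zlib = require("zlib");

// Read out.txt, gzip it, and write the result to out.txt.gz.
// pipe() returns its destination, so writeStream is the last stream in the chain.
const writeStream = fs
  .createReadStream("./out.txt")
  .pipe(zlib.createGzip())
  .pipe(fs.createWriteStream("./out.txt.gz"));

// This handler only sees errors from the final write stream:
// pipe() does not forward errors from earlier streams in the chain.
writeStream.on("error", function (err) {
  console.log(err.stack);
});

console.log("-----Program end-----");
```

A compressed file, out.txt.gz, appears in the folder. zlib.createGzip() produces the gzip format rather than a ZIP archive, so .gz is the appropriate extension.

Example code: decompress the file

```javascript
const fs = require("fs");
const zlib = require("zlib");

// Read the gzip file, decompress it, and write the result to output.txt
fs.createReadStream("./out.txt.gz")
  .pipe(zlib.createGunzip())
  .pipe(fs.createWriteStream("./output.txt"));
```

The out.txt.gz file in the folder is decompressed into output.txt.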
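The compress and decompress steps can also be chained into one round trip, which makes it easy to check that the data survives unchanged. A sketch that works in memory instead of on files; `roundTrip` is a hypothetical helper, and `pipeline()` is used so that an error in any link of the chain reaches the callback.

```javascript
const zlib = require("zlib");
const { Readable, Writable, pipeline } = require("stream");

// Readable -> Gzip -> Gunzip -> Writable (collector)
function roundTrip(text, done) {
  const parts = [];
  const collector = new Writable({
    write(chunk, encoding, callback) {
      parts.push(chunk); // decompressed Buffers
      callback();
    },
  });

  pipeline(
    Readable.from([text]),
    zlib.createGzip(),
    zlib.createGunzip(),
    collector,
    (err) => done(err, err ? undefined : Buffer.concat(parts).toString("utf8"))
  );
}

roundTrip("Orange cat is not fat", (err, result) => {
  if (err) throw err;
  console.log(result); // Orange cat is not fat
});
```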