1. Point cloud environment

1.1 point cloud

Relationship between point cloud and 3D image: 3D image is a special form of information expression, which is characterized by the data of three dimensions in the expressed space, including depth map (expressing the distance between the object and the camera in gray scale), geometric model (established by CAD software), point cloud model (all reverse engineering equipment sample the object into point cloud). Compared with two-dimensional image, three-dimensional image can realize natural object background decoupling with the help of the information of the third dimension. Point cloud data is the most common and basic 3D model. The point cloud model is often obtained directly from measurement. Each point corresponds to a measurement point without other processing means, so it contains the maximum amount of information. These information is hidden in the point cloud and needs to be extracted by other extraction methods. The process of extracting the information in the point cloud is three-dimensional image processing.

Concept of Point Cloud: a Point Cloud is a massive set of points that express the spatial distribution of the target and the characteristics of the target surface under the same spatial reference system. After obtaining the spatial coordinates of each sampling point on the object surface, a set of points is obtained, which is called "Point Cloud".

1.2 environment configuration

1.2.1 PCL library installation

The first time: failed and could not be solved, so he gave up reinstalling the system.

The second time:

step1: a grub install fatal error occurred during the installation of ubuntu. It was not resolved in two days, so it was abandoned and the u disk was replaced successfully.

Step 2: install dependent Libraries

sudo apt-get update sudo apt-get install git build-essential linux-libc-dev sudo apt-get install cmake cmake-gui sudo apt-get install libusb-1.0-0-dev libusb-dev libudev-dev sudo apt-get install mpi-default-dev openmpi-bin openmpi-common sudo apt-get install libflann1.9 libflann-dev #1.9 corresponding to Ubuntu 18 sudo apt-get install libeigen3-dev sudo apt-get install libboost-all-dev sudo apt-get install libvtk7.1-qt libvtk7.1 libvtk7-dev #New version 7.1 sudo apt-get install libqhull* libgtest-dev sudo apt-get install freeglut3-dev pkg-config sudo apt-get install libxmu-dev libxi-dev sudo apt-get install openjdk-8-jdk openjdk-8-jre #QT SDK has been discarded, use this code instead

step3: Download

git clone https://github.com/PointCloudLibrary/pcl.git

step4: Compiling

cd pcl mkdir release cd release cmake -DCMAKE_BUILD_TYPE=None -DCMAKE_INSTALL_PREFIX=/usr \ -DBUILD_GPU=ON-DBUILD_apps=ON -DBUILD_examples=ON \ -DCMAKE_INSTALL_PREFIX=/usr .. make #After compilation sudo make install

step5: check

Create test_pcl folder, create test through vscode_ Pcl.cpp and write

#include <iostream>

#include <pcl/common/common_headers.h>

#include <pcl/io/pcd_io.h>

#include <pcl/visualization/pcl_visualizer.h>

#include <pcl/visualization/cloud_viewer.h>

#include <pcl/console/parse.h>

using namespace std;

int main(int argc, char **argv) {//Cylindrical point cloud test

cout << "Test PCL !" << endl;

pcl::PointCloud<pcl::PointXYZRGB>::Ptr point_cloud_ptr (new pcl::PointCloud<pcl::PointXYZRGB>);

uint8_t r(255), g(15), b(15);

for (float z(-1.0); z <= 1.0; z += 0.05) {//Making cylindrical point clouds

for (float angle(0.0); angle <= 360.0; angle += 5.0) {

pcl::PointXYZRGB point;

point.x = cos (pcl::deg2rad(angle));

point.y = sin (pcl::deg2rad(angle));

point.z = z;

uint32_t rgb = (static_cast<uint32_t>(r) << 16 | static_cast<uint32_t>(g) << 8 | static_cast<uint32_t>(b));

point.rgb = *reinterpret_cast<float*>(&rgb);

point_cloud_ptr->points.push_back (point);

}

if (z < 0.0) {//Color gradient

r -= 12;

g += 12;

}

else {

g -= 12;

b += 12;

}

}

point_cloud_ptr->width = (int) point_cloud_ptr->points.size ();

point_cloud_ptr->height = 1;

pcl::visualization::CloudViewer viewer ("pcl—test test");

viewer.showCloud(point_cloud_ptr);

while (!viewer.wasStopped()){ };

return 0;

}

Use touch CMakeLists.txt and write

cmake_minimum_required(VERSION 2.6)

project(test_pcl)

find_package(PCL 1.2 REQUIRED)

include_directories(${PCL_INCLUDE_DIRS})

link_directories(${PCL_LIBRARY_DIRS})

add_definitions(${PCL_DEFINITIONS})

add_executable(test_pcl test_pcl.cpp)

target_link_libraries (test_pcl ${PCL_LIBRARIES})

install(TARGETS test_pcl RUNTIME DESTINATION bin)

Then in test_ Create a build folder under the PCL folder.

Start compilation:

Open the terminal in the build folder (right click + T under the folder)

Terminal input

cmake ..

make

./test_pcl

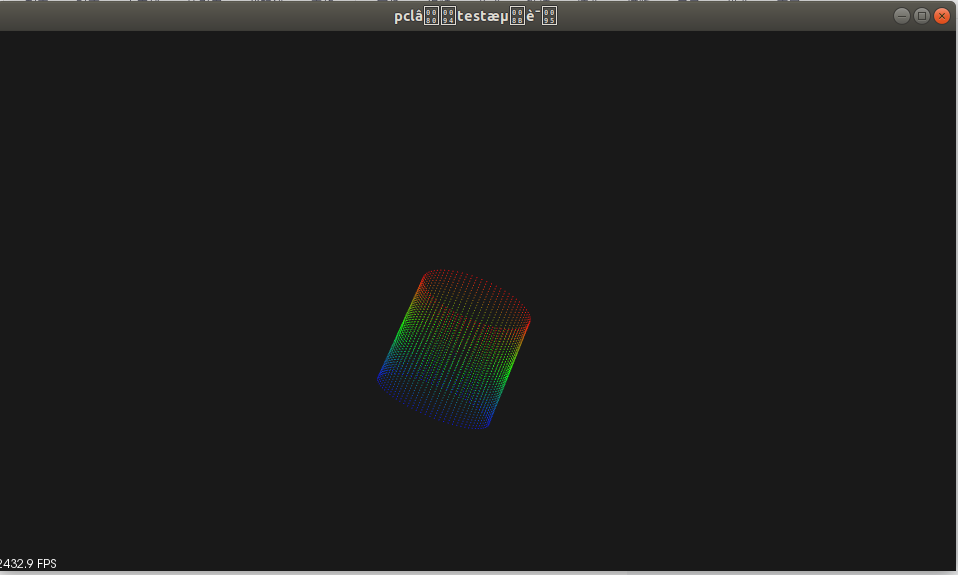

appear

Except that the file name is garbled, it is basically completed!

1.2.2 installing realsense D455

Note: install the version suitable for the local kernel from the official website, otherwise it will show that you can't connect.

Download decompression

cd librealsense sudo apt-get install libudev-dev pkg-config libgtk-3-dev sudo apt-get install libusb-1.0-0-dev pkg-config sudo apt-get install libglfw3-dev sudo apt-get install libssl-dev mkdir build cd build cmake ../ -DBUILD_EXAMPLES=true make -j4 sudo make install

verification

realsense-viewer

1.2.3 installing openGL

See Installing opengl under linux_ Csp123258 column - CSDN blog_ linux Installation opengl

1.2.4 installation glfw

See Installation and use of glfw under Ubuntu_ dxmcu's column - CSDN blog Installation and use of glfw under buntu_ dxmcu Installation and use of glfw under Ubuntu_ dxmcu's column - CSDN blog

Tsinghua source, China University of science and technology source, and the source code package relying on build dep glfw can not be found without changing the source. Skip direct compilation. I don't know if it's OK.

1.2.5 installing opencv4.5.3

See Ubuntu 18 installing opencv3.4_zhongqli's blog - CSDN blog

There may be errorE: unable to locate libjasper-dev

Input at terminal

sudo add-apt-repository "deb http://security.ubuntu.com/ubuntu xenial-security main" sudo apt update sudo apt upgrade sudo apt install libjasper1 libjasper-dev

One more time will solve it.

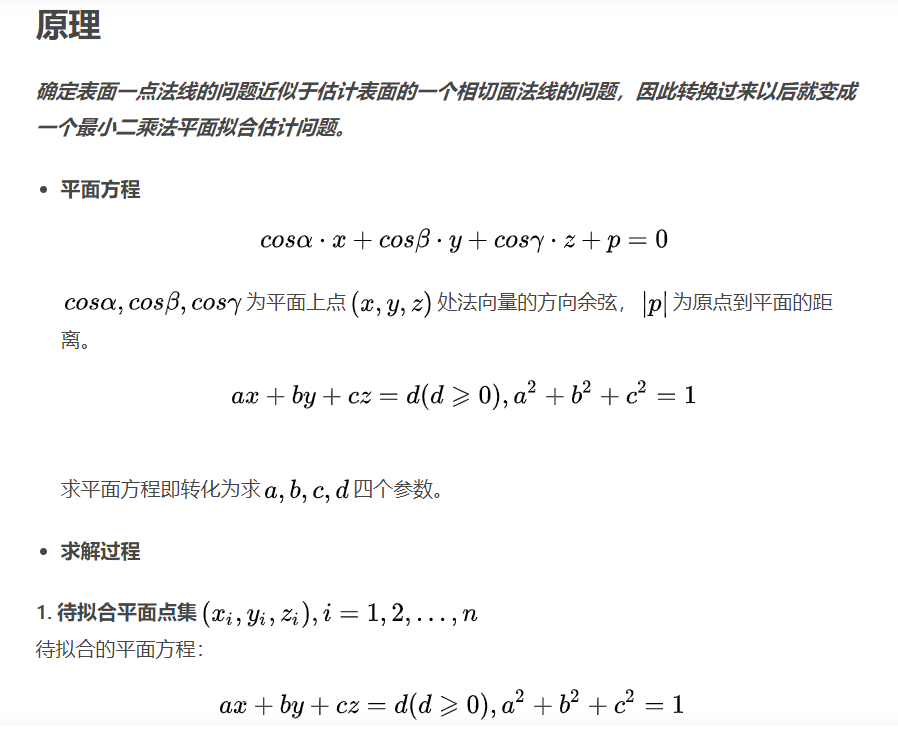

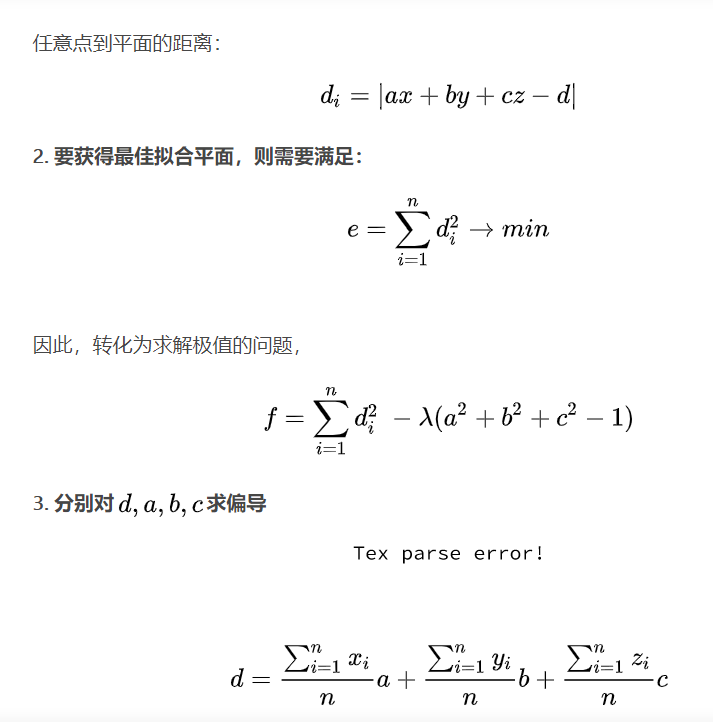

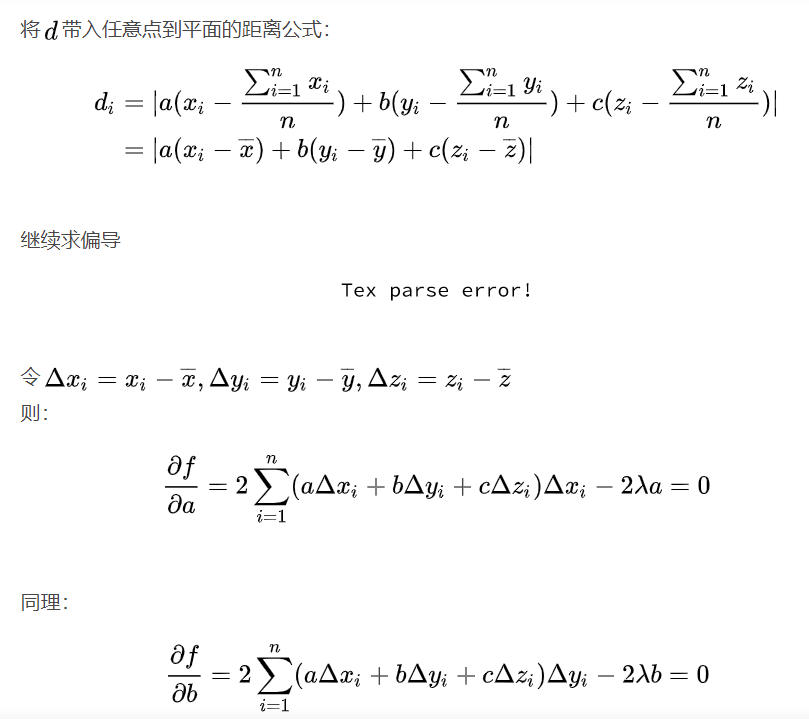

2. Calculation of normal vector

2.1 normal vector

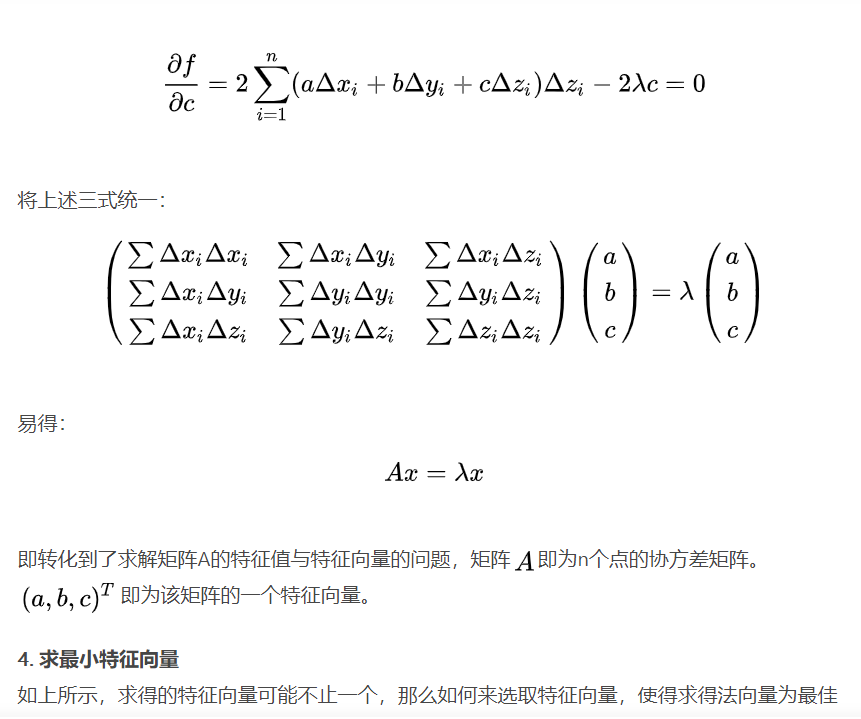

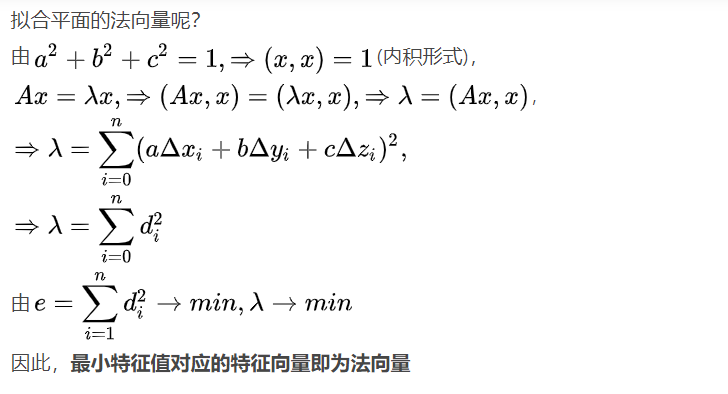

The method based on local surface fitting firstly, Hoppe et al. Proposed the assumption that the sampling plane of the point cloud is smooth everywhere in the surface reconstruction algorithm based on the directed distance function. Therefore, the local neighborhood of any point can be well fitted with the plane; Therefore, for each point P in the point cloud, k nearest adjacent points are obtained, and then a local plane P in the sense of least squares is calculated for these points, and the normal vector is found.

2.2 PCL processing point cloud data of realsense

Initial idea: there are two kinds of point cloud data obtained by realsense: photo data. ply and recording data. bag, but pcl needs to process pcd data, so. ply should be converted to. pcd (x,y,z,RGB) format, and then imported into pcl for processing.

Wrong thinking: instead of saving data directly from realsense, you should read the data and directly use PCL to process and return the normal vector.

First, get the position and color data from realsense

#ifndef RSDEVICE_H

#define RSDEVICE_H

#include <librealsense/rs.hpp>

#include <QString>

#Encapsulates the operation of realsense

class rsdevice

{

public:

rs::context ctx;

rs::device * dev;

int devCount;

QString devName;

QString devSerial;

QString devFwVersion;

QString devCamType;

QString devIspFwVersion;

bool streamEnable;

public:

QString getDevName(){

return devName;

}

QString getDevSerial(){

return devSerial;

}

QString getDevFwVersion(){

return devFwVersion;

}

QString getCamType(){

return devCamType;

}

QString getIspFwVersion(){

return devIspFwVersion;

}

public:

rsdevice();

~rsdevice();

void initRsDevice();

void enableStream();

uchar * getFrameData();

bool isSteamEnable();

float getDistance(int x,int y);

};

#endif // RSDEVICE_H

#Initialize camera

void rsdevice::initRsDevice()

{

devCount = ctx.get_device_count();

dev = ctx.get_device(0);

.....................

.....................

}

#Turn on the camera data stream. Here, we only turn on two channels of data, one channel of RGB color data and one channel of depth camera data

void rsdevice::enableStream(){

streamEnable = true;

dev->enable_stream(rs::stream::color, 640, 480, rs::format::rgb8, 60);

dev->enable_stream(rs::stream::depth,640,480, rs::format::z16, 60);

dev->start();

}

#Get RGBC color data

uchar * rsdevice::getFrameData(){

dev->wait_for_frames();

return (uchar *) dev->get_frame_data(rs::stream::color);

}

#Get depth data

float rsdevice::getDistance(int x, int y){

uint16_t *depthImage = (uint16_t *) dev->get_frame_data(rs::stream::depth);

float scale = dev->get_depth_scale();

rs::intrinsics depthIntrin = dev->get_stream_intrinsics(rs::stream::depth);

uint16_t depthValue = depthImage[y * depthIntrin.width + x];

float depthInMeters = depthValue * scale;

return depthInMeters;

}

Then PCL is used to obtain the normal vector

#PCL get normal vector

#include <pcl/point_types.h>

#include <pcl/features/normal_3d.h>

{

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud (new pcl::PointCloud<pcl::PointXYZ>);

... read, pass in or create a point cloud ...

// Create the normal estimation class, and pass the input dataset to it

pcl::NormalEstimation<pcl::PointXYZ, pcl::Normal> ne;

ne.setInputCloud (cloud);

// Create an empty kdtree representation, and pass it to the normal estimation object.

// Its content will be filled inside the object, based on the given input dataset (as no other search surface is given).

pcl::search::KdTree<pcl::PointXYZ>::Ptr tree (new pcl::search::KdTree<pcl::PointXYZ> ());

ne.setSearchMethod (tree);

// Output datasets

pcl::PointCloud<pcl::Normal>::Ptr cloud_normals (new pcl::PointCloud<pcl::Normal>);

// Use all neighbors in a sphere of radius 3cm

ne.setRadiusSearch (0.03);

// Compute the features

ne.compute (*cloud_normals);

// cloud_normals->points.size () should have the same size as the input cloud->points.size ()*

}The theory is over, and the assembly of c + + has to be solved! (smile)