preface

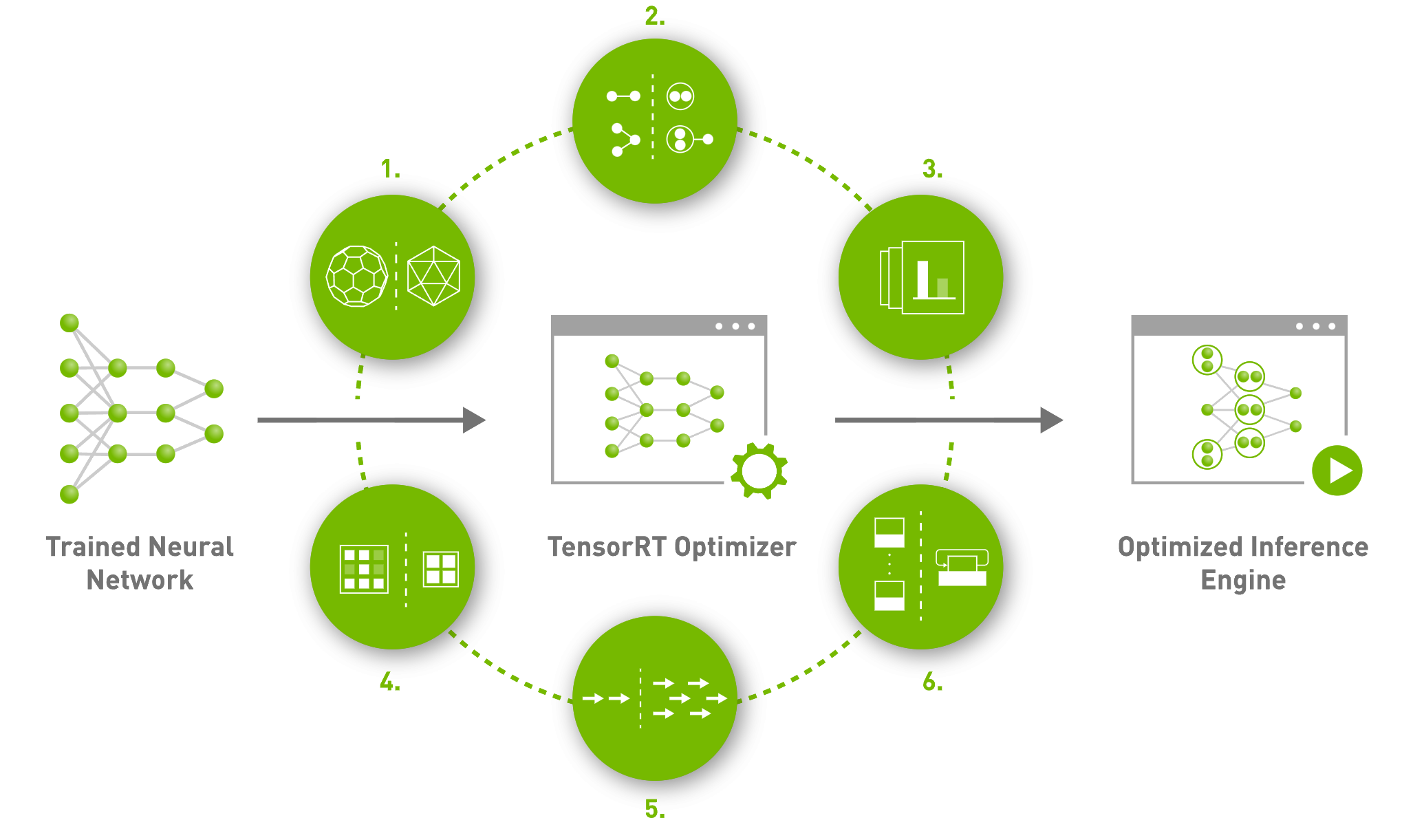

TensorRT is an efficient deep learning model reasoning framework launched by NVIDIA. It includes deep learning reasoning optimizer and runtime, which can make deep learning reasoning applications have the advantages of low latency and high throughput.

In essence, it is to accelerate the reasoning speed of the whole network by fusing some operators in the model, selecting better implementation methods for operators with specific sizes, and using mixed accuracy.

After using PyTorch to train the network model, we hope to accelerate the model reasoning through TensorRT during model deployment. Then we can first convert the PyTorch model to ONNX, and then turn ONNX to TensorRT engine.

Implementation steps

PyTorch model to ONNX

The specific process can be referred to PyTorch model to ONNX format_ TracelessLe's column - CSDN blog

ONNX to TensorRT engine

Method 1: trtexec

Directly use trtexec command line to convert ONNX model to TensorRT engine:

trtexec --onnx=net_bs8_v1_simple.onnx --tacticSources=-cublasLt,+cublas --workspace=2048 --fp16 --saveEngine=net_bs8_v1.engine --verbose

Note: (Reference: TensorRT-trtexec-README)

① -- ONNX specifies the ONNX file path

② -- tacticSources specifies the method library to use

③ -- workspace specifies the size of the workspace in MB

④ -- FP16 enable FP16 mode

⑤ -- saveEngine specifies the save path of the generated engine

⑥ -- verbose open verbose mode for more printing information.

Under normal circumstances, the engine file can be generated normally after a long waiting time. In case of any problem, it needs to be analyzed according to the specific problem.

Method 2: engine generation based on Python API

TensorRT provides API s such as C + + and Python, which can be used to generate engine.

__author__ = 'TracelessLe'

import os

import tensorrt as trt

ONNX_SIM_MODEL_PATH = 'net_bs8_v1_simple.onnx'

TENSORRT_ENGINE_PATH_PY = 'net_bs8_v1_fp16_py.engine'

def build_engine(onnx_file_path, engine_file_path, flop=16):

trt_logger = trt.Logger(trt.Logger.VERBOSE) # trt.Logger.ERROR

builder = trt.Builder(trt_logger)

network = builder.create_network(

1 << (int)(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)

)

parser = trt.OnnxParser(network, trt_logger)

# parse ONNX

with open(onnx_file_path, 'rb') as model:

if not parser.parse(model.read()):

print('ERROR: Failed to parse the ONNX file.')

for error in range(parser.num_errors):

print(parser.get_error(error))

return None

print("Completed parsing ONNX file")

builder.max_workspace_size = 2 << 30

# default = 1 for fixed batch size

builder.max_batch_size = 1

# set mixed flop computation for the best performance

if builder.platform_has_fast_fp16 and flop == 16:

builder.fp16_mode = True

if os.path.isfile(engine_file_path):

try:

os.remove(engine_file_path)

except Exception:

print("Cannot remove existing file: ",

engine_file_path)

print("Creating Tensorrt Engine")

config = builder.create_builder_config()

config.set_tactic_sources(1 << int(trt.TacticSource.CUBLAS))

config.max_workspace_size = 2 << 30

config.set_flag(trt.BuilderFlag.FP16)

engine = builder.build_engine(network, config)

with open(engine_file_path, "wb") as f:

f.write(engine.serialize())

print("Serialized Engine Saved at: ", engine_file_path)

return engine

if __name__ == "__main__":

build_engine(ONNX_SIM_MODEL_PATH, TENSORRT_ENGINE_PATH_PY)

Under normal circumstances, this method can generate the same engine file as trtexec, and has the same file size and reasoning speed.

Result comparison

Compare the results obtained by engine reasoning with the results obtained by ONNX:

__author__ = 'TracelessLe'

import os

import time

import onnxruntime

import pycuda.driver as cuda

import tensorrt as trt

import numpy as np

ONNX_SIM_MODEL_PATH = 'net_bs8_v1_simple.onnx'

TENSORRT_ENGINE_PATH_PY = 'net_bs8_v1_fp16_py.engine'

def get_numpy_data():

batch_size = 8

img_input = np.ones((batch_size, 3, 128, 128), dtype=np.float32)

return img_input

def test_onnx(inputs, loop=100):

inputs = inputs.astype(np.float32)

print(onnxruntime.get_device())

sess = onnxruntime.InferenceSession(ONNX_SIM_MODEL_PATH)

batch_size = 8

time1 = time.time()

for i in range(loop):

time_bs1 = time.time()

out_ort_img = sess.run(None, {sess.get_inputs()[0].name: inputs,})

time_bs2 = time.time()

time_use_onnx_bs = time_bs2 - time_bs1

print(f'ONNX use time {time_use_onnx_bs} for bs8')

time2 = time.time()

time_use_onnx = time2-time1

print(f'ONNX use time {time_use_onnx} for loop {loop}, FPS={loop*batch_size//time_use_onnx}')

return out_ort_img

class HostDeviceMem(object):

def __init__(self, host_mem, device_mem):

self.host = host_mem

self.device = device_mem

def __str__(self):

return "Host:\n" + str(self.host) + "\nDevice:\n" + str(self.device)

def __repr__(self):

return self.__str__()

def _load_engine(engine_file_path):

trt_logger = trt.Logger(trt.Logger.ERROR)

with open(engine_file_path, 'rb') as f:

with trt.Runtime(trt_logger) as runtime:

engine = runtime.deserialize_cuda_engine(f.read())

print('_load_engine ok.')

return engine

def _allocate_buffer(engine):

binding_names = []

for idx in range(100):

bn = engine.get_binding_name(idx)

if bn:

binding_names.append(bn)

else:

break

inputs = []

outputs = []

bindings = [None] * len(binding_names)

stream = cuda.Stream()

for binding in binding_names:

binding_idx = engine[binding]

if binding_idx == -1:

print("Error Binding Names!")

continue

size = trt.volume(engine.get_binding_shape(binding)) * engine.max_batch_size

dtype = trt.nptype(engine.get_binding_dtype(binding))

host_mem = cuda.pagelocked_empty(size, dtype)

device_mem = cuda.mem_alloc(host_mem.nbytes)

bindings[binding_idx] = int(device_mem)

if engine.binding_is_input(binding):

inputs.append(HostDeviceMem(host_mem, device_mem))

else:

outputs.append(HostDeviceMem(host_mem, device_mem))

return inputs, outputs, bindings, stream

def _test_engine(engine_file_path, data_input, num_times=100):

# Code from blog.csdn.net/TracelessLe

engine = _load_engine(engine_file_path)

# print(engine)

input_bufs, output_bufs, bindings, stream = _allocate_buffer(engine)

batch_size = 8

context = engine.create_execution_context()

###heat###

input_bufs[0].host = data_input

cuda.memcpy_htod_async(

input_bufs[0].device,

input_bufs[0].host,

stream

)

context.execute_async_v2(

bindings=bindings,

stream_handle=stream.handle

)

cuda.memcpy_dtoh_async(

output_bufs[0].host,

output_bufs[0].device,

stream

)

stream.synchronize()

trt_outputs = [output_bufs[0].host.copy()]

##########

start = time.time()

for _ in range(num_times):

time_bs1 = time.time()

input_bufs[0].host = data_input

cuda.memcpy_htod_async(

input_bufs[0].device,

input_bufs[0].host,

stream

)

context.execute_async_v2(

bindings=bindings,

stream_handle=stream.handle

)

cuda.memcpy_dtoh_async(

output_bufs[0].host,

output_bufs[0].device,

stream

)

stream.synchronize()

trt_outputs = [output_bufs[0].host.copy()]

time_bs2 = time.time()

time_use_bs = time_bs2 - time_bs1

print(f'TRT use time {time_use_bs} for bs8')

end = time.time()

time_use_trt = end - start

print(f"TRT use time {(time_use_trt)}for loop {num_times}, FPS={num_times*batch_size//time_use_trt}")

return trt_outputs

def test_engine(data_input, loop=100):

engine_file_path = TENSORRT_ENGINE_PATH_PY

cuda.init()

cuda_ctx = cuda.Device(0).make_context()

trt_outputs = None

try:

trt_outputs = _test_engine(engine_file_path, data_input, loop)

finally:

cuda_ctx.pop()

return trt_outputs

if __name__ == "__main__":

img_input = get_numpy_data()

trt_outputs = test_engine(img_input, 100)

trt_outputs = trt_outputs[0].reshape((8,3,128,128))

trt_image_numpy = (np.transpose(trt_outputs[0], (1, 2, 0)) + 1) / 2.0 * 255.0

trt_image_numpy = np.clip(trt_image_numpy, 0, 255)

out_ort_img = test_onnx(img_input, loop=1)[0]

onnx_image_numpy = (np.transpose(out_ort_img[0], (1, 2, 0)) + 1) / 2.0 * 255.0

onnx_image_numpy = np.clip(onnx_image_numpy, 0, 255)

mse = np.square(np.subtract(onnx_image_numpy, trt_image_numpy)).mean()

print('mse between onnx and trt result: ', mse)

Other instructions

(1) PyTorch to TensorRT engine

Methods in addition to the conventional PyTorch - > onnx - > tensorrt, there are other methods, such as NVIDIA-AI-IOT torch2trt And NVIDIA TRTorch , you can also try.

(2) ONNX operator support

TensorRT does not support all ONNX operators. See the specific support list Related documents.

(3)engine debug

TensorRT provides a set of tools that can be used for debug ging during engine generation, including Polygraphy, ONNX GraphSurgeon and pytorch quantification. These gadgets are very useful and worthy of further research.

Copyright description

This article is an original article, which is exclusively published in blog.csdn.net/TracelessLe No reprint is allowed without personal permission. If you need help, please email tracelessle@163.com.

reference material

[1] NVIDIA TensorRT | NVIDIA Developer

[2] TensorRT-trtexec-README

[3] PyTorch model to ONNX format _tracelesslecolumn - CSDN blog

[4] How to use TensorRT to accelerate the trained PyTorch model? - cloud + community - Tencent cloud

[5] How to Convert a Model from PyTorch to TensorRT and Speed Up Inference | LearnOpenCV #

[6] How to use TensorRT to accelerate the trained PyTorch model? - what do you know

[7] TensorRT tutorial 3: converting to engine using the trtexec tool

[8] onnx-tensorrt/operators.md at master · onnx/onnx-tensorrt

[9] mmediting/onnx2tensorrt.py at master · open-mmlab/mmediting

[10] TensorRT detailed introduction to the north. If you don't know TensorRT, come and have a look! - SegmentFault

[11] Share some valuable in-depth learning about NVIDIA new technology for AI deployment - what do you know