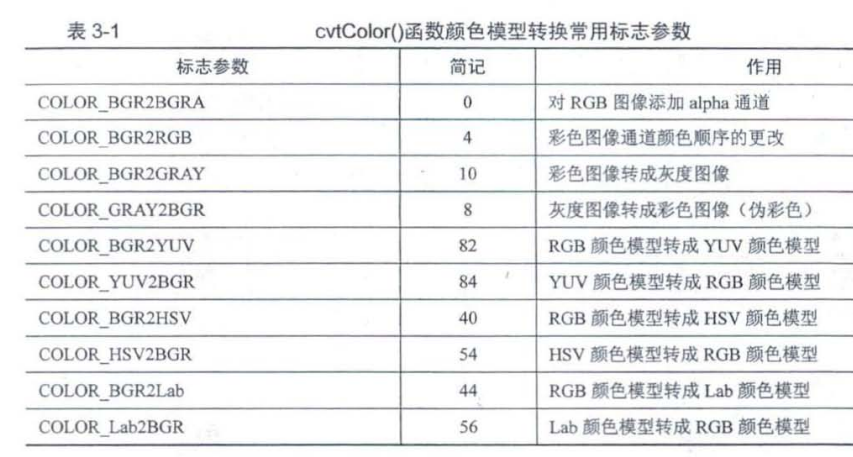

Color space

Common color spaces include RGB, RGBA (RGB plus an alpha, i.e. transparency, channel), YUV (Y is the luminance; U and V are the blue-difference and red-difference chrominance signals) and HSV (hue, saturation, value/brightness), among others.

```cpp
// Color space conversion
void cv::cvtColor(InputArray src, OutputArray dst, int code, int dstCn = 0)

// Data type conversion
void cv::Mat::convertTo(OutputArray m, int rtype, double alpha = 1, double beta = 0) const
```

cvtColor changes the color space. The first two parameters are the input and output images, the third (code) selects the conversion (for example COLOR_BGR2HSV), and the fourth (dstCn) is the number of channels of the output; the default 0 derives it from src and code. Pay attention to the value range of the image before and after the conversion:

- 8-bit unsigned images (CV_8U): pixel values 0 to 255
- 16-bit unsigned images (CV_16U): pixel values 0 to 65535
- 32-bit floating-point images (CV_32F): pixel values 0 to 1

Therefore, pay attention to the pixel range of the target image. For linear transformations the range does not matter, and the output pixels will not leave the valid range. For nonlinear transformations (for example BGR to Lab), the input RGB image must be normalized to the range the function expects in order to get correct results. For example, when an 8-bit unsigned image is converted to a 32-bit floating-point image, divide the pixels by 255 to bring them into the range 0 to 1 before calling cvtColor.

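convertTo converts the data type. Its parameters are the output matrix m, the target type rtype (if negative, the output keeps the input type; only the depth changes, the number of channels stays the same), the scale factor alpha and the offset beta. Each element is computed as: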

$$m(x,y) = \text{saturate\_cast}<\text{rtype}>\big(\alpha \cdot (*\text{this})(x,y) + \beta\big)$$

That is, the conversion is a linear transformation, and saturate_cast clips each result to the range of the output type (for CV_8U, values below 0 become 0 and values above 255 become 255).

```cpp
#include <iostream>
#include <opencv2/opencv.hpp>

using namespace std;
using namespace cv;

int main(int argc, char *argv[])
{
    Mat img = imread("D:\\wallpaper\\2k.jpg");
    if (img.empty())  // check before the image is used
    {
        cout << "error: could not read the image" << endl;
        return -1;
    }
    cout << img.size() << " type: " << img.type() << endl;  // 16 = CV_8UC3

    Mat gray, HSV, YUV, Lab, img32;
    img.convertTo(img32, CV_32F, 1.0 / 255);  // CV_8U -> CV_32F, scaled to 0..1
    cvtColor(img32, HSV, COLOR_BGR2HSV);      // float HSV: H in 0..360, S and V in 0..1
    cvtColor(img32, YUV, COLOR_BGR2YUV);
    cvtColor(img32, Lab, COLOR_BGR2Lab);      // float Lab: L in 0..100
    cvtColor(img32, gray, COLOR_BGR2GRAY);

    // imshow multiplies float images by 255, so values outside 0..1 are clipped
    imshow("Original image", img32);
    imshow("hsv", HSV);
    imshow("YUV", YUV);
    imshow("Lab", Lab);
    imshow("gray", gray);
    waitKey(0);  // wait for a key press instead of closing after 100 ms
    return 0;
}
```

Separation and merging of channels

The components of a multi-channel image (for example B, G and R) are stored in separate channels. split() separates them into single-channel images that can be processed individually, and merge() combines images back into one multi-channel image.

```cpp
void cv::split(const Mat& src,
               Mat* mvbegin);          // output as a C array: you must know the number of channels

void cv::split(InputArray m,
               OutputArrayOfArrays mv); // output as a vector<Mat>: the number of channels need not be known

void cv::merge(const Mat* mv,          // array of matrices with the same size and depth
               size_t count,           // length of the array, greater than 0
               OutputArray dst);

void cv::merge(InputArrayOfArrays mv,  // counterpart of the vector version of split
               OutputArray dst);
```

```cpp
#include <iostream>
#include <opencv2/opencv.hpp>
#include <vector>

using namespace std;
using namespace cv;

int main(int argc, char *argv[])
{
    Mat img = imread("D:\\wallpaper\\2k.jpg");
    if (img.empty())
    {
        cout << "error: could not read the image" << endl;
        return -1;
    }
    Mat HSV;
    cvtColor(img, HSV, COLOR_BGR2HSV);  // imread returns BGR, so use BGR2HSV

    Mat imgs0, imgs1, imgs2;        // results of the array version of split
    Mat imgv0, imgv1, imgv2;        // results of the vector version of split
    Mat result0, result1, result2;  // merged results

    // Array version: the number of channels (3) must be known in advance
    Mat imgs[3];
    split(img, imgs);
    imgs0 = imgs[0];
    imgs1 = imgs[1];
    imgs2 = imgs[2];
    imshow("B", imgs0);  // each channel is shown as a grayscale image
    imshow("G", imgs1);
    imshow("R", imgs2);

    imgs[2] = img;            // replace one element by the 3-channel image
    merge(imgs, 3, result0);  // 1 + 1 + 3 = 5 channels
    // imshow("result0", result0);  // imshow only shows 1-, 3- or 4-channel images; inspect with Image Watch

    Mat zero = Mat::zeros(img.rows, img.cols, CV_8UC1);
    imgs[0] = zero;
    imgs[2] = zero;
    merge(imgs, 3, result1);  // B and R are zero: only the green channel is left
    imshow("result1", result1);

    // Vector version: the number of channels need not be known
    vector<Mat> imgv;
    split(HSV, imgv);
    imgv0 = imgv.at(0);
    imgv1 = imgv.at(1);
    imgv2 = imgv.at(2);
    imshow("H", imgv0);
    imshow("S", imgv1);
    imshow("V", imgv2);

    imgv.push_back(HSV);   // append the 3-channel HSV image
    merge(imgv, result2);  // 3 + 3 = 6 channels
    // imshow("result2", result2);

    waitKey(0);
    return 0;
}
```

Pixel operations

Maximum and minimum pixel values

```cpp
void cv::minMaxLoc(InputArray src,              // single-channel array; if an extreme value occurs several times, the first one (top-left) is returned
                   double* minVal,              // pointer to the minimum value; pass NULL if it is not needed
                   double* maxVal = 0,          // pointer to the maximum value; pass an address, e.g. &maxVal
                   Point* minLoc = 0,           // pointer to the position of the minimum, Point(x, y)
                   Point* maxLoc = 0,           // origin at the top-left corner: x is horizontal (column), y is vertical (row)
                   InputArray mask = noArray()) // optional mask restricting the search region
```

cv::Point2i (also available as cv::Point) is a 2D point with int coordinates; cv::Point2f and cv::Point2d use float and double. The 3D counterparts are cv::Point3i, cv::Point3f and cv::Point3d.

Individual coordinates are accessed through the members x and y (and z for 3D points), e.g. maxIdx.x.

```cpp
Mat cv::Mat::reshape(int cn,       // number of channels of the result; 0 means unchanged
                     int rows = 0  // number of rows of the result; 0 means unchanged
                    ) const
```

```cpp
#include <iostream>
#include <opencv2/opencv.hpp>

using namespace std;
using namespace cv;

int main(int argc, char *argv[])
{
    float a[12] = { 1, 2, 3, 4, 5, 10, 6, 7, 8, 9, 10, 0 };
    Mat img = Mat(3, 4, CV_32FC1, a);   // single channel, 3 x 4
    Mat imgs = Mat(2, 3, CV_32FC2, a);  // two channels, 2 x 3 (the same 12 values)

    double minVal, maxVal;
    Point minIdx, maxIdx;
    minMaxLoc(img, &minVal, &maxVal, &minIdx, &maxIdx);  // pass addresses
    cout << "max " << maxVal << " point " << maxIdx << endl;
    cout << "min " << minVal << " point " << minIdx << endl;

    // minMaxLoc needs a single-channel input: reinterpret the 2 x 3 two-channel
    // matrix as a 4 x 3 single-channel one (the points refer to this view)
    Mat imgs_re = imgs.reshape(1, 4);
    minMaxLoc(imgs_re, &minVal, &maxVal, &minIdx, &maxIdx);
    cout << "max " << maxVal << " point " << maxIdx << endl;
    cout << "min " << minVal << " point " << minIdx << endl;
    return 0;
}
```

Mean, standard deviation

```cpp
Scalar cv::mean(InputArray src,              // 1 to 4 channels; returns a Scalar with one mean per channel
                InputArray mask = noArray()) // optional mask; unused Scalar entries are 0 (e.g. the last three for a single-channel input)

void cv::meanStdDev(InputArray src,
                    OutputArray mean,             // mean of each channel
                    OutputArray stddev,           // standard deviation of each channel
                    InputArray mask = noArray())  // both outputs are CV_64F Mats with one value per input channel
```

```cpp
#include <iostream>
#include <opencv2/opencv.hpp>

using namespace std;
using namespace cv;

int main(int argc, char *argv[])
{
    float a[12] = { 1, 2, 3, 4, 5, 10, 6, 7, 8, 9, 10, 0 };
    Mat img = Mat(3, 4, CV_32FC1, a);   // single channel
    Mat imgs = Mat(2, 3, CV_32FC2, a);  // two channels

    Scalar Mean = mean(imgs);  // one mean per channel, unused entries are 0
    cout << Mean << " " << Mean[0] << " " << Mean[1] << endl;

    Mat MeanMat, StddevMat;
    meanStdDev(img, MeanMat, StddevMat);   // 1 x 1 results
    cout << MeanMat << " " << StddevMat << endl;
    meanStdDev(imgs, MeanMat, StddevMat);  // 2 x 1 results, one row per channel
    cout << MeanMat << " " << StddevMat << endl;
    return 0;
}
```

Image comparison operations

cv::max and cv::min compare two images element by element and keep the larger (max) or smaller (min) value at each position. The two inputs must have the same size and type.

```cpp
#include <iostream>
#include <opencv2/opencv.hpp>

using namespace std;
using namespace cv;

int main(int argc, char *argv[])
{
    Mat img0 = imread("lena.png");
    Mat img1 = imread("noobcv.jpg");
    if (img0.empty() || img1.empty())
    {
        cout << "Please confirm whether the image file names are correct" << endl;
        return -1;
    }
    resize(img1, img1, img0.size());  // max/min need inputs of the same size and type

    Mat comMin, comMax;
    max(img0, img1, comMax);  // keep the larger value at each position
    min(img0, img1, comMin);  // keep the smaller value at each position
    imshow("max", comMax);
    imshow("min", comMin);

    // Build a mask: white (255) inside the rectangle, black (0) elsewhere
    Mat src1 = Mat::zeros(img0.size(), CV_8UC3);
    Rect rect(100, 100, 300, 300);  // x, y, width, height; must lie inside the image
    src1(rect) = Scalar(255, 255, 255);
    Mat comsrc1;
    min(img0, src1, comsrc1);  // original pixels are kept inside the white area, 0 elsewhere
    imshow("src1", comsrc1);

    Mat img0G, img1G, comMinG, comMaxG;
    cvtColor(img0, img0G, COLOR_BGR2GRAY);
    cvtColor(img1, img1G, COLOR_BGR2GRAY);
    max(img0G, img1G, comMaxG);
    min(img0G, img1G, comMinG);
    imshow("MinG", comMinG);
    imshow("MaxG", comMaxG);
    waitKey(0);  // without this the windows close immediately
    return 0;
}
```

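Logical operations

The bitwise AND, OR, XOR and NOT operations are provided by bitwise_and, bitwise_or, bitwise_xor and bitwise_not. The first three take two input images, one output image and an optional mask; bitwise_not takes a single input image, the output image and an optional mask.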

```cpp
#include <iostream>
#include <opencv2/opencv.hpp>

using namespace std;
using namespace cv;

int main()
{
    Mat img = imread("lena.png");
    if (img.empty())
    {
        cout << "Please confirm whether the image file name is correct" << endl;
        return -1;
    }
    // Create two black-and-white images with overlapping white squares
    Mat img0 = Mat::zeros(200, 200, CV_8UC1);
    Mat img1 = Mat::zeros(200, 200, CV_8UC1);
    Rect rect0(50, 50, 100, 100);
    img0(rect0) = Scalar(255);
    Rect rect1(100, 100, 100, 100);
    img1(rect1) = Scalar(255);
    imshow("img0", img0);
    imshow("img1", img1);

    // Perform logical operations
    Mat myAnd, myOr, myXor, myNot, imgNot;
    bitwise_not(img0, myNot);
    bitwise_and(img0, img1, myAnd);  // white only where the squares overlap
    bitwise_or(img0, img1, myOr);    // white where either square is
    bitwise_xor(img0, img1, myXor);  // white where exactly one square is
    bitwise_not(img, imgNot);        // color negative: 255 - value in each channel
    imshow("myAnd", myAnd);
    imshow("myOr", myOr);
    imshow("myXor", myXor);
    imshow("myNot", myNot);
    imshow("img", img);
    imshow("imgNot", imgNot);
    waitKey(0);
    return 0;
}
```

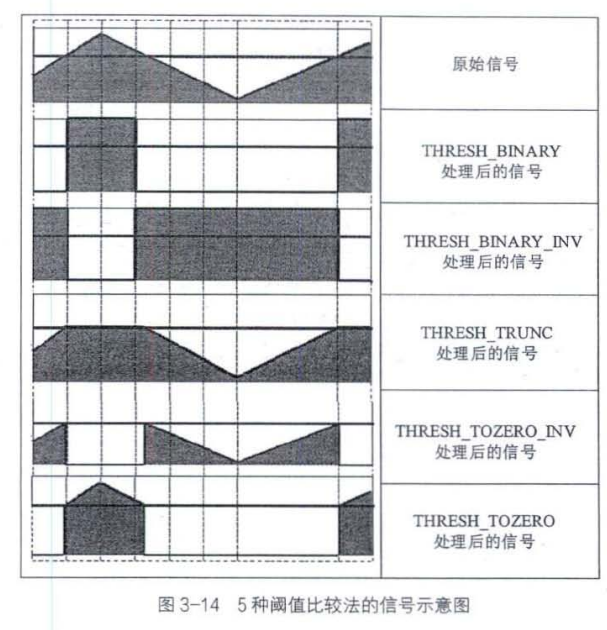

Binarization

Binarization sets every pixel to one of two values, typically black (0) or white (maxval).

```cpp
double cv::threshold(InputArray src,   // CV_8U or CV_32F; channels are processed separately (OTSU and TRIANGLE need 8-bit single-channel input)
                     OutputArray dst,  // result: same size, type and number of channels as src
                     double thresh,    // threshold
                     double maxval,    // value assigned by THRESH_BINARY and THRESH_BINARY_INV
                     int type)         // thresholding type (flags below); returns the threshold actually used
```

| Flag | Value | Effect |
|---|---|---|
| THRESH_BINARY | 0 | Pixels greater than the threshold become maxval; all others become 0 |
| THRESH_BINARY_INV | 1 | Pixels greater than the threshold become 0; all others become maxval |
| THRESH_TRUNC | 2 | Pixels greater than the threshold become the threshold; all others are unchanged |
| THRESH_TOZERO | 3 | Pixels greater than the threshold are unchanged; all others become 0 |
| THRESH_TOZERO_INV | 4 | Pixels greater than the threshold become 0; all others are unchanged |
| THRESH_OTSU | 8 | Computes the global threshold automatically with Otsu's method |
| THRESH_TRIANGLE | 16 | Computes the global threshold automatically with the triangle method |

The last two flags only select how the threshold is computed and are combined with one of the first five, e.g. THRESH_BINARY | THRESH_OTSU. With the first five alone you have to choose the threshold yourself, so the automatic methods are often more convenient. The thresh argument must still be passed in that case, but its value is ignored; the computed threshold is returned by threshold().

threshold() applies one global threshold to the whole image. Because of uneven illumination and shadows, a single global threshold is often unsuitable. adaptiveThreshold() instead computes a separate threshold for each pixel from its neighbourhood, using one of two local methods.

```cpp
void cv::adaptiveThreshold(InputArray src,      // 8-bit single-channel image only
                           OutputArray dst,
                           double maxValue,     // value assigned to pixels above their local threshold
                           int adaptiveMethod,  // ADAPTIVE_THRESH_MEAN_C (neighbourhood mean) or ADAPTIVE_THRESH_GAUSSIAN_C (Gaussian-weighted mean)
                           int thresholdType,   // only THRESH_BINARY or THRESH_BINARY_INV
                           int blockSize,       // size of the neighbourhood: odd, e.g. 3, 5, 7
                           double C)            // constant subtracted from the (weighted) mean; may be positive or negative
```

```cpp
#include <iostream>
#include <opencv2/opencv.hpp>

using namespace std;
using namespace cv;

int main()
{
    Mat img = imread("lena.png");
    Mat img_Thr = imread("threshold.png", IMREAD_GRAYSCALE);
    if (img.empty() || img_Thr.empty())
    {
        cout << "Please confirm whether the image file names are correct" << endl;
        return -1;
    }
    Mat gray;
    cvtColor(img, gray, COLOR_BGR2GRAY);
    Mat img_B, img_B_V, gray_B, gray_B_V, gray_T, gray_T_V, gray_TRUNC;

    // Binarization of a color image (each channel is thresholded separately)
    threshold(img, img_B, 125, 255, THRESH_BINARY);
    threshold(img, img_B_V, 125, 255, THRESH_BINARY_INV);
    imshow("img_B", img_B);
    imshow("img_B_V", img_B_V);

    // BINARY binarization of the gray image
    threshold(gray, gray_B, 125, 255, THRESH_BINARY);
    threshold(gray, gray_B_V, 125, 255, THRESH_BINARY_INV);
    imshow("gray_B", gray_B);
    imshow("gray_B_V", gray_B_V);

    // TOZERO transform of the gray image
    threshold(gray, gray_T, 125, 255, THRESH_TOZERO);
    threshold(gray, gray_T_V, 125, 255, THRESH_TOZERO_INV);
    imshow("gray_T", gray_T);
    imshow("gray_T_V", gray_T_V);

    // TRUNC transform of the gray image
    threshold(gray, gray_TRUNC, 125, 255, THRESH_TRUNC);
    imshow("gray_TRUNC", gray_TRUNC);

    // Otsu and triangle methods: the thresh arguments (100, 125) are ignored
    Mat img_Thr_O, img_Thr_T;
    double tOtsu = threshold(img_Thr, img_Thr_O, 100, 255, THRESH_BINARY | THRESH_OTSU);
    double tTri = threshold(img_Thr, img_Thr_T, 125, 255, THRESH_BINARY | THRESH_TRIANGLE);
    cout << "Otsu threshold: " << tOtsu << ", triangle threshold: " << tTri << endl;
    imshow("img_Thr", img_Thr);
    imshow("img_Thr_O", img_Thr_O);
    imshow("img_Thr_T", img_Thr_T);

    // Adaptive binarization of the gray image
    Mat adaptive_mean, adaptive_gauss;
    adaptiveThreshold(img_Thr, adaptive_mean, 255, ADAPTIVE_THRESH_MEAN_C, THRESH_BINARY, 55, 0);
    adaptiveThreshold(img_Thr, adaptive_gauss, 255, ADAPTIVE_THRESH_GAUSSIAN_C, THRESH_BINARY, 55, 0);
    imshow("adaptive_mean", adaptive_mean);
    imshow("adaptive_gauss", adaptive_gauss);
    waitKey(0);
    return 0;
}
```

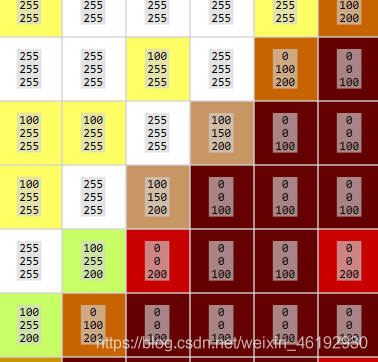

LUT (lookup table)

The threshold methods described above compare each pixel with a single threshold. To map gray values using several thresholds, use a lookup table (LUT): a table indexed by the original gray value whose entry is the new value for that gray level.

```cpp
void cv::LUT(InputArray src,   // input image with 8-bit elements, any number of channels
             InputArray lut,   // 1 x 256 lookup table; single-channel (same table for every channel) or with as many channels as src
             OutputArray dst)  // same size and number of channels as src, same depth as lut
```

```cpp
#include <iostream>
#include <opencv2/opencv.hpp>
#include <vector>

using namespace std;
using namespace cv;

int main()
{
    // First lookup table: 3 levels
    uchar lutFirst[256];
    for (int i = 0; i < 256; i++)
    {
        if (i <= 100)
            lutFirst[i] = 0;
        else if (i <= 200)  // gray value i of the original image is mapped to the value we specify
            lutFirst[i] = 100;
        else
            lutFirst[i] = 255;
    }
    Mat lutOne(1, 256, CV_8UC1, lutFirst);

    // Second lookup table: 4 levels
    uchar lutSecond[256];
    for (int i = 0; i < 256; i++)
    {
        if (i <= 100)
            lutSecond[i] = 0;
        else if (i <= 150)
            lutSecond[i] = 100;
        else if (i <= 200)
            lutSecond[i] = 150;
        else
            lutSecond[i] = 255;
    }
    Mat lutTwo(1, 256, CV_8UC1, lutSecond);

    // Third lookup table: 3 levels with different output values
    uchar lutThird[256];
    for (int i = 0; i < 256; i++)
    {
        if (i <= 100)
            lutThird[i] = 100;
        else if (i <= 200)
            lutThird[i] = 200;
        else
            lutThird[i] = 255;
    }
    Mat lutThree(1, 256, CV_8UC1, lutThird);

    // Three-channel lookup table: one table per channel
    vector<Mat> mergeMats;
    mergeMats.push_back(lutOne);
    mergeMats.push_back(lutTwo);
    mergeMats.push_back(lutThree);
    Mat lutMerged;
    merge(mergeMats, lutMerged);

    // Apply the lookup tables to the image
    Mat img = imread("lena.png");
    if (img.empty())
    {
        cout << "Please confirm whether the image file name is correct" << endl;
        return -1;
    }
    Mat gray, out0, out1, out2;
    cvtColor(img, gray, COLOR_BGR2GRAY);
    LUT(gray, lutOne, out0);    // gray image, single-channel table
    LUT(img, lutOne, out1);     // color image, the same table for every channel
    LUT(img, lutMerged, out2);  // color image, a separate table per channel
    imshow("out0", out0);
    imshow("out1", out1);
    imshow("out2", out2);
    waitKey(0);
    return 0;
}
```

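As the output shows, every pixel is mapped to the value specified in the table: out1 applies the single-channel table to all three channels of the color image, while out2 applies a separate table to each channel.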

Summary

| Function | Purpose |
|---|---|
| cvtColor | Color space conversion |
| convertTo | Data type conversion with optional scaling and offset |
| saturate_cast<type>() | Round and clip a value to the range of the target type |
| split | Split a multi-channel image into channels |
| merge | Merge channels into one image |
| minMaxLoc | Minimum and maximum pixel values and their positions |
| mean | Mean of each channel |
| meanStdDev | Mean and standard deviation of each channel |
| max/min | Element-wise larger/smaller value of two images |
| bitwise_and/or/xor/not | Bitwise logical operations on pixel values |
| threshold | Binarization with a global threshold |
| adaptiveThreshold | Binarization with locally adaptive thresholds |
| LUT | Gray value mapping through a lookup table (multiple thresholds) |
