Brief introduction

OpenCV's "findContours" function is widely used by computer vision engineers to detect objects. With OpenCV, detecting contours (objects) takes only a few lines of code. However, the detected contours are often fragmented. For example, a feature-rich image may yield hundreds or even thousands of contours, but that does not mean the image contains that many objects. Contours belonging to the same object are often detected separately, so we want to group them so that one contour corresponds to one object.

Implementation approach

When I ran into this problem in a project, I spent a lot of time trying different parameters and different OpenCV functions for contour detection, but none of them worked. After more research, I found a post on the OpenCV forum that mentioned agglomerative clustering, but it did not include source code. I also found that sklearn supports agglomerative clustering, but I did not use it for two reasons:

- The function seemed complicated to me. I did not know how to choose the right parameters, and I was not sure whether the contour data produced by OpenCV is a suitable input for it.

- I needed to use Python 2.7, OpenCV 3.3.1, and NumPy 1.11.3, which are not compatible with the sklearn versions (0.20+) that support agglomerative clustering.

Source code

To share the functions I wrote, I have open-sourced them on GitHub and post them below. This version targets Python 3. To use it with Python 2.7, simply change "range" to "xrange".

    #!/usr/bin/env python3

    import cv2
    import numpy


    def calculate_contour_distance(contour1, contour2):
        # Distance between the two bounding boxes: the larger of the
        # horizontal and vertical gaps (negative if the boxes overlap).
        x1, y1, w1, h1 = cv2.boundingRect(contour1)
        c_x1 = x1 + w1/2
        c_y1 = y1 + h1/2

        x2, y2, w2, h2 = cv2.boundingRect(contour2)
        c_x2 = x2 + w2/2
        c_y2 = y2 + h2/2

        return max(abs(c_x1 - c_x2) - (w1 + w2)/2, abs(c_y1 - c_y2) - (h1 + h2)/2)


    def merge_contours(contour1, contour2):
        # Each contour is an array of points, so merging is concatenation.
        return numpy.concatenate((contour1, contour2), axis=0)


    def agglomerative_cluster(contours, threshold_distance=40.0):
        # Work on a list copy: the input may be a tuple (which does not
        # support "del"), and the caller's sequence stays untouched.
        current_contours = list(contours)
        while len(current_contours) > 1:
            min_distance = None
            min_coordinate = None

            # Find the closest pair of contours.
            for x in range(len(current_contours)-1):
                for y in range(x+1, len(current_contours)):
                    distance = calculate_contour_distance(current_contours[x], current_contours[y])
                    if min_distance is None or distance < min_distance:
                        min_distance = distance
                        min_coordinate = (x, y)

            # Merge the closest pair, or stop once it is too far apart.
            if min_distance < threshold_distance:
                index1, index2 = min_coordinate
                current_contours[index1] = merge_contours(current_contours[index1], current_contours[index2])
                del current_contours[index2]
            else:
                break

        return current_contours

Notes:

- The "calculate_contour_distance" function takes the bounding boxes of the two contours and computes the distance between the two rectangles.

- For the "merge_contours" function, "numpy.concatenate" is all we need, because each contour is just a NumPy array of points.

- With agglomerative clustering, we do not need to know the number of clusters in advance. Instead, we pass a threshold distance, such as 40 pixels, to the function; it stops merging once the smallest distance between any two contours is no longer below the threshold.
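
To make the rectangle distance and the threshold from the notes above concrete, here is a small worked example. It is only a sketch: "box_gap" is a hypothetical helper that applies the same formula as "calculate_contour_distance" to plain (x, y, w, h) tuples, so it runs without OpenCV, and the box coordinates are made up for illustration.

```python
def box_gap(box1, box2):
    """Gap between two axis-aligned boxes given as (x, y, w, h).

    Hypothetical helper: same formula as calculate_contour_distance,
    but applied to plain tuples instead of contours. The result is
    negative when the boxes overlap along both axes.
    """
    x1, y1, w1, h1 = box1
    x2, y2, w2, h2 = box2
    gap_x = abs((x1 + w1 / 2) - (x2 + w2 / 2)) - (w1 + w2) / 2
    gap_y = abs((y1 + h1 / 2) - (y2 + h2 / 2)) - (h1 + h2) / 2
    return max(gap_x, gap_y)


threshold_distance = 40.0  # same default as agglomerative_cluster

box_a = (0, 0, 10, 10)
box_b = (15, 0, 10, 10)     # 5 pixels to the right of box_a
box_c = (100, 100, 10, 10)  # far away from both
box_d = (5, 5, 10, 10)      # overlaps box_a

print(box_gap(box_a, box_b))  # 5.0  -> below the threshold, would be merged
print(box_gap(box_a, box_c))  # 90.0 -> above the threshold, stays separate
print(box_gap(box_a, box_d))  # -5.0 -> overlapping boxes give a negative gap
```

Note that the value is the larger of the horizontal and vertical gaps rather than a Euclidean distance, so two boxes count as close only when they are close along both axes.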

Result

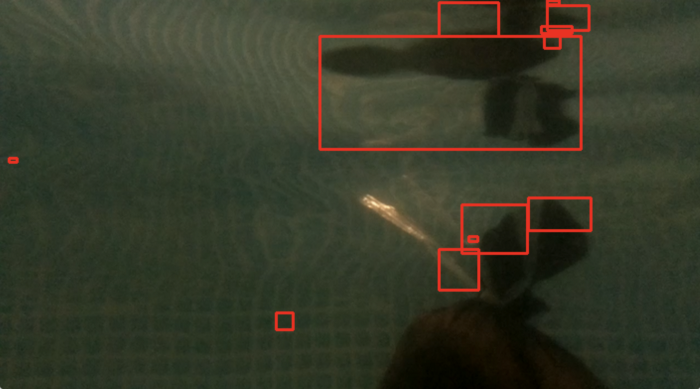

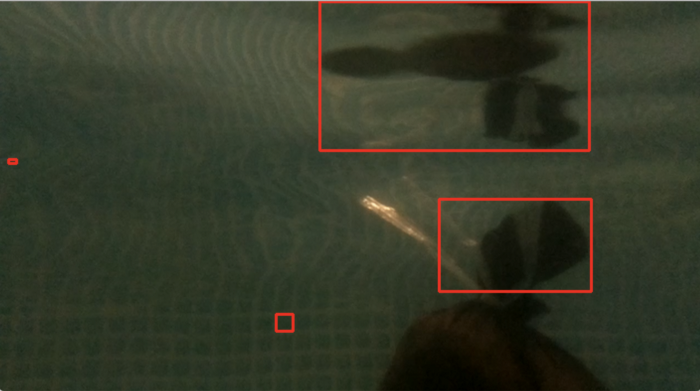

The two images above visualize the clustering effect. The first image shows the 12 contours detected initially; the second shows that only 4 contours remain after clustering. The two small contours are caused by noise; they are not merged because they are farther away than the threshold distance.

GitHub code link:

https://github.com/CullenSUN/fish_vision/blob/master/obstacle_detector_node/src/opencv_utils.py