| Reading guide | This article explains in detail how separable filtering is implemented in OpenCV. The example code is worked through step by step and should be a useful reference for readers working on image filtering. |

Custom filtering

Whether the operation is image convolution or filtering, each output value computed as the filter moves across the original image depends only on the input pixels, not on output values computed earlier or later. Image filtering is therefore a naturally parallel algorithm, and processors that support parallel computation can speed it up considerably.
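To make this independence concrete, here is a minimal sketch in plain C++ (no OpenCV). The names `Image`, `filterAt` and `filterInOrder` are hypothetical, and pixels outside the image are assumed to be 0. Because each output pixel only reads the input, the rows can be computed in any order, or by different workers, and the result is the same.

```cpp
#include <cassert>
#include <vector>

// Hypothetical minimal image type: a row-major grid of floats.
using Image = std::vector<std::vector<float>>;

// Compute ONE output pixel of a correlation with a square, odd-sized kernel.
// Only `src` is read, so the result never depends on other output pixels.
// Assumption: pixels outside the image are treated as 0.
float filterAt(const Image& src, const Image& kernel, int r, int c) {
    assert(kernel.size() % 2 == 1 && kernel.size() == kernel[0].size());
    const int rows = static_cast<int>(src.size());
    const int cols = static_cast<int>(src[0].size());
    const int half = static_cast<int>(kernel.size()) / 2;
    float sum = 0.0f;
    for (int i = -half; i <= half; ++i) {
        for (int j = -half; j <= half; ++j) {
            const int rr = r + i, cc = c + j;
            if (rr >= 0 && rr < rows && cc >= 0 && cc < cols)
                sum += kernel[i + half][j + half] * src[rr][cc];
        }
    }
    return sum;
}

// Fill the output row by row in the order given by `rowOrder`.
// A parallel implementation would hand different rows to different workers.
Image filterInOrder(const Image& src, const Image& kernel,
                    const std::vector<int>& rowOrder) {
    const int cols = static_cast<int>(src[0].size());
    Image dst(src.size(), std::vector<float>(cols, 0.0f));
    for (int r : rowOrder)
        for (int c = 0; c < cols; ++c)
            dst[r][c] = filterAt(src, kernel, r, c);
    return dst;
}
```

Calling `filterInOrder` with the row order `{0, 1, 2}` and with `{2, 1, 0}` on the same 3×3 image produces identical outputs, which is the property a parallel implementation relies on.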

Image filtering can also be separable

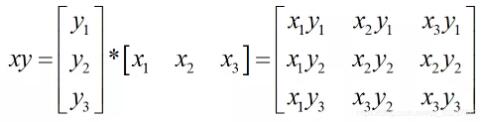

Filtering in the X direction and then in the Y direction (or in the opposite order) gives the same result as filtering once with the combined two-dimensional filter. The combined filter is obtained by multiplying the two one-dimensional filters, that is, the column vector for the Y direction times the row vector for the X direction, which yields a rectangular filter.
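The equivalence can be checked with a small sketch in plain C++ (no OpenCV). The helper names `outerProduct`, `filter2d` and `filterSeparable` are hypothetical, and the sketch assumes odd kernel sizes, a centered anchor and zero padding. For a K×K kernel, the combined filter needs K×K multiplications per pixel, while the two one-dimensional passes need only 2K.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<float>;
using Image = std::vector<Vec>;

// Outer product ky * kx^T: the 2-D kernel equivalent to the two 1-D filters.
Image outerProduct(const Vec& ky, const Vec& kx) {
    Image k(ky.size(), Vec(kx.size()));
    for (std::size_t i = 0; i < ky.size(); ++i)
        for (std::size_t j = 0; j < kx.size(); ++j)
            k[i][j] = ky[i] * kx[j];
    return k;
}

// Correlate src with a 2-D kernel.
// Assumptions: odd kernel sizes, centered anchor, pixels outside src are 0.
Image filter2d(const Image& src, const Image& k) {
    assert(k.size() % 2 == 1 && k[0].size() % 2 == 1);
    const int rows = static_cast<int>(src.size());
    const int cols = static_cast<int>(src[0].size());
    const int hr = static_cast<int>(k.size()) / 2;
    const int hc = static_cast<int>(k[0].size()) / 2;
    Image dst(rows, Vec(cols, 0.0f));
    for (int r = 0; r < rows; ++r)
        for (int c = 0; c < cols; ++c)
            for (int i = -hr; i <= hr; ++i)
                for (int j = -hc; j <= hc; ++j) {
                    const int rr = r + i, cc = c + j;
                    if (rr >= 0 && rr < rows && cc >= 0 && cc < cols)
                        dst[r][c] += k[i + hr][j + hc] * src[rr][cc];
                }
    return dst;
}

// Two 1-D passes: along the rows with kx, then along the columns with ky.
Image filterSeparable(const Image& src, const Vec& kx, const Vec& ky) {
    Image rowPass = filter2d(src, Image{kx});        // 1 x N kernel
    Image colKernel;
    for (float v : ky) colKernel.push_back(Vec{v});  // M x 1 kernel
    return filter2d(rowPass, colKernel);
}
```

With the kernel (-1, 3, -1) in both directions and a 5×5 image holding the values 1 to 25, `filter2d(src, outerProduct(k, k))` and `filterSeparable(src, k, k)` return the same matrix.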

    void filter2D(InputArray src, OutputArray dst, int ddepth,
                  InputArray kernel, Point anchor = Point(-1,-1),
                  double delta = 0, int borderType = BORDER_DEFAULT);

- src: image to be filtered.

- dst: output image with the same size and number of channels as the input image src; its data type is determined by ddepth.

- ddepth: the data type (depth) of the output image. The allowed values depend on the data type of the input image; the specific ranges are given in Table 5-1. When the value is -1, the output image has the same depth as the input image.

- kernel: the filter, a single-channel floating-point matrix.

- anchor: the reference point (anchor) of the kernel. Its default value is (-1, -1), which places the anchor at the center of the kernel. The anchor is the point in the kernel that coincides with the pixel being processed, and it must lie inside the kernel.

- delta: offset that is added to each filtered value before it is stored in dst.

- borderType: pixel extrapolation flag; the available values are given in Table 3-5. The default is BORDER_DEFAULT, which pads by reflecting the image at the border without repeating the edge pixel.
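The default border behavior can be illustrated with a small index-mapping sketch. `reflect101` is a hypothetical helper written for this illustration; OpenCV exposes comparable functionality through `cv::borderInterpolate`. The sketch assumes the reflect-without-repeating pattern `gfedcb|abcdefgh|gfedcba`, which is what BORDER_DEFAULT selects.

```cpp
#include <cassert>

// Map a possibly out-of-range coordinate p into [0, len) by reflecting
// around the first and last samples without repeating them,
// i.e. the pattern gfedcb|abcdefgh|gfedcba.
int reflect101(int p, int len) {
    assert(len > 0);
    if (len == 1) return 0;  // a single sample is the only possible source
    while (p < 0 || p >= len) {
        if (p < 0)
            p = -p;                 // reflect around index 0
        else
            p = 2 * (len - 1) - p;  // reflect around index len - 1
    }
    return p;
}
```

For a row of 8 pixels, coordinate -1 maps to index 1 and coordinate 8 maps to index 6, so the edge pixels at indices 0 and 7 are not duplicated in the padding.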

    void sepFilter2D(InputArray src, OutputArray dst, int ddepth,
                     InputArray kernelX, InputArray kernelY,
                     Point anchor = Point(-1,-1),
                     double delta = 0, int borderType = BORDER_DEFAULT);

- src: image to be filtered.

- dst: output image with the same size and number of channels as the input image src; its data type is determined by ddepth.

- ddepth: the data type (depth) of the output image. The allowed values depend on the data type of the input image; the specific ranges are given in Table 5-1. When the value is -1, the output image has the same depth as the input image.

- kernelX: filter for the X direction, applied along each row.

- kernelY: filter for the Y direction, applied along each column.

- anchor: the reference point (anchor) of the kernel. Its default value is (-1, -1), which places the anchor at the center of the kernel. The anchor is the point in the kernel that coincides with the pixel being processed, and it must lie inside the kernel.

- delta: offset that is added to each filtered value before it is stored in dst.

- borderType: pixel extrapolation flag; the available values are given in Table 3-5. The default is BORDER_DEFAULT, which pads by reflecting the image at the border without repeating the edge pixel.
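The roles of anchor and delta are easiest to see in one dimension. The following sketch uses a hypothetical helper `filter1d` that computes `dst[i] = delta + sum_j kernel[j] * src[i + j - anchor]`, which is a one-dimensional analogue of the formula in the OpenCV documentation for `filter2D`. It assumes that samples outside the input are 0, and that a negative anchor means "use the kernel center", mirroring `Point(-1,-1)`.

```cpp
#include <cassert>
#include <vector>

// 1-D correlation with an explicit anchor and delta:
//   dst[i] = delta + sum_j kernel[j] * src[i + j - anchor]
// A negative anchor selects the kernel center, mirroring Point(-1,-1).
// Assumption: samples outside src are treated as 0.
std::vector<float> filter1d(const std::vector<float>& src,
                            const std::vector<float>& kernel,
                            int anchor = -1, float delta = 0.0f) {
    const int n = static_cast<int>(src.size());
    const int k = static_cast<int>(kernel.size());
    if (anchor < 0) anchor = k / 2;
    assert(anchor < k);  // the anchor must lie inside the kernel
    std::vector<float> dst(n, delta);  // start every output at the offset
    for (int i = 0; i < n; ++i)
        for (int j = 0; j < k; ++j) {
            const int s = i + j - anchor;  // input sample under kernel[j]
            if (s >= 0 && s < n) dst[i] += kernel[j] * src[s];
        }
    return dst;
}
```

For the input (1, 2, 3, 4, 5) and the kernel (-1, 3, -1), the centered anchor aligns the kernel's middle coefficient with the pixel being processed, while `anchor = 0` aligns the first coefficient with it, so the kernel covers the pixel and its two right-hand neighbors instead.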

Simple example

    //
    // Created by smallflyfly on 2021/6/15.
    //

    #include "opencv2/highgui.hpp"
    #include "opencv2/opencv.hpp"
    #include <iostream>

    using namespace std;
    using namespace cv;

    int main() {
        float points[] = {
            1, 2, 3, 4, 5,
            6, 7, 8, 9, 10,
            11, 12, 13, 14, 15,
            16, 17, 18, 19, 20,
            21, 22, 23, 24, 25
        };
        Mat data(5, 5, CV_32FC1, points);

        // Verify that the Gaussian filter is separable
        Mat gaussX = getGaussianKernel(3, 1);
        cout << gaussX << endl;
        Mat gaussDstData, gaussDataXY;
        GaussianBlur(data, gaussDstData, Size(3, 3), 1, 1, BORDER_CONSTANT);
        sepFilter2D(data, gaussDataXY, -1, gaussX, gaussX, Point(-1, -1), 0, BORDER_CONSTANT);
        // The two matrices printed below should match
        cout << gaussDstData << endl;
        cout << gaussDataXY << endl;
        cout << "######################################" << endl;

        // Filtering in Y direction (3x1 column vector)
        Mat a = (Mat_<float>(3, 1) << -1, 3, -1);
        // Filtering in X direction (1x3 row vector with the same coefficients)
        Mat b = a.reshape(1, 1);
        // XY joint filtering (3x3 product of the column and row vectors)
        Mat ab = a * b;

        Mat dataX, dataXY1, dataXY2, dataSepXY;
        filter2D(data, dataX, -1, b);            // X direction first
        filter2D(dataX, dataXY1, -1, a);         // then Y direction
        filter2D(data, dataXY2, -1, ab);         // combined filter in one pass
        sepFilter2D(data, dataSepXY, -1, b, a);  // kernelX = b, kernelY = a

        // Verification results: the three matrices should match
        cout << dataXY1 << endl;
        cout << dataXY2 << endl;
        cout << dataSepXY << endl;

        // Repeat the comparison on a real image
        Mat im = imread("test.jpg");
        if (im.empty()) {
            cerr << "Could not read test.jpg" << endl;
            return -1;
        }
        resize(im, im, Size(0, 0), 0.5, 0.5);

        Mat imX, imXY, imSepXY;
        filter2D(im, imX, -1, b);
        filter2D(imX, imXY, -1, a);
        sepFilter2D(im, imSepXY, -1, b, a);

        imshow("imXY", imXY);
        imshow("imSepXY", imSepXY);
        waitKey(0);
        destroyAllWindows();

        return 0;
    }