Goal

In this chapter, we will:

- Combine feature matching with findHomography() from the calib3d module to find known objects in a complex image.

Basics

In the previous section, we took a query image, found some feature points in it, took another train image, found the features in that image as well, and found the best matches between them. In short, we located some parts of an object in another, cluttered image. This information is sufficient to find the object exactly in the train image.

To do this, we can use cv2.findHomography() from the calib3d module. If we pass it the sets of matching points from both images, it finds the perspective transformation (homography) of the object. We can then use cv2.perspectiveTransform() to locate the object. At least four correct point pairs are needed to find the transformation.
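The four-point minimum follows from the fact that a homography is a 3x3 matrix defined only up to scale. That leaves 8 unknowns, and each point pair contributes two linear equations. The NumPy sketch below illustrates this with the direct linear transform (DLT). It is an illustration under that assumption, not OpenCV's internal implementation. The helpers `homography_from_points` and `apply_homography` are hypothetical names, and the matrix `H_true` is made-up test data.

```python
import numpy as np

def homography_from_points(src, dst):
    """Hypothetical helper: estimate H (3x3) with dst ~ H @ src via DLT."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    if len(src) < 4 or len(src) != len(dst):
        raise ValueError("need at least 4 matching point pairs")
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.array(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fix the arbitrary scale so that H[2, 2] == 1

def apply_homography(H, pts):
    """Hypothetical helper: map (N, 2) points through H, like cv2.perspectiveTransform."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]  # divide by the homogeneous coordinate

# Four corners of a 100x100 square and a made-up "true" homography.
src = np.array([[0, 0], [0, 99], [99, 99], [99, 0]], dtype=np.float64)
H_true = np.array([[1.2, 0.1, 30.0], [0.05, 0.9, 10.0], [5e-4, 2e-4, 1.0]])
dst = apply_homography(H_true, src)

# Exactly four pairs in general position are enough to recover H.
H_est = homography_from_points(src, dst)
print(np.allclose(H_est, H_true))
```

With fewer than four pairs, the linear system is underdetermined, so the helper rejects the input.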

As seen in the previous section, matching can produce some wrong correspondences, and these would distort the result. To handle this, the algorithm uses RANSAC or LEAST_MEDIAN (selected with a flag such as cv2.RANSAC or cv2.LMEDS). Good matches that agree with the correct estimate are called inliers, and the rest are called outliers. cv2.findHomography() returns a mask that marks each point pair as an inlier or an outlier.
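The inlier/outlier idea behind RANSAC can be shown with a deliberately simplified NumPy sketch. It repeatedly fits a homography to four random correspondences and counts how many pairs reproject within a pixel threshold. It keeps the hypothesis with the most inliers and refits on those inliers. The returned 0/1 array plays the role of the mask from cv2.findHomography().

This is an illustration under assumptions (fixed iteration count, noise-free synthetic data), not OpenCV's implementation. `ransac_homography` and its parameters are hypothetical. The default `thresh=5.0` simply mirrors the reprojection threshold passed to cv2.findHomography() in the tutorial code.

```python
import numpy as np

def fit_homography(src, dst):
    """DLT fit of H (3x3, H[2, 2] == 1) with dst ~ H @ src from >= 4 pairs."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.array(rows, dtype=np.float64))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]

def project(H, pts):
    """Apply H to (N, 2) points using homogeneous coordinates."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, thresh=5.0, iters=500, seed=0):
    """Hypothetical helper: return (H, mask); mask[i] == 1 marks pair i as an inlier."""
    rng = np.random.default_rng(seed)
    best_mask = np.zeros(len(src), dtype=np.uint8)
    for _ in range(iters):
        sample = rng.choice(len(src), size=4, replace=False)
        with np.errstate(divide="ignore", invalid="ignore"):
            H = fit_homography(src[sample], dst[sample])
            # Reprojection error of every pair under this 4-point hypothesis
            err = np.linalg.norm(project(H, src) - dst, axis=1)
        mask = (err < thresh).astype(np.uint8)  # NaN/inf errors count as outliers
        if mask.sum() > best_mask.sum():
            best_mask = mask
    if best_mask.sum() < 4:
        return None, best_mask
    # Refit on all inliers of the best hypothesis
    inliers = best_mask.astype(bool)
    return fit_homography(src[inliers], dst[inliers]), best_mask

# Synthetic data: 40 exact correspondences, then 10 of them corrupted into outliers.
rng = np.random.default_rng(42)
H_true = np.array([[1.1, 0.05, 20.0], [-0.03, 0.95, 15.0], [1e-4, 5e-5, 1.0]])
src = rng.uniform(0, 200, size=(40, 2))
dst = project(H_true, src)
dst[:10] += rng.uniform(30, 60, size=(10, 2))

H_est, mask = ransac_homography(src, dst)
print("outliers kept:", mask[:10].sum(), "inliers kept:", mask[10:].sum())
```

A least-squares fit on all 40 pairs would be pulled off by the 10 corrupted ones. RANSAC instead lets the consistent majority decide.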

Code

First, as usual, find SIFT features in both images and apply the ratio test to find the best matches.
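The ratio test keeps a match only when its nearest neighbour is clearly closer than the second-nearest one. The NumPy sketch below does a brute-force equivalent of `knnMatch(k=2)` followed by the test. It assumes Euclidean (L2) distance, as used for SIFT descriptors. `ratio_test_matches` is a hypothetical helper that returns (query index, train index) pairs rather than OpenCV `DMatch` objects. The toy descriptors are made up.

```python
import numpy as np

def ratio_test_matches(des1, des2, ratio=0.7):
    """Hypothetical helper: (query_idx, train_idx) pairs passing Lowe's ratio test."""
    des1 = np.asarray(des1, dtype=np.float32)
    des2 = np.asarray(des2, dtype=np.float32)
    # Pairwise L2 distances, shape (len(des1), len(des2)); needs len(des2) >= 2.
    dists = np.linalg.norm(des1[:, None, :] - des2[None, :, :], axis=2)
    good = []
    for qi, row in enumerate(dists):
        nearest, second = np.argsort(row)[:2]
        # Keep the match only if it is clearly better than the runner-up.
        if row[nearest] < ratio * row[second]:
            good.append((qi, int(nearest)))
    return good

# Toy 2-D "descriptors": query 0 has one clear partner, while query 1 is
# equally close to two train descriptors (ambiguous), so it is rejected.
des2 = np.array([[0, 0], [10, 0], [10.5, 0], [0, 10]], dtype=np.float32)
des1 = np.array([[0.1, 0], [10.25, 0]], dtype=np.float32)
print(ratio_test_matches(des1, des2))
```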

Now set a condition that at least 10 matches (defined by MIN_MATCH_COUNT) must be present before we try to find the object. Otherwise, simply print a message saying that not enough matches were found.

If enough matches are found, we extract the locations of the matched keypoints in both images and pass them to cv2.findHomography() to find the perspective transformation. Once we have this 3x3 transformation matrix, we use it to transform the corners of the query image to the corresponding points in the train image, and then draw them.
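Projecting the corners means multiplying their homogeneous coordinates by the 3x3 matrix and dividing by the last component. That is what cv2.perspectiveTransform() computes for each point. The NumPy sketch below uses a made-up matrix `M` (an assumption for illustration: scale by 0.5, then shift by (100, 50)). It shows where the four corners of a w x h query image land in the train image. `project_corners` is a hypothetical helper.

```python
import numpy as np

def project_corners(M, w, h):
    """Hypothetical helper: map the four corners of a w x h image through M."""
    # Same corner order as the tutorial: top-left, bottom-left, bottom-right, top-right.
    corners = np.float32([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]])
    homog = np.hstack([corners, np.ones((4, 1), dtype=np.float32)]) @ M.T
    return homog[:, :2] / homog[:, 2:3]

# Made-up homography: scale by 0.5, then translate by (100, 50).
M = np.array([[0.5, 0.0, 100.0], [0.0, 0.5, 50.0], [0.0, 0.0, 1.0]])
print(project_corners(M, w=201, h=101))
```

In the tutorial code, the projected corners are cast with np.int32 so that cv2.polylines can draw them as a closed outline.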

```python
import cv2
import numpy as np
from matplotlib import pyplot as plt

MIN_MATCH_COUNT = 10

img1 = cv2.imread('box2.png', cv2.IMREAD_GRAYSCALE)          # query image
img2 = cv2.imread('box_in_scene.png', cv2.IMREAD_GRAYSCALE)  # train image

# Initiate SIFT detector (OpenCV >= 4.4; older builds use cv2.xfeatures2d.SIFT_create())
sift = cv2.SIFT_create()

# Find the keypoints and descriptors with SIFT
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# FLANN matcher with a KD-tree index, suited to SIFT's float descriptors
FLANN_INDEX_KDTREE = 1
index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
search_params = dict(checks=50)
flann = cv2.FlannBasedMatcher(index_params, search_params)
matches = flann.knnMatch(des1, des2, k=2)

# Store all the good matches as per Lowe's ratio test
good = []
for m, n in matches:
    if m.distance < 0.7 * n.distance:
        good.append(m)

if len(good) >= MIN_MATCH_COUNT:
    # Coordinates of the matched keypoints, shaped (N, 1, 2) as OpenCV expects
    src_pts = np.float32([kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)
    dst_pts = np.float32([kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)

    # Robustly estimate the homography; 5.0 is the RANSAC reprojection threshold in pixels
    M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)
    matchesMask = mask.ravel().tolist()  # 1 = inlier, 0 = outlier

    # Project the corners of the query image into the train image and outline them
    h, w = img1.shape
    pts = np.float32([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]]).reshape(-1, 1, 2)
    dst = cv2.perspectiveTransform(pts, M)
    img2 = cv2.polylines(img2, [np.int32(dst)], True, 255, 3, cv2.LINE_AA)
else:
    print("Not enough matches are found - {}/{}".format(len(good), MIN_MATCH_COUNT))
    matchesMask = None

draw_params = dict(matchColor=(0, 255, 0),   # draw matches in green
                   singlePointColor=None,
                   matchesMask=matchesMask,  # draw only inliers
                   flags=2)                  # cv2.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS

img3 = cv2.drawMatches(img1, kp1, img2, kp2, good, None, **draw_params)
plt.imshow(img3, 'gray')
plt.show()
```

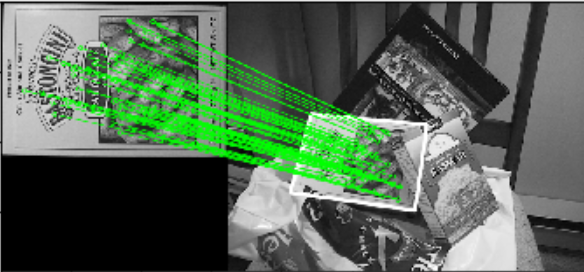

The result is shown above: the matched object is outlined in white in the cluttered image.

Additional resources

- https://docs.opencv.org/4.1.2/d9/d0c/group__calib3d.html#ga4abc2ece9fab9398f2e560d53c8c9780

- https://docs.opencv.org/4.1.2/d1/de0/tutorial_py_feature_homography.html

- https://blog.csdn.net/liubing8609/article/details/85340015

- https://docs.opencv.org/4.1.2/d2/de8/group__core__array.html#gad327659ac03e5fd6894b90025e6900a7

- cv2.findHomography()