After Deploying a PaddleX Model in C++: Calling the DLL from Python and Reading the Returned Data


Preface

The previous post covered deploying PaddleX models in C++. This post shows how to call the generated DLL from Python to run inference and how to read the prediction results that the DLL returns. Classification, detection, semantic segmentation, and instance segmentation are all covered.

1, Predefined C++ data types for the prediction results

1. Prediction results of the four models

Because the detection, semantic segmentation, and instance segmentation results are complex, every result field other than a plain int or float (that is, anything that contains structures, arrays, or lists) is packed into a PyObject and passed to Python.

Classification result: a category, a category ID, and a score, of types str, int, and float respectively

Detection result: the category, category ID, score, and bbox [xmin, ymin, w, h] of every detected object

Semantic segmentation result: the label map and the score map

Instance segmentation result: the category ID, category, score, bbox, and mask of every instance

Predefined C++ result structures

Structures defined in paddlex.h

Classification results

typedef struct classi {
    char* cate;
    int cate_id;
    float score;
} Classi;

Detection result: all fields are converted to Python lists, and the bbox xmin, ymin, w, and h are split into four separate lists

typedef struct _DETECT {
    PyObject* cate;
    PyObject* cate_id;
    PyObject* score;
    PyObject* xmin;
    PyObject* ymin;
    PyObject* w;
    PyObject* h;
} DETECT;

Semantic segmentation result: besides label and score, the structure carries label_size and score_size

typedef struct _SemSeg {
    int label_size;
    int score_size;
    PyObject* label;
    PyObject* score;
} SemSeg;

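Instance segmentation result: as with detection, the bbox xmin, ymin, w, and h are split into four lists; InsSegMask holds all masks concatenated into one list, mask_len holds the length of each mask, and boxes_num is the number of instances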

typedef struct _InsSeg {
    PyObject* cate;
    PyObject* cate_id;
    PyObject* score;
    PyObject* xmin;
    PyObject* ymin;
    PyObject* w;
    PyObject* h;
    PyObject* InsSegMask;
    PyObject* mask_len;
    int boxes_num;
} InsSeg;

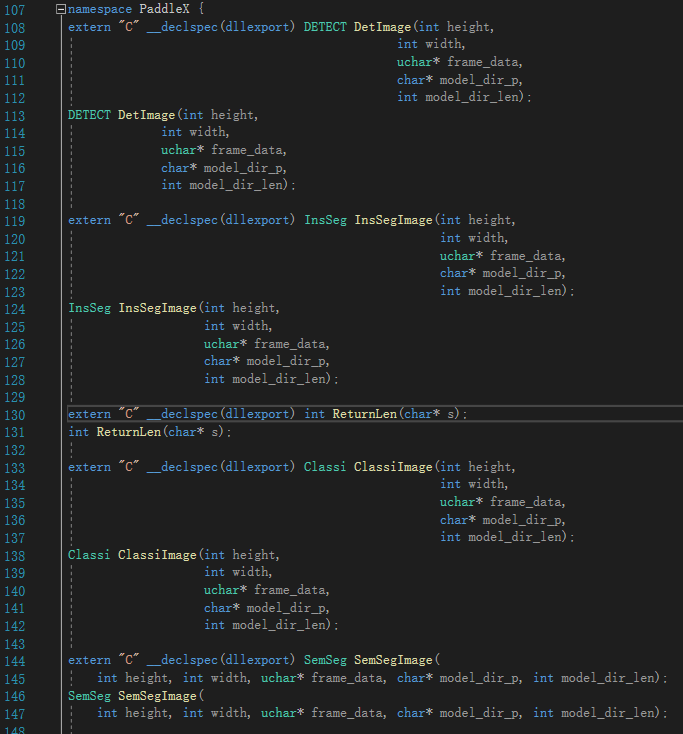

Declaring the exported functions in paddlex.h

2, C++ prediction code

1. Classification C++ prediction code

First, include the Python C API header in the .cpp file:

#include <Python.h>

Classi ClassiImage(int height, int width, uchar* frame_data, char* model_dir_p, int model_dir_len);

Classi ClassiImage(int height,
                   int width,
                   uchar* frame_data,
                   char* model_dir_p,
                   int model_dir_len) {
    // Rebuild the 3-channel image from the flat byte buffer
    int count = 0;
    cv::Mat image(height, width, CV_8UC3);
    uchar* pxvec = image.ptr<uchar>(0);
    for (int row = 0; row < height; row++) {
        pxvec = image.ptr<uchar>(row);
        for (int col = 0; col < width; col++) {
            for (int c = 0; c < 3; c++) {
                pxvec[col * 3 + c] = frame_data[count];
                count++;
            }
        }
    }

    // Rebuild the model path from every second byte of the incoming buffer
    int length = model_dir_len;
    char* aa = new char[length];
    for (int i = 0; i < length; i++) {
        aa[i] = model_dir_p[i * 2];
    }
    std::string model_dir(aa, length);  // explicit length: aa has no terminating '\0'
    delete[] aa;

    PaddleX::ClsResult result;
    std::string key = "";
    std::string image_list;
    int gpu_id = 0;
    bool use_trt = 0;
    bool use_gpu = 1;
    PaddleX::Model model;
    model.Init(model_dir, use_gpu, use_trt, gpu_id, false);
    model.predict(image, &result);

    Classi ret;
    // Static storage keeps the category string valid after the function returns;
    // the next call overwrites it
    static std::string cate_buf;
    cate_buf = result.category;
    ret.cate = const_cast<char*>(cate_buf.c_str());
    ret.cate_id = result.category_id;
    ret.score = result.score;
    return ret;
}

The parameters height, width, frame_data, model_dir_p, and model_dir_len are the height and width of the input image, a pointer to the image pixel data, a pointer to the model path, and the length of the model path string.
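On the Python side, these five parameters can be built with ctypes. The sketch below is an illustration under assumptions, not the author's code: it assumes the pixel data is already a bytes object in row-major BGR order (for an OpenCV image, `img.tobytes()`), and that the path is passed as a wide string, which the `i * 2` stride in the C++ path-restoration code suggests. `make_classi_args` is a hypothetical helper name.

```python
import ctypes


def make_classi_args(height, width, frame_bytes, model_dir):
    """Hypothetical helper: build the five ClassiImage arguments with ctypes."""
    expected = height * width * 3  # CV_8UC3: three bytes per pixel
    if len(frame_bytes) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(frame_bytes)}")
    # uchar* frame_data: copy the pixel bytes into an unsigned-char array;
    # ctypes accepts such an array where POINTER(c_ubyte) is expected
    frame = (ctypes.c_ubyte * expected).from_buffer_copy(frame_bytes)
    # char* model_dir_p: sent as a wide string (assumption based on the
    # C++ side reading every second byte, i.e. a 2-byte wchar_t)
    path = ctypes.c_wchar_p(model_dir)
    return (ctypes.c_int(height), ctypes.c_int(width), frame, path,
            ctypes.c_int(len(model_dir)))
```

The returned tuple can be unpacked straight into the call, e.g. `lib.ClassiImage(*args)`.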

The following code, adapted from another article, restores the image matrix:

// Read the image matrix
int count = 0;
cv::Mat image(height, width, CV_8UC3);
uchar* pxvec = image.ptr<uchar>(0);
for (int row = 0; row < height; row++) {
    pxvec = image.ptr<uchar>(row);
    for (int col = 0; col < width; col++) {
        for (int c = 0; c < 3; c++) {
            pxvec[col * 3 + c] = frame_data[count];
            count++;
        }
    }
}
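The loop above consumes frame_data in row-major order with the three channels of each pixel adjacent, so channel c of pixel (row, col) sits at offset (row * width + col) * 3 + c. The pure-Python sketch below (hypothetical helper names) makes that layout explicit. An OpenCV image read in Python is a C-contiguous height x width x 3 uint8 NumPy array, so `img.tobytes()` already produces this order.

```python
def hwc_offset(row, col, c, width, channels=3):
    """Offset in frame_data of channel c of pixel (row, col)."""
    return (row * width + col) * channels + c


def flatten_hwc(pixels):
    """Flatten rows of per-pixel channel tuples into the byte order the loop reads."""
    return bytes(value for row in pixels for pixel in row for value in pixel)
```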

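The following code restores the model path string. Because aa is not null-terminated, the std::string is constructed with an explicit length:

int length = model_dir_len;
char* aa = new char[length];
for (int i = 0; i < length; i++) {
    aa[i] = model_dir_p[i * 2];
}
std::string model_dir(aa, length);  // explicit length: aa has no terminating '\0'
delete[] aa;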
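The `i * 2` stride only works because of how the path arrives. Assuming Python passes it as a ctypes `c_wchar_p` on Windows, where wchar_t is 2 bytes (UTF-16 little-endian), every second byte is the low byte of a character, which equals the character itself for ASCII. The sketch below (a hypothetical helper) reproduces the loop in Python: ASCII paths survive, while non-ASCII paths such as Chinese directory names are corrupted. On Linux, wchar_t is 4 bytes, so the stride would need to be 4.

```python
def every_second_byte(path):
    """Mimic aa[i] = model_dir_p[i * 2] on the UTF-16-LE bytes of path."""
    raw = path.encode("utf-16-le")   # two bytes per BMP character, low byte first
    picked = raw[::2][:len(path)]    # the bytes the C++ loop copies
    return picked.decode("latin-1")  # map each byte to one character for comparison
```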

The following code runs the model prediction:

PaddleX::ClsResult result;
std::string key = "";
std::string image_list;
int gpu_id = 0;
bool use_trt = 0;
bool use_gpu = 1;
PaddleX::Model model;
model.Init(model_dir, use_gpu, use_trt, gpu_id, false);
model.predict(image, &result);

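These three code segments are the same for classification, detection, semantic segmentation, and instance segmentation, apart from the result type declared before model.predict (for example, PaddleX::ClsResult here and PaddleX::SegResult for semantic segmentation).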

The following code builds the classification return value; this is the part that differs between the four models:

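Classi ret;
// Static storage keeps the category string valid after the function returns;
// the next call overwrites it
static std::string cate_buf;
cate_buf = result.category;
ret.cate = const_cast<char*>(cate_buf.c_str());
ret.cate_id = result.category_id;
ret.score = result.score;
return ret;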

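The category string is returned through the ret.cate pointer, so the memory it points to must remain valid after the function returns (a pointer to a local variable would dangle).

cate_id and score are plain int and float values, so they are assigned directly, and ret is returned.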
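On the Python side, a `char*` field maps to ctypes `c_char_p`, which is read back as bytes, so the category needs decoding. The sketch below assumes UTF-8 label names; `classi_to_dict` is a hypothetical helper, and the structure is constructed by hand in the check only so that the example runs without the DLL.

```python
from ctypes import Structure, c_char_p, c_float, c_int


class ClassiResult(Structure):
    # Field order and types mirror struct classi: char*, int, float
    _fields_ = [
        ("cate", c_char_p),
        ("cate_id", c_int),
        ("score", c_float),
    ]


def classi_to_dict(res):
    """Copy the returned fields into plain Python values."""
    cate = res.cate.decode("utf-8") if res.cate is not None else None
    return {"category": cate, "category_id": res.cate_id, "score": res.score}
```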

2. Detection C++ prediction code

The image matrix restoration, model path restoration, and prediction code are the same as for classification, except that the result type is PaddleX::DetResult. The code below returns the detection result, which is more involved than classification: the category, category ID, score, and bbox xmin, ymin, w, and h of every box are placed in separate Python lists, and the structure ret holding all of them is returned.

DETECT ret;
Py_ssize_t n = static_cast<Py_ssize_t>(result.boxes.size());
ret.cate = PyList_New(n);
ret.cate_id = PyList_New(n);
ret.score = PyList_New(n);
ret.xmin = PyList_New(n);
ret.ymin = PyList_New(n);
ret.w = PyList_New(n);
ret.h = PyList_New(n);
for (Py_ssize_t i = 0; i < n; i++) {
    const auto& box = result.boxes[i];
    // PyList_SET_ITEM steals the new reference returned by Py_BuildValue
    // (PyList_Append would not, leaking one reference per item)
    PyList_SET_ITEM(ret.cate, i, Py_BuildValue("s", box.category.c_str()));
    PyList_SET_ITEM(ret.cate_id, i, Py_BuildValue("i", box.category_id));
    PyList_SET_ITEM(ret.score, i, Py_BuildValue("f", box.score));
    // coordinate holds [xmin, ymin, w, h]
    PyList_SET_ITEM(ret.xmin, i, Py_BuildValue("f", box.coordinate[0]));
    PyList_SET_ITEM(ret.ymin, i, Py_BuildValue("f", box.coordinate[1]));
    PyList_SET_ITEM(ret.w, i, Py_BuildValue("f", box.coordinate[2]));
    PyList_SET_ITEM(ret.h, i, Py_BuildValue("f", box.coordinate[3]));
}
return ret;
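Once the DETECT structure reaches Python, the seven parallel lists can be zipped back into one record per detected object. The sketch below redeclares the DetResult layout from the Python section so that it runs on its own, keeps the bbox in the [xmin, ymin, w, h] form the C++ code sends, and adds a hypothetical score threshold; `det_to_boxes` is an illustrative name.

```python
from ctypes import Structure, py_object


class DetResult(Structure):
    _fields_ = [
        ("cate", py_object),
        ("cate_id", py_object),
        ("score", py_object),
        ("xmin", py_object),
        ("ymin", py_object),
        ("w", py_object),
        ("h", py_object),
    ]


def det_to_boxes(res, threshold=0.0):
    """Turn the parallel lists of a DetResult into one dict per box."""
    boxes = []
    for cate, cate_id, score, x, y, w, h in zip(
            res.cate, res.cate_id, res.score, res.xmin, res.ymin, res.w, res.h):
        if score >= threshold:  # hypothetical filtering step
            boxes.append({"category": cate, "category_id": cate_id,
                          "score": score, "bbox": (x, y, w, h)})
    return boxes
```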

3. Semantic segmentation C++ prediction code

The image matrix restoration and model path restoration are the same as for classification, and the prediction code differs only in the result type, PaddleX::SegResult. The code below copies label_map and score_map from the prediction result into Python lists in a loop and also returns label_size and score_size.

PaddleX::SegResult result;
std::string key = "";
std::string image_list;
int gpu_id = 0;
bool use_trt = 0;
bool use_gpu = 1;
PaddleX::Model model;
model.Init(model_dir, use_gpu, use_trt, gpu_id, false);
model.predict(image, &result);

SemSeg ret;
ret.label_size = static_cast<int>(result.label_map.data.size());
ret.score_size = static_cast<int>(result.score_map.data.size());
ret.label = PyList_New(ret.label_size);
for (int i = 0; i < ret.label_size; i++) {
    // Cast explicitly: "i" expects a C int, and label_map elements may be wider
    PyList_SET_ITEM(ret.label, i, Py_BuildValue("i", static_cast<int>(result.label_map.data[i])));
}
ret.score = PyList_New(ret.score_size);
for (int i = 0; i < ret.score_size; i++) {
    // Scores are sent as integers scaled by 1,000,000 (std::round needs <cmath>)
    int a = static_cast<int>(std::round(result.score_map.data[i] * 1000000));
    PyList_SET_ITEM(ret.score, i, Py_BuildValue("i", a));
}
return ret;
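In Python the label list arrives flat. Assuming the label map has the same height and width as the input image and is stored row-major (like the image buffer), it can be cut back into rows, and the integer scores can be divided by 1,000,000 to undo the scaling applied in the C++ loop. The class is redeclared so that the sketch runs standalone; `label_rows` and `score_values` are hypothetical names.

```python
from ctypes import Structure, c_int, py_object


class SemSegResult(Structure):
    _fields_ = [
        ("label_size", c_int),
        ("score_size", c_int),
        ("label", py_object),
        ("score", py_object),
    ]


def label_rows(res, height, width):
    """Split the flat label list into height rows of width labels."""
    if res.label_size != height * width or len(res.label) != res.label_size:
        raise ValueError("label_size does not match height * width")
    return [res.label[r * width:(r + 1) * width] for r in range(height)]


def score_values(res):
    """Undo the round(score * 1000000) scaling done on the C++ side."""
    return [s / 1000000 for s in res.score]
```

With NumPy available, `np.asarray(res.label).reshape(height, width)` gives the same rows as a 2-D array.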

4. Instance segmentation C++ prediction code

The image matrix restoration, model path restoration, and prediction code are again the same as for classification. The code below returns the instance segmentation result: for each box in result.boxes, the category, category ID, score, and bbox xmin, ymin, w, and h are written into separate Python lists; the mask values of all boxes are appended to InsSegMask, the length of each mask goes into mask_len, and the number of boxes is stored in boxes_num.

InsSeg ret;
Py_ssize_t n = static_cast<Py_ssize_t>(result.boxes.size());
ret.cate = PyList_New(n);
ret.cate_id = PyList_New(n);
ret.score = PyList_New(n);
ret.xmin = PyList_New(n);
ret.ymin = PyList_New(n);
ret.w = PyList_New(n);
ret.h = PyList_New(n);
ret.mask_len = PyList_New(n);
ret.InsSegMask = PyList_New(0);  // masks of all boxes, concatenated
for (Py_ssize_t i = 0; i < n; i++) {
    const auto& box = result.boxes[i];
    // PyList_SET_ITEM steals the reference returned by Py_BuildValue
    PyList_SET_ITEM(ret.cate, i, Py_BuildValue("s", box.category.c_str()));
    PyList_SET_ITEM(ret.cate_id, i, Py_BuildValue("i", box.category_id));
    PyList_SET_ITEM(ret.score, i, Py_BuildValue("f", box.score));
    PyList_SET_ITEM(ret.xmin, i, Py_BuildValue("f", box.coordinate[0]));
    PyList_SET_ITEM(ret.ymin, i, Py_BuildValue("f", box.coordinate[1]));
    PyList_SET_ITEM(ret.w, i, Py_BuildValue("f", box.coordinate[2]));
    PyList_SET_ITEM(ret.h, i, Py_BuildValue("f", box.coordinate[3]));
    PyList_SET_ITEM(ret.mask_len, i, Py_BuildValue("i", static_cast<int>(box.mask.data.size())));
    for (size_t j = 0; j < box.mask.data.size(); j++) {
        PyObject* v = Py_BuildValue("i", static_cast<int>(box.mask.data[j]));
        PyList_Append(ret.InsSegMask, v);
        Py_DECREF(v);  // PyList_Append does not steal the reference
    }
}
ret.boxes_num = static_cast<int>(n);
return ret;
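Because all masks are concatenated into InsSegMask, Python needs mask_len to cut them apart again. The sketch below does that and checks the lengths against boxes_num. Reshaping each flat mask into 2-D would also need its height and width, which the structure does not carry, so that step is left out. The class is redeclared so the example runs standalone; `split_masks` is a hypothetical helper.

```python
from ctypes import Structure, c_int, py_object


class InsSegResult(Structure):
    _fields_ = [
        ("cate", py_object),
        ("cate_id", py_object),
        ("score", py_object),
        ("xmin", py_object),
        ("ymin", py_object),
        ("w", py_object),
        ("h", py_object),
        ("InsSegMask", py_object),
        ("mask_len", py_object),
        ("boxes_num", c_int),
    ]


def split_masks(res):
    """Cut the concatenated InsSegMask list into one flat list per instance."""
    flat, lengths = res.InsSegMask, res.mask_len
    if len(lengths) != res.boxes_num or sum(lengths) != len(flat):
        raise ValueError("mask_len is inconsistent with InsSegMask or boxes_num")
    masks, start = [], 0
    for n in lengths:
        masks.append(flat[start:start + n])
        start += n
    return masks
```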

3, Calling the DLL from Python

1. Define a result class for each model in Python (ctypes Structure subclasses whose fields mirror the C structs)

# Field order and types must match the C structs in paddlex.h
from ctypes import Structure, c_char_p, c_float, c_int, py_object


class InsSegResult(Structure):
    _fields_ = [
        ("cate", py_object),
        ("cate_id", py_object),
        ("score", py_object),
        ("xmin", py_object),
        ("ymin", py_object),
        ("w", py_object),
        ("h", py_object),
        ("InsSegMask", py_object),
        ("mask_len", py_object),
        ("boxes_num", c_int),
    ]


class ClassiResult(Structure):
    _fields_ = [
        ("cate", c_char_p),  # char* in the C struct, so c_char_p (read back as bytes)
        ("cate_id", c_int),
        ("score", c_float),
    ]


class SemSegResult(Structure):
    _fields_ = [
        ("label_size", c_int),
        ("score_size", c_int),
        ("label", py_object),
        ("score", py_object),
    ]


class DetResult(Structure):
    _fields_ = [
        ("cate", py_object),
        ("cate_id", py_object),
        ("score", py_object),
        ("xmin", py_object),
        ("ymin", py_object),
        ("w", py_object),
        ("h", py_object),
    ]
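The structures only describe the return values; the exported functions also need their argument and return types declared before they are called. The sketch below is one way to do that under stated assumptions: the DLL is loaded with `ctypes.PyDLL` rather than `CDLL`, because `CDLL` releases the GIL during the call while the C++ code uses the Python C API (`PyList_New`, `Py_BuildValue`); every exported prediction function is assumed to take the same five parameters as `ClassiImage`; and the path parameter is declared as `c_wchar_p` to match the every-second-byte decoding on the C++ side. The DLL file name and the helper names are hypothetical.

```python
import ctypes
from ctypes import POINTER, c_int, c_ubyte, c_wchar_p

# Assumed shared signature: height, width, frame_data, model_dir_p, model_dir_len
IMAGE_ARGTYPES = [c_int, c_int, POINTER(c_ubyte), c_wchar_p, c_int]


def load_paddlex_dll(path):
    """Load the DLL so that the GIL stays held during calls (PyDLL, not CDLL)."""
    return ctypes.PyDLL(path)


def bind(lib, name, restype):
    """Declare argtypes and restype for one exported prediction function."""
    fn = getattr(lib, name)
    fn.argtypes = IMAGE_ARGTYPES
    fn.restype = restype
    return fn
```

For example, `classi = bind(load_paddlex_dll("paddlex_infer.dll"), "ClassiImage", ClassiResult)` followed by `res = classi(height, width, frame, model_dir, len(model_dir))`, where `frame` is a `(c_ubyte * n)` array and `model_dir` is a plain Python str, which ctypes converts for the `c_wchar_p` parameter.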

2. Result display

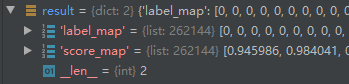

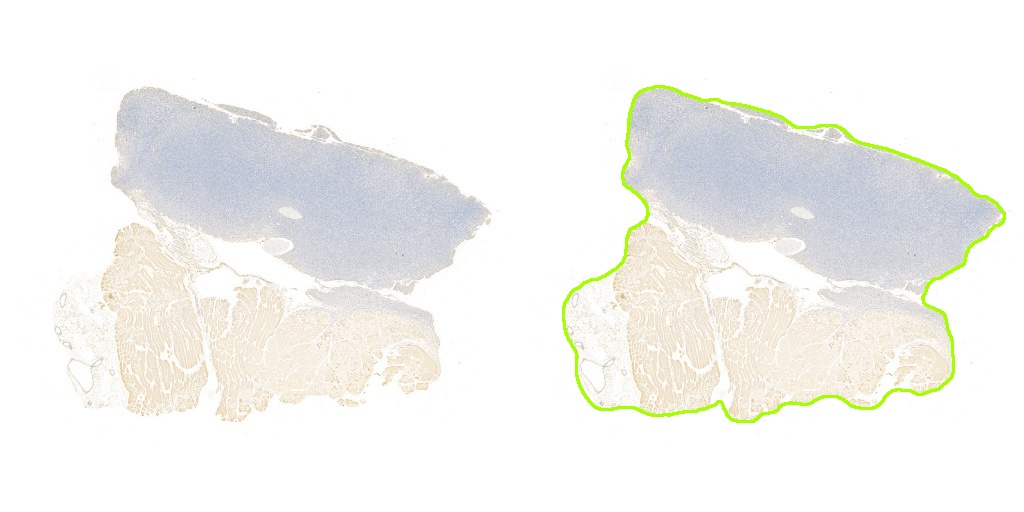

The figures show inference results from a semantic segmentation model, obtained by calling the DLL from Python. The model is UNet, and Python successfully reads the result returned by the DLL.

Here are some results drawn from the UNet predictions.

Summary


This post showed how to pack the classification, detection, semantic segmentation, and instance segmentation results of a PaddleX C++ deployment into C structures whose complex fields are Python objects, and how to declare matching ctypes structures so that Python can call the DLL and read the results it returns.