do_fault handles page faults on file mappings. When a VMA maps a file, a fault on an address in it that has no PTE yet is treated as a file page fault. handle_pte_fault() dispatches it:

```c
static vm_fault_t handle_pte_fault(struct vm_fault *vmf)
{
	... ...
	if (!vmf->pte) {
		if (vma_is_anonymous(vmf->vma))
			return do_anonymous_page(vmf);
		else
			return do_fault(vmf);
	}
	... ...
}
```

When vmf->pte is NULL, no PTE exists yet for the faulting address, meaning it has never been mapped. If the VMA is a file mapping, do_fault() is called to handle the fault.

File mapping criteria

The vma_is_anonymous() function decides whether a VMA is an anonymous mapping or a file mapping. It returns true for an anonymous mapping and false for a file mapping:

```c
static inline bool vma_is_anonymous(struct vm_area_struct *vma)
{
	return !vma->vm_ops;
}
```

If vma->vm_ops is NULL, the VMA is an anonymous mapping; otherwise it is a file mapping.
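Below is a minimal user-space sketch of this rule. It also covers the `!vmf->pte` branch of handle_pte_fault shown earlier. The two structs are simplified, hypothetical stand-ins that carry only the field the check needs; they are not the kernel definitions. handler_for_missing_pte is a made-up helper that only names the function the kernel would call.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, heavily simplified stand-ins for the kernel structures. */
struct vm_operations_struct { int (*fault)(void); };
struct vm_area_struct { const struct vm_operations_struct *vm_ops; };

/* A dummy handler so a "file" VMA has non-NULL vm_ops. */
static int dummy_fault(void) { return 0; }
static const struct vm_operations_struct file_ops = { .fault = dummy_fault };

/* Same test as the kernel helper: no vm_ops means anonymous. */
static int vma_is_anonymous(const struct vm_area_struct *vma)
{
    return vma->vm_ops == NULL;
}

/* Hypothetical helper: which handler the "!vmf->pte" branch would call. */
static const char *handler_for_missing_pte(const struct vm_area_struct *vma)
{
    return vma_is_anonymous(vma) ? "do_anonymous_page" : "do_fault";
}
```

A VMA created with `{ .vm_ops = NULL }` is sent to do_anonymous_page, and one created with `{ .vm_ops = &file_ops }` is sent to do_fault.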

do_fault

The key functions involved in do_fault processing:

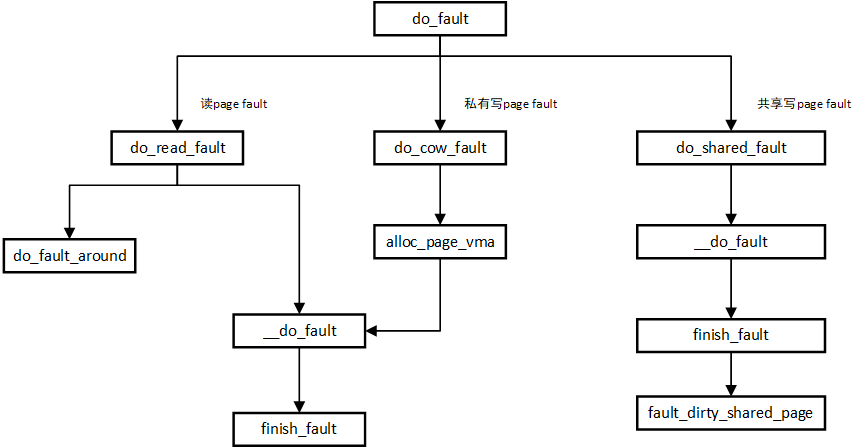

- do_fault distinguishes three cases: a read fault, a write fault on a private mapping, and a write fault on a shared mapping. These are handled by do_read_fault, do_cow_fault and do_shared_fault respectively.

- All three rely on the core helpers __do_fault and finish_fault.

The source code of do_fault is as follows:

```c
static vm_fault_t do_fault(struct vm_fault *vmf)
{
	struct vm_area_struct *vma = vmf->vma;
	struct mm_struct *vm_mm = vma->vm_mm;
	vm_fault_t ret;

	/*
	 * The VMA was not fully populated on mmap() or missing VM_DONTEXPAND
	 */
	if (!vma->vm_ops->fault) {
		/*
		 * If we find a migration pmd entry or a none pmd entry, which
		 * should never happen, return SIGBUS
		 */
		if (unlikely(!pmd_present(*vmf->pmd)))
			ret = VM_FAULT_SIGBUS;
		else {
			vmf->pte = pte_offset_map_lock(vmf->vma->vm_mm,
						       vmf->pmd,
						       vmf->address,
						       &vmf->ptl);
			/*
			 * Make sure this is not a temporary clearing of pte
			 * by holding ptl and checking again. A R/M/W update
			 * of pte involves: take ptl, clearing the pte so that
			 * we don't have concurrent modification by hardware
			 * followed by an update.
			 */
			if (unlikely(pte_none(*vmf->pte)))
				ret = VM_FAULT_SIGBUS;
			else
				ret = VM_FAULT_NOPAGE;

			pte_unmap_unlock(vmf->pte, vmf->ptl);
		}
	} else if (!(vmf->flags & FAULT_FLAG_WRITE))
		ret = do_read_fault(vmf);
	else if (!(vma->vm_flags & VM_SHARED))
		ret = do_cow_fault(vmf);
	else
		ret = do_shared_fault(vmf);

	/* preallocated pagetable is unused: free it */
	if (vmf->prealloc_pte) {
		pte_free(vm_mm, vmf->prealloc_pte);
		vmf->prealloc_pte = NULL;
	}
	return ret;
}
```

- First, check whether vma->vm_ops->fault, the file's page fault handler, is set. If it is not defined, the fault cannot be serviced from the file.
  - If the PMD entry is not present, VM_FAULT_SIGBUS is returned.
  - Otherwise pte_offset_map_lock() maps and locks the PTE and re-checks it while holding the page table lock. This guards against another CPU, or the hardware, modifying the PTE at the same moment. An empty PTE yields VM_FAULT_SIGBUS and a populated one yields VM_FAULT_NOPAGE.

- !(vmf->flags & FAULT_FLAG_WRITE): a read fault is handled by do_read_fault.

- A write fault on a private mapping (VM_SHARED not set) is handled by do_cow_fault.

- A write fault on a shared mapping is handled by do_shared_fault.

- If the preallocated page table vmf->prealloc_pte was not used, it is freed.
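The branch order in do_fault can be summarised as a small decision function. This is a user-space model under stated assumptions. fault_inputs, classify_do_fault and the PATH_* labels are hypothetical names, and each boolean stands for the kernel test noted in its comment.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical labels for the outcomes of do_fault(); not kernel identifiers. */
enum do_fault_path {
    PATH_SIGBUS,       /* no ->fault and the pmd or pte is missing */
    PATH_NOPAGE,       /* no ->fault, but the pte is populated */
    PATH_READ_FAULT,   /* do_read_fault() */
    PATH_COW_FAULT,    /* do_cow_fault() */
    PATH_SHARED_FAULT, /* do_shared_fault() */
};

/* Each field models one test made by do_fault(). */
struct fault_inputs {
    bool has_fault_handler; /* vma->vm_ops->fault != NULL */
    bool pmd_present;       /* pmd_present(*vmf->pmd) */
    bool pte_none;          /* pte_none(*vmf->pte) with the ptl held */
    bool is_write;          /* vmf->flags & FAULT_FLAG_WRITE */
    bool is_shared;         /* vma->vm_flags & VM_SHARED */
};

/* Same branch order as the listing above. */
static enum do_fault_path classify_do_fault(const struct fault_inputs *in)
{
    if (!in->has_fault_handler) {
        if (!in->pmd_present)
            return PATH_SIGBUS;
        return in->pte_none ? PATH_SIGBUS : PATH_NOPAGE;
    }
    if (!in->is_write)
        return PATH_READ_FAULT;
    if (!in->is_shared)
        return PATH_COW_FAULT;
    return PATH_SHARED_FAULT;
}
```

The write and shared flags are only looked at once a ->fault handler exists, which is why a VMA without one never reaches the three handlers.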

do_read_fault

do_read_fault handles a read fault on a file mapping:

```c
static vm_fault_t do_read_fault(struct vm_fault *vmf)
{
	struct vm_area_struct *vma = vmf->vma;
	vm_fault_t ret = 0;

	/*
	 * Let's call ->map_pages() first and use ->fault() as fallback
	 * if page by the offset is not ready to be mapped (cold cache or
	 * something).
	 */
	if (vma->vm_ops->map_pages && fault_around_bytes >> PAGE_SHIFT > 1) {
		ret = do_fault_around(vmf);
		if (ret)
			return ret;
	}

	ret = __do_fault(vmf);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		return ret;

	ret |= finish_fault(vmf);
	unlock_page(vmf->page);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		put_page(vmf->page);
	return ret;
}
```

- Fault-around is tried first when two conditions hold: vma->vm_ops->map_pages is set, and fault_around_bytes covers more than one page.
  - do_fault_around maps pages around the faulting address that are already in the page cache, which reduces the number of later page faults.
  - With the default 64 KiB fault_around_bytes and 4 KiB pages, that is up to 16 pages.
  - If this already resolves the fault, its result is returned.

- Otherwise __do_fault reads just the one page of the file that corresponds to the faulting address. This happens when map_pages is not provided, or when fault-around did not map the faulting page (for example because the page cache is cold).

- finish_fault installs the page in the PTE of the faulting address.

- unlock_page: __do_fault returns the page locked, so the lock is released here. If finish_fault failed, put_page also drops the page reference that __do_fault obtained.
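The lock and reference rules above are easy to get wrong, so here is a toy model of do_read_fault's bookkeeping. It assumes, as described, that __do_fault returns the page locked with one extra reference. It also assumes that a successful finish_fault hands that reference over to the PTE. model_page, read_fault_script and model_do_read_fault are hypothetical. The VM_FAULT_* values are illustrative rather than taken from a specific kernel version.

```c
#include <assert.h>
#include <stdbool.h>

/* Illustrative bit values (assumption; check include/linux/mm_types.h). */
#define VM_FAULT_OOM     0x0001u
#define VM_FAULT_SIGBUS  0x0002u
#define VM_FAULT_NOPAGE  0x0100u
#define VM_FAULT_RETRY   0x0400u
/* Reduced error mask for this model; the kernel's VM_FAULT_ERROR has more bits. */
#define VM_FAULT_ERROR   (VM_FAULT_OOM | VM_FAULT_SIGBUS)
#define STOP_MASK        (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)

/* Hypothetical model of a page-cache page. */
struct model_page {
    int refcount;
    bool locked;
    bool mapped;   /* a PTE points at the page */
};

/* Scripted results of the helpers that do_read_fault() calls. */
struct read_fault_script {
    bool around_resolves;   /* do_fault_around() mapped the faulting PTE */
    unsigned do_fault_ret;  /* result of __do_fault() */
    unsigned finish_ret;    /* result of finish_fault() */
};

static unsigned model_do_read_fault(const struct read_fault_script *s,
                                    struct model_page *pg)
{
    unsigned ret;

    if (s->around_resolves)
        return VM_FAULT_NOPAGE;      /* fault-around already mapped it */

    ret = s->do_fault_ret;
    if (ret & STOP_MASK)
        return ret;                  /* nothing locked or referenced yet */

    /* __do_fault() succeeded: page comes back locked, with a reference. */
    pg->refcount++;
    pg->locked = true;

    ret |= s->finish_ret;
    if (!(s->finish_ret & STOP_MASK))
        pg->mapped = true;           /* finish_fault() gives the ref to the PTE */

    pg->locked = false;              /* unlock_page() */
    if (ret & STOP_MASK)
        pg->refcount--;              /* put_page(): drop the unused reference */
    return ret;
}
```

Starting from a page with one page-cache reference, a successful fault leaves two references (page cache plus PTE) and an unlocked page. A failed finish_fault leaves the count back at one.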

do_cow_fault

do_cow_fault handles a write fault on a private (MAP_PRIVATE) file mapping. Writes to such a mapping must not reach the file or other users of its page cache, so the process needs its own copy. A new physical page is allocated, filled with the file contents, and mapped at the faulting virtual address:

```c
static vm_fault_t do_cow_fault(struct vm_fault *vmf)
{
	struct vm_area_struct *vma = vmf->vma;
	vm_fault_t ret;

	if (unlikely(anon_vma_prepare(vma)))
		return VM_FAULT_OOM;

	vmf->cow_page = alloc_page_vma(GFP_HIGHUSER_MOVABLE, vma, vmf->address);
	if (!vmf->cow_page)
		return VM_FAULT_OOM;

	if (mem_cgroup_charge(vmf->cow_page, vma->vm_mm, GFP_KERNEL)) {
		put_page(vmf->cow_page);
		return VM_FAULT_OOM;
	}
	cgroup_throttle_swaprate(vmf->cow_page, GFP_KERNEL);

	ret = __do_fault(vmf);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		goto uncharge_out;
	if (ret & VM_FAULT_DONE_COW)
		return ret;

	copy_user_highpage(vmf->cow_page, vmf->page, vmf->address, vma);
	__SetPageUptodate(vmf->cow_page);

	ret |= finish_fault(vmf);
	unlock_page(vmf->page);
	put_page(vmf->page);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		goto uncharge_out;
	return ret;
uncharge_out:
	put_page(vmf->cow_page);
	return ret;
}
```

- anon_vma_prepare: makes sure the VMA has an anon_vma. This reverse-mapping structure is needed because the private copy will be an anonymous page.

- vmf->cow_page: alloc_page_vma allocates the page that will hold the private copy, before the file page is read.
  - do_fault is only reached when no PTE exists yet.
  - The classic fork() copy-on-write case is handled by do_wp_page instead. In that case a read-only PTE inherited from the parent is written.
  - Here "copy on write" means copying the file's page-cache page into a private anonymous page at the first write.

- mem_cgroup_charge: charges the new page to the memory cgroup of the faulting mm. If the charge fails, the page is released and VM_FAULT_OOM is returned.

- __do_fault: reads the file contents into the page-cache page vmf->page, not into cow_page. If the ->fault handler returns VM_FAULT_DONE_COW, it has already handled the copy itself and do_cow_fault returns immediately.

- copy_user_highpage: copies vmf->page into vmf->cow_page.

- __SetPageUptodate: marks cow_page as up to date.

- finish_fault: installs cow_page in the PTE.

- unlock_page: unlocks vmf->page.

- put_page(vmf->page): drops the reference on the page-cache page. The address is now mapped to cow_page, so vmf->page is no longer needed here. On any failure, the uncharge_out path releases cow_page as well.
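The visible effect of do_cow_fault can be observed from user space with plain POSIX calls. After a byte is written through a MAP_PRIVATE mapping, the mapping sees the new byte, but the file read back with pread() through the page cache does not. This sketch assumes a Linux system with a writable /tmp. On a typical kernel of this version, the first write to the freshly mapped page is the fault that goes through do_cow_fault. The program only checks the outcome, not which kernel function ran. private_write_demo is a hypothetical helper.

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Hypothetical helper: write one byte through a MAP_PRIVATE file mapping and
 * report what the mapping and the file contain afterwards.
 * Returns 0 on success, -1 on error.
 */
static int private_write_demo(char *in_mapping, char *in_file)
{
    char path[] = "/tmp/cow_demoXXXXXX";
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);                 /* the file disappears once fd is closed */

    if (write(fd, "hello", 5) != 5) {
        close(fd);
        return -1;
    }

    char *p = mmap(NULL, 5, PROT_READ | PROT_WRITE, MAP_PRIVATE, fd, 0);
    if (p == MAP_FAILED) {
        close(fd);
        return -1;
    }

    p[0] = 'J';                   /* write fault: this mapping gets a private copy */
    *in_mapping = p[0];

    /* pread() reads the file's own page-cache page, not the private copy. */
    int rc = pread(fd, in_file, 1, 0) == 1 ? 0 : -1;

    munmap(p, 5);
    close(fd);
    return rc;
}
```

Calling it yields 'J' for the mapping and 'h' for the file: the write stayed in the private copy.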

do_shared_fault

do_shared_fault handles a write fault on a shared (MAP_SHARED) file mapping:

```c
static vm_fault_t do_shared_fault(struct vm_fault *vmf)
{
	struct vm_area_struct *vma = vmf->vma;
	vm_fault_t ret, tmp;

	ret = __do_fault(vmf);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))
		return ret;

	/*
	 * Check if the backing address space wants to know that the page is
	 * about to become writable
	 */
	if (vma->vm_ops->page_mkwrite) {
		unlock_page(vmf->page);
		tmp = do_page_mkwrite(vmf);
		if (unlikely(!tmp ||
				(tmp & (VM_FAULT_ERROR | VM_FAULT_NOPAGE)))) {
			put_page(vmf->page);
			return tmp;
		}
	}

	ret |= finish_fault(vmf);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE |
					VM_FAULT_RETRY))) {
		unlock_page(vmf->page);
		put_page(vmf->page);
		return ret;
	}

	ret |= fault_dirty_shared_page(vmf);
	return ret;
}
```

- __do_fault: reads the file contents into the page-cache page vmf->page.

- If vma->vm_ops->page_mkwrite is defined, the page is unlocked and do_page_mkwrite calls it. This notifies the filesystem that the page is about to become writable. If it reports an error or VM_FAULT_NOPAGE, or returns 0, the page reference is dropped and that value is returned. A return of 0 happens, for example, when the page was truncated in the meantime.

- finish_fault: installs the page in the PTE. On failure the page is unlocked and released.

- Because the mapping is shared, the write lands directly in the file's page-cache page. Other processes that map or read the file see the same page.
  - The page now differs from the copy on disk, so fault_dirty_shared_page marks it dirty and unlocks it.
  - It then calls balance_dirty_pages_ratelimited. Depending on how many dirty pages the system has, this may throttle the writer and start writeback, so the data eventually reaches the disk.
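Here is the counterpart for do_shared_fault. Writing through a MAP_SHARED mapping modifies the page-cache page itself, so pread() on the same file sees the change at once, before any writeback. This sketch assumes Linux, whose page cache keeps read() and shared mappings coherent, and a writable /tmp. shared_write_demo is a hypothetical helper.

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Hypothetical helper: write one byte through a MAP_SHARED file mapping and
 * read the file back. Returns 0 on success, -1 on error.
 */
static int shared_write_demo(char *in_file)
{
    char path[] = "/tmp/shared_demoXXXXXX";
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);                 /* the file disappears once fd is closed */

    if (write(fd, "hello", 5) != 5) {
        close(fd);
        return -1;
    }

    char *p = mmap(NULL, 5, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    if (p == MAP_FAILED) {
        close(fd);
        return -1;
    }

    p[0] = 'J';                   /* write fault on a shared page: page cache is modified */

    /* No msync() is needed for pread() to see it: both use the same page.
     * msync() or normal writeback is what gets the dirty page to disk. */
    int rc = pread(fd, in_file, 1, 0) == 1 ? 0 : -1;

    munmap(p, 5);
    close(fd);
    return rc;
}
```

Here the file read returns 'J', in contrast to the MAP_PRIVATE case.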

__do_fault

__do_fault calls the mapping's own vma->vm_ops->fault handler to load one page of the file into physical memory:

```c
/*
 * The mmap_lock must have been held on entry, and may have been
 * released depending on flags and vma->vm_ops->fault() return value.
 * See filemap_fault() and __lock_page_retry().
 */
static vm_fault_t __do_fault(struct vm_fault *vmf)
{
	struct vm_area_struct *vma = vmf->vma;
	vm_fault_t ret;

	/*
	 * Preallocate pte before we take page_lock because this might lead to
	 * deadlocks for memcg reclaim which waits for pages under writeback:
	 *				lock_page(A)
	 *				SetPageWriteback(A)
	 *				unlock_page(A)
	 * lock_page(B)
	 *				lock_page(B)
	 * pte_alloc_pne
	 *   shrink_page_list
	 *     wait_on_page_writeback(A)
	 *				SetPageWriteback(B)
	 *				unlock_page(B)
	 *				# flush A, B to clear the writeback
	 */
	if (pmd_none(*vmf->pmd) && !vmf->prealloc_pte) {
		vmf->prealloc_pte = pte_alloc_one(vmf->vma->vm_mm);
		if (!vmf->prealloc_pte)
			return VM_FAULT_OOM;
		smp_wmb(); /* See comment in __pte_alloc() */
	}

	ret = vma->vm_ops->fault(vmf);
	if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY |
			    VM_FAULT_DONE_COW)))
		return ret;

	if (unlikely(PageHWPoison(vmf->page))) {
		if (ret & VM_FAULT_LOCKED)
			unlock_page(vmf->page);
		put_page(vmf->page);
		vmf->page = NULL;
		return VM_FAULT_HWPOISON;
	}

	if (unlikely(!(ret & VM_FAULT_LOCKED)))
		lock_page(vmf->page);
	else
		VM_BUG_ON_PAGE(!PageLocked(vmf->page), vmf->page);

	return ret;
}
```

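- If the PMD entry is empty (no PTE table exists yet), a page table is preallocated into vmf->prealloc_pte before the page lock is taken. Allocating it later, while a page lock is held, could enter memcg reclaim and deadlock, as the comment in the code explains.

- vma->vm_ops->fault reads one page of the file into memory and returns it in vmf->page.

- PageHWPoison: if the page has a hardware memory error, it is unlocked (if it was locked) and released, and VM_FAULT_HWPOISON is returned.

- Finally, __do_fault guarantees that the page is locked on return. If ->fault did not report VM_FAULT_LOCKED, lock_page is called.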
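The post-processing of ->fault's return value in __do_fault can be modelled in a few lines. model_page and model_after_fault are hypothetical, and the VM_FAULT_* values are illustrative. The early-return mask is reduced to the bits used here; the kernel's VM_FAULT_ERROR contains more.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Illustrative bit values (assumption; not copied from a specific kernel). */
#define VM_FAULT_SIGBUS   0x0002u
#define VM_FAULT_HWPOISON 0x0010u
#define VM_FAULT_NOPAGE   0x0100u
#define VM_FAULT_LOCKED   0x0200u
#define VM_FAULT_RETRY    0x0400u
#define VM_FAULT_DONE_COW 0x1000u

/* Hypothetical page model. */
struct model_page { bool locked; bool hwpoison; int refcount; };

/*
 * Models what __do_fault() does with ret from ->fault(): return early on
 * error/no-page/retry/done-cow, drop a poisoned page, and make sure the page
 * handed back to the caller is locked.
 */
static unsigned model_after_fault(unsigned ret, struct model_page **pagep)
{
    struct model_page *page = *pagep;

    if (ret & (VM_FAULT_SIGBUS | VM_FAULT_NOPAGE | VM_FAULT_RETRY |
               VM_FAULT_DONE_COW))
        return ret;

    if (page->hwpoison) {
        if (ret & VM_FAULT_LOCKED)
            page->locked = false;     /* unlock_page() */
        page->refcount--;             /* put_page() */
        *pagep = NULL;                /* vmf->page = NULL */
        return VM_FAULT_HWPOISON;
    }

    if (!(ret & VM_FAULT_LOCKED))
        page->locked = true;          /* lock_page() */
    else
        assert(page->locked);         /* kernel: VM_BUG_ON_PAGE(!PageLocked(...)) */
    return ret;
}
```

Whatever ->fault returned, the caller of a successful __do_fault can rely on a locked page, which is why do_read_fault, do_cow_fault and do_shared_fault all unlock it later.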

finish_fault

finish_fault updates the PTE, that is, it maps the faulting virtual address to the prepared physical page:

```c
/**
 * finish_fault - finish page fault once we have prepared the page to fault
 *
 * @vmf: structure describing the fault
 *
 * This function handles all that is needed to finish a page fault once the
 * page to fault in is prepared. It handles locking of PTEs, inserts PTE for
 * given page, adds reverse page mapping, handles memcg charges and LRU
 * addition.
 *
 * The function expects the page to be locked and on success it consumes a
 * reference of a page being mapped (for the PTE which maps it).
 *
 * Return: %0 on success, %VM_FAULT_ code in case of error.
 */
vm_fault_t finish_fault(struct vm_fault *vmf)
{
	struct page *page;
	vm_fault_t ret = 0;

	/* Did we COW the page? */
	if ((vmf->flags & FAULT_FLAG_WRITE) &&
	    !(vmf->vma->vm_flags & VM_SHARED))
		page = vmf->cow_page;
	else
		page = vmf->page;

	/*
	 * check even for read faults because we might have lost our CoWed
	 * page
	 */
	if (!(vmf->vma->vm_flags & VM_SHARED))
		ret = check_stable_address_space(vmf->vma->vm_mm);
	if (!ret)
		ret = alloc_set_pte(vmf, page);
	if (vmf->pte)
		pte_unmap_unlock(vmf->pte, vmf->ptl);
	return ret;
}
```

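- For a write fault on a private mapping, the page to map is vmf->cow_page; otherwise it is vmf->page.

- For private mappings, check_stable_address_space first verifies that the address space can still be trusted. It returns VM_FAULT_SIGBUS if the mm has been marked unstable, for example by the OOM reaper.

- alloc_set_pte: installs the PTE for the page, using the preallocated page table if one is needed.

- pte_unmap_unlock: unmaps the PTE and releases the page table lock.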
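Here is a sketch of finish_fault's decisions, leaving out the actual page table update. fault_ctx and model_finish_fault are hypothetical. mm_unstable stands for the condition under which check_stable_address_space reports an error, and assigning *pte stands in for alloc_set_pte. The VM_FAULT_SIGBUS value is illustrative.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define VM_FAULT_SIGBUS 0x0002u        /* illustrative value */

struct page { int id; };               /* hypothetical stand-in */

/* Hypothetical bundle of the inputs finish_fault() looks at. */
struct fault_ctx {
    bool is_write;          /* vmf->flags & FAULT_FLAG_WRITE */
    bool vma_shared;        /* vma->vm_flags & VM_SHARED */
    bool mm_unstable;       /* check_stable_address_space() would fail */
    struct page *page;      /* vmf->page: the page-cache page */
    struct page *cow_page;  /* vmf->cow_page: the private copy */
};

static unsigned model_finish_fault(const struct fault_ctx *f, struct page **pte)
{
    /* Private write faults map the CoW copy, everything else the file page. */
    struct page *page = (f->is_write && !f->vma_shared) ? f->cow_page : f->page;
    unsigned ret = 0;

    if (!f->vma_shared && f->mm_unstable)
        ret = VM_FAULT_SIGBUS;         /* only private mappings are checked */
    if (!ret)
        *pte = page;                   /* stands in for alloc_set_pte() */
    return ret;
}
```

A shared mapping is never subject to the stability check, so even with mm_unstable set it still installs vmf->page.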

do_fault_around

For read faults, if the file mapping defines vm_ops->map_pages, the kernel can also map other pages that are already in the page cache around the faulting address. Those pages are not read in advance. do_fault_around() does this in the hope that the pages will be needed soon, which lowers the number of page faults to handle later.

fault_around_bytes

fault_around_bytes sets the maximum amount of memory that one call to do_fault_around may map. The default is 65536 bytes (64 KiB):

```c
static unsigned long fault_around_bytes __read_mostly =
	rounddown_pow_of_two(65536);
```
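The effect of this setting on do_read_fault can be checked with a little arithmetic. The sketch assumes 4 KiB pages (PAGE_SHIFT of 12). rounddown_pow_of_two here is a user-space reimplementation of the kernel helper for positive inputs. fault_around_pages and fault_around_enabled are hypothetical helpers that mirror the `fault_around_bytes >> PAGE_SHIFT > 1` test in do_read_fault.

```c
#include <assert.h>
#include <stdbool.h>

#define PAGE_SHIFT 12UL                /* assumes 4 KiB pages */

/* User-space equivalent of rounddown_pow_of_two() for n > 0. */
static unsigned long rounddown_pow_of_two(unsigned long n)
{
    unsigned long p = 1;
    while (p <= n / 2)
        p <<= 1;
    return p;
}

/* Hypothetical: number of pages fault-around may cover. */
static unsigned long fault_around_pages(unsigned long bytes)
{
    return bytes >> PAGE_SHIFT;
}

/* Hypothetical: do_read_fault() tries do_fault_around() only when this holds
 * (and the VMA also provides ->map_pages). */
static bool fault_around_enabled(unsigned long bytes)
{
    return fault_around_pages(bytes) > 1;
}
```

The default of 65536 bytes gives 16 pages, so fault-around is active. A value of 4096 (one page) would make the test fail and disable it.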

do_fault_around source code analysis

```c
static vm_fault_t do_fault_around(struct vm_fault *vmf)
{
	unsigned long address = vmf->address, nr_pages, mask;
	pgoff_t start_pgoff = vmf->pgoff;
	pgoff_t end_pgoff;
	int off;
	vm_fault_t ret = 0;

	nr_pages = READ_ONCE(fault_around_bytes) >> PAGE_SHIFT;
	mask = ~(nr_pages * PAGE_SIZE - 1) & PAGE_MASK;

	vmf->address = max(address & mask, vmf->vma->vm_start);
	off = ((address - vmf->address) >> PAGE_SHIFT) & (PTRS_PER_PTE - 1);
	start_pgoff -= off;

	/*
	 * end_pgoff is either the end of the page table, the end of
	 * the vma or nr_pages from start_pgoff, depending what is nearest.
	 */
	end_pgoff = start_pgoff -
		((vmf->address >> PAGE_SHIFT) & (PTRS_PER_PTE - 1)) +
		PTRS_PER_PTE - 1;
	end_pgoff = min3(end_pgoff, vma_pages(vmf->vma) + vmf->vma->vm_pgoff - 1,
			start_pgoff + nr_pages - 1);

	if (pmd_none(*vmf->pmd)) {
		vmf->prealloc_pte = pte_alloc_one(vmf->vma->vm_mm);
		if (!vmf->prealloc_pte)
			goto out;
		smp_wmb(); /* See comment in __pte_alloc() */
	}

	vmf->vma->vm_ops->map_pages(vmf, start_pgoff, end_pgoff);

	/* Huge page is mapped? Page fault is solved */
	if (pmd_trans_huge(*vmf->pmd)) {
		ret = VM_FAULT_NOPAGE;
		goto out;
	}

	/* ->map_pages() haven't done anything useful. Cold page cache? */
	if (!vmf->pte)
		goto out;

	/* check if the page fault is solved */
	vmf->pte -= (vmf->address >> PAGE_SHIFT) - (address >> PAGE_SHIFT);
	if (!pte_none(*vmf->pte))
		ret = VM_FAULT_NOPAGE;
	pte_unmap_unlock(vmf->pte, vmf->ptl);
out:
	vmf->address = address;
	vmf->pte = NULL;
	return ret;
}
```

- nr_pages = READ_ONCE(fault_around_bytes) >> PAGE_SHIFT converts fault_around_bytes into a page count. By default this is 16 pages (64 KiB / 4 KiB).

- mask = ~(nr_pages * PAGE_SIZE - 1) & PAGE_MASK builds a mask that aligns an address down to a fault_around_bytes boundary.

- vmf->address = max(address & mask, vmf->vma->vm_start): the window starts at the faulting address aligned down with mask, but never below the start of the VMA, vm_start.

- off: the number of pages between the window start and the faulting address.

- start_pgoff -= off: turns the file offset of the faulting page into the file offset of the window start.

- end_pgoff: first set to the last entry of the current page table, so that the window never crosses into another PTE table.

- min3(): end_pgoff is then limited to the end of the VMA (vma_pages(vma) + vm_pgoff - 1) and to nr_pages pages from start_pgoff, whichever is nearest.

- If the PMD entry is empty, a PTE table is preallocated into vmf->prealloc_pte.

- vm_ops->map_pages then does the actual work, mapping the cached pages from start_pgoff to end_pgoff.

- If the PMD now maps a transparent huge page, map_pages mapped a huge page and the fault is solved, so VM_FAULT_NOPAGE is returned.

- If vmf->pte is still NULL, map_pages did nothing useful (for example, because the page cache is cold). do_read_fault then falls back to __do_fault.

- Otherwise the PTE of the original faulting address is checked. map_pages advances vmf->pte and vmf->address as it maps, so vmf->pte is first moved back to the faulting address. If that PTE is now populated, the fault is solved and VM_FAULT_NOPAGE is returned.

- pte_unmap_unlock: releases the PTE lock. Finally vmf->address is restored and vmf->pte is reset to NULL.
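The window arithmetic can be replayed in user space to see which file pages get mapped. This sketch copies the computation from the listing. It assumes a 64-bit unsigned long, 4 KiB pages and 512 entries per page table (x86-64 values). around_window and fault_around_window are hypothetical names. start_addr is the value vmf->address holds while ->map_pages() runs.

```c
#include <assert.h>

#define PAGE_SHIFT   12UL                    /* assumes 4 KiB pages */
#define PAGE_SIZE    (1UL << PAGE_SHIFT)
#define PAGE_MASK    (~(PAGE_SIZE - 1))
#define PTRS_PER_PTE 512UL                   /* assumes x86-64 */

/* Hypothetical result type: the range handed to ->map_pages(). */
struct around_window {
    unsigned long start_addr;   /* vmf->address during map_pages() */
    unsigned long start_pgoff;
    unsigned long end_pgoff;
};

static unsigned long min3ul(unsigned long a, unsigned long b, unsigned long c)
{
    unsigned long m = a < b ? a : b;
    return m < c ? m : c;
}

/* Same arithmetic as do_fault_around(); unsigned wrap-around is intended. */
static struct around_window fault_around_window(unsigned long address,
        unsigned long pgoff, unsigned long vm_start, unsigned long vm_pgoff,
        unsigned long vma_pages, unsigned long fault_around_bytes)
{
    struct around_window w;
    unsigned long nr_pages = fault_around_bytes >> PAGE_SHIFT;
    unsigned long mask = ~(nr_pages * PAGE_SIZE - 1) & PAGE_MASK;
    unsigned long aligned = address & mask;
    unsigned long off;

    /* Window start: aligned fault address, but not below the VMA start. */
    w.start_addr = aligned > vm_start ? aligned : vm_start;
    off = ((address - w.start_addr) >> PAGE_SHIFT) & (PTRS_PER_PTE - 1);
    w.start_pgoff = pgoff - off;

    /* End of the current page table, then clamp to VMA end and nr_pages. */
    w.end_pgoff = w.start_pgoff
                  - ((w.start_addr >> PAGE_SHIFT) & (PTRS_PER_PTE - 1))
                  + PTRS_PER_PTE - 1;
    w.end_pgoff = min3ul(w.end_pgoff, vma_pages + vm_pgoff - 1,
                         w.start_pgoff + nr_pages - 1);
    return w;
}
```

Take a VMA that starts at a 2 MiB-aligned address, maps 100 file pages from offset 0, and uses the default 64 KiB. A fault on file page 19 then maps pages 16 to 31. A fault on page 98 is cut off by the end of the VMA and maps pages 96 to 99.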