Welcome to focus on Python, data analysis, data mining and fun tools!

When we buy products online, many people like to use credit cards. But credit card fraud often happens around us. Network security is becoming a vital part of our life.

In order to solve this problem, we need to use machine learning algorithm to build an abnormal behavior recognition system. If it is found suspicious, stop the operation.

In this article, I will share an end-to-end model training method, from data acquisition to final model screening. My favorite partners are welcome to pay attention, like and support.

About data

The data used in this article is kaggle data: https://www.kaggle.com/mlg-ulb/creditcardfraud , this data set is the real bank transactions of European cardholders in 2013. For security reasons, the data has been converted to PCA version, with 29 feature columns and 1 class column.

Import the necessary libraries

Here I will import all the necessary libraries. Since the credit card data feature is the converted version of PCA, we do not need to perform feature selection again. Otherwise, it is recommended to use RFE, RFECV, SelectKBest, and VIF score to find features that fit the model.

#Packages related to general operating system & warnings

import os

import warnings

warnings.filterwarnings('ignore')

#Packages related to data importing, manipulation, exploratory data #analysis, data understanding

import numpy as np

import pandas as pd

from pandas import Series, DataFrame

from termcolor import colored as cl # text customization

#Packages related to data visualizaiton

import seaborn as sns

import matplotlib.pyplot as plt

%matplotlib inline

#Setting plot sizes and type of plot

plt.rc("font", size=14)

plt.rcParams['axes.grid'] = True

plt.figure(figsize=(6,3))

plt.gray()

from matplotlib.backends.backend_pdf import PdfPages

from sklearn.model_selection import train_test_split, GridSearchCV

from sklearn import metrics

from sklearn.impute import MissingIndicator, SimpleImputer

from sklearn.preprocessing import PolynomialFeatures, KBinsDiscretizer, FunctionTransformer

from sklearn.preprocessing import StandardScaler, MinMaxScaler, MaxAbsScaler

from sklearn.preprocessing import LabelEncoder, OneHotEncoder, LabelBinarizer, OrdinalEncoder

import statsmodels.formula.api as smf

import statsmodels.tsa as tsa

from sklearn.linear_model import LogisticRegression, LinearRegression, ElasticNet, Lasso, Ridge

from sklearn.neighbors import KNeighborsClassifier, KNeighborsRegressor

from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor, export_graphviz, export

from sklearn.ensemble import BaggingClassifier, BaggingRegressor,RandomForestClassifier,RandomForestRegressor

from sklearn.ensemble import GradientBoostingClassifier,GradientBoostingRegressor, AdaBoostClassifier, AdaBoostRegressor

from sklearn.svm import LinearSVC, LinearSVR, SVC, SVR

from xgboost import XGBClassifier

from sklearn.metrics import f1_score

from sklearn.metrics import accuracy_score

from sklearn.metrics import confusion_matrix

Import dataset

Importing datasets is very simple. You only need to use the pandas module in python to import it, run the following command, and the data set can be downloaded at the end of the text.

data=pd.read_csv("creditcard.csv")

Data processing and understanding

With regard to these data, you may notice that the data set is unbalanced, because the vast majority of normal transactions in the data set, and only a small percentage of transactions are fraudulent.

Let's examine the data distribution.

Total_transactions = len(data)

normal = len(data[data.Class == 0])

fraudulent = len(data[data.Class == 1])

fraud_percentage = round(fraudulent/normal*100, 2)

print(cl('Total number of Trnsactions are {}'.format(Total_transactions), attrs = ['bold']))

print(cl('Number of Normal Transactions are {}'.format(normal), attrs = ['bold']))

print(cl('Number of fraudulent Transactions are {}'.format(fraudulent), attrs = ['bold']))

print(cl('Percentage of fraud Transactions is {}'.format(fraud_percentage), attrs = ['bold']))

We can also use the following code to check for null values.

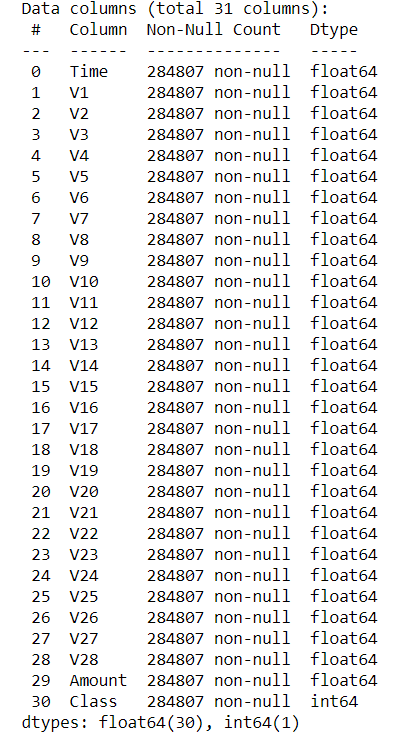

data.info()

According to the count of each column, we have no null value. In addition, you can try to apply the feature selection method to check whether the results are optimized.

I observed that 28 features in the data are the converted version of PCA, but the field "Amount" is original. When checking the minimum and maximum values, I found that there was a great difference and might deviate from our results.

In this case, I sort it out as follows.

sc = StandardScaler() amount = data['Amount'].values data['Amount'] = sc.fit_transform(amount.reshape(-1, 1))

We also have a variable, time, which may be an external determinant. We abandon it in our modeling process.

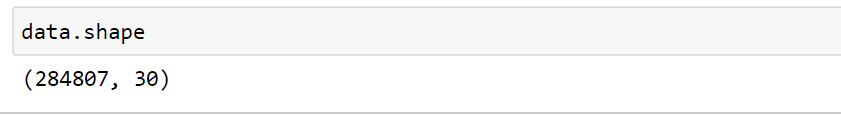

We can also check for any duplicate data. There are 284807 rows in the dataset before any duplicate data is deleted.

duplicate removal

data.drop_duplicates(inplace=True)

Therefore, we have about 9000 duplicate transactions.

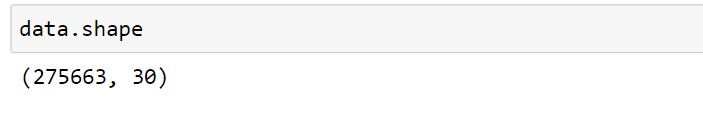

Separation of training and testing

Before splitting training and testing, we need to define dependent and independent variables. The dependent variable is also called X and the independent variable is called y.

X = data.drop('Class', axis = 1).values

y = data['Class'].values

Now let's split the training and test data.

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.25, random_state = 1)

So, we now have two different data sets.

Build model

We will try different machine learning models. Defining a model is much easier. One line of code can define our model. Similarly, one line of code can fit the model on our data. We can also adjust these models by selecting different optimization parameters.

1) Decision tree

DT = DecisionTreeClassifier(max_depth = 4, criterion = 'entropy') DT.fit(X_train, y_train) dt_yhat = DT.predict(X_test)

Let's look at the accuracy of the decision tree model.

print('Accuracy score of the Decision Tree model is {}'.format(accuracy_score(y_test, tree_yhat)))

Accuracy score of the Decision Tree model is 0.999288989494457

View the F1 score of the decision tree model.

print('F1 score of the Decision Tree model is {}'.format(f1_score(y_test, tree_yhat)))

F1 score of the Decision Tree model is 0.776255707762557

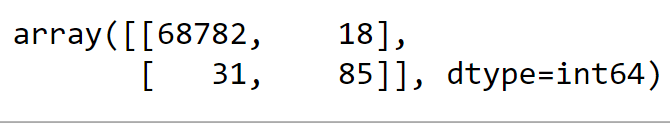

To view the confusion matrix:

confusion_matrix(y_test, tree_yhat, labels = [0, 1])

2) Random forest

rf = RandomForestClassifier(max_depth = 4) rf.fit(X_train, y_train) rf_yhat = rf.predict(X_test)

Let's look at the accuracy of the random forest model.

print('Accuracy score of the Random Forest model is {}'.format(accuracy_score(y_test, rf_yhat)))

Accuracy score of the Random Forest model is 0.9993615415868594

View the F1 score of the random forest model.

print('F1 score of the Random Forest model is {}'.format(f1_score(y_test, rf_yhat)))

F1 score of the Random Forest model is 0.7843137254901961

3)XGBoost

xgb = XGBClassifier(max_depth = 4) xgb.fit(X_train, y_train) xgb_yhat = xgb.predict(X_test)

Let's look at the accuracy of the XGBoost model.

print('Accuracy score of the XGBoost model is {}'.format(accuracy_score(y_test, xgb_yhat)))

Accuracy score of the XGBoost model is 0.9995211561901445

View the F1 score of the XGBoost model.

print('F1 score of the XGBoost model is {}'.format(f1_score(y_test, xgb_yhat)))

F1 score of the XGBoost model is 0.8421052631578947

conclusion

We just got 99.95% credit card fraud detection accuracy. This figure is not surprising because our data is for one category.

According to our F1 score, XGBoost is the winner of our case. The only thing to note here is the data we use for model training. The data feature is a transformed version of PCA.

Technical exchange

Welcome to reprint, collect, gain, praise and support!

At present, a technical exchange group has been opened, with more than 2000 friends. The addition methods are as follows:

The following methods can be used. The best way to add is: source + Interest direction, which is convenient to find like-minded friends

- Method 1: send the following pictures to wechat for long press recognition and reply to group addition;

- Mode 2: directly add a small assistant micro signal: Python 666. Remarks: from CSDN

- Mode three, WeChat search official account: Python learning and data mining, background reply: add group