Building a Hadoop cluster involves the following steps:

- Preparation

- Install ZooKeeper and configure the environment

- Compile, install and start Hadoop

- Install HDFS, whose NameNode and DataNodes manage the cluster's disk resources

- Install and start YARN, on which MapReduce runs, to manage CPU and memory resources

01 Preparations:

1. Deployment environment

- VMware15

- CentOS7

- jdk8

First, start a CentOS 7 virtual machine and point yum at the Huawei Cloud mirror

[root@localhost ~]# cp -a /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.bak
[root@localhost ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo
[root@localhost ~]# yum clean all
[root@localhost ~]# yum makecache
[root@localhost ~]# yum update -y

Then install some commonly used packages

[root@localhost ~]# yum install -y openssh-server vim gcc gcc-c++ glibc-headers bzip2-devel lzo-devel curl wget openssh-clients zlib-devel autoconf automake cmake libtool openssl-devel fuse-devel snappy-devel telnet unzip zip net-tools.x86_64 firewalld systemd

2. Disable the firewall and SELinux on the virtual machine

[root@localhost ~]# firewall-cmd --state
[root@localhost ~]# systemctl stop firewalld.service
[root@localhost ~]# systemctl disable firewalld.service
[root@localhost ~]# systemctl is-enabled firewalld.service

[root@localhost ~]# /usr/sbin/sestatus -v    # view the SELinux status
[root@localhost ~]# vim /etc/selinux/config    # change the state to disabled
SELINUX=disabled
[root@localhost ~]# reboot
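The vim edit above can also be done without an editor, which is handy once there are five machines. The following is only a minimal sketch, assuming GNU sed and the usual `SELINUX=` key in the config file. The function name `disable_selinux_in` is made up for this example, and it takes the file path as an argument so it can be tried on a copy first.

```shell
#!/bin/bash
# Hypothetical helper (not part of the original steps): set SELINUX=disabled
# in the config file given as $1.
disable_selinux_in() {
    # Replace whatever value the SELINUX= line has; SELINUXTYPE= is left alone
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
    # Show the resulting line as a quick check
    grep '^SELINUX=' "$1"
}

# Demonstration on a temporary file, so nothing on the system is modified
demo_conf=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo_conf"
disable_selinux_in "$demo_conf"
```

On the real machine this would be `disable_selinux_in /etc/selinux/config` run as root, followed by the reboot shown above.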

3. Install jdk8 and configure environment variables

Download address http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

[root@localhost ~]# rpm -ivh jdk-8u144-linux-x64.rpm
[root@localhost ~]# vim /etc/profile    # edit the environment variables: add the following at the end of the file
export JAVA_HOME=/usr/java/jdk1.8.0_144
export JRE_HOME=$JAVA_HOME/jre
export PATH=$PATH:$JAVA_HOME/bin
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

/etc/profile is read at login, so the change applies to all users but only from their next login. To make it take effect in the current session right away, you need to

[root@localhost ~]# source /etc/profile
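Since the same variables have to end up on every machine, the edit can be scripted so that running it twice does not add the lines twice. This is a rough sketch only: `add_java_env` is a name invented here, and the JDK path is simply the one used above.

```shell
#!/bin/bash
# Hypothetical helper: append the JDK variables to the profile file $1,
# using $2 as JAVA_HOME, unless JAVA_HOME is already exported there.
add_java_env() {
    if grep -q '^export JAVA_HOME=' "$1"; then
        echo "JAVA_HOME already set in $1"
        return 0
    fi
    # \$ keeps the variable references literal in the written file
    cat >> "$1" <<EOF
export JAVA_HOME=$2
export JRE_HOME=\$JAVA_HOME/jre
export PATH=\$PATH:\$JAVA_HOME/bin
export CLASSPATH=.:\$JAVA_HOME/lib/dt.jar:\$JAVA_HOME/lib/tools.jar
EOF
}

# Demonstration on a temporary file instead of /etc/profile
demo_profile=$(mktemp)
add_java_env "$demo_profile" /usr/java/jdk1.8.0_144
add_java_env "$demo_profile" /usr/java/jdk1.8.0_144    # second call changes nothing
cat "$demo_profile"
```

For the real thing the first argument would be `/etc/profile`, and `java -version` after `source /etc/profile` is a quick way to confirm the result.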

4. Install ntpdate so the clock can be resynchronized whenever a virtual machine has been suspended and restarted

[root@localhost ~]# yum install -y ntpdate
[root@localhost ~]# ntpdate ntp1.aliyun.com

5. Create the hadoop user and add it to the wheel group

[root@localhost ~]# useradd hadoop
[root@localhost ~]# passwd hadoop

Only users in the wheel group are allowed to switch to root with su - root, which improves security

[root@localhost ~]# sed -i 's/#auth\t\trequired\tpam_wheel.so/auth\t\trequired\tpam_wheel.so/g' '/etc/pam.d/su'
[root@localhost ~]# cp /etc/login.defs /etc/login.defs_bak
[root@localhost ~]# echo "SU_WHEEL_ONLY yes" >> /etc/login.defs

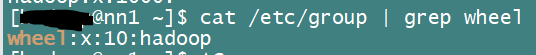

Add the hadoop user to the wheel group

[root@localhost ~]# gpasswd -a hadoop wheel
[root@localhost ~]# cat /etc/group | grep wheel    # check whether hadoop has joined the wheel group

6. Configure the hosts file of the virtual machine

[root@localhost ~]# vim /etc/hosts
192.168.10.3 nn1.hadoop    # this machine's own IP; its hostname is configured later
192.168.10.4 nn2.hadoop
192.168.10.5 s1.hadoop
192.168.10.6 s2.hadoop
192.168.10.7 s3.hadoop
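If you would rather not edit the hosts file by hand, the entries can be appended with a small loop. Treat this as a sketch under assumptions: `add_host_entry` is a hypothetical helper, and it only checks whether the hostname is already present, not whether the existing IP is the right one.

```shell
#!/bin/bash
# Hypothetical helper: append "ip name" to the hosts file $1 unless the
# hostname $3 already appears as a name on some line.
add_host_entry() {
    # awk exits 0 when the name is found in any field after the IP
    if awk -v n="$3" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$1"; then
        return 0
    fi
    printf '%s %s\n' "$2" "$3" >> "$1"
}

# Demonstration on a temporary file instead of /etc/hosts
demo_hosts=$(mktemp)
while read -r ip name; do
    add_host_entry "$demo_hosts" "$ip" "$name"
done <<'EOF'
192.168.10.3 nn1.hadoop
192.168.10.4 nn2.hadoop
192.168.10.5 s1.hadoop
192.168.10.6 s2.hadoop
192.168.10.7 s3.hadoop
EOF
add_host_entry "$demo_hosts" 192.168.10.3 nn1.hadoop    # already present, nothing is added
cat "$demo_hosts"
```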

7. Clone four more virtual machines with the VM cloning feature

After cloning, change the hostname of each machine and give it a static IP; both must match the entries in the hosts file above.

[root@localhost ~]# hostnamectl set-hostname nn1.hadoop
[root@localhost ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33
TYPE="Ethernet"
PROXY_METHOD="none"
BROWSER_ONLY="no"
BOOTPROTO="static"    # changed to static
IPADDR="192.168.10.3"    # added: the IP that belongs to this particular virtual machine
NETMASK="255.255.255.0"    # added
GATEWAY="192.168.10.2"    # added: the gateway of your virtual network
DNS1="192.168.10.2"    # added
NM_CONTROLLED="no"    # added so that NetworkManager does not manage this interface; otherwise a change to this file may be applied automatically and drop the network
DEFROUTE="yes"
IPV4_FAILURE_FATAL="no"
IPV6INIT="yes"
IPV6_AUTOCONF="yes"
IPV6_DEFROUTE="yes"
IPV6_FAILURE_FATAL="no"
IPV6_ADDR_GEN_MODE="stable-privacy"
NAME="ens33"
UUID="49f05112-b80b-45c2-a3ec-d64c76ed2d9b"
DEVICE="ens33"
ONBOOT="yes"

[root@localhost ~]# systemctl stop NetworkManager.service    # stop the NetworkManager service
[root@localhost ~]# systemctl disable NetworkManager.service    # do not start it at boot
[root@localhost ~]# systemctl restart network.service    # restart the network service

At this point there should be five virtual machines, each configured with one of the following IP and hostname pairs, and each with the hosts file set up.

192.168.10.3 nn1.hadoop

192.168.10.4 nn2.hadoop

192.168.10.5 s1.hadoop

192.168.10.6 s2.hadoop

192.168.10.7 s3.hadoop

On every machine the firewall and SELinux should also be disabled, JDK 8 installed with its environment variables configured, and the hadoop user created and added to the wheel group.
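Because the hostname and static IP of each clone must agree with the hosts file, a small lookup helps to double-check them. The sketch below is only an illustration: `ip_for_host` is a name made up here, and the demo works on a temporary copy of the table above rather than on /etc/hosts.

```shell
#!/bin/bash
# Hypothetical helper: print the IP that the hosts file $1 assigns to the
# hostname $2 (prints nothing if the name is not listed).
ip_for_host() {
    awk -v n="$2" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }' "$1"
}

# Demonstration against a temporary copy of the five entries
demo_hosts=$(mktemp)
cat > "$demo_hosts" <<'EOF'
192.168.10.3 nn1.hadoop
192.168.10.4 nn2.hadoop
192.168.10.5 s1.hadoop
192.168.10.6 s2.hadoop
192.168.10.7 s3.hadoop
EOF

for h in nn1.hadoop nn2.hadoop s1.hadoop s2.hadoop s3.hadoop; do
    echo "$h should use IPADDR=\"$(ip_for_host "$demo_hosts" "$h")\""
done
```

On a real machine, comparing `ip_for_host /etc/hosts "$(hostname)"` with the output of `hostname -I` is one way to spot a clone whose address was not changed.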

8. Configure passwordless SSH login among the five machines

The operations above were performed as root; from here on, switch to the hadoop user, which is used for almost all subsequent operations.

[root@nn1 ~]# su - hadoop    # the "-" switches the user and the environment variables together
[hadoop@nn1 ~]$    # the prompt shows we are now the hadoop user; # and $ mark root and regular users respectively

Start setting up passwordless SSH

The idea is to generate a key pair on each machine, collect all the public keys (the .pub files) into one ~/.ssh/authorized_keys file, and distribute that file to every machine. The five machines can then SSH to each other without a password.

[hadoop@nn1 ~]$ pwd    # check the current path; it should be the hadoop user's home directory
/home/hadoop
[hadoop@nn1 ~]$ mkdir .ssh
[hadoop@nn1 ~]$ chmod 700 ./.ssh
[hadoop@nn1 ~]$ ll -a
drwx------ 2 hadoop hadoop 132 Jul 16 22:13 .ssh

[hadoop@nn1 ~]$ ssh-keygen -t rsa    # create the key files

nn1 is now set up (it will be our main operating machine from here on). Repeat the steps above on the remaining four machines. Then copy each of those machines' ~/.ssh/id_rsa.pub into ~/.ssh/ on nn1, renaming it on the way so the files do not overwrite one another.

[hadoop@nn2 ~]$ scp ~/.ssh/id_rsa.pub hadoop@nn1.hadoop:~/.ssh/id_rsa.pubnn2

At this point there should be five .pub files under ~/.ssh/ on nn1 (nn1's own is not renamed). Append each of them to the authorized_keys file:

[hadoop@nn1 ~]$ touch ~/.ssh/authorized_keys
[hadoop@nn1 ~]$ chmod 600 ~/.ssh/authorized_keys
[hadoop@nn1 ~]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
[hadoop@nn1 ~]$ cat ~/.ssh/id_rsa.pubnn2 >> ~/.ssh/authorized_keys
[hadoop@nn1 ~]$ cat ~/.ssh/id_rsa.pubs1 >> ~/.ssh/authorized_keys
............
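The appending step can be written as a loop over every id_rsa.pub* file, which also avoids duplicate lines if it is run more than once. This is just a sketch: `merge_pub_keys` is a hypothetical name, and the keys in the demonstration are placeholders, not real keys.

```shell
#!/bin/bash
# Hypothetical helper: append every id_rsa.pub* file found in directory $1
# to $1/authorized_keys, skipping keys that are already listed.
merge_pub_keys() {
    touch "$1/authorized_keys"
    chmod 600 "$1/authorized_keys"
    for pub in "$1"/id_rsa.pub*; do
        [ -f "$pub" ] || continue
        while IFS= read -r key || [ -n "$key" ]; do
            [ -n "$key" ] || continue
            # -x matches the whole line, -F treats the key as a fixed string
            grep -qxF -- "$key" "$1/authorized_keys" || printf '%s\n' "$key" >> "$1/authorized_keys"
        done < "$pub"
    done
}

# Demonstration in a temporary directory with placeholder keys
demo_dir=$(mktemp -d)
echo 'ssh-rsa AAAA-placeholder-key-1 hadoop@nn1.hadoop' > "$demo_dir/id_rsa.pub"
echo 'ssh-rsa AAAA-placeholder-key-2 hadoop@nn2.hadoop' > "$demo_dir/id_rsa.pubnn2"
merge_pub_keys "$demo_dir"
merge_pub_keys "$demo_dir"    # running it again adds nothing new
cat "$demo_dir/authorized_keys"
```

On nn1 the call would be `merge_pub_keys ~/.ssh` once all five .pub files are in place.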

Finally, send the authorized_keys file to the other four machines (the batch script had not been written yet at this point, so it was sent to each machine in turn with scp)

The SSH configuration of the five machines is now complete; test each machine separately (omitted).
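The omitted test could look roughly like the following. It is a sketch, not the original author's procedure: `check_ssh_all` and the `SSH_CMD` override are invented for this example, while `BatchMode` and `ConnectTimeout` are standard OpenSSH options. With `BatchMode=yes`, ssh fails instead of asking for a password, so a host that still needs one shows up as FAIL.

```shell
#!/bin/bash
# Hostnames taken from the hosts file above
HOSTS="nn1.hadoop nn2.hadoop s1.hadoop s2.hadoop s3.hadoop"
# Hypothetical override hook: set SSH_CMD="echo ssh" for a dry run
SSH_CMD="${SSH_CMD:-ssh}"

# Try a passwordless login to every host and report OK or FAIL for each one.
# The return value is the number of hosts that failed.
check_ssh_all() {
    failed=0
    for h in $HOSTS; do
        if $SSH_CMD -o BatchMode=yes -o ConnectTimeout=5 "hadoop@$h" hostname; then
            echo "OK   $h"
        else
            echo "FAIL $h"
            failed=$((failed + 1))
        fi
    done
    return "$failed"
}
```

Run `check_ssh_all` as the hadoop user on each of the five machines. Note that a host whose key has never been accepted will probably also be reported as FAIL in batch mode, so connect to it once by hand first.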

9. Batch scripting

With five machines, many operations have to be run on all of them, so we need scripts that execute a command on every machine in one go.

#File name: ips (this label line is not part of the file)
"nn1.hadoop"
"nn2.hadoop"
"s1.hadoop"
"s2.hadoop"
"s3.hadoop"

#!/bin/bash
#File name: ssh_all.sh
# Run the given command as the hadoop user on every host listed in ips
RUN_HOME=$(cd "$(dirname "$0")"; echo "${PWD}")
NOW_LIST=(`cat ${RUN_HOME}/ips`)
SSH_USER="hadoop"
for i in ${NOW_LIST[@]}; do
    f_cmd="ssh $SSH_USER@$i \"$*\""
    echo $f_cmd
    if eval $f_cmd; then
        echo "OK"
    else
        echo "FAIL"
    fi
done

#!/bin/bash
#File name: ssh_root.sh
# Run the given command as root on every host listed in ips, through ~/exe.sh
RUN_HOME=$(cd "$(dirname "$0")"; echo "${PWD}")
NOW_LIST=(`cat ${RUN_HOME}/ips`)
SSH_USER="hadoop"
for i in ${NOW_LIST[@]}; do
    f_cmd="ssh $SSH_USER@$i ~/exe.sh \"$*\""
    echo $f_cmd
    if eval $f_cmd; then
        echo "OK"
    else
        echo "FAIL"
    fi
done

#File name exe.sh
# Switch to root with su and run the command passed as arguments
cmd=$*
su - <<EOF
$cmd
EOF

#!/bin/bash
# Copy the local file $1 to the path $2 on every host listed in ips
RUN_HOME=$(cd "$(dirname "$0")"; echo "${PWD}")
NOW_LIST=(`cat ${RUN_HOME}/ips`)
SSH_USER="hadoop"
for i in ${NOW_LIST[@]}; do
    f_cmd="scp $1 $SSH_USER@$i:$2"
    echo $f_cmd
    if eval $f_cmd; then
        echo "ok"
    else
        echo "FAIL"
    fi
done
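All of these scripts depend on the ips file being read correctly, so it can be worth previewing the host list before running anything on five machines. The following is a sketch under assumptions: `read_ips` is a hypothetical helper that strips the double quotes and skips comment lines, and the loop only prints the commands instead of running them.

```shell
#!/bin/bash
# Hypothetical helper: print one hostname per line from the ips file $1,
# dropping comment lines and the surrounding double quotes.
read_ips() {
    grep -v '^#' "$1" | tr -d '"' | tr -s '[:space:]' '\n' | grep -v '^$'
}

# Demonstration with a temporary ips file in the same format as above
demo_ips=$(mktemp)
printf '%s\n' '"nn1.hadoop"' '"nn2.hadoop"' '"s1.hadoop"' '"s2.hadoop"' '"s3.hadoop"' > "$demo_ips"

# Dry run: show what a batch script would execute on each host
for host in $(read_ips "$demo_ips"); do
    echo "would run: ssh hadoop@$host hostname"
done
```

Once the list looks right, an invocation such as `./ssh_all.sh hostname` should print each machine's hostname followed by OK.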

The preparations are now complete; the next step is to install and configure ZooKeeper.