
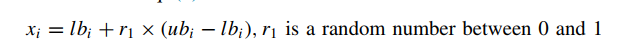


Introduction:

The honey badger is a mammal with black and white fur. It lives mainly in the semi-deserts and rainforests of Africa, southwest Asia and the Indian subcontinent, and is famous for its fearless nature.

In recent years, the field of numerical optimization has motivated the research community to propose and develop various metaheuristic optimization algorithms. This paper presents a new metaheuristic optimization algorithm, the honey badger algorithm (HBA). Inspired by the intelligent foraging behavior of the honey badger, the algorithm mathematically develops an efficient search strategy for solving optimization problems. In HBA, the dynamic search behavior of the honey badger, with its digging and honey-finding approaches, is formulated as the exploration and exploitation phases. In addition, through controlled randomization techniques, HBA maintains sufficient population diversity even toward the end of the search process. To evaluate the efficiency of HBA, 24 standard benchmark functions, the CEC'17 test suite and four engineering design problems were solved. The solutions obtained with HBA were compared with those of ten well-known metaheuristic algorithms: simulated annealing (SA), particle swarm optimization (PSO), covariance matrix adaptation evolution strategy (CMA-ES), success-history based adaptive differential evolution with linear population size reduction (L-SHADE), moth-flame optimization (MFO), elephant herding optimization (EHO), whale optimization algorithm (WOA), grasshopper optimization algorithm (GOA), thermal exchange optimization (TEO) and Harris hawks optimization (HHO). Experimental results and statistical analysis show that HBA solves optimization problems with complex search spaces effectively. Compared with the other methods in this study, it also has advantages in convergence speed and in balancing exploration and exploitation.

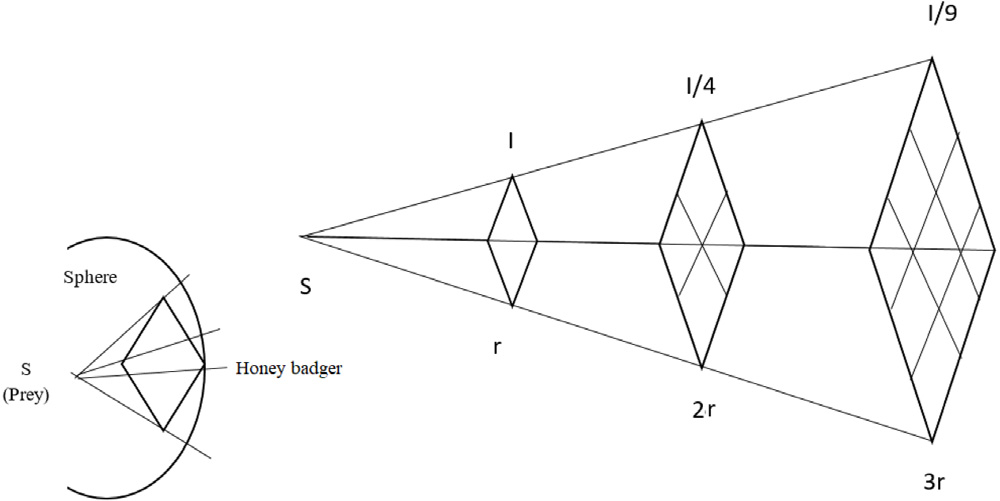

Generally speaking, a honey badger uses its sense of smell to continuously locate its prey. The honey badger loves honey but is not good at locating beehives. Interestingly, the honeyguide (a bird) can find hives but cannot get at the honey. The two have formed a cooperative relationship: the bird leads the honey badger to the hive, and the badger opens it with its front claws. Both then enjoy the rewards of teamwork.

So, to find a hive, the honey badger either sniffs and digs, or follows the honeyguide bird. The first case is called the digging mode and the second the honey mode. In digging mode, the honey badger uses its sense of smell to locate the hive; when it gets close, it chooses a suitable place to dig. In honey mode, the honey badger relies directly on the honeyguide to locate the hive.


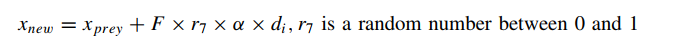

Main flow of the algorithm:

1. Initialization phase

Initialize the number of honey badgers (population size N) and their respective positions.

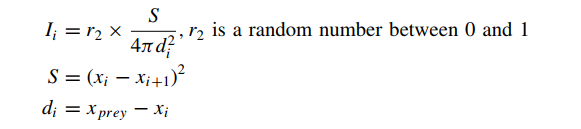
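As a rough illustration of this step, here is a minimal Python sketch that mirrors the MATLAB `initialization` function listed later. Each coordinate is drawn as x_ij = lb_j + r1 × (ub_j − lb_j), with r1 uniform in (0, 1). Accepting either scalar or per-dimension list bounds is an assumption of this sketch, chosen to match the two branches of the MATLAB code.

```python
import random


def initialization(n, dim, ub, lb):
    """Uniformly sample n positions inside [lb, ub] (Python sketch of the MATLAB function).

    ub and lb may be scalars (same range in every dimension) or length-dim lists.
    """
    # Broadcast scalar bounds, like the size(up,2)==1 branch of the MATLAB code.
    if not isinstance(ub, (list, tuple)):
        ub = [ub] * dim
    if not isinstance(lb, (list, tuple)):
        lb = [lb] * dim
    # x_ij = lb_j + r1 * (ub_j - lb_j), with a fresh r1 ~ U(0, 1) per coordinate.
    return [[lb[j] + random.random() * (ub[j] - lb[j]) for j in range(dim)]
            for _ in range(n)]


random.seed(0)
pop = initialization(5, 3, 10.0, -10.0)  # 5 badgers in [-10, 10]^3
```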

2. Define intensity (I)

Intensity is related to the concentration strength of the prey and to the distance between the prey and the i-th honey badger. I_i is the smell intensity of the prey: if the smell is strong, the honey badger moves fast, and vice versa. The intensity is given by the inverse square law.
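The `Intensity` function in the source code below computes I_i = r2 × S_i / (4π d_i²). Here S_i is the squared distance from badger i to the next badger (the last one wraps around to the first), d_i² is the squared distance from badger i to the prey, and r2 is uniform in (0, 1). A Python sketch of the same computation follows. Passing a random source `rng` as a parameter is an assumption made here so that the result can be checked deterministically.

```python
import math
import random
import sys

EPS = sys.float_info.epsilon  # same value as MATLAB's eps (2^-52)


def sq_dist(a, b):
    """Squared distance ||a - b + eps||^2, matching norm(a - b + eps)^2 in the MATLAB code."""
    return sum((x - y + EPS) ** 2 for x, y in zip(a, b))


def intensity(x_prey, pop, rng=random):
    """Return I_i = r2 * S_i / (4 * pi * d_i^2) for every honey badger i.

    S_i: squared distance from badger i to badger i+1 (the last one wraps to the first).
    d_i^2: squared distance from badger i to the prey; r2 ~ U(0, 1), one draw per badger.
    """
    n = len(pop)
    values = []
    for i in range(n):
        s_i = sq_dist(pop[i], pop[(i + 1) % n])  # neighbour distance (source strength)
        d2_i = sq_dist(pop[i], x_prey)           # squared distance to the prey
        values.append(rng.random() * s_i / (4 * math.pi * d2_i))
    return values


print(intensity([0.0, 0.0], [[1.0, 2.0], [3.0, -1.0], [-2.0, 0.5]]))
```

Badgers that are far from the prey but close to their neighbour get a small intensity. This follows the inverse square law described above.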

3. Update density factor

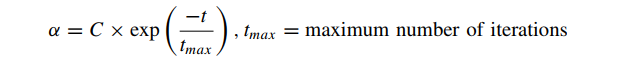

The density factor (α) controls time-varying randomization to ensure a smooth transition from exploration to exploitation. α decreases as the iterations proceed, reducing randomization over time, according to Eq. (3): α = C × exp(−t / t_max). Here t is the current iteration, t_max is the maximum number of iterations and C is a constant (C = 2 in the code below).
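To see how quickly randomization fades, here is a small Python sketch of Eq. (3) as written in the MATLAB line `alpha=C*exp(-t/tmax)`. The function name `density_factor` is hypothetical.

```python
import math


def density_factor(t, t_max, c=2.0):
    """alpha = C * exp(-t / t_max), Eq. (3); C = 2 matches the MATLAB listing."""
    return c * math.exp(-t / t_max)


# alpha starts just below C at t = 1 and decays monotonically to C / e at t = t_max.
schedule = [density_factor(t, 100) for t in range(1, 101)]
print(round(schedule[0], 4), round(schedule[-1], 4))
```

Because α scales every random step in both update phases, its decay is what shifts the search from exploration toward exploitation.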

4. Escape from local optimum

This step and the next two are used to escape local optimum regions. The algorithm uses a flag F that changes the search direction, giving the search agents a high chance to scan the search space rigorously.

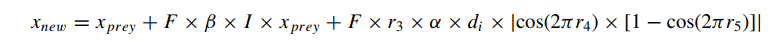
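In the MATLAB code, the flag is drawn as `F=vec_flag(floor(2*rand()+1))` with `vec_flag=[1,-1]`, so F is 1 or −1 with equal probability. Below is a Python sketch of the same draw. The name `search_flag` and the `rng` parameter are assumptions of this sketch.

```python
import math
import random


def search_flag(rng=random):
    """Return the direction flag F: 1 or -1 with equal probability.

    Mirrors F = vec_flag(floor(2*rand()+1)) with vec_flag = [1, -1]; Python's
    0-based indexing turns floor(2*r + 1) into floor(2*r), which is 0 or 1.
    """
    vec_flag = [1, -1]
    return vec_flag[math.floor(2 * rng.random())]


print([search_flag() for _ in range(8)])
```

Because F multiplies every step term in both phases, a value of −1 sends the candidate to the opposite side of the prey, which is what helps the search leave a local optimum.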

5. Update agent location

5.1 Digging phase
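The digging-phase update is the `r<.5` branch of the MATLAB loop. For each coordinate it computes x_new = x_prey + F·β·I·x_prey + F·r3·α·d·|cos(2π r4)·(1 − cos(2π r5))|, where d = x_prey − x_i, r3, r4 and r5 are uniform in (0, 1), and β = 6 is "the ability of HB to get the food" from the code's comment. The following Python sketch reproduces that line. The function name `digging_update` and its argument order are hypothetical.

```python
import math
import random


def digging_update(x_prey, x_i, intensity_i, alpha, flag, beta=6.0, rng=random):
    """Digging-phase position update (Eq. (4)), one coordinate at a time as in the MATLAB loop.

    x_new = x_prey + F*beta*I*x_prey + F*r3*alpha*d*|cos(2*pi*r4) * (1 - cos(2*pi*r5))|,
    where d = x_prey - x_i and r3, r4, r5 ~ U(0, 1) are drawn per coordinate.
    """
    new = []
    for p, x in zip(x_prey, x_i):
        d = p - x
        r3, r4, r5 = rng.random(), rng.random(), rng.random()
        # Cardioid-like factor: the badger "digs" around the prey along a noisy path.
        shake = abs(math.cos(2 * math.pi * r4) * (1 - math.cos(2 * math.pi * r5)))
        new.append(p + flag * beta * intensity_i * p + flag * r3 * alpha * d * shake)
    return new


print(digging_update([1.0, -2.0], [3.0, 0.5], intensity_i=0.1, alpha=1.5, flag=-1))
```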

5.2 Honey phase
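In the honey phase (the `else` branch of the MATLAB loop), the honey badger follows the honeyguide toward the prey. The update is x_new = x_prey + F·r7·α·d, with d = x_prey − x_i and r7 uniform in (0, 1). Below is a Python sketch of that line. The function name `honey_update` and the `rng` parameter are hypothetical.

```python
import random


def honey_update(x_prey, x_i, alpha, flag, rng=random):
    """Honey-phase position update as coded in the MATLAB loop.

    x_new = x_prey + F * r7 * alpha * d, with d = x_prey - x_i and a fresh r7 ~ U(0, 1)
    per coordinate. The step shrinks as alpha decays over the iterations.
    """
    return [p + flag * rng.random() * alpha * (p - x) for p, x in zip(x_prey, x_i)]


print(honey_update([2.0, 0.0], [0.0, 4.0], alpha=1.0, flag=1))
```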

Source code analysis:

The honey badger algorithm code consists mainly of population initialization, fitness evaluation, position updates, the smell intensity (attraction) calculation and the density factor update.

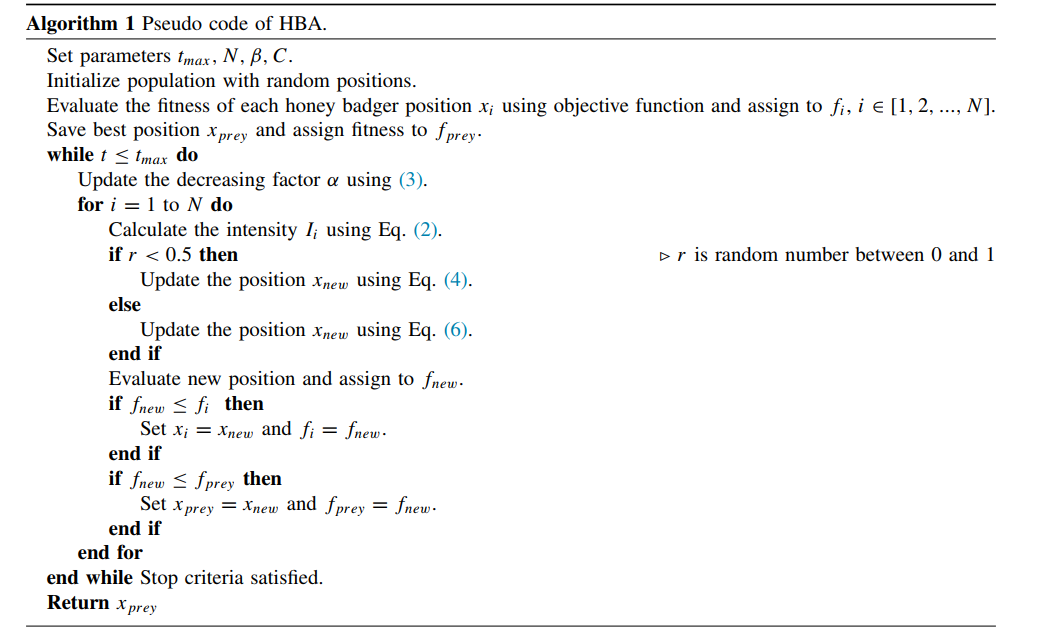

Population initialization code:

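```matlab
function [X]=initialization(N,dim,up,down)
% Uniformly sample N positions inside the bounds [down, up]
if size(up,2)==1
    % Scalar bounds: the same range for every dimension
    X=rand(N,dim).*(up-down)+down;
end
if size(up,2)>1
    % Vector bounds: sample each dimension within its own range
    for i=1:dim
        high=up(i);low=down(i);
        X(:,i)=rand(N,1).*(high-low)+low;
    end
end
end
```

Algorithm operation code: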

```matlab
%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
% Honey Badger Algorithm source code
% paper:
% Hashim, Fatma A., Essam H. Houssein, Kashif Hussain, Mai S. Mabrouk, Walid Al-Atabany.
% "Honey Badger Algorithm: New Metaheuristic Algorithm for Solving Optimization Problems."
% Mathematics and Computers in Simulation, 2021.
%
%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
%
function [Xprey, Food_Score,CNVG] = HBA(objfunc, dim,lb,ub,tmax,N)
beta = 6;         % the ability of HB to get the food Eq.(4)
C = 2;            % constant in Eq. (3)
vec_flag=[1,-1];
% initialization
X=initialization(N,dim,ub,lb);
% Evaluation
fitness = fun_calcobjfunc(objfunc, X);
[GYbest, gbest] = min(fitness);
Xprey = X(gbest,:);
Xnew = zeros(N,dim);                % preallocate candidate positions
for t = 1:tmax
    alpha=C*exp(-t/tmax);           % density factor in Eq. (3)
    I=Intensity(N,Xprey,X);         % intensity in Eq. (2)
    for i=1:N
        r =rand();                  % chooses digging (r<0.5) or honey phase
        F=vec_flag(floor(2*rand()+1));  % flag F: 1 or -1 with equal probability
        for j=1:1:dim
            di=((Xprey(j)-X(i,j)));
            if r<.5
                % digging phase
                r3=rand; r4=rand; r5=rand;
                Xnew(i,j)=Xprey(j) +F*beta*I(i)* Xprey(j)+F*r3*alpha*(di)*abs(cos(2*pi*r4)*(1-cos(2*pi*r5)));
            else
                % honey phase
                r7=rand;
                Xnew(i,j)=Xprey(j)+F*r7*alpha*di;
            end
        end
        % move coordinates that left [lb, ub] back onto the violated bound
        FU=Xnew(i,:)>ub;FL=Xnew(i,:)<lb;Xnew(i,:)=(Xnew(i,:).*(~(FU+FL)))+ub.*FU+lb.*FL;
        tempFitness = fun_calcobjfunc(objfunc, Xnew(i,:));
        % greedy selection: keep the new position only if it is better
        if tempFitness<fitness(i)
            fitness(i)=tempFitness;
            X(i,:)= Xnew(i,:);
        end
    end
end
...
end
```
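The dense bound-handling line `FU=...;FL=...;Xnew(i,:)=...` pushes every coordinate that left [lb, ub] back onto the bound it violated. Here is a Python sketch of the same rule, assuming per-dimension list bounds. The name `clamp_to_bounds` is hypothetical.

```python
def clamp_to_bounds(x, lb, ub):
    """Push every coordinate outside [lb_j, ub_j] back onto the bound it violates.

    Same effect as FU=x>ub; FL=x<lb; x=(x.*(~(FU+FL)))+ub.*FU+lb.*FL in MATLAB,
    with lb and ub given as per-dimension lists (an assumption of this sketch).
    """
    return [hi if v > hi else (lo if v < lo else v) for v, lo, hi in zip(x, lb, ub)]


print(clamp_to_bounds([-5.0, 0.5, 7.0], [-1.0] * 3, [1.0] * 3))  # [-1.0, 0.5, 1.0]
```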

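Objective function evaluation and intensity calculation:

```matlab
function Y = fun_calcobjfunc(func, X)
% Evaluate the objective function for every row (individual) of X
N = size(X,1);
Y = zeros(1,N);
for i = 1:N
    Y(i) = func(X(i,:));
end
end

function I=Intensity(N,Xprey,X)
% Smell intensity of the prey for each honey badger, Eq. (2)
di = zeros(1,N); S = zeros(1,N); I = zeros(1,N);
for i=1:N-1
    di(i) =( norm((X(i,:)-Xprey+eps))).^2;   % squared distance to the prey
    S(i)=( norm((X(i,:)-X(i+1,:)+eps))).^2;  % squared distance to the next badger
end
di(N)=( norm((X(N,:)-Xprey+eps))).^2;
S(N)=( norm((X(N,:)-X(1,:)+eps))).^2;        % last badger wraps around to the first
for i=1:N
    r2=rand;
    I(i)=r2*S(i)/(4*pi*di(i));               % inverse square law
end
end
```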
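For readers who want to experiment outside MATLAB, below is a compact, self-contained Python sketch of the whole loop, assembled from the listing above. It rests on two assumptions. First, the bounds are scalars. Second, the prey (best-so-far solution) is refreshed after every iteration whenever the population's best improves on it. That refresh step is hidden behind the `...` in the listing, so this part is a guess rather than the author's code. The names `hba` and `sphere` and the `seed` parameter are hypothetical.

```python
import math
import random
import sys


def hba(objfunc, dim, lb, ub, t_max, n, beta=6.0, c=2.0, seed=None):
    """Compact sketch of the HBA loop with scalar bounds lb <= x_j <= ub.

    Assumption: the prey is refreshed at the end of every iteration when the
    population's best improves on it; the post elides this step.
    """
    rng = random.Random(seed)
    eps = sys.float_info.epsilon

    def sq(a, b):  # ||a - b + eps||^2, as in the MATLAB Intensity function
        return sum((x - y + eps) ** 2 for x, y in zip(a, b))

    pop = [[lb + rng.random() * (ub - lb) for _ in range(dim)] for _ in range(n)]
    fit = [objfunc(x) for x in pop]
    k = min(range(n), key=fit.__getitem__)
    prey, best = pop[k][:], fit[k]
    curve = []
    for t in range(1, t_max + 1):
        alpha = c * math.exp(-t / t_max)  # density factor, Eq. (3)
        inten = [rng.random() * sq(pop[i], pop[(i + 1) % n]) / (4 * math.pi * sq(pop[i], prey))
                 for i in range(n)]  # intensity, Eq. (2)
        for i in range(n):
            r = rng.random()
            flag = 1 if rng.random() < 0.5 else -1  # flag F
            new = []
            for j in range(dim):
                d = prey[j] - pop[i][j]
                if r < 0.5:  # digging phase, Eq. (4)
                    r3, r4, r5 = rng.random(), rng.random(), rng.random()
                    shake = abs(math.cos(2 * math.pi * r4) * (1 - math.cos(2 * math.pi * r5)))
                    v = prey[j] + flag * beta * inten[i] * prey[j] + flag * r3 * alpha * d * shake
                else:  # honey phase
                    v = prey[j] + flag * rng.random() * alpha * d
                new.append(min(max(v, lb), ub))  # clamp to the bounds
            f_new = objfunc(new)
            if f_new < fit[i]:  # greedy replacement
                fit[i], pop[i] = f_new, new
        k = min(range(n), key=fit.__getitem__)
        if fit[k] < best:  # assumed prey update (elided in the post)
            prey, best = pop[k][:], fit[k]
        curve.append(best)
    return prey, best, curve


def sphere(x):
    """Hypothetical test function: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


prey, score, curve = hba(sphere, dim=10, lb=-100.0, ub=100.0, t_max=200, n=20, seed=1)
print("best fitness:", score)
```

With a fixed seed the run is reproducible, but its numbers will not match the MATLAB run shown in this post. The test function, dimension, iteration budget and random streams all differ.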

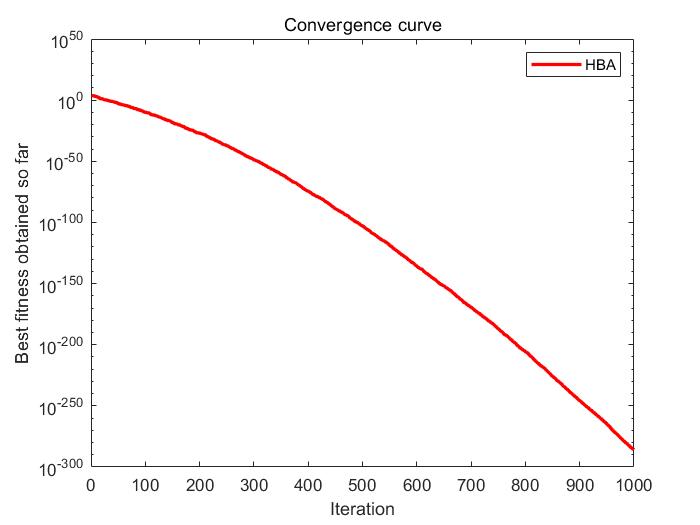

Algorithm test:

```
The best location= 8.7878e-145 -7.5945e-149 -5.1457e-145 -1.2854e-145 -1.9505e-146 -4.7359e-147 1.7092e-144 -3.8037e-145 -2.195e-145 -1.809e-146 8.3212e-145 -1.339e-145 -3.7686e-145 1.8082e-145 -2.389e-146 3.5925e-146 -7.7913e-145 -2.7082e-145 2.0061e-145 8.9784e-146 -6.0477e-145 -3.403e-145 -4.933e-146 -3.4153e-145 -1.4537e-144 1.0279e-145 3.1436e-145 6.0489e-146 -4.4064e-146 9.6791e-146
The best fitness score = 1.1571e-286
```

Algorithm summary

The design of HBA is simple and clear. The honey attraction mechanism gives the algorithm good local search ability by guiding individuals in the population toward the best individual. The density factor, in turn, preserves its global search ability. In testing, the algorithm performs well on most test functions. It has since been studied by many researchers and applied in many fields.

If you need the original paper or source code, or have ideas for improving the algorithm, feel free to contact me by private message.

About the author: graduated in control engineering from a top university in China. His research covers intelligent optimization algorithms and their applications, operations research and optimization scheduling, and solving models with various solvers. It also covers artificial intelligence, machine learning, and deep learning algorithms and their applications. He is skilled at improving algorithms and at modeling and solving mathematical models, and is familiar with many solvers.