Article catalog

preface

With the continuous development of artificial intelligence, machine learning technology is becoming more and more important. Many people have started learning machine learning. This paper introduces the basic content of machine learning - k-nearest neighbor algorithm and the implementation of python.

Tip: the following is the main content of this article. The following cases can be used for reference

1, What is the k-nearest neighbor algorithm?

K-nearest neighbor (KNN) classification algorithm is a basic classification and regression method proposed by Cover T and Hart P in 1967. It is also one of the simplest machine learning algorithms. The idea of this method is: in the feature space, if most of the k nearest (i.e. the nearest) samples near a sample belong to a certain category, the sample also belongs to this category. Generally speaking, we only select the first k most similar data in the sample data set, which is the source of K in the k-nearest neighbor algorithm. Generally, K is an integer not greater than 20. Finally, the most frequent classification among the k most similar data is selected as the classification of new data. (he who is near to Zhu is red, and he who is near to ink is black)

2, sklearn handwritten numeral recognition based on k-nearest neighbor algorithm

1. General flow of k-nearest neighbor algorithm

(1) Data collection: crawlers can be used to collect data, and free or charged data provided by third parties can also be used. Generally speaking, the data is stored in a txt text file in a certain format for easy analysis and processing.

(2) Prepare data: parse and preprocess data using Python.

(3) Analyze data: there are many ways to analyze data, such as visualizing data using Matplotlib.

(4) Test algorithm: calculate the error rate.

(5) Use algorithm: if the error rate is within an acceptable range, you can run k-nearest neighbor algorithm for classification.

2. Actual combat background

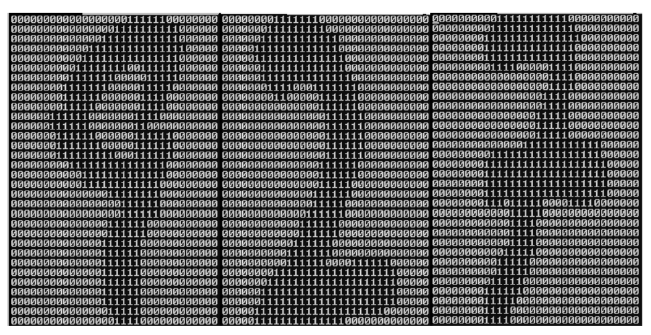

In this section, we construct a handwriting recognition system using K-nearest neighbor classifier step by step. For simplicity, the system constructed here can only recognize numbers 0 to 9, see Figure 1-1. The number to be recognized has been processed into a black-and-white image with the same color and size 1: width and height of 32 pixels x 32 pixels using graphics processing software.

Although using text format to store images can not make effective use of memory space, we still convert images to text format for ease of understanding.

Figure 1-1 Examples of handwritten digital data sets

In order to use the classifier of the previous two examples, we must format the image into a vector. We will convert a 32x32 binary image matrix into 1x1024 vectors, so that the classifiers used in the first two sections can process digital image information.

3. Prepare data: convert the image into test vector

Download dataset:

http://labfile.oss.aliyuncs.com/courses/777/digits.zip (source network)

There are two folders under the digits Directory:

trainingDigits: training data, 1934 files, about 200 files per number.

testDigits: test data, 946 files, about 100 files per number.

A handwritten number is stored in each file, and the file name is similar to 0_7.txt, the first number 0 means that the handwritten number in the file is 0, and the following 7 is a serial number.

We first write a function img2vector to convert the image into a vector: this function creates a 1x1024 NumPy array, then opens the given file, reads out the first 32 lines of the file circularly, stores the first 32 character values of each line in the NumPy array, and finally returns the array.

def img2vector(filename):

returnVect = zeros((1,1024))

fr = open(filename)

for i in range(32):

lineStr = fr.readline()

for j in range(32):

returnVect[0,32*i+j] = int(lineStr[j])

return returnVect

Then in Python Enter the following command on the command line to test img2vector Function, and then compare it with the file opened by the text editor:

>>> testVector = kNN.img2vector('testDigits/0_13.txt')

>>> testVector[0,0:31]

array([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 1., 1., 1., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.])

>>> testVector[0,31:63]

array([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 1., 1., 1., 1., 1., 1., 1., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.])4. Test algorithm

We have put the data processing component into a format that the classer can recognize. Next, we input these data into the classifier to detect the execution effect of the classifier. Before writing these codes, we must ensure that the from os import listdir is written to the beginning of the file. The main function of this code is to import the function listdir from the os module, which can list the file name of a given directory.

Test steps:

(1) Read the training data to the vector (handwritten picture data), and extract the category label list (the real number corresponding to each vector) from the data file name

(2) Read the test data to the vector and extract the category label from the data file name

(3) The K-nearest neighbor algorithm is implemented to test the test data, and the classification results are obtained

(4) Compare with the actual category label and record the classification error rate

(5) Print the classified data and error rate of each data file as the final result

def handwritingClassTest():

hwLabels = []

trainingFileList = listdir('digits/trainingDigits')

m = len(trainingFileList)

trainingMat = np.zeros((m, 1024))

for i in range(m):

fileNameStr = trainingFileList[i]

fileStr = fileNameStr.split('.')[0]

classNumStr = int(fileStr.split('_')[0])

hwLabels.append(classNumStr)

trainingMat[i, :] = img2vector(

'digits/trainingDigits/%s' % fileNameStr)

testFileList = listdir('digits/testDigits')

errorCount = 0.0

mTest = len(testFileList)

for i in range(mTest):

fileNameStr = testFileList[i]

fileStr = fileNameStr.split('.')[0]

classNumStr = int(fileStr.split('_')[0])

vectorUnderTest = img2vector('digits/testDigits/%s' % fileNameStr)

classifierResult = classify0(vectorUnderTest, trainingMat, hwLabels, 3)

print("Test sample %d, Classifier prediction: %d, Real category: %d" %

(i+1, classifierResult, classNumStr))

# Judge whether the result of K-nearest neighbor algorithm is accurate

if (classifierResult != classNumStr):

errorCount += 1.0

# Print error rate

print("\n Error classification count: %d" % errorCount)

print("\n Misclassification ratio: %f" % (errorCount/float(mTest)))

Finally, enter handwritingClassTest() to test the output of the function:

kNN.handwritingClassTest() the classifier came back with:6, the real answer is: 0 the classifier came back with:4, the real answer is: 0 .................. the classifier came back with:1, the real answer is: 7 the classifier came back with:1, the real answer is: 7 the classifier came back with:7, the real answer is: 7 the classifier came back with:0, the real answer is: 8 .................. the classifier came back with:7, the real answer is: 9 the classifier came back with:9, the real answer is: 9 the total number of errors is: 11 the total error rate is: 0.011628

K-nearest neighbor algorithm recognizes handwritten numeral data set, and the error rate is 1.2%. Changing the value of K, modifying the function handwritingClassTest, randomly selecting training samples and changing the number of training samples will affect the error rate of k-nearest neighbor algorithm.

summary

k-nearest neighbor algorithm is the most simple and effective algorithm for classifying data. k-nearest neighbor algorithm is case-based learning. When using the algorithm, we must have training sample data close to the actual data. k-nearest neighbor algorithm must save all data sets. If the training data set is large, it must use a lot of storage space. In addition, the distance value must be calculated for each data in the dataset, which may be very time-consuming in actual use.

Another defect of k-nearest neighbor algorithm is that it can not give any data infrastructure information, so we can not know the characteristics of average instance samples and typical instance samples.