Background

When the volume of metrics exceeds what a single Prometheus instance can process, we usually run multiple Prometheus instances, each collecting its own shard of the metrics, and then aggregate them through Thanos to provide a unified query service.

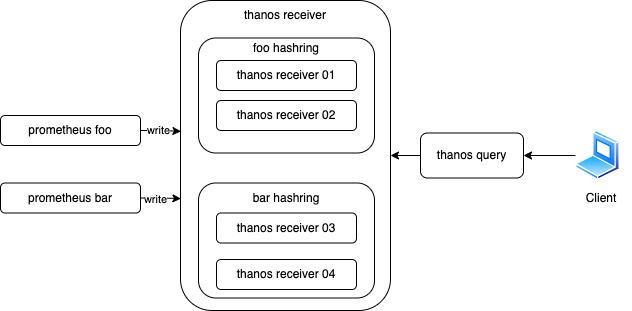

Through a simple experiment, this article demonstrates Thanos's ability to collect data in shards and to serve aggregated queries. The architecture is as follows:

The experiment assumes two Prometheus instances, each collecting a different shard of the monitoring metrics. The Prometheus instances write their data to Thanos receiver through remote write. The receivers run with replication enabled and route the data of different tenants to different receiver instances through a hashring: receiver 01 and receiver 02 store the data from Prometheus foo, while receiver 03 and receiver 04 store the data from Prometheus bar. Finally, Thanos query aggregates the data of all receivers and provides a unified query interface.
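The routing described above can be modelled in a few lines. This is a simplified sketch rather than Thanos's implementation: the layout mirrors the hashring.json used later in this article, SHA-256 stands in for whatever hash Thanos really uses, and the function names `select_hashring` and `replicas_for` are hypothetical. Only the overall shape is meant to carry over: pick the hashring by tenant, hash the series onto one endpoint, then take the following endpoints as replicas.

```python
import hashlib

# Layout mirroring the hashring.json used in this experiment.
HASHRINGS = [
    {"hashring": "foo",
     "endpoints": ["127.0.0.1:10907", "127.0.0.1:10910"],
     "tenants": ["foo"]},
    {"hashring": "bar",
     "endpoints": ["127.0.0.1:10913", "127.0.0.1:10916"],
     "tenants": ["bar"]},
]


def select_hashring(tenant, hashrings=HASHRINGS):
    """Return the first hashring whose tenant list contains the tenant.

    In this sketch a hashring without a tenant list accepts every tenant.
    """
    for ring in hashrings:
        tenants = ring.get("tenants") or []
        if not tenants or tenant in tenants:
            return ring
    raise LookupError(f"no hashring matches tenant {tenant!r}")


def replicas_for(tenant, series_key, replication_factor, hashrings=HASHRINGS):
    """Return the endpoints that should store one series of one tenant.

    SHA-256 is a stand-in hash (an assumption of this sketch), so the
    starting endpoint is illustrative only.
    """
    endpoints = select_hashring(tenant, hashrings)["endpoints"]
    digest = hashlib.sha256(f"{tenant}/{series_key}".encode()).digest()
    start = int.from_bytes(digest[:8], "big") % len(endpoints)
    # Replica i lands on the i-th endpoint after the starting one.
    return [endpoints[(start + i) % len(endpoints)]
            for i in range(replication_factor)]
```

With two endpoints per hashring and a replication factor of 2, every series of a tenant ends up on both endpoints of that tenant's hashring, whichever endpoint the hash picks first.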

Experimental verification

This article runs Prometheus and Thanos in Docker, so Docker must be installed on the machine beforehand. On Ubuntu or CentOS it can be installed with the following single command:

curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

1. Deploy the Prometheus instances

# cat << EOF > prometheus-foo-conf.yaml
global:
  scrape_interval: 5s
  external_labels:
    cluster: foo
    replica: 0
scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['127.0.0.1:9090']
remote_write:
  - url: 'http://127.0.0.1:10908/api/v1/receive'
    headers:
      THANOS-TENANT: foo
EOF

# docker run -d --net=host --rm \
    -v $(pwd)/prometheus-foo-conf.yaml:/etc/prometheus/prometheus.yaml \
    -u root \
    --name prometheus-foo \
    quay.io/prometheus/prometheus:v2.27.0 \
    --config.file=/etc/prometheus/prometheus.yaml \
    --storage.tsdb.path=/prometheus \
    --web.listen-address=:9090 \
    --web.enable-lifecycle

# cat << EOF > prometheus-bar-conf.yaml
global:
  scrape_interval: 5s
  external_labels:
    cluster: bar
    replica: 0
scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['127.0.0.1:9090']
remote_write:
  - url: 'http://127.0.0.1:10908/api/v1/receive'
    headers:
      THANOS-TENANT: bar
EOF

# docker run -d --net=host --rm \
    -v $(pwd)/prometheus-bar-conf.yaml:/etc/prometheus/prometheus.yaml \
    -u root \
    --name prometheus-bar \
    quay.io/prometheus/prometheus:v2.27.0 \
    --config.file=/etc/prometheus/prometheus.yaml \
    --storage.tsdb.path=/prometheus \
    --web.listen-address=:9091 \
    --web.enable-lifecycle

Note that in this experiment we identify the source of each metric through the external labels cluster: foo and cluster: bar added to the Prometheus instances, and we distinguish tenants through the THANOS-TENANT entry in the headers field of remote_write, so that the Thanos receivers can store the data in shards.

2. Deploy the Thanos Receiver instances
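To make the role of the header concrete, here is a minimal sketch of how a receiver could derive the tenant from an incoming remote-write request. It is an illustration, not Thanos code: the function name `tenant_from_headers` is hypothetical, and the fallback value `default-tenant` for requests without the header should be treated as an assumption of this sketch.

```python
TENANT_HEADER = "THANOS-TENANT"        # header set in remote_write above
FALLBACK_TENANT = "default-tenant"     # assumed fallback for this sketch


def tenant_from_headers(headers, header_name=TENANT_HEADER,
                        fallback=FALLBACK_TENANT):
    """Return the tenant ID carried by a remote-write request.

    HTTP header names are case-insensitive, so the lookup ignores case.
    A missing or empty header yields the fallback tenant.
    """
    wanted = header_name.lower()
    for name, value in headers.items():
        if name.lower() == wanted and value:
            return value
    return fallback
```

In this experiment the two Prometheus instances would map to the tenants foo and bar, which are exactly the tenant names listed in the hashring configuration of the next step.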

# cat << EOF > hashring.json
[
  {
    "hashring": "foo",
    "endpoints": ["127.0.0.1:10907", "127.0.0.1:10910"],
    "tenants": ["foo"]
  },
  {
    "hashring": "bar",
    "endpoints": ["127.0.0.1:10913", "127.0.0.1:10916"],
    "tenants": ["bar"]
  }
]
EOF

# docker run -d --rm \
    --net=host \
    -v $(pwd)/hashring.json:/data/hashring.json \
    --name receive-01 \
    quay.io/thanos/thanos:v0.25.0 \
    receive \
    --tsdb.path "/receive/data" \
    --grpc-address 127.0.0.1:10907 \
    --receive.local-endpoint 127.0.0.1:10907 \
    --receive.hashrings-file=/data/hashring.json \
    --receive.replication-factor 2 \
    --label "receive_cluster=\"thanos\"" \
    --http-address 127.0.0.1:10909 \
    --remote-write.address 127.0.0.1:10908

# docker run -d --rm \
    --net=host \
    -v $(pwd)/hashring.json:/data/hashring.json \
    --name receive-02 \
    quay.io/thanos/thanos:v0.25.0 \
    receive \
    --tsdb.path "/receive/data" \
    --grpc-address 127.0.0.1:10910 \
    --receive.local-endpoint 127.0.0.1:10910 \
    --receive.hashrings-file=/data/hashring.json \
    --receive.replication-factor 2 \
    --label "receive_cluster=\"thanos\"" \
    --http-address 127.0.0.1:10912 \
    --remote-write.address 127.0.0.1:10911

# docker run -d --rm \
    --net=host \
    -v $(pwd)/hashring.json:/data/hashring.json \
    --name receive-03 \
    quay.io/thanos/thanos:v0.25.0 \
    receive \
    --tsdb.path "/receive/data" \
    --grpc-address 127.0.0.1:10913 \
    --receive.local-endpoint 127.0.0.1:10913 \
    --receive.hashrings-file=/data/hashring.json \
    --receive.replication-factor 2 \
    --label "receive_cluster=\"thanos\"" \
    --http-address 127.0.0.1:10915 \
    --remote-write.address 127.0.0.1:10914

# docker run -d --rm \
    --net=host \
    -v $(pwd)/hashring.json:/data/hashring.json \
    --name receive-04 \
    quay.io/thanos/thanos:v0.25.0 \
    receive \
    --tsdb.path "/receive/data" \
    --grpc-address 127.0.0.1:10916 \
    --receive.local-endpoint 127.0.0.1:10916 \
    --receive.hashrings-file=/data/hashring.json \
    --receive.replication-factor 2 \
    --label "receive_cluster=\"thanos\"" \
    --http-address 127.0.0.1:10918 \
    --remote-write.address 127.0.0.1:10917

Note: all Thanos receivers use the same hashring configuration, so they can find each other's peer addresses and forward requests according to the tenant ID. Each receiver must be started with the --receive.local-endpoint parameter, and its value must match that receiver's address in the hashring configuration, so that the peers can discover each other correctly. If you forget to set this parameter for a receiver, a "context deadline exceeded" error appears in its log:

caller=handler.go:351 component=receive component=receive-handler msg="failed to handle request" err="context deadline exceeded"
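The consistency requirement above can be checked before the containers are started. The following is a small sketch under stated assumptions: the helper name `check_local_endpoints` is hypothetical, and the endpoints in the hashring file are assumed to be plain "host:port" strings, as in the hashring.json of this article.

```python
import json


def check_local_endpoints(hashring_json, local_endpoints):
    """Compare the --receive.local-endpoint values with the hashring file.

    hashring_json   -- contents of hashring.json as a string
    local_endpoints -- the --receive.local-endpoint value of each receiver
    Returns the mismatches in both directions, each as a sorted list.
    """
    rings = json.loads(hashring_json)
    configured = {ep for ring in rings for ep in ring["endpoints"]}
    local = set(local_endpoints)
    return {
        # Receivers whose local endpoint appears in no hashring.
        "not_in_hashring": sorted(local - configured),
        # Hashring endpoints that no receiver claims as its own.
        "no_receiver": sorted(configured - local),
    }
```

Both lists are empty for the four receivers started above. A typo in one --receive.local-endpoint value would show up in both lists at once: the mistyped address under not_in_hashring and the intended one under no_receiver.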

3. Deploy the Thanos Query instance

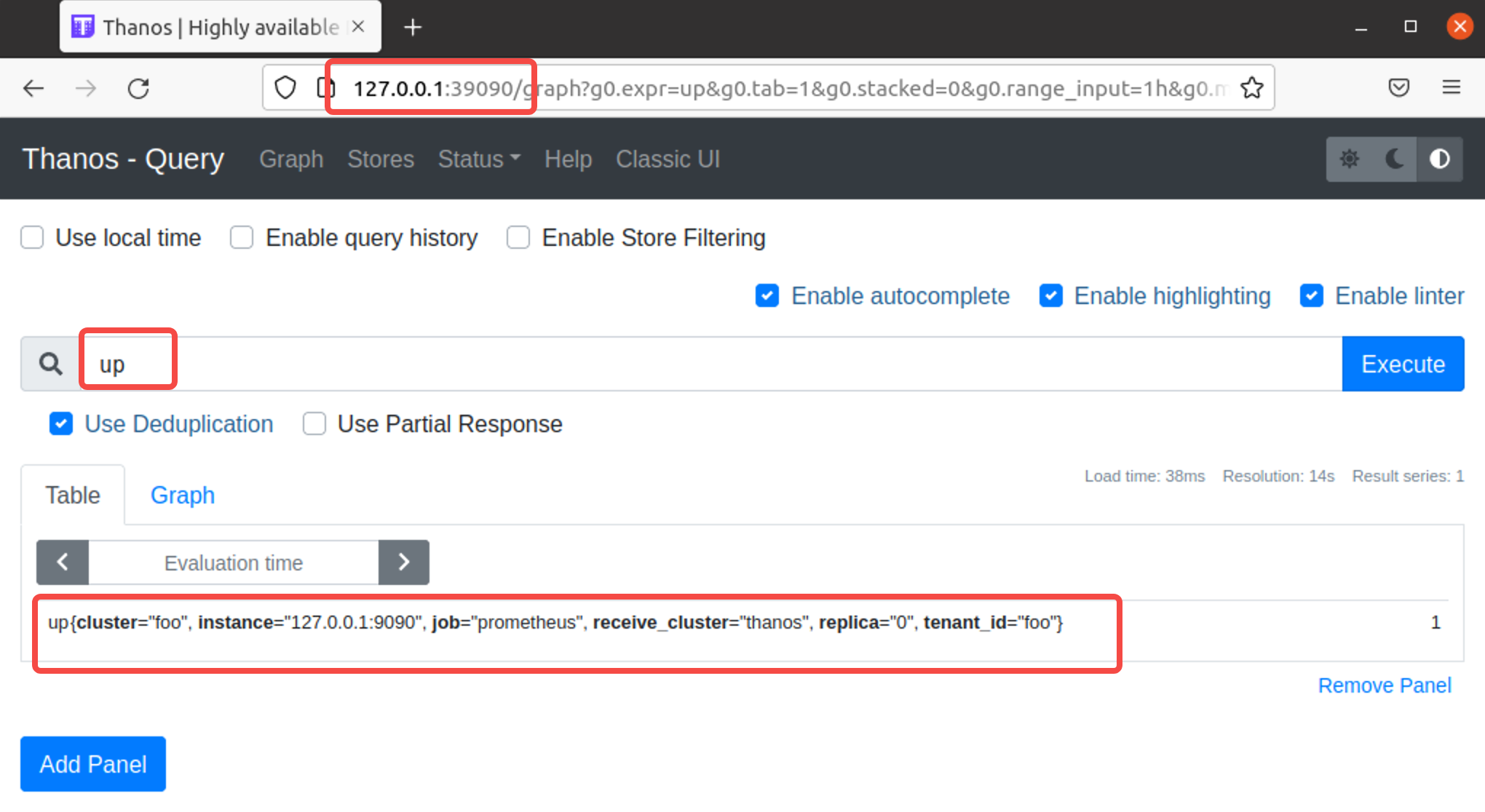

3.1 Connect only Thanos receiver 01

# docker run -d --rm \
    --net=host \
    --name query \
    quay.io/thanos/thanos:v0.25.0 \
    query \
    --http-address "0.0.0.0:39090" \
    --store "127.0.0.1:10907"

Only the foo metrics can be queried, which shows that the data has been stored in shards by tenant.

Note that --store can also point at receiver 02 with the same result, because --receive.replication-factor 2 replicates every write to both receivers of the hashring.

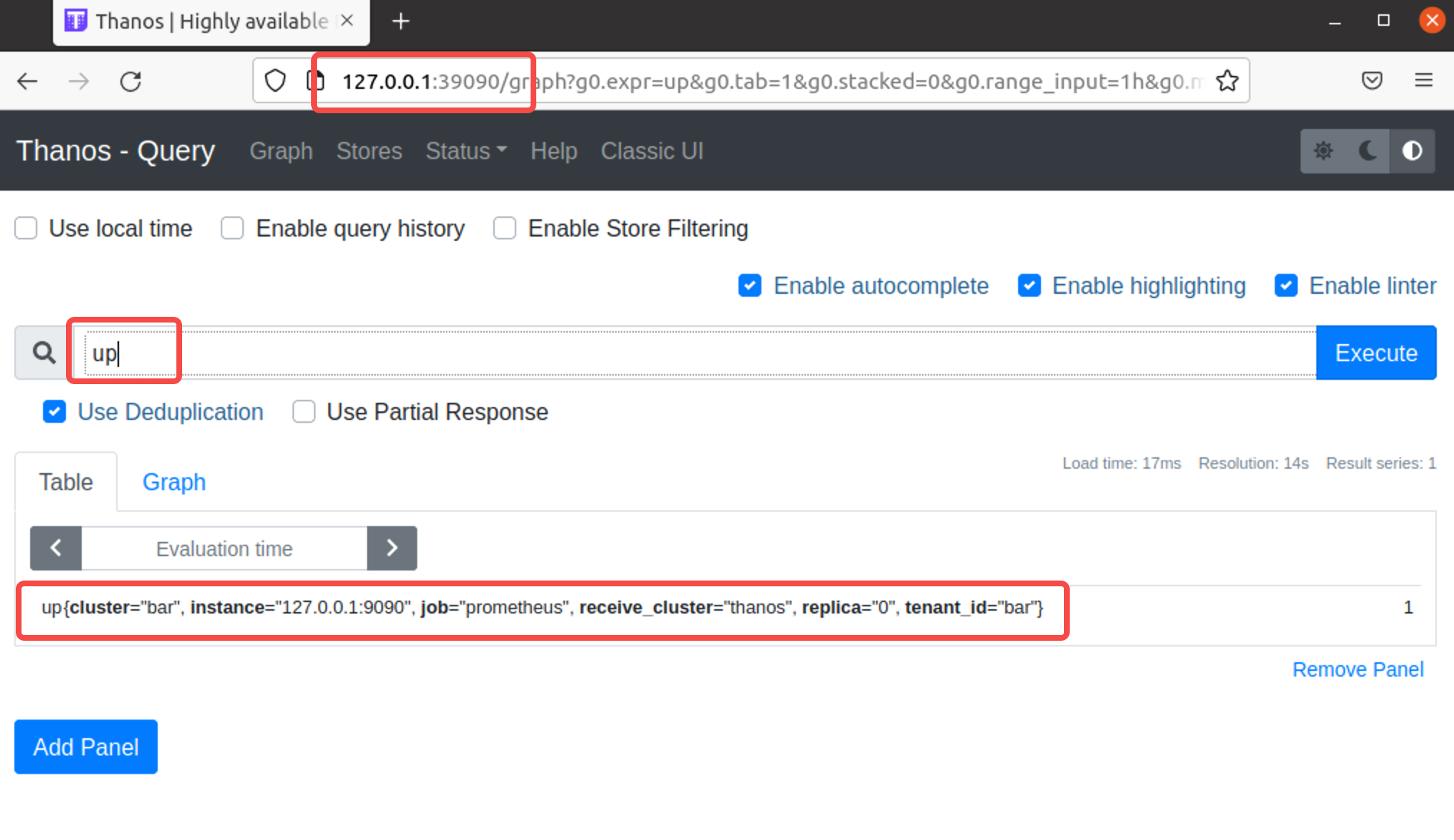

3.2 Connect only Thanos receiver 03

# docker rm -f query

# docker run -d --rm \
    --net=host \
    --name query \
    quay.io/thanos/thanos:v0.25.0 \
    query \
    --http-address "0.0.0.0:39090" \
    --store "127.0.0.1:10913"

Similarly, only the bar metrics can be queried.

Note that --store can also point at receiver 04 with the same result, because --receive.replication-factor 2 replicates every write to both receivers of the hashring.

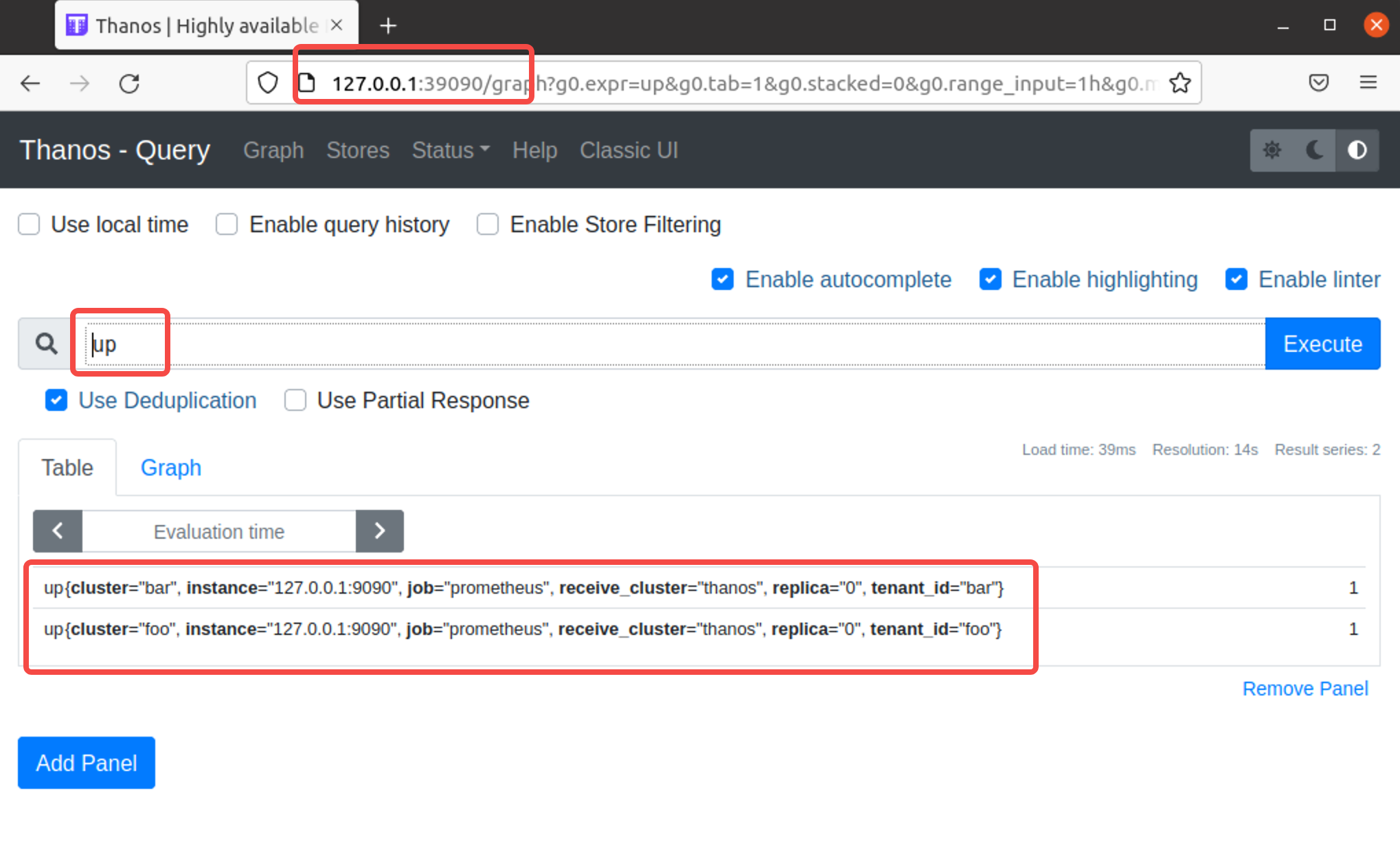

3.3 Connect all receivers at the same time

# docker rm -f query

# docker run -d --rm \
    --net=host \
    --name query \
    quay.io/thanos/thanos:v0.25.0 \
    query \
    --http-address "0.0.0.0:39090" \
    --store "127.0.0.1:10907" \
    --store "127.0.0.1:10910" \
    --store "127.0.0.1:10913" \
    --store "127.0.0.1:10916"

The query results are now the aggregated metrics, including the data collected by both Prometheus instances, foo and bar.
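The same check can be scripted against the Prometheus-compatible HTTP API that Thanos query exposes. The sketch below assumes the query container from this step is listening on 127.0.0.1:39090; the helper names and the PromQL expression `count by (cluster) (up)` are illustrative choices rather than part of the original experiment.

```python
import json
import urllib.parse
import urllib.request

QUERY_BASE = "http://127.0.0.1:39090"  # --http-address of the query container


def build_query_url(promql, base=QUERY_BASE):
    """Build an instant-query URL for the /api/v1/query endpoint."""
    return f"{base}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def clusters_in(response_body):
    """Return the sorted distinct `cluster` label values in a query response."""
    payload = json.loads(response_body)
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error', 'unknown error')}")
    return sorted({series["metric"].get("cluster", "")
                   for series in payload["data"]["result"]})


def fetch_clusters(promql="count by (cluster) (up)"):
    """Run the query against Thanos query and list the clusters it can see."""
    with urllib.request.urlopen(build_query_url(promql), timeout=10) as resp:
        return clusters_in(resp.read())
```

If the setup behaves as described in this article, `fetch_clusters()` should list only foo in step 3.1, only bar in step 3.2, and both once all four receivers are connected.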

Summary

When the volume of collected data grows too large, we can scale Prometheus horizontally: each instance collects a subset of the metrics (for example, each Prometheus handles the data of one small cluster), and Thanos aggregates the data from the different instances. Thanos receiver can itself be scaled horizontally, and it routes Prometheus write requests to different receivers by tenant through the hashring, which spreads the processing and storage load and raises the capacity of the monitoring system. In addition, Thanos query supports aggregated queries and exposes a query interface consistent with Prometheus, which makes it straightforward to scale Prometheus out smoothly.