Background

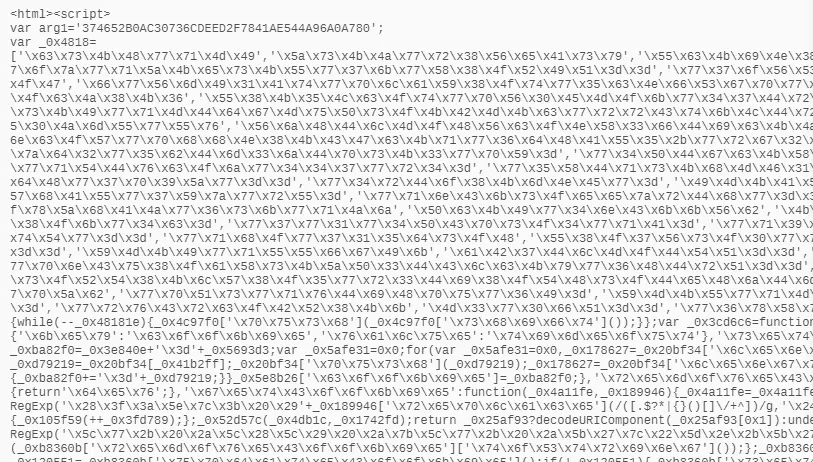

Recently I have been working on forum crawlers. While crawling, I ran into a forum with a stronger anti-crawler mechanism, for example http://bbs.nubia.cn/forum-64-1.html. The first response to a visit is not the HTML page but obfuscated JavaScript. That script writes a cookie, waits for a set time, and then redirects to the real page.

- Options I considered:

- Analyze the obfuscated JS to see how the cookie is computed and whether it follows a pattern, then send that cookie with every request.

- Execute the obfuscated JS with the PhantomJS fetcher that ships with pyspider and retrieve the resulting HTML.

- Use Selenium + WebDriver + headless Chrome to get the HTML.

- Use puppeteer + headless Chrome to get the HTML.

- Evaluating the options:

Analyzing the obfuscated JS is not easy. Cracking the scheme is relatively hard and my time was limited, so I set this option aside for now.

My original plan was to use the PhantomJS mode that comes with pyspider. In practice PhantomJS always hung when accessing the URL above. In addition, the author of PhantomJS has stopped maintaining it, so bug fixes and support for newer JS syntax are lacking. (Why it hangs still needs further investigation.)

Finally, I thought of the combination of Selenium + WebDriver + headless Chrome. If pyspider could crawl dynamic pages through Selenium + Chrome, that would be very convenient. Chrome is a real browser, so its support for JS features is more complete and it can genuinely simulate user requests. The Chrome team has also released an official API for headless Chrome (a Node.js version), and its reliability and coverage of Chrome features are excellent.

puppeteer is that Node.js API. I am not familiar with Node.js, so I left it out for the time being.

Chrome

Chrome has supported headless mode since version 59. It lets you perform various operations without a visible window, such as taking screenshots or printing HTML to PDF. Chrome is available for macOS, Linux and Windows; download and install the build for your platform. For more on headless Chrome, see: headless chrome usage
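The one-shot operations mentioned above (dumping the rendered HTML, taking a screenshot, printing to PDF) can be driven from the command line. The following is a minimal sketch, not a definitive recipe: the function names are made up for illustration, and the binary name `google-chrome` is an assumption that differs per platform and install.

```python
import subprocess


def headless_chrome_command(url, binary="google-chrome", action="dump-dom", output=None):
    """Build the argv list for a one-shot headless Chrome run.

    `binary` is an assumption: adjust it to the Chrome executable on your system.
    """
    cmd = [binary, "--headless", "--disable-gpu"]
    if action == "dump-dom":
        cmd.append("--dump-dom")  # print the rendered DOM to stdout
    elif action == "screenshot":
        cmd.append("--screenshot=" + (output or "screenshot.png"))
    elif action == "pdf":
        cmd.append("--print-to-pdf=" + (output or "output.pdf"))
    else:
        raise ValueError("unknown action: %r" % (action,))
    cmd.append(url)
    return cmd


def dump_dom(url, binary="google-chrome", timeout=30):
    """Run headless Chrome once and return the rendered HTML as text."""
    cmd = headless_chrome_command(url, binary=binary, action="dump-dom")
    completed = subprocess.run(cmd, stdout=subprocess.PIPE, timeout=timeout, check=True)
    return completed.stdout.decode("utf-8", errors="replace")
```

Calling `dump_dom("http://example.com")` needs a local Chrome install. A page that redirects after a delay, like the forum above, may still return the intermediate JS page this way, which is one reason to drive the browser through Selenium instead.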

Selenium

The official documentation describes Selenium as a tool that automates browsers. It is not a browser itself; it can only drive one. Selenium drives a browser through WebDriver, and each browser has its own WebDriver. To use Chrome, we need to download Chrome's WebDriver.

Selenium has bindings for many languages, such as Java and Python. I chose the Python version here, which can be installed through pip: pip install selenium

When downloading WebDriver, choose the build for your platform. For example, I run on Windows, so I downloaded the Windows build.

Configure PATH

Put the downloaded WebDriver in a directory listed in the PATH environment variable. That way the program does not need to specify the WebDriver location explicitly.
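A quick way to confirm the PATH setup is to look the driver up the same way the operating system would. This is a small sketch using only the standard library; the helper names are hypothetical, and the executable name `chromedriver` is an assumption about how the downloaded file is named.

```python
import shutil


def find_webdriver(name="chromedriver", search_path=None):
    """Return the full path of the driver executable, or None if it is not on PATH.

    `search_path` defaults to the PATH environment variable when left as None.
    """
    return shutil.which(name, path=search_path)


def describe_webdriver(name="chromedriver"):
    """Return a one-line, human-readable status for the driver lookup."""
    location = find_webdriver(name)
    if location is None:
        return "%s not found on PATH; add its directory to PATH first" % name
    return "%s found at %s" % (name, location)
```

Running `print(describe_webdriver())` before starting the crawler shows whether Selenium will be able to locate the driver without an explicit path.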

Python version of Headless Chrome Web Server

- How pyspider uses PhantomJS to crawl JS-driven pages.

pyspider looks up the phantomjs command in PATH and uses it to run phantomjs_fetcher.js, which starts a web server listening on a fixed port. When self.crawl is called in a Handler with the fetch_type='js' parameter, pyspider sends the request to that port, and PhantomJS loads the JS-driven page on its behalf.
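The exchange described above is plain JSON over HTTP. As a rough sketch of its shape, the helpers below build a request body and check a response, limited to the fields that the server in this article reads and returns. The function names are hypothetical, and the real pyspider fetcher sends more fields than shown here.

```python
import json

# Keys that the server in this article puts into every response.
RESULT_KEYS = {
    "orig_url", "status_code", "error", "content", "headers",
    "url", "cookies", "time", "js_script_result", "save",
}


def make_fetch_body(url, headers=None, proxy=None, timeout=None, load_images=None):
    """Serialize a fetch task to the JSON bytes that get POSTed to the fetcher."""
    fetch = {"url": url, "headers": dict(headers or {})}
    # Optional fields are only included when the caller sets them.
    if proxy is not None:
        fetch["proxy"] = proxy
    if timeout is not None:
        fetch["timeout"] = timeout
    if load_images is not None:
        fetch["load_images"] = load_images
    return json.dumps(fetch).encode("utf-8")


def parse_result(raw):
    """Decode a fetcher response and verify that the expected keys are present."""
    if isinstance(raw, bytes):
        raw = raw.decode("utf-8")
    result = json.loads(raw)
    missing = RESULT_KEYS - set(result)
    if missing:
        raise ValueError("fetcher response is missing keys: %s" % sorted(missing))
    return result
```

Any server that accepts such a body and answers with such a result can stand in for PhantomJS, which is the idea the rest of this article builds on.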

To crawl dynamic pages with Selenium + Chrome instead, I also need a web server that pyspider can send requests to and that forwards them to the browser. The Python version is implemented as follows:

    import datetime
    import json
    import time
    from urllib.parse import urlparse

    from flask import Flask, request
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    app = Flask(__name__)


    @app.route('/', methods=['POST', 'GET'])
    def handle_post():
        if request.method == 'GET':
            # Only POST requests are served; Flask sets Content-Length itself.
            return "method not allowed!", 403, {'Cache': 'no-cache'}

        start_time = datetime.datetime.now()
        # The fetch task arrives as a JSON body.
        fetch = json.loads(request.get_data())
        print('fetch=', fetch)

        result = {
            'orig_url': fetch['url'],
            'status_code': 200,
            'error': '',
            'content': '',
            'headers': {},
            'url': '',
            'cookies': {},
            'time': 0,
            'js_script_result': '',
            'save': '' if fetch.get('save') is None else fetch.get('save'),
        }

        try:
            driver = InitWebDriver.get_web_driver(fetch)
            InitWebDriver.init_extra(fetch)
            driver.get(fetch['url'])
            if InitWebDriver.isFirst:
                # On the first visit, wait for the anti-crawler JS to set its
                # cookie and jump to the real page.
                time.sleep(2)
                InitWebDriver.isFirst = False
            result['url'] = driver.current_url
            result['content'] = driver.page_source
            result['cookies'] = _parse_cookie(driver.get_cookies())
        except Exception as e:
            result['error'] = str(e)
            result['status_code'] = 599

        end_time = datetime.datetime.now()
        result['time'] = (end_time - start_time).total_seconds()
        # print('result=', result)
        return json.dumps(result), 200, {
            'Cache': 'no-cache',
            'Content-Type': 'application/json',
        }


    def _parse_cookie(cookie_list):
        """Flatten Selenium's list of cookie dicts into {name: value}."""
        if cookie_list:
            cookie_dict = dict()
            for item in cookie_list:
                cookie_dict[item['name']] = item['value']
            return cookie_dict
        return {}


    class InitWebDriver(object):
        # A single browser instance is shared by all requests.
        _web_driver = None
        isFirst = True

        @staticmethod
        def _init_web_driver(fetch):
            if InitWebDriver._web_driver is None:
                options = Options()
                if fetch.get('proxy'):
                    if '://' not in fetch['proxy']:
                        fetch['proxy'] = 'http://' + fetch['proxy']
                    proxy = urlparse(fetch['proxy']).netloc
                    options.add_argument('--proxy-server=%s' % proxy)

                set_header = fetch.get('headers') is not None
                if set_header:
                    fetch['headers']['Accept-Encoding'] = None
                    fetch['headers']['Connection'] = None
                    fetch['headers']['Content-Length'] = None
                # .get() avoids a KeyError when no User-Agent header was sent.
                if set_header and fetch['headers'].get('User-Agent'):
                    options.add_argument('user-agent=%s' % fetch['headers']['User-Agent'])

                # The value 2 blocks images, so apply it only when the task
                # explicitly turns image loading off.
                if not fetch.get('load_images', True):
                    options.add_experimental_option(
                        "prefs", {"profile.managed_default_content_settings.images": 2})

                # set viewport
                fetch_width = fetch.get('js_viewport_width')
                fetch_height = fetch.get('js_viewport_height')
                width = 1024 if fetch_width is None else fetch_width
                height = 768 * 3 if fetch_height is None else fetch_height
                options.add_argument('--window-size={width},{height}'.format(width=width, height=height))

                # Uncomment to run Chrome without a visible window.
                # options.add_argument('--headless')
                # Newer Selenium releases take `options=` instead of `chrome_options=`.
                InitWebDriver._web_driver = webdriver.Chrome(options=options)

        @staticmethod
        def get_web_driver(fetch):
            if InitWebDriver._web_driver is None:
                InitWebDriver._init_web_driver(fetch)
            return InitWebDriver._web_driver

        @staticmethod
        def init_extra(fetch):
            driver = InitWebDriver._web_driver
            if fetch.get('timeout'):
                driver.set_page_load_timeout(fetch.get('timeout'))
                driver.set_script_timeout(fetch.get('timeout'))
            else:
                driver.set_page_load_timeout(20)
                driver.set_script_timeout(20)

            # reset cookie (parsed only; applying it to the browser is not implemented)
            cookie_str = (fetch.get('headers') or {}).get('Cookie')
            if cookie_str:
                # driver.delete_all_cookies()
                cookie_dict = dict()
                for item in cookie_str.split('; '):
                    # partition() keeps '=' characters inside the value intact.
                    key, _, value = item.partition('=')
                    cookie_dict[key] = value
                # driver.add_cookie(cookie_dict)

        @staticmethod
        def quit_web_driver():
            if InitWebDriver._web_driver is not None:
                InitWebDriver._web_driver.quit()


    if __name__ == '__main__':
        # app.run() blocks; the browser is closed once the server stops.
        app.run('localhost', 8099)
        InitWebDriver.quit_web_driver()

This implementation is based on the pyspider source code: tornado_fetcher.py and phantomjs_fetcher.js. It is not complete, though; it only covers the features I need. For example, setting cookies and executing JS are not implemented. You can use the code above as a starting point and extend it for your own needs.
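As one possible starting point for the missing cookie support, the sketch below turns a Cookie header string into the per-cookie dicts that Selenium's add_cookie expects. The helper names are hypothetical and this is not part of the original implementation. It also assumes the driver has already loaded a page on the target domain, since the browser rejects cookies for a domain it is not currently on.

```python
def cookie_header_to_selenium(cookie_header):
    """Split a Cookie header into a list of {'name': ..., 'value': ...} dicts."""
    cookies = []
    for pair in cookie_header.split(";"):
        pair = pair.strip()
        if not pair or "=" not in pair:
            continue  # skip empty or malformed fragments
        # partition() splits on the first '=' only, so values may contain '='.
        name, _, value = pair.partition("=")
        cookies.append({"name": name.strip(), "value": value.strip()})
    return cookies


def apply_cookies(driver, cookie_header):
    """Add every cookie from the header to the driver, one call per cookie.

    Assumption: `driver` is a Selenium WebDriver that is already on a page of
    the domain the cookies belong to.
    """
    cookies = cookie_header_to_selenium(cookie_header)
    for cookie in cookies:
        driver.add_cookie(cookie)
    return len(cookies)
```

Note that add_cookie takes one cookie per call, so passing a single dict that maps every name to its value would not work.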

Let pyspider fetcher access Headless Chrome Web Server

- Start the Chrome web server by running it directly: python selenium_fetcher.py. It listens on port 8099.

- When starting pyspider, pass the --phantomjs-proxy=http://localhost:8099 parameter, for example: pyspider --phantomjs-proxy=http://localhost:8099
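Before pointing pyspider at the server, it can help to send it one request by hand. The following is a small smoke-test sketch using only the standard library. The function names are hypothetical, the default address matches the port used above, and the two headers are included because the server code reads them.

```python
import json
import urllib.request


def build_fetch_post(url, server="http://localhost:8099/"):
    """Build the POST request that asks the fetcher server to load `url`."""
    fetch = {
        "url": url,
        # The server looks at these two headers, so send both explicitly.
        "headers": {"User-Agent": "Mozilla/5.0", "Cookie": ""},
    }
    body = json.dumps(fetch).encode("utf-8")
    return urllib.request.Request(
        server,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def fetch_via_server(url, server="http://localhost:8099/", timeout=60):
    """Send the request and return the decoded JSON result from the server."""
    req = build_fetch_post(url, server)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, `fetch_via_server("http://bbs.nubia.cn/forum-64-1.html")["status_code"]` should come back as 200 when the page loaded and 599 when the browser raised an error.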