pytest framework learning notes

Abstract: These notes record key points about the pytest framework, including how to install pytest and how to run test cases with it.

pytest simple operation

Prerequisites for learning:

- Familiarity with Python syntax

- Basic knowledge of testing

- Python 3 and PyCharm installed

Install pytest

After creating a project in PyCharm, create a test file whose name starts with **test_**. First, run the following statement in the terminal to install pytest:

pip3 install pytest

View pytest version

Method 1: execute pip3 show pytest

(venv) zydeMacBook-Air:learnpytest zy$ pip3 show pytest
Name: pytest
Version: 6.2.5
Summary: pytest: simple powerful testing with Python
Home-page: https://docs.pytest.org/en/latest/
Author: Holger Krekel, Bruno Oliveira, Ronny Pfannschmidt, Floris Bruynooghe, Brianna Laugher, Florian Bruhin and others
Author-email:
License: MIT
Location: /Users/zy/PycharmProjects/learnpytest/venv/lib/python3.7/site-packages
Requires: attrs, importlib-metadata, iniconfig, packaging, pluggy, py, toml
Required-by:
(venv) zydeMacBook-Air:learnpytest zy$

Method 2: execute pytest --version

(venv) zydeMacBook-Air:learnpytest zy$ pytest --version
pytest 6.2.5

View pytest command line parameters

You can use pytest -h or pytest --help to view the pytest command line parameters.

(venv) zydeMacBook-Air:learnpytest zy$ pytest -h

usage: pytest [options] [file_or_dir] [file_or_dir] [...]

positional arguments:

file_or_dir

general:

-k EXPRESSION only run tests which match the given substring expression. An expression is a python evaluatable expression where

all names are substring-matched against test names and their parent classes. Example: -k 'test_method or test_other'

matches all test functions and classes whose name contains 'test_method' or 'test_other', while -k 'not test_method'

matches those that don't contain 'test_method' in their names. -k 'not test_method and not test_other' will

eliminate the matches. Additionally keywords are matched to classes and functions containing extra names in their

'extra_keyword_matches' set, as well as functions which have names assigned directly to them. The matching is case-

insensitive.

-m MARKEXPR only run tests matching given mark expression.

For example: -m 'mark1 and not mark2'.

--markers show markers (builtin, plugin and per-project ones).

-x, --exitfirst exit instantly on first error or failed test.

--fixtures, --funcargs

show available fixtures, sorted by plugin appearance (fixtures with leading '_' are only shown with '-v')

--fixtures-per-test show fixtures per test

--pdb start the interactive Python debugger on errors or KeyboardInterrupt.

--pdbcls=modulename:classname

start a custom interactive Python debugger on errors. For example: --pdbcls=IPython.terminal.debugger:TerminalPdb

--trace Immediately break when running each test.

--capture=method per-test capturing method: one of fd|sys|no|tee-sys.

-s shortcut for --capture=no.

--runxfail report the results of xfail tests as if they were not marked

--lf, --last-failed rerun only the tests that failed at the last run (or all if none failed)

--ff, --failed-first run all tests, but run the last failures first.

This may re-order tests and thus lead to repeated fixture setup/teardown.

--nf, --new-first run tests from new files first, then the rest of the tests sorted by file mtime

--cache-show=[CACHESHOW]

show cache contents, don't perform collection or tests. Optional argument: glob (default: '*').

--cache-clear remove all cache contents at start of test run.

--lfnf={all,none}, --last-failed-no-failures={all,none}

which tests to run with no previously (known) failures.

--sw, --stepwise exit on test failure and continue from last failing test next time

--sw-skip, --stepwise-skip

ignore the first failing test but stop on the next failing test

reporting:

--durations=N show N slowest setup/test durations (N=0 for all).

--durations-min=N Minimal duration in seconds for inclusion in slowest list. Default 0.005

-v, --verbose increase verbosity.

--no-header disable header

--no-summary disable summary

-q, --quiet decrease verbosity.

--verbosity=VERBOSE set verbosity. Default is 0.

-r chars show extra test summary info as specified by chars: (f)ailed, (E)rror, (s)kipped, (x)failed, (X)passed, (p)assed,

(P)assed with output, (a)ll except passed (p/P), or (A)ll. (w)arnings are enabled by default (see --disable-

warnings), 'N' can be used to reset the list. (default: 'fE').

--disable-warnings, --disable-pytest-warnings

disable warnings summary

-l, --showlocals show locals in tracebacks (disabled by default).

--tb=style traceback print mode (auto/long/short/line/native/no).

--show-capture={no,stdout,stderr,log,all}

Controls how captured stdout/stderr/log is shown on failed tests. Default is 'all'.

--full-trace don't cut any tracebacks (default is to cut).

--color=color color terminal output (yes/no/auto).

--code-highlight={yes,no}

Whether code should be highlighted (only if --color is also enabled)

--pastebin=mode send failed|all info to bpaste.net pastebin service.

--junit-xml=path create junit-xml style report file at given path.

--junit-prefix=str prepend prefix to classnames in junit-xml output

pytest-warnings:

-W PYTHONWARNINGS, --pythonwarnings=PYTHONWARNINGS

set which warnings to report, see -W option of python itself.

--maxfail=num exit after first num failures or errors.

--strict-config any warnings encountered while parsing the `pytest` section of the configuration file raise errors.

--strict-markers markers not registered in the `markers` section of the configuration file raise errors.

--strict (deprecated) alias to --strict-markers.

-c file load configuration from `file` instead of trying to locate one of the implicit configuration files.

--continue-on-collection-errors

Force test execution even if collection errors occur.

--rootdir=ROOTDIR Define root directory for tests. Can be relative path: 'root_dir', './root_dir', 'root_dir/another_dir/'; absolute

path: '/home/user/root_dir'; path with variables: '$HOME/root_dir'.

collection:

--collect-only, --co only collect tests, don't execute them.

--pyargs try to interpret all arguments as python packages.

--ignore=path ignore path during collection (multi-allowed).

--ignore-glob=path ignore path pattern during collection (multi-allowed).

--deselect=nodeid_prefix

deselect item (via node id prefix) during collection (multi-allowed).

--confcutdir=dir only load conftest.py's relative to specified dir.

--noconftest Don't load any conftest.py files.

--keep-duplicates Keep duplicate tests.

--collect-in-virtualenv

Don't ignore tests in a local virtualenv directory

--import-mode={prepend,append,importlib}

prepend/append to sys.path when importing test modules and conftest files, default is to prepend.

--doctest-modules run doctests in all .py modules

--doctest-report={none,cdiff,ndiff,udiff,only_first_failure}

choose another output format for diffs on doctest failure

--doctest-glob=pat doctests file matching pattern, default: test*.txt

--doctest-ignore-import-errors

ignore doctest ImportErrors

--doctest-continue-on-failure

for a given doctest, continue to run after the first failure

test session debugging and configuration:

--basetemp=dir base temporary directory for this test run.(warning: this directory is removed if it exists)

-V, --version display pytest version and information about plugins.When given twice, also display information about plugins.

-h, --help show help message and configuration info

-p name early-load given plugin module name or entry point (multi-allowed).

To avoid loading of plugins, use the `no:` prefix, e.g. `no:doctest`.

--trace-config trace considerations of conftest.py files.

--debug store internal tracing debug information in 'pytestdebug.log'.

-o OVERRIDE_INI, --override-ini=OVERRIDE_INI

override ini option with "option=value" style, e.g. `-o xfail_strict=True -o cache_dir=cache`.

--assert=MODE Control assertion debugging tools.

'plain' performs no assertion debugging.

'rewrite' (the default) rewrites assert statements in test modules on import to provide assert expression

information.

--setup-only only setup fixtures, do not execute tests.

--setup-show show setup of fixtures while executing tests.

--setup-plan show what fixtures and tests would be executed but don't execute anything.

logging:

--log-level=LEVEL level of messages to catch/display.

Not set by default, so it depends on the root/parent log handler's effective level, where it is "WARNING" by

default.

--log-format=LOG_FORMAT

log format as used by the logging module.

--log-date-format=LOG_DATE_FORMAT

log date format as used by the logging module.

--log-cli-level=LOG_CLI_LEVEL

cli logging level.

--log-cli-format=LOG_CLI_FORMAT

log format as used by the logging module.

--log-cli-date-format=LOG_CLI_DATE_FORMAT

log date format as used by the logging module.

--log-file=LOG_FILE path to a file when logging will be written to.

--log-file-level=LOG_FILE_LEVEL

log file logging level.

--log-file-format=LOG_FILE_FORMAT

log format as used by the logging module.

--log-file-date-format=LOG_FILE_DATE_FORMAT

log date format as used by the logging module.

--log-auto-indent=LOG_AUTO_INDENT

Auto-indent multiline messages passed to the logging module. Accepts true|on, false|off or an integer.

[pytest] ini-options in the first pytest.ini|tox.ini|setup.cfg file found:

markers (linelist): markers for test functions

empty_parameter_set_mark (string):

default marker for empty parametersets

norecursedirs (args): directory patterns to avoid for recursion

testpaths (args): directories to search for tests when no files or directories are given in the command line.

filterwarnings (linelist):

Each line specifies a pattern for warnings.filterwarnings. Processed after -W/--pythonwarnings.

usefixtures (args): list of default fixtures to be used with this project

python_files (args): glob-style file patterns for Python test module discovery

python_classes (args):

prefixes or glob names for Python test class discovery

python_functions (args):

prefixes or glob names for Python test function and method discovery

disable_test_id_escaping_and_forfeit_all_rights_to_community_support (bool):

disable string escape non-ascii characters, might cause unwanted side effects(use at your own risk)

console_output_style (string):

console output: "classic", or with additional progress information ("progress" (percentage) | "count").

xfail_strict (bool): default for the strict parameter of xfail markers when not given explicitly (default: False)

enable_assertion_pass_hook (bool):

Enables the pytest_assertion_pass hook.Make sure to delete any previously generated pyc cache files.

junit_suite_name (string):

Test suite name for JUnit report

junit_logging (string):

Write captured log messages to JUnit report: one of no|log|system-out|system-err|out-err|all

junit_log_passing_tests (bool):

Capture log information for passing tests to JUnit report:

junit_duration_report (string):

Duration time to report: one of total|call

junit_family (string):

Emit XML for schema: one of legacy|xunit1|xunit2

doctest_optionflags (args):

option flags for doctests

doctest_encoding (string):

encoding used for doctest files

cache_dir (string): cache directory path.

log_level (string): default value for --log-level

log_format (string): default value for --log-format

log_date_format (string):

default value for --log-date-format

log_cli (bool): enable log display during test run (also known as "live logging").

log_cli_level (string):

default value for --log-cli-level

log_cli_format (string):

default value for --log-cli-format

log_cli_date_format (string):

default value for --log-cli-date-format

log_file (string): default value for --log-file

log_file_level (string):

default value for --log-file-level

log_file_format (string):

default value for --log-file-format

log_file_date_format (string):

default value for --log-file-date-format

log_auto_indent (string):

default value for --log-auto-indent

faulthandler_timeout (string):

Dump the traceback of all threads if a test takes more than TIMEOUT seconds to finish.

addopts (args): extra command line options

minversion (string): minimally required pytest version

required_plugins (args):

plugins that must be present for pytest to run

environment variables:

PYTEST_ADDOPTS extra command line options

PYTEST_PLUGINS comma-separated plugins to load during startup

PYTEST_DISABLE_PLUGIN_AUTOLOAD set to disable plugin auto-loading

PYTEST_DEBUG set to enable debug tracing of pytest's internals

to see available markers type: pytest --markers

to see available fixtures type: pytest --fixtures

(shown according to specified file_or_dir or current dir if not specified; fixtures with leading '_' are only shown with the '-v' option

(venv) zydeMacBook-Air:learnpytest zy$

pytest use case rules

- File names follow the pattern test_*.py or *_test.py

- Function names start with test (by convention test_)

- Class names start with Test, their test methods start with test, and the class must not have an __init__ method

- All packages must have an __init__.py file

- Use assert for assertions
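As a minimal sketch of a file that follows these rules: the file name test_rules_demo.py, the add function and the class and method names are made up for illustration and do not come from the project in these notes.

```python
# test_rules_demo.py  (hypothetical file name that matches the test_*.py pattern)


def add(a, b):
    """Tiny function under test, defined here only for illustration."""
    return a + b


def test_add():
    # Module-level function whose name starts with test_: collected by pytest.
    assert add(1, 2) == 3


class TestAdd:
    # Class name starts with Test and the class defines no __init__ method.

    def test_add_negative(self):
        # Method name starts with test_: collected by pytest.
        assert add(-1, -2) == -3

    def helper(self):
        # Name does not start with test, so pytest does not treat it as a test.
        return add(0, 0)
```

Running pytest in the folder that contains this file should collect test_add and TestAdd.test_add_negative, but not helper.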

The terminal executes the pytest case

From the command line, pytest test cases can be executed in three ways:

- pytest

- py.test

- python -m pytest

If you execute any of the above commands in a folder without parameters, pytest executes all test cases in that folder that meet the rules. For the conditions, see the pytest use case rules above.

Example:

The test case file test_aaa.py already exists in the project folder learnpytest. We execute it from the command line.

- pytest

(venv) zydeMacBook-Air:learnpytest zy$ pytest
=========================================================== test session starts ============================================================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0
rootdir: /Users/zy/PycharmProjects/learnpytest
collected 2 items

test_aaa.py ..                                                                                                                       [100%]

============================================================ 2 passed in 0.03s =============================================================

- py.test

(venv) zydeMacBook-Air:learnpytest zy$ py.test
=========================================================== test session starts ============================================================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0
rootdir: /Users/zy/PycharmProjects/learnpytest
collected 2 items

test_aaa.py ..                                                                                                                       [100%]

============================================================ 2 passed in 0.01s =============================================================

- python -m pytest

(venv) zydeMacBook-Air:learnpytest zy$ python -m pytest
=========================================================== test session starts ============================================================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0
rootdir: /Users/zy/PycharmProjects/learnpytest
collected 2 items

test_aaa.py ..                                                                                                                       [100%]

============================================================ 2 passed in 0.02s =============================================================

Execute use case rules

The learnpytest project directory already contains two test case files, test_aaa.py and test_bbb.py. The test cases are structured like this:

- learnpytest
    - test_aaa.py
        - class TestCase
            - def test001
            - def test002
        - class TestCall
            - def test001
    - test_bbb.py
        - class TestLogin
            - def test001
        - def test002
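The notes never show the contents of test_bbb.py, so the following is only a reconstruction under stated assumptions: test002 is taken to be a module-level function because it is later run as test_bbb.py::test002, and the print strings are copied from the terminal output shown in these notes.

```python
# test_bbb.py  (sketch reconstructed from the structure and output in these notes)


class TestLogin:
    def test001(self):
        # Printed text matches what appears when running with -s.
        print("implement test001>>>>>>>>>")
        assert True


def test002():
    # Module-level test function, addressed by the node ID test_bbb.py::test002.
    print("implement test002>>>>>>>>>")
    assert True
```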

Execute all use cases under a directory

To execute all test cases in this directory, run pytest (or one of the equivalent commands described above) from inside the directory. What if you want to run them from a different directory?

Use pytest followed by the target directory path.

zydeMacBook-Air:~ zy$ pytest /Users/zy/PycharmProjects/learnpytest
============================= test session starts ==============================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0
rootdir: /Users/zy
collected 5 items

PycharmProjects/learnpytest/test_aaa.py ...                              [ 60%]
PycharmProjects/learnpytest/test_bbb.py ..                               [100%]

============================== 5 passed in 0.03s ===============================

Execute all use cases in a py file

To execute all test cases in test_aaa.py, use pytest followed by the target file path.

zydeMacBook-Air:~ zy$ pytest /Users/zy/PycharmProjects/learnpytest/test_aaa.py
============================= test session starts ==============================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0
rootdir: /Users/zy
collected 3 items

PycharmProjects/learnpytest/test_aaa.py ...                              [100%]

============================== 3 passed in 0.02s ===============================

Match use cases by keyword and execute

The -k option runs the tests whose names match the given string expression. The expression can use Python operators (and, or, not), with file names, class names and function names as the variables.

Run the test cases in test_bbb.py whose names do not contain 002. (We add the -s parameter so that print output is shown, which makes it clear which case is executed.)

(venv) zydeMacBook-Air:learnpytest zy$ pytest -s -k "bbb and not 002"
=========================================================== test session starts ============================================================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0
rootdir: /Users/zy/PycharmProjects/learnpytest
collected 5 items / 4 deselected / 1 selected

test_bbb.py implement test001>>>>>>>>>
.

===================================================== 1 passed, 4 deselected in 0.02s ======================================================
(venv) zydeMacBook-Air:learnpytest zy$

Run the test cases in the TestCase class of test_aaa.py whose names do not contain 001.

(venv) zydeMacBook-Air:learnpytest zy$ pytest -s -k "aaa and Case and not 001"
=========================================================== test session starts ============================================================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0
rootdir: /Users/zy/PycharmProjects/learnpytest
collected 5 items / 4 deselected / 1 selected

test_aaa.py implement test002>>>>>>>>>
.

===================================================== 1 passed, 4 deselected in 0.02s ======================================================

Run by node

Each collected test is assigned a unique node ID consisting of the module file name followed by specifiers such as class names, function names, and parameters from parametrization, separated by the :: symbol. The following examples add the -s parameter for clarity.

Run the test001 test method of the TestCase class in the test_aaa.py module: pytest test_aaa.py::TestCase::test001

(venv) zydeMacBook-Air:learnpytest zy$ pytest -s test_aaa.py::TestCase::test001
=========================================================== test session starts ============================================================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0
rootdir: /Users/zy/PycharmProjects/learnpytest
collected 1 item

test_aaa.py implement test001>>>>>>>>>
.

============================================================ 1 passed in 0.01s =============================================================
(venv) zydeMacBook-Air:learnpytest zy$

Run the test002 test function in the test_bbb.py module: pytest test_bbb.py::test002

(venv) zydeMacBook-Air:learnpytest zy$ pytest -s test_bbb.py::test002
=========================================================== test session starts ============================================================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0
rootdir: /Users/zy/PycharmProjects/learnpytest
collected 1 item

test_bbb.py implement test002>>>>>>>>>
.

============================================================ 1 passed in 0.01s =============================================================

Run the test cases selected by a mark expression

For this example, first add the mark decorator @pytest.mark.kk to the test002 method in TestCase and the test001 method in TestCall in test_aaa.py.

import pytest
class TestCase:
    def test001(self):
        print("implement test001>>>>>>>>>")
        assert True

    @pytest.mark.kk
    def test002(self):
        print("implement test002>>>>>>>>>")
        assert True

class TestCall:
    @pytest.mark.kk
    def test001(self):
        print("implement test001>>>>>>>>>")
        assert True

Next, we run the test cases marked kk by using a mark expression: pytest -m kk

(venv) zydeMacBook-Air:learnpytest zy$ pytest -s -m kk

=========================================================== test session starts ============================================================

platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0

rootdir: /Users/zy/PycharmProjects/learnpytest

collected 5 items / 3 deselected / 2 selected

test_aaa.py implement test002>>>>>>>>>

.implement test001>>>>>>>>>

.

============================================================= warnings summary =============================================================

test_aaa.py:7

/Users/zy/PycharmProjects/learnpytest/test_aaa.py:7: PytestUnknownMarkWarning: Unknown pytest.mark.kk - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/stable/mark.html

@pytest.mark.kk

test_aaa.py:13

/Users/zy/PycharmProjects/learnpytest/test_aaa.py:13: PytestUnknownMarkWarning: Unknown pytest.mark.kk - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/stable/mark.html

@pytest.mark.kk

-- Docs: https://docs.pytest.org/en/stable/warnings.html

=============================================== 2 passed, 3 deselected, 2 warnings in 0.03s ================================================
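The PytestUnknownMarkWarning in this output appears because the kk mark is not registered. One way to register it, sketched here under the assumption that a conftest.py file is placed in the project root next to test_aaa.py, is the pytest_configure hook together with config.addinivalue_line. The description text after "kk:" is made up for illustration.

```python
# conftest.py  (assumed to sit in the project root, next to test_aaa.py)


def pytest_configure(config):
    """Register the custom kk marker so that pytest no longer reports it as unknown."""
    # The first argument names the ini option; the second is "marker_name: description".
    config.addinivalue_line("markers", "kk: example marker used in these notes")
```

With the marker registered, running pytest --markers should list kk, and the two warnings should no longer appear in the summary.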

Run from package

To facilitate testing, we create a new package named jjj under the learnpytest project directory and put test_aaa.py into jjj.

(venv) zydeMacBook-Air:learnpytest zy$ pytest --pyargs jjj.test_aaa
=========================================================== test session starts ============================================================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0
rootdir: /Users/zy/PycharmProjects/learnpytest
collected 0 items

========================================================== no tests ran in 0.00s ===========================================================
ERROR: module or package not found: jjj.test_aaa (missing __init__.py?)

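The run failed: pytest could not find the module or package jjj.test_aaa, and the error message suggests checking for a missing __init__.py.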
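The error says that the dotted name could not be found. As a rough diagnostic sketch (not pytest's own code), the standard library function importlib.util.find_spec can check whether a dotted name such as jjj.test_aaa can be located from the current sys.path. The package name jjj_demo and the helper names below are made up for illustration, and the temporary directory stands in for the project directory.

```python
import importlib
import importlib.util
import sys
import tempfile
from pathlib import Path


def is_importable(dotted_name):
    """Return True if Python can locate the module named by dotted_name."""
    try:
        return importlib.util.find_spec(dotted_name) is not None
    except ModuleNotFoundError:
        # Raised when a parent package of the dotted name cannot be found.
        return False


def demo():
    """Check a throwaway package before and after its parent directory is on sys.path."""
    with tempfile.TemporaryDirectory() as tmp:
        pkg = Path(tmp) / "jjj_demo"            # hypothetical package name
        pkg.mkdir()
        (pkg / "__init__.py").write_text("")    # marks the directory as a regular package
        (pkg / "test_aaa.py").write_text("def test001():\n    assert True\n")

        before = is_importable("jjj_demo.test_aaa")
        sys.path.insert(0, tmp)
        importlib.invalidate_caches()
        try:
            after = is_importable("jjj_demo.test_aaa")
        finally:
            # Undo the changes so the demo leaves the interpreter state clean.
            sys.path.remove(tmp)
            sys.modules.pop("jjj_demo", None)
    return before, after


print(demo())
```

If the name cannot be located, checking that jjj contains an __init__.py file and that the project directory is on sys.path is a reasonable first step. Running python -m pytest, which adds the current directory to sys.path, may also be worth trying.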

Stop the test when a failure is encountered

We use the -x parameter to stop the run at the first failure.

The test_aaa.py file:

import pytest
class TestCase:
    def test001(self):
        print("implement test001>>>>>>>>>")
        assert True

    @pytest.mark.kk
    def test002(self):
        print("implement test002>>>>>>>>>")
        assert False  # fails on purpose to demonstrate -x

class TestCall:
    @pytest.mark.kk
    def test001(self):
        print("implement test001>>>>>>>>>")
        assert True

In the above file, test002 fails. We run with the -x parameter so that execution stops at the first failure. The results are as follows: test001 in TestCall is not executed after test002 fails.

(venv) zydeMacBook-Air:learnpytest zy$ pytest -x test_aaa.py

=========================================================== test session starts ============================================================

platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0

rootdir: /Users/zy/PycharmProjects/learnpytest

collected 3 items

test_aaa.py .F

================================================================= FAILURES =================================================================

_____________________________________________________________ TestCase.test002 _____________________________________________________________

self = <test_aaa.TestCase object at 0x107373150>

@pytest.mark.kk

def test002(self):

print("implement test002>>>>>>>>>")

> assert False

E assert False

test_aaa.py:10: AssertionError

----------------------------------------------------------- Captured stdout call -----------------------------------------------------------

implement test002>>>>>>>>>

============================================================= warnings summary =============================================================

test_aaa.py:7

/Users/zy/PycharmProjects/learnpytest/test_aaa.py:7: PytestUnknownMarkWarning: Unknown pytest.mark.kk - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/stable/mark.html

@pytest.mark.kk

test_aaa.py:13

/Users/zy/PycharmProjects/learnpytest/test_aaa.py:13: PytestUnknownMarkWarning: Unknown pytest.mark.kk - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/stable/mark.html

@pytest.mark.kk

-- Docs: https://docs.pytest.org/en/stable/warnings.html

========================================================= short test summary info ==========================================================

FAILED test_aaa.py::TestCase::test002 - assert False

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! stopping after 1 failures !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

================================================= 1 failed, 1 passed, 2 warnings in 0.09s ==================================================

Extension: let's explore whether execution also stops when a test raises an error even though its assertion would pass.

The test_aaa.py file:

import pytest
class TestCase:
    def test001(self):
        print("implement test001>>>>>>>>>")
        assert True

    @pytest.mark.kk
    def test002(self):
        print("implement test002>>>>>>>>>")
        a=[1,2]
        print(a[3])  # raises IndexError before the assert is reached
        assert True

class TestCall:
    @pytest.mark.kk
    def test001(self):
        print("implement test001>>>>>>>>>")
        assert True

(venv) zydeMacBook-Air:learnpytest zy$ pytest -s -x test_aaa.py

=========================================================== test session starts ============================================================

platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0

rootdir: /Users/zy/PycharmProjects/learnpytest

collected 3 items

test_aaa.py implement test001>>>>>>>>>

.implement test002>>>>>>>>>

F

================================================================= FAILURES =================================================================

_____________________________________________________________ TestCase.test002 _____________________________________________________________

self = <test_aaa.TestCase object at 0x10d8c3110>

@pytest.mark.kk

def test002(self):

print("implement test002>>>>>>>>>")

a=[1,2]

> print(a[3])

E IndexError: list index out of range

test_aaa.py:11: IndexError

============================================================= warnings summary =============================================================

test_aaa.py:7

/Users/zy/PycharmProjects/learnpytest/test_aaa.py:7: PytestUnknownMarkWarning: Unknown pytest.mark.kk - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/stable/mark.html

@pytest.mark.kk

test_aaa.py:15

/Users/zy/PycharmProjects/learnpytest/test_aaa.py:15: PytestUnknownMarkWarning: Unknown pytest.mark.kk - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/stable/mark.html

@pytest.mark.kk

-- Docs: https://docs.pytest.org/en/stable/warnings.html

========================================================= short test summary info ==========================================================

FAILED test_aaa.py::TestCase::test002 - IndexError: list index out of range

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! stopping after 1 failures !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

================================================= 1 failed, 1 passed, 2 warnings in 0.08s ==================================================

As you can see, even though the assertion would pass, an error raised while the test is running causes the test to be reported as failed, so -x stops the run in this case too.

Stop the test when the number of errors reaches the specified number

Use the **--maxfail** parameter to stop the run once the number of failed test cases reaches the specified number.

import pytest
class TestCase:
    def test001(self):
        print("implement test001>>>>>>>>>")
        assert False  # first deliberate failure

    @pytest.mark.kk
    def test002(self):
        print("implement test002>>>>>>>>>")
        assert False  # second deliberate failure

class TestCall:
    @pytest.mark.kk
    def test001(self):
        print("implement test001>>>>>>>>>")
        assert True

This is the test_aaa.py file. The assertions in test001 and test002 of TestCase fail.

We set the run to stop when the number of failures reaches 2.

(venv) zydeMacBook-Air:learnpytest zy$ pytest --maxfail=2 test_aaa.py

=========================================================== test session starts ============================================================

platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0

rootdir: /Users/zy/PycharmProjects/learnpytest

collected 3 items

test_aaa.py FF

================================================================= FAILURES =================================================================

_____________________________________________________________ TestCase.test001 _____________________________________________________________

self = <test_aaa.TestCase object at 0x108dbd4d0>

def test001(self):

print("implement test001>>>>>>>>>")

> assert False

E assert False

test_aaa.py:5: AssertionError

----------------------------------------------------------- Captured stdout call -----------------------------------------------------------

implement test001>>>>>>>>>

_____________________________________________________________ TestCase.test002 _____________________________________________________________

self = <test_aaa.TestCase object at 0x108dc4790>

@pytest.mark.kk

def test002(self):

print("implement test002>>>>>>>>>")

> assert False

E assert False

test_aaa.py:10: AssertionError

----------------------------------------------------------- Captured stdout call -----------------------------------------------------------

implement test002>>>>>>>>>

============================================================= warnings summary =============================================================

test_aaa.py:7

/Users/zy/PycharmProjects/learnpytest/test_aaa.py:7: PytestUnknownMarkWarning: Unknown pytest.mark.kk - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/stable/mark.html

@pytest.mark.kk

test_aaa.py:13

/Users/zy/PycharmProjects/learnpytest/test_aaa.py:13: PytestUnknownMarkWarning: Unknown pytest.mark.kk - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/stable/mark.html

@pytest.mark.kk

-- Docs: https://docs.pytest.org/en/stable/warnings.html

========================================================= short test summary info ==========================================================

FAILED test_aaa.py::TestCase::test001 - assert False

FAILED test_aaa.py::TestCase::test002 - assert False

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! stopping after 2 failures !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

====================================================== 2 failed, 2 warnings in 0.09s =======================================================

How to run pytest in pycharm

Method 1: modify the default runner of the project

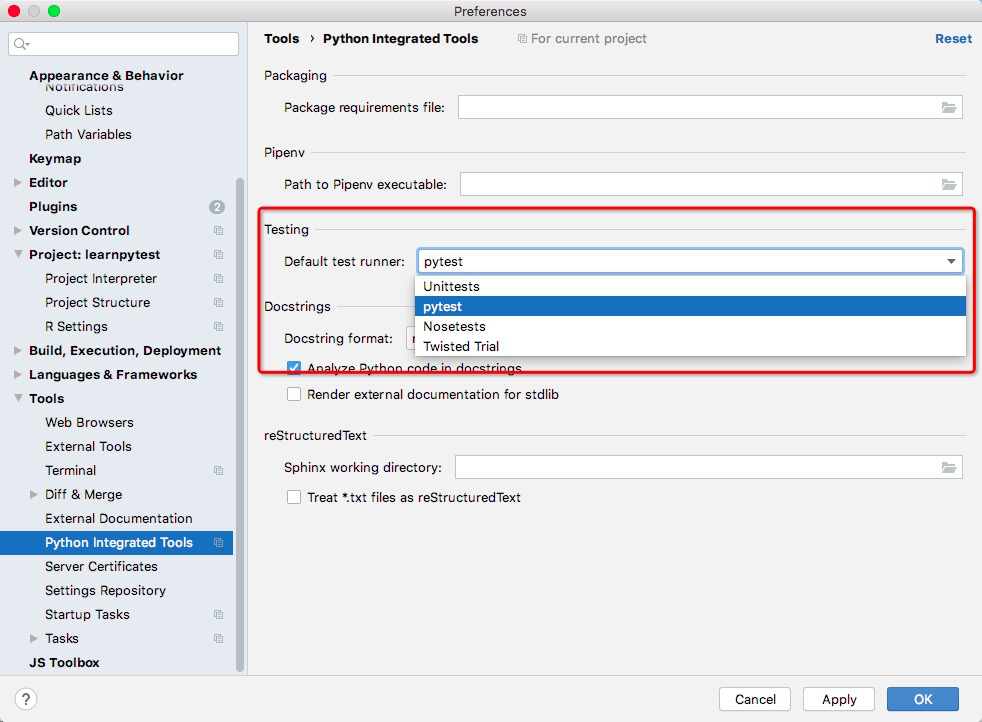

Set Preferences -> Tools -> Python Integrated Tools -> Default test runner to pytest, as shown in the figure.

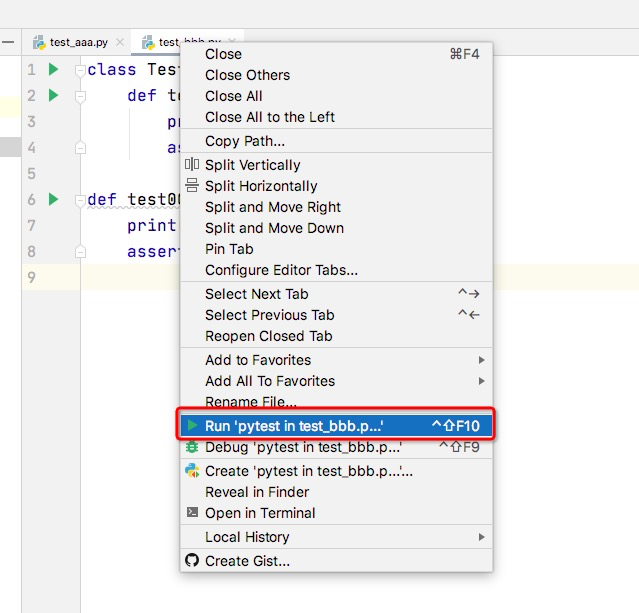

After saving, right-click the test case module name and click Run 'pytest in XXX.py', as shown in the figure.

Run results:

Testing started at 1:58 PM ...
/Users/zy/PycharmProjects/learnpytest/venv/bin/python "/Applications/PyCharm CE.app/Contents/plugins/python-ce/helpers/pycharm/_jb_pytest_runner.py" --path /Users/zy/PycharmProjects/learnpytest/test_bbb.py
Launching pytest with arguments /Users/zy/PycharmProjects/learnpytest/test_bbb.py in /Users/zy/PycharmProjects/learnpytest

============================= test session starts ==============================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0 -- /Users/zy/PycharmProjects/learnpytest/venv/bin/python
cachedir: .pytest_cache
rootdir: /Users/zy/PycharmProjects/learnpytest
collecting ... collected 2 items

test_bbb.py::TestLogin::test001 PASSED                                   [ 50%]implement test001>>>>>>>>>

test_bbb.py::test002 PASSED                                              [100%]implement test002>>>>>>>>>

============================== 2 passed in 0.02s ===============================

Process finished with exit code 0

Method 2: write pytest running code in pycharm

Import the pytest module and call pytest.main():

import pytest

if __name__ == '__main__':
    pytest.main(['test_bbb.py'])

Execution results:

/Users/zy/PycharmProjects/learnpytest/venv/bin/python /Users/zy/PycharmProjects/learnpytest/test_bbb.py
============================= test session starts ==============================
platform darwin -- Python 3.7.4, pytest-6.2.5, py-1.11.0, pluggy-1.0.0
rootdir: /Users/zy/PycharmProjects/learnpytest
collected 2 items

test_bbb.py ..                                                           [100%]

============================== 2 passed in 0.01s ===============================

Process finished with exit code 0
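Since pytest.main() accepts the same arguments as the command line, the options covered in these notes can also be passed from code. The following is a minimal sketch: build_pytest_args and run are hypothetical helper names, and pytest is imported inside run so that building the argument list works even where pytest is not installed.

```python
def build_pytest_args(paths=(), keyword=None, marker=None,
                      exitfirst=False, maxfail=None, show_output=False):
    """Translate a few of the options from these notes into a pytest argument list."""
    args = []
    if show_output:
        args.append("-s")                    # show print output
    if exitfirst:
        args.append("-x")                    # stop at the first failure
    if maxfail is not None:
        args.append(f"--maxfail={maxfail}")  # stop after this many failures
    if keyword:
        args += ["-k", keyword]              # keyword expression
    if marker:
        args += ["-m", marker]               # mark expression
    args.extend(paths)                       # files, directories or node IDs
    return args


def run(args):
    """Run pytest in-process with the given arguments and return its exit code."""
    import pytest  # imported lazily; requires pytest to be installed
    return pytest.main(args)


# Equivalent of: pytest -s -k "bbb and not 002" test_bbb.py
print(build_pytest_args(paths=["test_bbb.py"], keyword="bbb and not 002", show_output=True))
```

Passing the resulting list to run(...) starts the test session; the return value is the exit code of the run, which a script could hand to sys.exit.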