First, a note on setup: my crawler runs on Ubuntu 16.04 and is mainly based on the following blog post:

https://blog.csdn.net/qq_40774175/article/details/81273198

On top of that, I made some modifications to improve it.

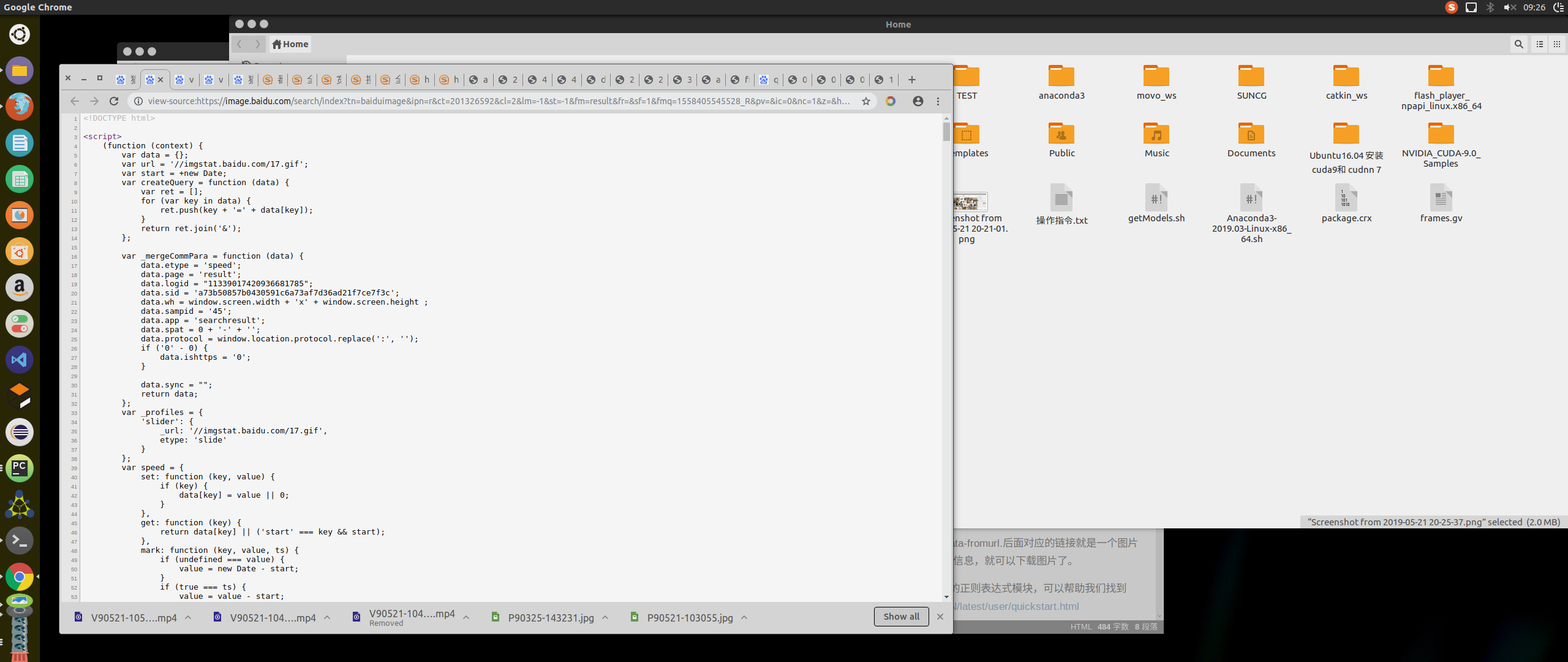

1. You need to know Python's requests library. With requests you can fetch the raw content of a web page, which is the same text you see when you open the page and choose "view source". (Google Chrome is recommended.)

In the page source we can find the URL of each picture. Each such link points to a single picture (PS: this article uses the objURL field). Once we have these URLs, we can download the pictures. Learning link: https://2.python-requests.org//zh_CN/latest/user/quickstart.html
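To make the first step concrete, here is a minimal sketch of fetching a page's source with requests. The helper name `fetch_page_source` is hypothetical (it does not appear in the crawler), and the 7-second timeout simply mirrors the value the crawler uses.

```python
import requests


def fetch_page_source(url, timeout=7):
    """Return the raw text of a page, the same text "view source" shows."""
    response = requests.get(url, timeout=timeout)
    # Turn 4xx/5xx replies into exceptions instead of parsing an error page.
    response.raise_for_status()
    return response.text
```

Calling `fetch_page_source('http://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word=cat&pn=0')` should return the HTML of the first result page, provided the site is reachable from your network.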

2. So how do we get the URL of each picture? This is where Python's powerful regular expression module, re, comes in: it can find every objURL in the page source. Regular expression learning link: https://docs.python.org/3/library/re.html

The complete code is below.
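As a small illustration of the regular expression step, the sketch below runs the same pattern the crawler uses against a made-up fragment of page source. `SAMPLE_SOURCE` and `extract_obj_urls` are hypothetical names, and the example.com URLs are placeholders rather than real search results.

```python
import re

# Hypothetical fragment imitating the JSON embedded in a result page.
SAMPLE_SOURCE = (
    '{"thumbURL":"http://example.com/thumb_a.jpg",'
    '"objURL":"http://example.com/a.jpg","fromURL":"http://example.com/page1"},'
    '{"objURL":"http://example.com/b.png","fromURL":"http://example.com/page2"}'
)


def extract_obj_urls(source):
    """Return every value stored under "objURL" in the page source."""
    # (.*?) is non-greedy, so each match stops at the first '",' after the key.
    return re.findall(r'"objURL":"(.*?)",', source, re.S)


urls = extract_obj_urls(SAMPLE_SOURCE)
print(urls)
```

The thumbnail URL is ignored because only the text following `"objURL":"` is captured.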

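# -*- coding: utf-8 -*-
import os
import re
from urllib import error

import requests

num = 0          # Pictures downloaded so far for the current keyword
numPicture = 0   # Pictures wanted per keyword
file = ''        # Output folder for the current keyword
List = []        # Image URL lists collected by Find(), one list per result page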

def Find(url):
    """Count how many image URLs the search returns for this keyword."""
    global List
    print('Please wait while the total number of pictures is detected.....')
    t = 0  # Result offset; each result page holds 60 pictures
    s = 0  # Running total of image URLs found
    while t < 1000:  # Set the maximum number of pictures to search for here
        Url = url + str(t)
        try:
            Result = requests.get(Url, timeout=7)
        except Exception:  # Request failed: skip this page and try the next one
            t = t + 60
            continue
        else:
            result = Result.text
            # Use a regular expression to find the image URLs on this page
            pic_url = re.findall('"objURL":"(.*?)",', result, re.S)
            s += len(pic_url)
            if len(pic_url) == 0:  # No more results
                break
            else:
                List.append(pic_url)
                t = t + 60
    return s

def dowmloadPicture(html, keyword):
    """Download the pictures found in one result page into the current folder."""
    global num
    # Use a regular expression to find the image URLs on this page
    pic_url = re.findall('"objURL":"(.*?)",', html, re.S)
    print('Found keyword: ' + keyword + '. About to start downloading pictures...')
    for each in pic_url:
        # Skip URLs containing 'png', since every file is saved as .jpg
        if re.search('png', each) is not None:
            continue
        print('Downloading picture ' + str(num + 1) + ', address: ' + str(each))
        try:
            pic = requests.get(each, timeout=7)
        except Exception:
            print('Error, the current picture cannot be downloaded')
            continue
        else:
            string = os.path.join(file, keyword + '_' + str(num) + '.jpg')
            with open(string, 'wb') as fp:
                fp.write(pic.content)
            num += 1
        if num >= numPicture:
            num = 0  # Reset the counter so the next keyword starts from zero
            return

if __name__ == '__main__':  # Main entry point
    tm = int(input('Please enter the number of pictures to download for each keyword: '))
    numPicture = tm
    # name.txt holds one search keyword per line
    with open('./name.txt', encoding='utf-8') as f:
        # strip() removes the trailing newline and any surrounding spaces
        line_list = [k.strip() for k in f.readlines()]
    for word in line_list:
        if len(word) != 0:  # Skip empty lines in name.txt
            url = 'http://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word=' + word + '&pn='
            tot = Find(url)
            print('Keyword %s: %d pictures detected in total' % (word, tot))
            file = word
            if os.path.exists(file):
                print('Folder already exists, saving to a second folder instead')
                file = word + 'Folder 2'
            os.makedirs(file, exist_ok=True)
            num = 0  # Start counting from zero for each keyword
            t = 0
            tmp = url
            while t < numPicture:
                try:
                    url = tmp + str(t)
                    result = requests.get(url, timeout=10)
                    print(url)
                except requests.exceptions.RequestException:
                    print('Network error, please check the network and try again')
                    t = t + 60
                else:
                    dowmloadPicture(result.text, word)
                    t = t + 60
    print('Search finished, thanks for using')
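The main loop builds each search URL by string concatenation and advances `pn` in steps of 60. As an alternative sketch using only the standard library, `urllib.parse.urlencode` can build the same query string and also percent-encodes keywords that contain spaces or non-ASCII characters. The names `BASE_URL`, `PAGE_SIZE` and `page_urls` are hypothetical, and the page size of 60 is assumed from the step used in the code above.

```python
from urllib.parse import urlencode

BASE_URL = 'http://image.baidu.com/search/flip'
PAGE_SIZE = 60  # Assumed from the step of 60 used for the pn parameter


def page_urls(keyword, wanted):
    """Yield one search URL per result page needed to cover `wanted` pictures."""
    for offset in range(0, wanted, PAGE_SIZE):
        query = urlencode({
            'tn': 'baiduimage',
            'ie': 'utf-8',
            'word': keyword,  # urlencode escapes spaces and non-ASCII text
            'pn': offset,     # index of the first result on this page
        })
        yield BASE_URL + '?' + query


for page in page_urls('cat', 100):
    print(page)
```

Asking for 100 pictures yields two URLs, one with `pn=0` and one with `pn=60`, which matches the offsets the `while t < numPicture` loop visits.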