1, Foreword

Because JD's anti crawling technology is strong, it is impossible to crawl its data using conventional methods, and it is difficult to use reverse analysis technology, so this paper will directly use selenium to crawl JD's commodity data. If you don't know how to install and configure selenium, please click to check the author's previous article: Python automated questionnaire

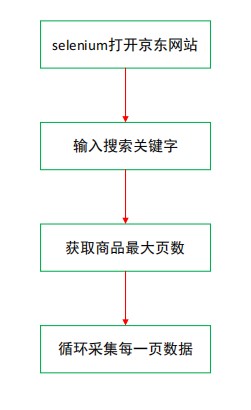

The steps of crawling data in this paper are as follows:

2, Complete code

Import the required packages, including time, selenium, lxml and openpyxl.

import time from selenium import webdriver from selenium.webdriver.support import expected_conditions as EC from selenium.webdriver.common.by import By from selenium.webdriver.support.ui import WebDriverWait from lxml import etree from openpyxl import Workbook

openpyxl creates a new workbook and adds a header, including the title and price of goods.

wb = Workbook() sheet = wb.active sheet['A1'] = 'name' sheet['B1'] = 'price' sheet['C1'] = 'commit' sheet['D1'] = 'shop' sheet['E1'] = 'sku' sheet['F1'] = 'icons' sheet['G1'] = 'detail_url'

selenium basic configuration, where driver_path is the folder where the chromedriver Google browser driver is located. If you put chromedriver under the project folder, this line of code can be omitted. selenium is mainly configured not to load pictures when visiting the website, which can not only speed up the access speed, but also save traffic; Set the waiting time for browser access to avoid program error due to special reasons.

driver_path = r"D:\python\chromedriver_win32\chromedriver.exe"

options = webdriver.ChromeOptions()

# Don't load pictures

options.add_experimental_option('prefs', {'profile.managed_default_content_settings.images': 2})

driver = webdriver.Chrome(executable_path=driver_path, options=options)

wait = WebDriverWait(driver, 60) # Set waiting time

Get the maximum number of pages of goods. The goods on JD are generally 100 pages.

def search(keyword):

try:

input = wait.until(

EC.presence_of_all_elements_located((By.CSS_SELECTOR, "#key"))

) # Wait until the search box loads

submit = wait.until(

EC.element_to_be_clickable((By.CSS_SELECTOR, "#search > div > div.form > button"))

) # Wait until the search button can be clicked

input[0].send_keys(keyword) # Enter keywords into the search box

submit.click() # click

wait.until(

EC.presence_of_all_elements_located(

(By.CSS_SELECTOR, '#J_bottomPage > span.p-skip > em:nth-child(1) > b')

)

)

total_page = driver.find_element_by_xpath('//*[@id="J_bottomPage"]/span[2]/em[1]/b').text

return int(total_page)

except TimeoutError:

search(keyword)

To obtain the specific data of goods, the crawling logic is to first use selenium to obtain the web page source code, and then lxml parsing. Of course, selenium parsing can also be used directly here. The crawled data fields include title, price, number of comments, store name, unique product id, etc. The sku field of unique product id is very important and is the main url parameter for subsequent crawling of the product comment.

def get_data(html):

selec_data = etree.HTML(html)

lis = selec_data.xpath('//ul[@class="gl-warp clearfix"]/li')

for li in lis:

try:

title = li.xpath('.//Div [@ class = "p-name p-name-type-2"] / / EM / text() '[0]. Strip() # name

price = li.xpath('.//Div [@ class = "p-price"] / / I / text() '[0]. Strip() # price

comment = li.xpath('.//div[@class="p-commit"]//a/text()) # comments

shop_name = li.xpath('.//div[@class="p-shop"]//a/text()) # shop name

data_sku = li.xpath('.//Div [@ class = "p-focus"] / A / @ data SKU ') [0] # item unique id

icons = li.xpath('.//div[@class="p-icons"]/i/text()) # remarks

comment = comment[0] if comment != [] else ''

shop_name = shop_name[0] if shop_name != [] else ''

icons_n = ''

for x in icons:

icons_n = icons_n + ',' + x

detail_url = li.xpath('.//div[@class="p-name p-name-type-2"]/a/@href')[0] # details page website

detail_url = 'https:' + detail_url

item = [title, price, comment, shop_name, data_sku, icons_n[1:], detail_url]

print(item)

sheet.append(item)

except TimeoutError:

get_data(html)

Set the series crawling process of the main function. There are some differences in url parameters between the first page and other pages, which need special treatment. Use j to control the number of pages, and construct the real url of each page according to the law of url parameters in the for loop.

def main():

url_main = 'https://www.jd.com/'

keyword = input('Please enter the product name:') # Search keywords

driver.get(url=url_main)

page = search(keyword)

j = 1

for i in range(3, page*2, 2):

if j == 1:

url = 'https://search.jd.com/Search?keyword={}&page={}&s={}&click=0'.format(keyword, i, j)

else:

url = 'https://search.jd.com/Search?keyword={}&page={}&s={}&click=0'.format(keyword, i, (j-1)*50)

driver.get(url)

time.sleep(1)

driver.execute_script("window.scrollTo(0, document.body.scrollHeight)") # Slide to the bottom

time.sleep(3)

driver.implicitly_wait(20)

wait.until(

EC.presence_of_all_elements_located((By.XPATH, '//*[@id="J_goodsList"]/ul/li[last()]'))

)

html = driver.page_source

get_data(html)

time.sleep(1)

print(f'Crawling to No{j}page')

j += 1

wb.save('Jingdong double eleven{}information.xlsx'.format(keyword))

Open the crawler.

if __name__ == '__main__':

main()

3, Results

Taking Huawei mobile phones as an example, the final data crawled are as follows:

We can find that there are a lot of dirty data in the crawled source data. If you want to use it for statistical analysis or deeper analysis, you must clean the data first, which is easier to achieve by using panda library. In the future, we will use sku to crawl the comment data of products. Please look forward to it!