0. Preface

The middle of this post contains many process notes from my attempts to modify the code. Those versions do not work and are kept only to record my reasoning. If you only want the final code, skip straight to the bottom (section 4).

I ran the linear regression code from Li Mu's 2021 deep learning course as follows:

```python
import torch

def synthetic_data(w, b, num_examples):
    """Generate y = Xw + b + noise."""
    X = torch.normal(0, 1, (num_examples, len(w)))
    y = torch.matmul(X, w) + b
    y += torch.normal(0, 0.01, y.shape)
    return X, y.reshape((-1, 1))

true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data(true_w, true_b, 1000)
```

The following error is reported:

```
TypeError                                 Traceback (most recent call last)
<ipython-input-14-13b36a1f7ef4> in <module>
      8 true_w = torch.tensor([2, -3.4])
      9 true_b = 4.2
---> 10 features, labels = synthetic_data(true_w, true_b, 1000)

<ipython-input-14-13b36a1f7ef4> in synthetic_data(w, b, num_examples)
      1 def synthetic_data(w, b, num_examples):
      2     """generate y = Xw + b + Noise."""
----> 3     X = torch.normal(0, 1, (num_examples, len(w)))
      4     y = torch.matmul(X, w) + b
      5     y += torch.normal(0, 0.01, y.shape)

TypeError: normal() received an invalid combination of arguments - got (int, int, tuple), but expected one of:
 * (Tensor mean, Tensor std, torch.Generator generator, Tensor out)
 * (Tensor mean, float std, torch.Generator generator, Tensor out)
 * (float mean, Tensor std, torch.Generator generator, Tensor out)
```

To understand the error, I checked the documentation and found that most online introductions describe only these forms:

torch.normal(mean, std, out=None) → Tensor (Tensor mean, Tensor std)

torch.normal(mean, std=1.0, out=None) → Tensor (Tensor mean, float std)

torch.normal(mean=0.0, std, out=None) → Tensor (float mean, Tensor std)
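As a quick check of these three overloads, the following sketch (run on a recent PyTorch; I have not verified it on 1.0.0) shows that in each form the output takes its shape from the tensor argument, so none of them has a separate size argument:

```python
import torch

mean = torch.zeros(3)
std = torch.ones(3)

s_tensor_tensor = torch.normal(mean, std)   # Tensor mean, Tensor std
s_tensor_float = torch.normal(mean, 1.0)    # Tensor mean, float std
s_float_tensor = torch.normal(0.0, std)     # float mean, Tensor std

# Each result has the shape of the tensor argument: torch.Size([3])
print(s_tensor_tensor.shape, s_tensor_float.shape, s_float_tensor.shape)
```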

The error message mentions a torch.Generator generator argument that these introductions do not cover, so I looked for documentation that includes it.

Note: the Chinese documentation for PyTorch 1.0 does not describe torch.normal(mean, std, *, generator=None, out=None). After consulting the latest English PyTorch documentation, I recorded the following and found two more forms of torch.normal().

1. Two other forms of torch.normal()

torch.normal(mean, std, *, generator=None, out=None) → Tensor


Returns a tensor of random numbers drawn from separate normal distributions whose means and standard deviations are given.

mean is a tensor with the mean of each output element's normal distribution.

std is a tensor with the standard deviation of each output element's normal distribution.

The shapes of mean and std do not need to match, but the total number of elements in each tensor must be the same.

When the shapes do not match, the shape of mean is used as the shape of the returned tensor.

When std is a CUDA tensor, this function synchronizes its device with the CPU.

Parameters

mean (Tensor) – tensor of the mean value of each element

std (Tensor) – tensor of the standard deviation of each element

Keyword arguments

generator (torch.Generator, optional) – a pseudo-random number generator for sampling

out (Tensor, optional) – output tensor
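A small sketch of this form on a recent PyTorch, with matching shapes for mean and std (the values are my own illustration): each output element is drawn around its own mean, and two generators seeded identically give identical draws.

```python
import torch

mean = torch.arange(1., 6.)       # per-element means: 1, 2, 3, 4, 5
std = torch.full((5,), 1e-6)      # tiny per-element standard deviations

sample = torch.normal(mean, std)
print(sample)                     # values very close to 1, 2, 3, 4, 5

# Two generators with the same seed produce the same samples
g1 = torch.Generator().manual_seed(0)
g2 = torch.Generator().manual_seed(0)
s1 = torch.normal(mean, std, generator=g1)
s2 = torch.normal(mean, std, generator=g2)
print(torch.equal(s1, s2))        # True
```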

torch.normal(mean, std, size, *, out=None) → Tensor


Similar to the function above, but the mean and standard deviation are shared among all drawn elements. The shape of the resulting tensor is given by size.

Parameters

mean (float) – the mean of all distributions

std (float) – the standard deviation of all distributions

size (int...) – a sequence of integers defining the shape of the output tensor

Keyword arguments

out (Tensor, optional) – the output tensor
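On a PyTorch version that has this overload (the post later concludes that 1.0.0 does not), the call the course intends looks like the sketch below. The float arguments are shared by every element and size alone fixes the shape:

```python
import torch

X = torch.normal(0., 1., size=(1000, 2))       # shared mean 0, std 1
noise = torch.normal(0., 0.01, size=(1000,))   # shared mean 0, std 0.01

print(X.shape, noise.shape)               # torch.Size([1000, 2]) torch.Size([1000])
print(X.mean().item(), X.std().item())    # roughly 0 and 1
```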

2. Code modification (failing code and notes)

The error shows that the call passes arguments of types (int, int, tuple). From this, and from what the teacher said in the course, I boldly guessed that the intended form is

torch.normal(mean, std, size, *, out=None)

The modified code is as follows:

```python
def synthetic_data(w, b, num_examples):
    """Generate y = Xw + b + noise."""
    # Float mean and std instead of int
    X = torch.normal(0., 1., (num_examples, len(w)))
    # X = torch.normal(mean=0., std=1., size=(num_examples, len(w)))
    # (also tried the keyword form above)
    y = torch.matmul(X, w) + b
    y += torch.normal(0., 0.01, y.shape)
    return X, y.reshape((-1, 1))

true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data(true_w, true_b, 1000)
```

Running it still fails with the same error.

Note: the code above still reports an error in PyTorch 1.0.0.

3. Conclusion and further modification attempts (also incorrect code)

After repeated attempts I finally confirmed the cause: I am using PyTorch 1.0.0 rather than a newer version, so the teacher's code cannot be used as-is (torch.normal in PyTorch 1.0.0 does not accept a size argument).
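If the same notebook has to run on both older and newer PyTorch versions, one option is a small wrapper that tries the size overload and otherwise scales standard-normal samples, since mean + std · Z with Z ~ N(0, 1) is distributed as N(mean, std²). The helper name normal_compat is hypothetical, and I am assuming the missing overload surfaces as a TypeError, as in the traceback above:

```python
import torch

def normal_compat(mean, std, size):
    """Hypothetical helper: draw N(mean, std^2) samples with the given shape."""
    try:
        # Newer PyTorch: (float mean, float std, size) overload
        return torch.normal(mean, std, size=size)
    except TypeError:
        # Older PyTorch without the size overload: scale standard-normal samples
        return torch.randn(*size) * std + mean

X = normal_compat(0., 1., (1000, 2))
print(X.shape, X.dtype)   # torch.Size([1000, 2]) torch.float32
```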

I therefore decided to rework the code for PyTorch 1.0.0.

The form used here is (Tensor mean, float std, torch.Generator generator, Tensor out).

The modified code is as follows:

```python
def synthetic_data(w, b, num_examples):
    """Generate y = Xw + b + noise."""
    X = torch.normal(torch.tensor([0]), 1.)
    y = torch.matmul(X, w) + b
    y += torch.normal(torch.tensor([0]), 0.01)
    return X, y.reshape((-1, 1))

true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data(true_w, true_b, 1000)
```

A new error occurred:

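```
RuntimeError: _th_normal is not implemented for type torch.LongTensor
```

The cause is the dtype: torch.tensor([0]) is built from an integer literal, so it defaults to int64 (torch.LongTensor, as the error says), and normal() is not implemented for integer tensors. So I changed the mean to a 32-bit float tensor.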
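The dtype inference behind this error is easy to check (sketch run on a recent PyTorch): an integer literal gives int64, while a float literal or an explicit dtype gives float32, which normal() accepts.

```python
import torch

int_mean = torch.tensor([0])       # integer literal -> int64 (LongTensor)
float_mean = torch.tensor([0.])    # float literal -> default float32
print(int_mean.dtype, float_mean.dtype)   # torch.int64 torch.float32

# Either write the literal as a float or pass dtype explicitly
explicit = torch.tensor([0], dtype=torch.float32)
print(torch.normal(float_mean, 1.).dtype, torch.normal(explicit, 1.).dtype)
```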

Therefore, the modified code is as follows:

```python
def synthetic_data(w, b, num_examples):
    """Generate y = Xw + b + noise."""
    # Passing the tensor directly inside the call reported an error, so create a1 first
    a1 = torch.tensor([0], dtype=torch.float32)
    X = torch.normal(a1, 1.)
    y = torch.matmul(X, w) + b
    y += torch.normal(a1, 0.01)
    return X, y.reshape((-1, 1))

true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data(true_w, true_b, 1000)
```

A new error occurred:

```
RuntimeError                              Traceback (most recent call last)
<ipython-input-48-e254f9fc8d52> in <module>
     10 true_w = torch.tensor([2, -3.4],dtype=torch.float32)
     11 true_b = 4.2
---> 12 features, labels = synthetic_data(true_w, true_b, 1000)

<ipython-input-48-e254f9fc8d52> in synthetic_data(w, b, num_examples)
      4
      5     X = torch.normal(a1, 1.)
----> 6     y = torch.matmul(X, w) + b
      7     y += torch.normal(a1, 0.01)
      8     return X, y.reshape((-1, 1))

RuntimeError: inconsistent tensor size, expected tensor [1] and src [2] to have the same number of elements, but got 1 and 2 elements respectively
```

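The cause is a size mismatch: a1 has one element, so X = torch.normal(a1, 1.) also has one element, while w has two, and torch.matmul of two 1-D tensors (a dot product) requires them to have the same number of elements.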
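The shapes involved can be checked directly. This sketch (recent PyTorch, with torch.zeros standing in for the float mean tensor a1) shows why a 1-element mean fails, and why a 2-element mean still gives one scalar instead of one value per example:

```python
import torch

w = torch.tensor([2, -3.4])

# A 1-element float mean gives a 1-element sample, but w has 2 elements
X1 = torch.normal(torch.zeros(1), 1.)
print(X1.shape, w.shape)          # torch.Size([1]) torch.Size([2])

# A 2-element mean gives a 1-D X with 2 elements, so matmul is a dot product
X2 = torch.normal(torch.zeros(2), 1.)
y2 = torch.matmul(X2, w)
print(y2.shape)                   # torch.Size([]): a single scalar

# What the course intends: X of shape (num_examples, len(w)), here (1000, 2)
X_full = torch.randn(1000, len(w))
print(torch.matmul(X_full, w).shape)   # torch.Size([1000])
```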

The modified code is as follows:

```python
def synthetic_data(w, b, num_examples):
    """Generate y = Xw + b + noise."""
    # Passing the tensor directly inside the call reported an error, so create a1 first
    a1 = torch.tensor([0, 0], dtype=torch.float32)
    X = torch.normal(a1, 1.)
    y = torch.matmul(X, w) + b
    y += torch.normal(a1, 1.)
    return X, y.reshape((-1, 1))

true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data(true_w, true_b, 1000)
```

The following error occurred:

```
RuntimeError                              Traceback (most recent call last)
<ipython-input-36-915e79a2fa26> in <module>
     10 true_w = torch.tensor([2, -3.4])
     11 true_b = 4.2
---> 12 features, labels = synthetic_data(true_w, true_b, 1000)

<ipython-input-36-915e79a2fa26> in synthetic_data(w, b, num_examples)
      5     X = torch.normal(a1, 1.)
      6     y = torch.matmul(X, w) + b
----> 7     y += torch.normal(a1,1.)
      8     return X, y.reshape((-1, 1))
      9

RuntimeError: output with shape [] doesn't match the broadcast shape [2]
```

Under broadcasting, the in-place y += a and the out-of-place y = a + y behave differently. Here X has shape [2], so torch.matmul(X, w) is a dot product and y is a 0-d scalar (shape []). The in-place y += ... must write its result back into y, which cannot grow from shape [] to the broadcast shape [2], whereas y = a + y simply creates a new tensor of shape [2]. So the modified code is as follows:

```python
def synthetic_data(w, b, num_examples):
    """Generate y = Xw + b + noise."""
    # Passing the tensor directly inside the call reported an error, so create a1 first
    a1 = torch.tensor([0, 0], dtype=torch.float32)
    X = torch.normal(a1, 1.)
    y = torch.matmul(X, w) + b
    y = torch.normal(a1, 1.) + y
    return X, y.reshape((-1, 1))

true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data(true_w, true_b, 1000)
```

This version runs without errors, but then I ran the following code:

```python
print('features:', features[0], '\nlabel:', labels[0])
```

The output is as follows:

```
features: tensor(-0.9737)
label: tensor([7.3704])
```

This differs from the output shown in the course:

```
features: tensor([-0.0748, -0.3831])
label: tensor([5.3410])
```

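On reflection, I realized I had fallen into a trap: I was rewriting the program just to make each error go away, when I should have written it according to what the function is supposed to do in the course. In the last version X has only two elements instead of shape (num_examples, 2), which is why features[0] is a scalar rather than a two-element vector (and the noise standard deviation had also drifted from 0.01 to 1.). The code above is only a record of my thought process and is not correct; the correct code starts in section 4.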
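The in-place broadcasting pitfall from the third attempt (y += ... on a 0-d y) can be reproduced on its own. A minimal sketch on a recent PyTorch, with made-up values standing in for y and the noise:

```python
import torch

y = torch.tensor(1.0)       # 0-d tensor, like y in the failed attempt
noise = torch.zeros(2)      # shape [2], like torch.normal(a1, 1.)

in_place_failed = False
try:
    y += noise              # in-place: the result must keep y's shape []
except RuntimeError as err:
    in_place_failed = True
    print("in-place add failed:", err)

y_new = noise + y           # out-of-place: a new tensor with the broadcast shape
print(y_new.shape)          # torch.Size([2])
```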

================================== boundary=================================

==============Supplement: introduction to torch.matmul()=================

torch.matmul(tensor1, tensor2, out=None) → Tensor

Matrix product of two tensors.

The behavior depends on the dimensionality of the tensors as follows:

If both tensors are one-dimensional, the dot product (scalar) is returned.

If both arguments are two-dimensional, the matrix-matrix product is returned.

If the first argument is one-dimensional and the second argument is two-dimensional, a 1 is prepended to its dimension for the purpose of the matrix multiply. After the matrix multiply, the prepended dimension is removed.

If the first argument is two-dimensional and the second argument is one-dimensional, the matrix-vector product is returned.

If both arguments are at least one-dimensional and at least one argument is N-dimensional (where N > 2), a batched matrix multiply is returned. If the first argument is one-dimensional, a 1 is prepended to its dimension for the purpose of the batched matrix multiply and removed afterwards. If the second argument is one-dimensional, a 1 is appended to its dimension for the purpose of the batched matrix multiply and removed afterwards. The non-matrix (i.e. batch) dimensions are broadcast (and thus must be broadcastable). For example, if tensor1 is a (j × 1 × n × m) tensor and tensor2 is a (k × m × p) tensor, out will be a (j × k × n × p) tensor.

Note

The out parameter is not supported in the one-dimensional dot product version of this function.

Parameters:

tensor1 – the first tensor to be multiplied

tensor2 – the second tensor to be multiplied

out (Tensor, optional) – the output tensor
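The rules above can be checked by printing result shapes. A short sketch (recent PyTorch; tensor sizes chosen only for illustration) covering each case, including the batched example:

```python
import torch

v = torch.ones(3)
M = torch.ones(2, 3)
N = torch.ones(3, 4)

print(torch.matmul(v, v).shape)              # 1-D x 1-D: dot product, torch.Size([])
print(torch.matmul(M, N).shape)              # 2-D x 2-D: torch.Size([2, 4])
print(torch.matmul(torch.ones(2), M).shape)  # 1-D x 2-D: (1, 2) @ (2, 3), then drop the 1 -> torch.Size([3])
print(torch.matmul(M, v).shape)              # 2-D x 1-D: matrix-vector product, torch.Size([2])

# Batched: (j, 1, n, m) @ (k, m, p) -> (j, k, n, p); batch dims (j, 1) and (k,) broadcast
j, k, n, m, p = 5, 4, 2, 3, 6
A = torch.ones(j, 1, n, m)
B = torch.ones(k, m, p)
print(torch.matmul(A, B).shape)              # torch.Size([5, 4, 2, 6])
```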

====================================================================

==============================Dividing line=================================

====================================================================

4. The final program (correctly modified code):

Following the course, we construct an artificial dataset from a linear model with additive noise. The training set has 1000 samples, each with 2 inputs (features).
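Written out, the model that the code below samples from is the following. The notation is mine; the values come from true_w, true_b, torch.randn and the 0.01 noise scale in the code:

$$
\mathbf{y} = \mathbf{X}\mathbf{w} + b + \boldsymbol{\epsilon}, \qquad
\mathbf{X} \in \mathbb{R}^{1000 \times 2},\ x_{ij} \sim \mathcal{N}(0, 1), \qquad
\mathbf{w} = [2, -3.4]^\top,\ b = 4.2,\ \epsilon_i \sim \mathcal{N}(0, 0.01^2)
$$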

```python
import numpy as np
import torch

def synthetic_data(w, b, num_examples):
    """Generate y = Xw + b + noise."""
    # X = torch.normal(0, 1, (num_examples, len(w)))
    # The teacher's original call uses size (num_examples, len(w)), i.e. (1000, 2)
    X = torch.randn(num_examples, len(w), dtype=torch.float32)
    # X is 2-D (num_examples, len(w)) and w is 1-D (len(w),), so matmul returns
    # the matrix-vector product with shape (num_examples,).
    # The inner dimensions must still agree: X.shape[1] == len(w).
    y = torch.matmul(X, w) + b
    # y += torch.normal(0, 0.01, y.shape)
    # The noise term ϵ follows a normal distribution with mean 0 and standard deviation 0.01
    y += torch.tensor(np.random.normal(0, 0.01, size=y.size()), dtype=torch.float32)
    return X, y.reshape((-1, 1))

true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data(true_w, true_b, 1000)
print('features:', features[0], '\nlabel:', labels[0])
```

Output:

```
features: tensor([-0.7966, -0.0960])
label: tensor([2.9413])
```
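As a sanity check on the final version (my addition, not part of the course), ordinary least squares on the generated data should recover values close to true_w and true_b. This sketch repeats the function so it runs on its own, appends a column of ones so the bias is fitted together with the weights, and uses numpy.linalg.lstsq for the fit:

```python
import numpy as np
import torch

def synthetic_data(w, b, num_examples):
    """Generate y = Xw + b + noise (same as the final version above)."""
    X = torch.randn(num_examples, len(w), dtype=torch.float32)
    y = torch.matmul(X, w) + b
    y += torch.tensor(np.random.normal(0, 0.01, size=y.size()), dtype=torch.float32)
    return X, y.reshape((-1, 1))

true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data(true_w, true_b, 1000)

# Design matrix [X, 1]: the last coefficient is the estimated bias
A = np.hstack([features.numpy(), np.ones((features.shape[0], 1), dtype=np.float32)])
coef, *_ = np.linalg.lstsq(A, labels.numpy(), rcond=None)
w_hat, b_hat = coef[:2, 0], coef[2, 0]

print(features.shape, labels.shape)   # torch.Size([1000, 2]) torch.Size([1000, 1])
print(w_hat, b_hat)                   # close to [2, -3.4] and 4.2
```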

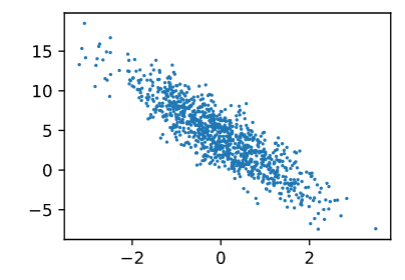

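```python
from d2l import torch as d2l  # assumes the course's d2l package is installed

d2l.set_figsize()
# Scatter plot of the second feature against the labels (marker size 1)
d2l.plt.scatter(features[:, (1)].detach().numpy(),
                labels.detach().numpy(), 1);
```

The output is a scatter plot of the second feature against the labels.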

================================End================================