Foreword

Recently the script community was in an uproar over news that a crowdsourced CAPTCHA-solving platform had shut down and disappeared (I'm not in the script community myself; I just like watching people in the group chats brag), as if we would never hear its familiar ad slogans again. It also shows that third-party solving platforms are unreliable. You still need CAPTCHAs solved, though, so what do you do? Do it yourself, of course! I'm not very busy lately, so I'm going to write a series of articles on recognizing CAPTCHAs with Python. The series plans to cover the popular CAPTCHA types: slider, arithmetic, click-selection, gesture, spatial reasoning, Google's, and so on. All the code that runs end to end is on my Knowledge Planet; take it if you need it. Enough talk, let's begin!

Data characteristics

Shumei's icon click-selection CAPTCHA works like other vendors' icon click-selection CAPTCHAs: the icons must be clicked in a given order.

Getting the data

Anyone with common sense knows this data has to be collected with a crawler (if you've been single this long, pretend I didn't say that). Shumei's anti-crawling is quite lenient: analyze the requests a little and you'll find that some parameters look encrypted but are actually hard-coded. So by constructing the request headers and parameters yourself, you can easily get the URLs of the CAPTCHA images.

Recognition approach

Each CAPTCHA consists of two images: a background image whose filename ends in `_bg.jpg`, and an icon image whose filename ends in `_fg.png`.

First, think about which problems need solving in order to click in the right order:

- Cut the four icons out of the icon image in order (this order is the basis for clicking in order). I'll call them F4.

- Locate the four icons in the background image, which have been rotated and scaled, and crop them out. I'll call them f4.

- Compute the similarity between F4 and f4 to pair them up (like base pairing).

Cutting out F4 in order

Anyone who knows a bit of CV can see that this kind of image is easy to handle: convert it to grayscale, apply Otsu thresholding, then dilate it to get cleaner connected regions for the F4 icons.

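```python
import cv2
import numpy as np

# image is the icon image (the *_fg.png file), loaded as BGR
gray_img = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
# With THRESH_OTSU the threshold is chosen automatically; the 100 passed here is ignored
_, threshold_img = cv2.threshold(gray_img, 100, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
kernel = np.ones((3, 3), np.uint8)
# iterations must be passed by keyword: the third positional argument of cv2.dilate is dst
dialte_img = cv2.dilate(threshold_img, kernel, iterations=2)
```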

The result looks like this:

With this result, cutting the icons out in order is easy: scan the columns from left to right and check whether each column's pixel sum is 0.

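```python
# F4: crop the icons out of the icon image from left to right
roi_image = []
i = 0
while i < image.shape[1]:
    if np.sum(dialte_img[:, i]) > 0:
        start_col = i
        # Advance until the column sum drops back to 0 (or the image ends)
        while i < image.shape[1] and np.sum(dialte_img[:, i]) > 0:
            i += 1
        end_col = i
        # Crop the column range [start_col, end_col)
        roi_image.append(image[:, start_col:end_col])
    else:
        i += 1
```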

Cropping result:

Locating f4

If you've read my earlier articles, you might expect me to keep using template matching to find the icons' positions in the background image. But try it and you'll find it can't locate them at all! It's just not accurate, because template matching is neither rotation-invariant nor scale-invariant. Invariance means that transforming the input leaves the result unchanged: the change makes no difference. In other words, template matching is unsuited to targets that have been rotated and scaled, even when they look like the same thing to the human eye.

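My original plan was to use SIFT for localization, but SIFT was patented, so I couldn't use it for free in the trimmed-down OpenCV build I had. (The patent expired in March 2020, and SIFT has been in the main OpenCV package since version 4.4.0 as `cv2.SIFT_create`.)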

Then I thought, forget it, let's just go with YOLOv5. YOLOv5n doesn't demand much from the GPU anyway, so even my weak 4 GB card can train an icon-localization model. Just do it.

After half an hour of labeling, I had annotated more than 90 images. I then trained a 13.9 MB model with the PyTorch version of YOLOv5n, and the mAP reached 98!!! I have to say, YOLO is awesome! (If you're not familiar with YOLOv5, see the official GitHub repo.)

Once you have the model, you need to rewrite the official predict.py: it is too bloated, and it draws every prediction onto the image, like this.

All we want, though, is the positions of f4, so the extras such as visualization and log dumping can all be deleted. Note: predict.py uses two coordinate representations, xywh and xyxy. xywh is the normalized center of the target rectangle plus its normalized width and height; xyxy is the pixel coordinates of the rectangle's top-left and bottom-right corners, without normalization.

Which representation to use is a matter of taste; they can be converted into each other anyway.
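A minimal sketch of the conversion, assuming YOLOv5's convention that xywh is the normalized box center plus width and height, and xyxy is in pixels. The helper names are hypothetical.

```python
def xywh_to_xyxy(box, img_w, img_h):
    """Normalized (cx, cy, w, h) -> pixel (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return ((cx - w / 2) * img_w, (cy - h / 2) * img_h,
            (cx + w / 2) * img_w, (cy + h / 2) * img_h)


def xyxy_to_xywh(box, img_w, img_h):
    """Pixel (x1, y1, x2, y2) -> normalized (cx, cy, w, h)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2 / img_w, (y1 + y2) / 2 / img_h,
            (x2 - x1) / img_w, (y2 - y1) / img_h)


# A box centred in a 600 x 300 image, 20% of the width and 40% of the height
print(xywh_to_xyxy((0.5, 0.5, 0.2, 0.4), 600, 300))
```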

To make cropping with OpenCV convenient, I used xyxy and wrapped the detector up a little.

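```python
# pos: the f4 boxes returned by the wrapped YOLOv5 detector, ready to use.
# Each entry is laid out as [y1, y2, x1, x2] (row range, then column range),
# which is what the NumPy slicing below expects
pos = yolo_detector.detect(bg_img)

# Crops of the f4 icons
bg_roi_imgs = []
for p in pos:
    # Crop rows y1:y2 and columns x1:x2
    bg_roi_imgs.append(bg_img[p[0]:p[1], p[2]:p[3]])
```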
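The article doesn't show the `yolo_detector.detect` wrapper itself. As a hypothetical sketch of its post-processing step, assume the raw detections arrive as an N×6 array of `[x1, y1, x2, y2, confidence, class]` rows (the layout of YOLOv5's NMS output); the helper below keeps the most confident boxes and reorders them into the `[y1, y2, x1, x2]` form that the slicing above expects. The name `to_slices` and the `conf_thres`/`top_k` defaults are my own assumptions.

```python
import numpy as np


def to_slices(detections, conf_thres=0.5, top_k=4):
    """Turn (N, 6) [x1, y1, x2, y2, conf, cls] rows into [y1, y2, x1, x2] int boxes,
    keeping at most top_k boxes with confidence >= conf_thres."""
    detections = np.asarray(detections, dtype=float).reshape(-1, 6)
    kept = detections[detections[:, 4] >= conf_thres]
    # Most confident first, then keep at most top_k boxes
    kept = kept[np.argsort(-kept[:, 4])][:top_k]
    pos = []
    for x1, y1, x2, y2 in kept[:, :4]:
        pos.append([int(round(y1)), int(round(y2)), int(round(x1)), int(round(x2))])
    return pos


dets = [[10, 20, 50, 60, 0.9, 0],
        [100, 20, 140, 60, 0.3, 0],   # dropped: below the confidence threshold
        [200, 30, 240, 70, 0.95, 0]]
print(to_slices(dets))  # [[30, 70, 200, 240], [20, 60, 10, 50]]
```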

Computing similarity

Now that we have F4 and f4, we need to pair them up. The pairing logic is actually simple; it goes roughly like this:

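```python
# puzzle_images: the F4 crops in click order; bg_roi_imgs / pos: the f4 crops and boxes
# rects: boxes of the matched f4 icons, in F4 (click) order
rects = []
for i in range(len(puzzle_images)):
    best_score = float('-inf')
    best_rect = None
    for j in range(len(bg_roi_imgs)):
        # calculate_similarity is a placeholder for whatever metric you choose
        score = calculate_similarity(puzzle_images[i], bg_roi_imgs[j])
        if score > best_score:
            best_score = score
            # pos[j] is [y1, y2, x1, x2]; store the box as [x1, y1, x2, y2]
            best_rect = [pos[j][2], pos[j][0], pos[j][3], pos[j][1]]
    rects.append(best_rect)
```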
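If all pairwise scores are first collected into a matrix, the same greedy pairing can be written with NumPy. This is a sketch under the assumption, taken from the loop above, that `pos[j]` is `[y1, y2, x1, x2]`; `order_boxes` is a hypothetical name.

```python
import numpy as np


def order_boxes(similarity, pos):
    """similarity[i, j]: score between F4 icon i and background crop j.
    pos[j]: [y1, y2, x1, x2] box of background crop j.
    Returns [x1, y1, x2, y2] boxes in F4 (click) order."""
    similarity = np.asarray(similarity)
    best = similarity.argmax(axis=1)  # best background crop for each F4 icon
    return [[pos[j][2], pos[j][0], pos[j][3], pos[j][1]] for j in best]


pos = [[0, 10, 100, 110], [5, 15, 200, 210]]
print(order_boxes([[0.1, 0.9], [0.8, 0.2]], pos))  # [[200, 5, 210, 15], [100, 0, 110, 10]]
```

Like the loop, each F4 icon picks its best crop independently, so two icons can end up on the same crop; ties resolve to the lowest index, as with a strict `>` comparison.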

So which similarity measure? At first I tried a perceptual hash; it didn't work well. What about HOG features with cosine distance? That turned out even worse than I expected.

Forget it, I'll go all in on a Siamese network. It just needs labeled data, and I can do that.

Labeling (the lazy way)

Anyone who has studied ML or DL knows that real data from the business scenario is best, because its distribution is closest to what the model will see. But I'm lazy... so I took a shortcut with data augmentation. The idea: grab a few random images from the web as backgrounds, randomly rotate and scale each seed icon, paste it onto a random background image, and crop that region out.

```python
import os
import time

import cv2
import numpy as np


def random_rotate(img):
    rows, cols = img.shape[:2]
    angle = [0, 20, 45, 60, -20, -45]
    aa = np.random.randint(len(angle))
    # getRotationMatrix2D expects the centre as (x, y), i.e. (cols / 2, rows / 2)
    rotate = cv2.getRotationMatrix2D((cols * 0.5, rows * 0.5), angle[aa], 1)
    return cv2.warpAffine(img, rotate, (cols, rows))


def random_resize(img):
    scale = [1.0, 1.2, 1.3, 1.5, 1.7, 2, 2.5]
    x = np.random.randint(len(scale))
    return cv2.resize(img, (0, 0), fx=scale[x], fy=scale[x])


def gen_random_img(bg, fg):
    fg_ = random_resize(random_rotate(fg.copy()))
    fg_r, fg_c = fg_.shape[0], fg_.shape[1]
    # Assumes the background is larger than the transformed icon
    x = np.random.randint(bg.shape[1] - fg_c)
    y = np.random.randint(bg.shape[0] - fg_r)
    roi = bg[y:y + fg_r, x:x + fg_c].copy()
    # Paste only icon pixels (channel sum > 30); the dark icon background is skipped
    mask = fg_.sum(axis=2) > 30
    roi[mask] = fg_[mask]
    return roi


bgs = os.listdir('random_bg')
for fg_path in os.listdir('./images_background/'):
    class_dir = os.path.join('./images_background/', fg_path)
    # The first image in each class folder is used as the seed icon
    filename = os.listdir(class_dir)[0]
    fg = cv2.imread(os.path.join(class_dir, filename))
    for i in range(100):
        bg = cv2.imread(os.path.join('random_bg', bgs[np.random.randint(len(bgs))]))
        roi = gen_random_img(bg, fg)
        # Millisecond timestamp plus loop index, so files written in the same ms don't collide
        out_name = f'{round(time.time() * 1000)}_{i}.jpg'
        cv2.imwrite(os.path.join(class_dir, out_name), roi)
```

That gives you a dataset that looks like this.

Training the Siamese network

There are many PyTorch Siamese network implementations on GitHub; just pick one you like. I chose VGG16 as the backbone and triplet loss as the loss function. After about 7 epochs of training, the accuracy was acceptable (later experiments showed it was overfitting a little).
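Triplet loss trains the network so that an anchor embedding ends up closer to a positive (same icon) than to a negative (different icon) by at least a margin: L = max(d(a, p) - d(a, n) + margin, 0). The article doesn't give its margin or distance; this minimal NumPy sketch assumes Euclidean distance and a margin of 1.0, the same defaults as PyTorch's `torch.nn.TripletMarginLoss`.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Mean triplet margin loss over a batch of embeddings (rows are samples).

    loss = max(d(a, p) - d(a, n) + margin, 0) with Euclidean distance d.
    """
    anchor, positive, negative = (np.atleast_2d(np.asarray(x, dtype=float))
                                  for x in (anchor, positive, negative))
    d_ap = np.linalg.norm(anchor - positive, axis=1)
    d_an = np.linalg.norm(anchor - negative, axis=1)
    return float(np.mean(np.maximum(d_ap - d_an + margin, 0.0)))


# Negative far enough from the anchor: no loss
print(triplet_loss([0, 0], [1, 0], [3, 0]))    # 0.0
# Negative too close to the anchor: positive loss
print(triplet_loss([0, 0], [1, 0], [1.5, 0]))  # 0.5
```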

Using the model

Once the model is trained, just call it to predict. It outputs a probability value: the higher the probability, the more similar the two images.

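```python
# rects: boxes of the matched f4 icons, in F4 (click) order
rects = []
for i in range(len(puzzle_images)):
    best_score = float('-inf')
    best_rect = None
    for j in range(len(bg_roi_imgs)):
        # Probability that the two crops show the same icon (higher = more similar)
        score = siamese_model.detect_image(puzzle_images[i], bg_roi_imgs[j])
        if score > best_score:
            best_score = score
            # pos[j] is [y1, y2, x1, x2]; store the box as [x1, y1, x2, y2]
            best_rect = [pos[j][2], pos[j][0], pos[j][3], pos[j][1]]
    rects.append(best_rect)
```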

At this point, rects holds the boxes of the matched icons in the background image, in click order.

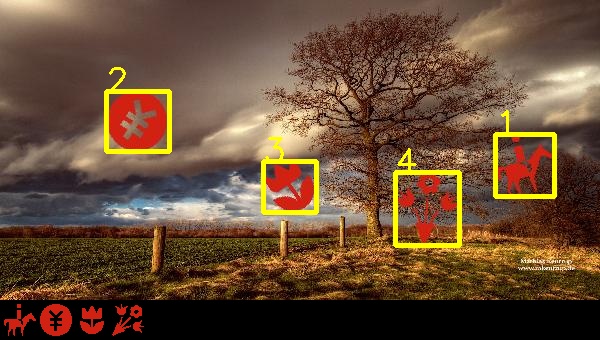

Recognition results

To make the results easy to inspect, I pasted the background image and the F4 icon image onto one canvas; the number on each box is its position in the click order.

```python
import cv2
import numpy as np

# Background image
bg_img = cv2.imread(bg_path)
# F4 icon image
fg_img = cv2.imread(fg_path)

# Get the boxes to click, in click order
rects = get_result(bg_img, fg_img, model, detector)

for i, rect in enumerate(rects):
    # OpenCV drawing functions need integer coordinates
    x1, y1, x2, y2 = (int(v) for v in rect)
    # Draw the box and its click order
    cv2.rectangle(bg_img, (x1, y1), (x2, y2), (0, 255, 255), 3)
    cv2.putText(bg_img, str(i + 1), (x1, y1), cv2.FONT_HERSHEY_SIMPLEX, 1.1, (0, 255, 255), 2)

# Canvas: background on top, F4 icon strip underneath
bg_h, bg_w = bg_img.shape[:2]
fg_h, fg_w = fg_img.shape[:2]
back = np.zeros((bg_h + fg_h, max(bg_w, fg_w), 3), dtype=np.uint8)
back[:bg_h, :bg_w] = bg_img
# The canvas starts black, so copying the whole strip only adds its non-black pixels
back[bg_h:bg_h + fg_h, :fg_w] = fg_img
```

In testing, the click-in-order accuracy was about 65%.

Room for improvement

1. When cutting out F4, I didn't consider icons whose parts are far apart. For example, the AI icon in the figure below gets split into A and I. Consider cropping F4 directly with the YOLO model used to locate f4; that should work much better.

2. Out of laziness, I labeled too little data: for the Siamese network I labeled only a little over 50 icon classes, but the test set contains far more than 50, which makes the model prone to overfitting. For example, icons 1 and 2 in the figure below both have a similar S-shaped notch, and the model got them wrong. If you're not lazy, the results should be decent.

3. Design a network architecture that performs end-to-end recognition.
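For the first point, a lighter-weight alternative to re-cropping with YOLO (not what the article did) is to merge column spans separated by only a few empty columns. This sketch assumes spans are half-open `(start, end)` column ranges like those the column scan produces; `max_gap` is a hypothetical tuning knob, and setting it too high will merge neighboring icons.

```python
def merge_close_spans(spans, max_gap=3):
    """Merge [start, end) column spans separated by at most max_gap empty columns."""
    merged = []
    for start, end in sorted(spans):
        if merged and start - merged[-1][1] <= max_gap:
            # Close enough to the previous span: treat both as one icon
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


print(merge_close_spans([(0, 10), (12, 20), (40, 50)]))  # [(0, 20), (40, 50)]
```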