When a project is deployed, it is impractical to print all information to the console. Instead, we can record it in a log file. This makes it convenient to check how the program is running and, when the project fails, to quickly locate the problem from the logs generated at run time.

1. Log level

Python's standard library module logging is used to record logs. By default it defines six log levels (the values in brackets are the numeric values of the levels): NOTSET (0), DEBUG (10), INFO (20), WARNING (30), ERROR (40) and CRITICAL (50). When defining a custom log level, take care not to reuse the value of a built-in level. When logging runs, only records whose level is greater than or equal to the configured level are output. For example, if the level is set to INFO, records at the INFO, WARNING, ERROR and CRITICAL levels are output.
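As a minimal sketch of the threshold rule, the snippet below registers a hypothetical custom level called TRACE with the value 5 (both the name and the value are assumptions, chosen only so that they do not collide with a built-in level) and writes to an in-memory stream so the result can be inspected. The logger name levels_demo is also just illustrative.

```python
import io
import logging

# Hypothetical custom level: 5 does not clash with any built-in level value.
TRACE = 5
logging.addLevelName(TRACE, "TRACE")

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s:%(message)s"))

logger = logging.getLogger("levels_demo")  # illustrative logger name
logger.propagate = False                   # keep the demo away from the root logger
logger.addHandler(handler)
logger.setLevel(logging.INFO)              # threshold: 20

logger.log(TRACE, "dropped: 5 < 20")
logger.debug("dropped: 10 < 20")
logger.info("kept: 20 >= 20")
logger.error("kept: 40 >= 20")

lines = stream.getvalue().splitlines()
print(lines)
```

Only the INFO and ERROR records reach the stream, because their values are at or above the configured threshold.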

2. logging process

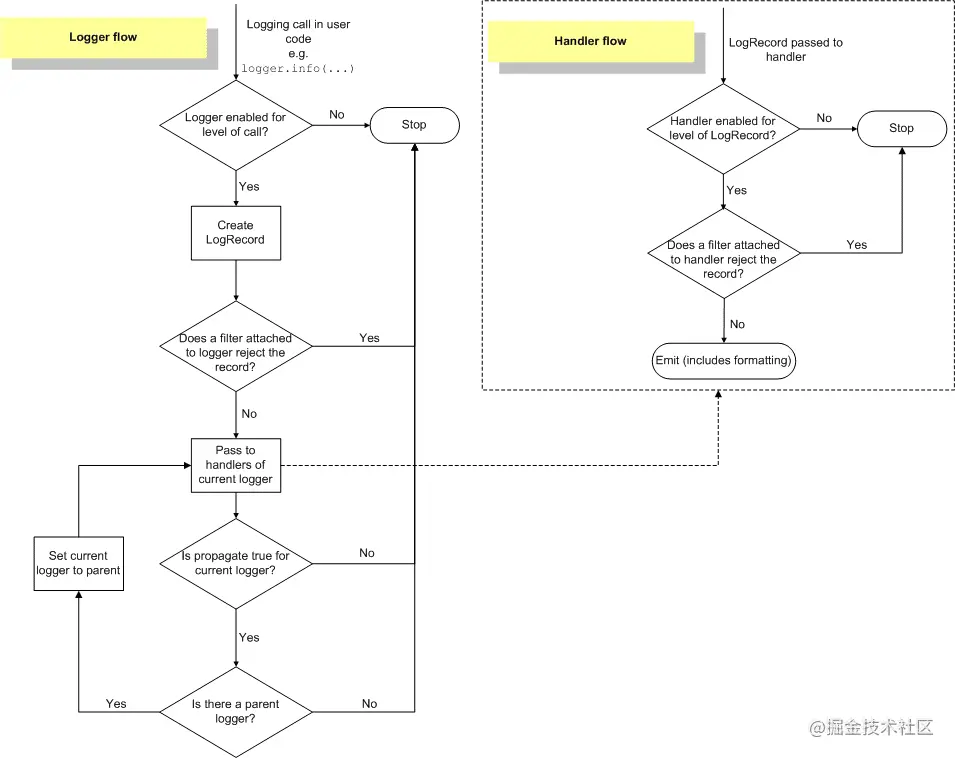

The working flow chart of the official logging module is as follows:

From the following figure, we can see these Python types: Logger, LogRecord, Filter, Handler and Formatter.

Type Description:

Logger: exposes the logging interface that application code calls directly, and decides which records to act on based on the logger's level and filters.

LogRecord: the log record. It carries a single logging event and is passed to the corresponding handlers for processing.

Handler: the processor that sends log records (created by the Logger) to the appropriate destination.

Filter: provides finer-grained control over which log records are output.

Formatter: specifies the layout of log records in the final output.

- Check whether the Logger object is enabled for the level of the logging call. If it is, proceed to the next step. Otherwise, the process ends.

- Create a LogRecord object. If a Filter object registered on the Logger object returns False, the record is not logged and the process ends. Otherwise, continue.

- The Logger object passes the LogRecord object to each Handler object attached to the current Logger (the sub-process in the figure).

- In each Handler, if the level of the record is greater than or equal to the level of the Handler and the Filter objects registered on the Handler return True, the record is formatted and output. Otherwise, that Handler ignores the record.

- Check whether the current Logger object has a parent Logger object and whether propagation is enabled. If there is no parent (the current Logger object is the top-level root Logger) or propagation is turned off, the process ends. Otherwise, take the parent as the current Logger object and repeat steps 3 and 4, so that the record is also output by the parent's handlers, until the root Logger is reached.
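The steps above can be sketched with two loggers and in-memory handlers. The logger names (flow_demo, flow_demo.child), the helper make_handler and the "secret" filter rule are all hypothetical, chosen only for illustration; the parent's propagate flag is switched off so that the demo stops before the root Logger.

```python
import io
import logging


def make_handler(level):
    """Return a StreamHandler that writes to its own in-memory buffer."""
    buffer = io.StringIO()
    handler = logging.StreamHandler(buffer)
    handler.setLevel(level)
    handler.setFormatter(logging.Formatter("%(name)s:%(levelname)s:%(message)s"))
    return handler, buffer


parent = logging.getLogger("flow_demo")
child = logging.getLogger("flow_demo.child")
parent.propagate = False          # stop before the root logger in this demo
child.setLevel(logging.DEBUG)

parent_handler, parent_out = make_handler(logging.ERROR)
child_handler, child_out = make_handler(logging.DEBUG)
parent.addHandler(parent_handler)
child.addHandler(child_handler)

# Logger-level filter: reject any record whose message mentions "secret".
child.addFilter(lambda record: "secret" not in record.getMessage())

child.debug("secret token")   # dropped by the Logger's filter
child.info("routine event")   # emitted by the child handler, ignored by the parent handler (ERROR)
child.error("disk failure")   # emitted by both handlers through propagation

child_lines = child_out.getvalue().splitlines()
parent_lines = parent_out.getvalue().splitlines()
print(child_lines)
print(parent_lines)
```

Note that during propagation the parent Logger's own level is not consulted; only the levels and filters of its handlers decide whether the record is output there.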

3. Log output format

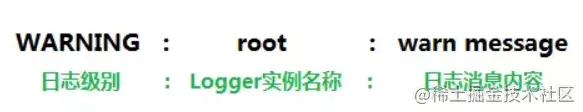

The output format of the log can be considered as settings. The default format is shown in the following figure.
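For readers without the figure, the default layout used by basicConfig() is available as the module constant logging.BASIC_FORMAT. The sketch below applies it to a hand-built LogRecord; the path example.py and the line number are placeholder values, not taken from a real program.

```python
import logging

# Default layout used by basicConfig() when no format is given.
print(logging.BASIC_FORMAT)

# Hand-built record; "example.py" and line 1 are placeholder values.
record = logging.LogRecord(
    name="root",
    level=logging.WARNING,
    pathname="example.py",
    lineno=1,
    msg="This is a warning message",
    args=None,
    exc_info=None,
)
default_line = logging.Formatter(logging.BASIC_FORMAT).format(record)
print(default_line)
```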

4. Basic use

logging is very simple to use. The basicConfig() function covers basic needs. If it is called without arguments, the root Logger is configured with the defaults: the log level is WARNING and the output format is the default one described in the previous section. The optional parameters of this function are listed in the table below.

| Parameter name | Parameter description |
|---|---|
| filename | Name of the file that the log is written to |
| filemode | Mode used to open the file, such as r[+], w[+] or a[+]; the default is a |
| format | Format of the log output |
| datefmt | Format of the date and time attached to the log |
| style | Style of the format placeholders: '%', '{' or '$'; the default is '%' |
| level | Log output level |
| stream | Output stream used to initialize the StreamHandler object. It cannot be used together with filename; otherwise a ValueError is raised |
| handlers | A list of already created Handler objects to add to the root Logger. It cannot be used together with filename or stream; otherwise a ValueError is raised |
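To illustrate the style parameter, the sketch below builds the same layout in the three placeholder styles. It uses logging.Formatter directly rather than basicConfig(), so it does not depend on whether the root Logger has already been configured; the record contents (logger name app, user alice) are made up for the example.

```python
import logging

# Hand-built record with made-up contents.
record = logging.LogRecord(
    name="app",
    level=logging.INFO,
    pathname="example.py",
    lineno=1,
    msg="user %s logged in",
    args=("alice",),
    exc_info=None,
)

percent = logging.Formatter("%(levelname)s|%(name)s|%(message)s", style="%")
brace = logging.Formatter("{levelname}|{name}|{message}", style="{")
dollar = logging.Formatter("${levelname}|${name}|${message}", style="$")

results = [f.format(record) for f in (percent, brace, dollar)]
print(results)
```

The style only affects how the format string is written; the message arguments themselves are still merged with the % operator.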

The example code is as follows:

import logging

logging.basicConfig()

logging.debug('This is a debug message')
logging.info('This is an info message')
logging.warning('This is a warning message')
logging.error('This is an error message')
logging.critical('This is a critical message')

The output is as follows:

WARNING:root:This is a warning message
ERROR:root:This is an error message
CRITICAL:root:This is a critical message

The next example passes in commonly used parameters (the variables in the log format placeholders are described later):

import logging

logging.basicConfig(
    filename="test.log",
    filemode="w",
    format="%(asctime)s %(name)s:%(levelname)s:%(message)s",
    datefmt="%d-%m-%Y %H:%M:%S",
    level=logging.DEBUG,
)

logging.debug('This is a debug message')
logging.info('This is an info message')
logging.warning('This is a warning message')
logging.error('This is an error message')
logging.critical('This is a critical message')

The generated log file test.log is as follows:

13-10-18 21:10:32 root:DEBUG:This is a debug message
13-10-18 21:10:32 root:INFO:This is an info message
13-10-18 21:10:32 root:WARNING:This is a warning message
13-10-18 21:10:32 root:ERROR:This is an error message
13-10-18 21:10:32 root:CRITICAL:This is a critical message

However, when an exception occurs, calling debug(), info(), warning(), error() or critical() with only a message does not record the exception information. To record it, set the exc_info parameter to True, use the exception() method, or use the log() method with both a log level and the exc_info parameter.

import logging

logging.basicConfig(
    filename="test.log",
    filemode="w",
    format="%(asctime)s %(name)s:%(levelname)s:%(message)s",
    datefmt="%d-%m-%Y %H:%M:%S",
    level=logging.DEBUG,
)

a = 5
b = 0

try:
    c = a / b
except Exception as e:
    # Choose one of the following three methods; the first one is recommended
    logging.exception("Exception occurred")
    logging.error("Exception occurred", exc_info=True)
    logging.log(level=logging.DEBUG, msg="Exception occurred", exc_info=True)
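To make the difference visible, the following sketch logs the same exception once without and once with traceback information, into an in-memory stream instead of a file. The logger name exc_demo is an illustrative assumption.

```python
import io
import logging

stream = io.StringIO()
logger = logging.getLogger("exc_demo")  # illustrative logger name
logger.propagate = False
logger.setLevel(logging.DEBUG)
logger.addHandler(logging.StreamHandler(stream))

try:
    1 / 0
except ZeroDivisionError:
    logger.error("without exc_info")      # message only
    logger.exception("with traceback")    # message plus traceback, logged at ERROR level

output = stream.getvalue()
print(output)
```

Only the second call appends the traceback, which is why exception() (or exc_info=True) is needed inside an except block.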

5. Custom Logger

The basic usage above lets us get started with the logging module quickly, but it generally cannot meet real-world needs. We also need to customize the Logger.

Logger objects should not be instantiated directly. They are obtained through getLogger(), which always returns the same Logger object for the same name, in the manner of the singleton pattern.

Note: the singleton pattern here does not mean that there is only one Logger object, but that there is only one root Logger object in the whole system. When a Logger object calls methods such as info() or error(), the record is, by default, also passed up to the handlers of its ancestors, ending at the root Logger.

We can create multiple Logger objects, and by default their records still reach the handlers of the root Logger. Each Logger object can have a name. In logger = logging.getLogger(__name__), __name__ is a special built-in variable in Python that holds the name of the current module (__main__ when the file is run as a script), and it becomes the name of the Logger object. It is recommended to name loggers with a hierarchical namespace that uses a dot as the separator.
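A short sketch of these naming rules follows; the names shop and shop.orders are hypothetical and stand in for real module names.

```python
import logging

# Hypothetical dotted names; in real code these usually come from __name__.
parent = logging.getLogger("shop")
child = logging.getLogger("shop.orders")
same_child = logging.getLogger("shop.orders")
root = logging.getLogger()  # no name: the single root Logger

print(child is same_child)     # same name, same object
print(child.parent is parent)  # the dot separator defines the hierarchy
print(parent.parent is root)   # top-level loggers hang off the root Logger
```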

A Logger object can have multiple Handler objects and Filter objects, and each Handler object can have a Formatter object, which sets the specific output format. Commonly used format variables are shown in the following table. For all of them, see the official Python (3.7) documentation.

| Variable | Format | Variable description |
|---|---|---|
| asctime | %(asctime)s | Time of the log record in human-readable form. By default it is accurate to milliseconds, such as 2018-10-13 23:24:57,832. The datefmt parameter can be used to specify a different format |
| name | %(name)s | Name of the Logger object |
| filename | %(filename)s | File name without the path |
| pathname | %(pathname)s | Full path of the file, including the file name |
| funcName | %(funcName)s | Name of the function that contains the logging call |
| levelname | %(levelname)s | Level name of the log |
| message | %(message)s | The log message itself |
| lineno | %(lineno)d | Line number of the logging call |
| process | %(process)d | Current process ID |
| processName | %(processName)s | Current process name |
| thread | %(thread)d | Current thread ID |
| threadName | %(threadName)s | Current thread name |

Both Logger objects and Handler objects can have a level. The root Logger defaults to level 30 (WARNING); a newly created Logger or Handler defaults to level 0 (NOTSET), and a Logger at NOTSET uses the level of its nearest ancestor that has one. This design gives the logging module more flexibility. For example, sometimes we want WARNING-level logs on the console and DEBUG-level logs in a file. We can set a single Logger object to the lowest level and attach two Handler objects with different levels. The example code is as follows:

import logging
import logging.handlers

logger = logging.getLogger("logger")

handler1 = logging.StreamHandler()
handler2 = logging.FileHandler(filename="test.log")

logger.setLevel(logging.DEBUG)
handler1.setLevel(logging.WARNING)
handler2.setLevel(logging.DEBUG)

formatter = logging.Formatter("%(asctime)s %(name)s %(levelname)s %(message)s")
handler1.setFormatter(formatter)
handler2.setFormatter(formatter)

logger.addHandler(handler1)
logger.addHandler(handler2)

# 30, 10 and 10 respectively
# print(handler1.level)
# print(handler2.level)
# print(logger.level)

logger.debug('This is a customer debug message')
logger.info('This is an customer info message')
logger.warning('This is a customer warning message')
logger.error('This is an customer error message')
logger.critical('This is a customer critical message')

The console output is:

2018-10-13 23:24:57,832 logger WARNING This is a customer warning message
2018-10-13 23:24:57,832 logger ERROR This is an customer error message
2018-10-13 23:24:57,832 logger CRITICAL This is a customer critical message

The output content in the file is:

2018-10-13 23:44:59,817 logger DEBUG This is a customer debug message
2018-10-13 23:44:59,817 logger INFO This is an customer info message
2018-10-13 23:44:59,817 logger WARNING This is a customer warning message
2018-10-13 23:44:59,817 logger ERROR This is an customer error message
2018-10-13 23:44:59,817 logger CRITICAL This is a customer critical message
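How a Logger without an explicit level picks up the level of an ancestor can be checked with getEffectiveLevel(). This is a small sketch with hypothetical logger names (level_demo, level_demo.worker); it deliberately sets the level on a named parent rather than on the root Logger.

```python
import logging

parent = logging.getLogger("level_demo")          # hypothetical names
child = logging.getLogger("level_demo.worker")
parent.setLevel(logging.ERROR)

own_level = child.level                           # 0: NOTSET, nothing set on the child itself
inherited_level = child.getEffectiveLevel()       # 40: taken from the parent
handler_default = logging.NullHandler().level     # 0: handlers also start at NOTSET

warning_before = child.isEnabledFor(logging.WARNING)  # 30 < 40, so not enabled
child.setLevel(logging.DEBUG)                         # an explicit level overrides the ancestor
warning_after = child.isEnabledFor(logging.WARNING)

print(own_level, inherited_level, handler_default, warning_before, warning_after)
```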

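If you create a custom Logger object, do not also use the module-level log functions such as logging.info(). These functions use the root Logger and, if it has no handlers yet, configure it with the defaults. Records from the custom Logger then also propagate to the root Logger's handler, so the log output is repeated.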

import logging
import logging.handlers

logger = logging.getLogger("logger")

handler = logging.StreamHandler()
handler.setLevel(logging.DEBUG)

formatter = logging.Formatter("%(asctime)s %(name)s %(levelname)s %(message)s")
handler.setFormatter(formatter)

logger.addHandler(handler)

logger.debug('This is a customer debug message')
logging.info('This is an customer info message')
logger.warning('This is a customer warning message')
logger.error('This is an customer error message')
logger.critical('This is a customer critical message')

The output is as follows (you can see that each log message is output twice):

2018-10-13 22:21:35,873 logger WARNING This is a customer warning message
WARNING:logger:This is a customer warning message
2018-10-13 22:21:35,873 logger ERROR This is an customer error message
ERROR:logger:This is an customer error message
2018-10-13 22:21:35,873 logger CRITICAL This is a customer critical message
CRITICAL:logger:This is a customer critical message
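The duplication comes from propagation: the record is handled by the custom Logger's handler and then again by a handler further up the hierarchy. The sketch below reproduces this with a named parent in place of the root Logger (the names dup_demo and dup_demo.child are hypothetical) and shows one possible remedy, setting propagate to False on the custom Logger.

```python
import io
import logging

buffer = io.StringIO()  # both handlers write here so duplicates are easy to see

parent = logging.getLogger("dup_demo")        # stands in for the root logger
child = logging.getLogger("dup_demo.child")   # the "custom" logger
parent.propagate = False                      # keep the demo isolated from the real root
child.setLevel(logging.WARNING)

parent.addHandler(logging.StreamHandler(buffer))
child.addHandler(logging.StreamHandler(buffer))

child.warning("first")    # handled by the child handler and, via propagation, the parent handler

child.propagate = False   # stop passing records up the hierarchy
child.warning("second")   # handled by the child handler only

lines = buffer.getvalue().splitlines()
print(lines)
```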

Note: when you import a Python file that produces log output, such as test.py, the log records in the imported file are output as long as their level is greater than or equal to the currently configured level.

6. Logger configuration

From the examples above, we know the configuration needed to create a Logger object. There, the configuration was hard-coded in the program. It can also be loaded from a dictionary object or a configuration file. The logging.config module contains the functions that parse and apply such configurations.

Get configuration information from the dictionary:

import logging.config

config = {
    'version': 1,
    'formatters': {
        'simple': {
            'format': '%(asctime)s - %(name)s - %(levelname)s - %(message)s',
        },
        # Other formatters
    },
    'handlers': {
        'console': {
            'class': 'logging.StreamHandler',
            'level': 'DEBUG',
            'formatter': 'simple'
        },
        'file': {
            'class': 'logging.FileHandler',
            'filename': 'logging.log',
            'level': 'DEBUG',
            'formatter': 'simple'
        },
        # Other handlers
    },
    'loggers': {
        'StreamLogger': {
            'handlers': ['console'],
            'level': 'DEBUG',
        },
        'FileLogger': {
            # There are both console Handler and file Handler
            'handlers': ['console', 'file'],
            'level': 'DEBUG',
        },
        # Other loggers
    }
}

logging.config.dictConfig(config)
StreamLogger = logging.getLogger("StreamLogger")
FileLogger = logging.getLogger("FileLogger")
# Omit log output

Get configuration information from the configuration file:

Common configuration file formats include ini, YAML and JSON, and the configuration can also be fetched over the network; all that is needed is a parser for the format. Only the ini and YAML formats are shown below.

test.ini file

[loggers]
keys=root,sampleLogger

[handlers]
keys=consoleHandler

[formatters]
keys=sampleFormatter

[logger_root]
level=DEBUG
handlers=consoleHandler

[logger_sampleLogger]
level=DEBUG
handlers=consoleHandler
qualname=sampleLogger
propagate=0

[handler_consoleHandler]
class=StreamHandler
level=DEBUG
formatter=sampleFormatter
args=(sys.stdout,)

[formatter_sampleFormatter]
format=%(asctime)s - %(name)s - %(levelname)s - %(message)s

testinit.py file

import logging.config

logging.config.fileConfig(fname='test.ini', disable_existing_loggers=False)
logger = logging.getLogger("sampleLogger")
# Omit log output

test.yaml file

version: 1
formatters:
  simple:
    format: '%(asctime)s - %(name)s - %(levelname)s - %(message)s'
handlers:
  console:
    class: logging.StreamHandler
    level: DEBUG
    formatter: simple
loggers:
  simpleExample:
    level: DEBUG
    handlers: [console]
    propagate: no
root:
  level: DEBUG
  handlers: [console]

testyaml.py file

import logging.config

# The PyYAML library needs to be installed
import yaml

with open('test.yaml', 'r') as f:
    config = yaml.safe_load(f.read())
    logging.config.dictConfig(config)

# The name must match the logger defined in test.yaml
logger = logging.getLogger("simpleExample")
# Omit log output
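The JSON format mentioned earlier can be handled in the same way, since json.loads() yields the dictionary that dictConfig() expects. The following is only a sketch: the configuration text is embedded as a string instead of being read from a file, and the logger name jsonLogger is a hypothetical choice.

```python
import json
import logging
import logging.config

# Hypothetical JSON configuration; in practice it would be read from a file.
json_text = """
{
  "version": 1,
  "disable_existing_loggers": false,
  "formatters": {
    "simple": {"format": "%(asctime)s - %(name)s - %(levelname)s - %(message)s"}
  },
  "handlers": {
    "console": {"class": "logging.StreamHandler", "level": "DEBUG", "formatter": "simple"}
  },
  "loggers": {
    "jsonLogger": {"level": "DEBUG", "handlers": ["console"], "propagate": false}
  }
}
"""

config = json.loads(json_text)
logging.config.dictConfig(config)

logger = logging.getLogger("jsonLogger")
logger.debug("configured from JSON")
```

Setting disable_existing_loggers to false keeps loggers that were created before the call usable, which mirrors the fileConfig() example above.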

7. Problems in practice

1. Garbled Chinese characters

In the examples above, the log output is in English. When Chinese text is written to a log file, it may come out garbled. How can this be solved? FileHandler accepts an encoding when it is created; setting it to "utf-8" (utf-8 and utf8 are equivalent) solves the problem. One approach is to customize the Logger object, which requires more configuration. The other is to use the default configuration function basicConfig() and pass in a list of handlers through the handlers parameter, with the file encoding set on the handler. Many methods found online do not work. The key reference code is as follows:

# Custom Logger configuration
handler = logging.FileHandler(filename="test.log", encoding="utf-8")

# Use the default Logger configuration

logging.basicConfig(handlers=[logging.FileHandler("test.log", encoding="utf-8")], level=logging.DEBUG)
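As a quick check of the encoding setting, the sketch below writes a Chinese message through a FileHandler created with encoding="utf-8" and reads it back with the same encoding. It uses a temporary directory and a hypothetical logger name (utf8_demo) so that it leaves no files behind.

```python
import logging
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "test.log")

    handler = logging.FileHandler(path, encoding="utf-8")
    handler.setFormatter(logging.Formatter("%(levelname)s:%(message)s"))

    logger = logging.getLogger("utf8_demo")  # hypothetical logger name
    logger.propagate = False
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)

    logger.info("中文日志")  # "Chinese log"

    # Close the file before reading it and before the directory is removed.
    handler.close()
    logger.removeHandler(handler)

    with open(path, encoding="utf-8") as f:
        content = f.read()

print(content)
```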

2. Temporarily disable log output

Sometimes we want to turn log output off for now and turn it back on later. If the information were printed with print(), every print() call would have to be commented out. With logging, the log can be switched off in one step. One way, when using the default configuration, is to pass a log level to logging.disable(), which disables all log output at or below that level. The other way, when using a custom Logger, is to set the disabled attribute of the Logger object to True; the default is False, that is, not disabled.

logging.disable(logging.INFO)

logger.disabled = True
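Both switches can be tried out and then reversed, as in this sketch. The logger name disable_demo is hypothetical, and the records go to an in-memory stream; passing logging.NOTSET to logging.disable() removes the global threshold again.

```python
import io
import logging

stream = io.StringIO()
logger = logging.getLogger("disable_demo")  # hypothetical logger name
logger.propagate = False
logger.setLevel(logging.DEBUG)
logger.addHandler(logging.StreamHandler(stream))

logger.info("before")

logging.disable(logging.INFO)      # global switch: INFO and below are suppressed
logger.info("suppressed info")
logger.warning("warning still shown")
logging.disable(logging.NOTSET)    # undo the global switch

logger.disabled = True             # per-logger switch: everything is suppressed
logger.error("suppressed error")
logger.disabled = False            # undo the per-logger switch

logger.info("after")

lines = stream.getvalue().splitlines()
print(lines)
```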

3. Log files are divided by time or size

If all logs are saved in one file, that file grows large over time or when there are many logs, which makes it hard to back up or read. Can the log files be split by time or by size? Yes, and it is simple, because logging already covers this need. The logging.handlers module provides the TimedRotatingFileHandler and RotatingFileHandler classes, which split files by time and by size respectively. Both inherit from the base class BaseRotatingHandler. The logging.handlers module also contains Handler classes with other functions.

# Constructor of the TimedRotatingFileHandler class
def __init__(self, filename, when='h', interval=1, backupCount=0, encoding=None, delay=False, utc=False, atTime=None):

# Constructor of the RotatingFileHandler class
def __init__(self, filename, mode='a', maxBytes=0, backupCount=0, encoding=None, delay=False):

The example code is as follows:

# One log file is divided every 1000 bytes, and there are three backup files

file_handler = logging.handlers.RotatingFileHandler("test.log", mode="w", maxBytes=1000, backupCount=3, encoding="utf-8")

# A log file is divided every 1 hour, interval is the time interval, and there are 10 backup files

handler2 = logging.handlers.TimedRotatingFileHandler("test.log", when="H", interval=1, backupCount=10)
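To see size-based rotation in action, the sketch below writes fifty short records through a RotatingFileHandler with a deliberately tiny limit and then lists the files that remain. The file name demo.log, the limit of 200 bytes and the logger name rotate_demo are arbitrary values chosen for the demonstration.

```python
import logging
import logging.handlers
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "demo.log")

    # Arbitrary demo values: roll over at about 200 bytes, keep two backups.
    handler = logging.handlers.RotatingFileHandler(
        path, maxBytes=200, backupCount=2, encoding="utf-8"
    )
    logger = logging.getLogger("rotate_demo")  # hypothetical logger name
    logger.propagate = False
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)

    for i in range(50):
        logger.info("message number %02d", i)

    # Close the file before listing and before the directory is removed.
    handler.close()
    logger.removeHandler(handler)

    files = sorted(os.listdir(tmp))

print(files)
```

The current file keeps its name, the backups get the suffixes .1 and .2, and older backups beyond backupCount are deleted.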

Although the official Python documentation says that the logging library is thread-safe, there are still issues worth considering in multi-process, multi-threaded, and combined multi-process and multi-threaded environments. For example, how can logs be split into different files by process (or thread), so that each process (or thread) has its own file?

Summary: the Python logging library is designed to be very flexible. If you have special needs, you can build on the basic logging library and create a new Handler class to solve the problems that arise in actual development.
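As one possible illustration of such a Handler, here is a rough sketch that routes records to one file per thread. The class PerThreadFileHandler is hypothetical and not part of the logging library; it is a simplified design that assumes thread names are unique and safe to use as file names, and it does not address the multi-process case.

```python
import logging
import os
import tempfile
import threading


class PerThreadFileHandler(logging.Handler):
    """Hypothetical handler that writes each thread's records to its own file."""

    def __init__(self, directory):
        super().__init__()
        self.directory = directory
        self._file_handlers = {}  # thread name -> FileHandler

    def emit(self, record):
        # Handler.handle() holds this handler's lock while emit() runs.
        name = record.threadName
        target = self._file_handlers.get(name)
        if target is None:
            path = os.path.join(self.directory, f"{name}.log")
            target = logging.FileHandler(path, encoding="utf-8")
            self._file_handlers[name] = target
        target.setFormatter(self.formatter)
        target.emit(record)

    def close(self):
        for target in self._file_handlers.values():
            target.close()
        super().close()


def worker(logger, count):
    for i in range(count):
        logger.info("step %d", i)


with tempfile.TemporaryDirectory() as tmp:
    handler = PerThreadFileHandler(tmp)
    handler.setFormatter(logging.Formatter("%(threadName)s:%(message)s"))

    logger = logging.getLogger("per_thread_demo")  # hypothetical logger name
    logger.propagate = False
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)

    threads = [
        threading.Thread(target=worker, args=(logger, 2), name=f"worker-{n}")
        for n in range(2)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    handler.close()
    logger.removeHandler(handler)

    contents = {}
    for file_name in sorted(os.listdir(tmp)):
        with open(os.path.join(tmp, file_name), encoding="utf-8") as f:
            contents[file_name] = f.read().splitlines()

print(contents)
```

A simpler alternative for the per-process case would be to include the process ID in the file name when the FileHandler is created.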