1. Introduction to Python logging module

1.1 importance of logging

- The programs deployed in the production environment run in a black box. They can't check the problems through debugging programs. They can't accurately reproduce the problems by observing the problem phenomena. Repairing the problems is difficult and will consume a lot of time

- Log files record relevant time records, status records, error records and other information, so as to easily track the operation status and quickly troubleshoot problems.

1.2 advantages of logging module

-

It is convenient to manage logs by importance 1

-

Facilitate log information management (record of additional log information, such as time and operation module information) 2

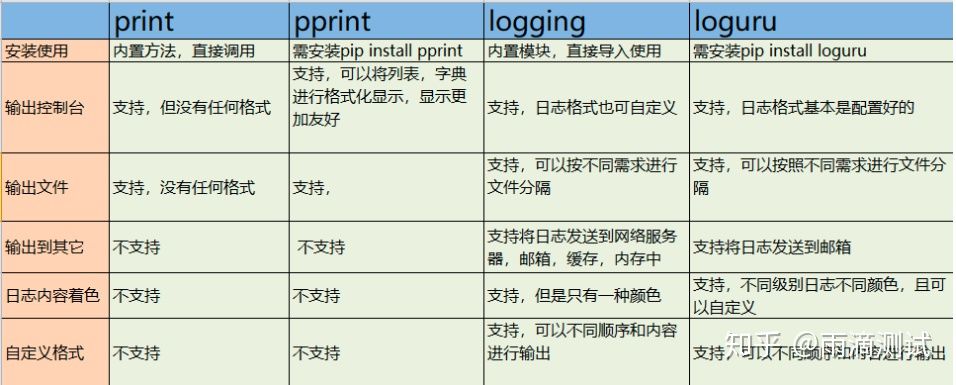

1.3 comparison of common log modules

Picture from python output log

- Print: use the program to print more quickly. It doesn't care about the log format. It just wants to see the results faster. It is generally used for a fragment of the program or single point debugging output.

- Pprint: it is more intuitive and friendly to print tuples, lists and dictionary data. If the output is in this format, pprint can be given priority

- Logging: it is more suitable for the implementation of a system or framework, and has higher requirements for logging. Logging can be given priority

- Loguru: it is also suitable for the implementation of a system or framework, but it is lighter and does not need to be configured. If the requirements for logs are not high, loguru can be given priority

2. Composition and workflow of logging module

2.1 composition of logging module

- Logger: that is, Logger Main Class, the object created during logging. We can call the logger method to pass in the log template and information to generate one Log Record after another, which is called Log Record.

- Log Record: refers to the generated log records.

- Handler: a class that handles log records. It can output log records to the specified log location and storage form.

- Formatter: the generated Log Record is also an object. If the log text is output, there needs to be a formatting process. Then this process is completed by the formatter. What is returned is the log string, and then it is returned to the Handler for processing.

- Filter: filter before saving and save the desired logs. For example, only a certain level of logs or logs containing a certain keyword are saved. Then the filtering process is left to filter.

The above is the basic architecture and object functions of the whole logging module 3 , the following is a brief description of the objects and methods of the Logging module for detailed reference[ logging — Logging facility for Python]

2.1.1 Logging module class

>>> dir(logging.Logger) ['__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', '_log', 'addFilter', 'addHandler', 'callHandlers', 'critical', 'debug', 'error', 'exception', 'fatal', 'filter', 'findCaller', 'getChild', 'getEffectiveLevel', 'handle', 'hasHandlers', 'info', 'isEnabledFor', 'log', 'makeRecord', 'manager', 'removeFilter', 'removeHandler', 'root', 'setLevel', 'warn', 'warning'] >>> dir(logging.Handler) ['__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'acquire', 'addFilter', 'close', 'createLock', 'emit', 'filter', 'flush', 'format', 'get_name', 'handle', 'handleError', 'name', 'release', 'removeFilter', 'setFormatter', 'setLevel', 'set_name'] >>> dir(logging.Formatter) ['__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'converter', 'default_msec_format', 'default_time_format', 'format', 'formatException', 'formatMessage', 'formatStack', 'formatTime', 'usesTime'] >>> dir(logging.Filter) ['__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'filter']

2.1.2 Logging module level function

logging.getLogger(name=None)

logging.getLoggerClass()

logging.debug(msg, *args, **kwargs)

logging.info(msg, *args, **kwargs)

logging.warning(msg, *args, **kwargs)

logging.error(msg, *args, **kwargs)

logging.critical(msg, *args, **kwargs)

logging.exception(msg, *args, **kwargs)

logging.basicConfig(**kwargs)

Logging Level

logger = logging.getLogger('simple_example')

logger.setLevel(logging.DEBUG)

| Level | When it's used |

|---|---|

| DEBUG | Detailed information, typically of interest only when diagnosing problems. |

| INFO | Confirmation that things are working as expected. |

| WARNING | An indication that something unexpected happened, or indicative of some problem in the near future (e.g. 'disk space low'). The software is still working as expected. |

| ERROR | Due to a more serious problem, the software has not been able to perform some function. |

| CRITICAL | A serious error, indicating that the program itself may be unable to continue running. |

LogRecord attributes

formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')

| Attribute name | Format | Description |

|---|---|---|

| args | You shouldn't need to format this yourself. | The tuple of arguments merged into msg to produce message, or a dict whose values are used for the merge (when there is only one argument, and it is a dictionary). |

| asctime | %(asctime)s | Human-readable time when the LogRecord was created. By default this is of the form '2003-07-08 16:49:45,896' (the numbers after the comma are millisecond portion of the time). |

| created | %(created)f | Time when the LogRecord was created (as returned by time.time()). |

| exc_info | You shouldn't need to format this yourself. | Exception tuple (à la sys.exc_info) or, if no exception has occurred, None. |

| filename | %(filename)s | Filename portion of pathname. |

| funcName | %(funcName)s | Name of function containing the logging call. |

| levelname | %(levelname)s | Text logging level for the message ('DEBUG', 'INFO', 'WARNING', 'ERROR', 'CRITICAL'). |

| levelno | %(levelno)s | Numeric logging level for the message (DEBUG, INFO, WARNING, ERROR, CRITICAL). |

| lineno | %(lineno)d | Source line number where the logging call was issued (if available). |

| message | %(message)s | The logged message, computed as msg % args. This is set when Formatter.format() is invoked. |

| module | %(module)s | Module (name portion of filename). |

| msecs | %(msecs)d | Millisecond portion of the time when the LogRecord was created. |

| msg | You shouldn't need to format this yourself. | The format string passed in the original logging call. Merged with args to produce message, or an arbitrary object (see Using arbitrary objects as messages). |

| name | %(name)s | Name of the logger used to log the call. |

| pathname | %(pathname)s | Full pathname of the source file where the logging call was issued (if available). |

| process | %(process)d | Process ID (if available). |

| processName | %(processName)s | Process name (if available). |

| relativeCreated | %(relativeCreated)d | Time in milliseconds when the LogRecord was created, relative to the time the logging module was loaded. |

| stack_info | You shouldn't need to format this yourself. | Stack frame information (where available) from the bottom of the stack in the current thread, up to and including the stack frame of the logging call which resulted in the creation of this record. |

| thread | %(thread)d | Thread ID (if available). |

| threadName | %(threadName)s | Thread name (if available). |

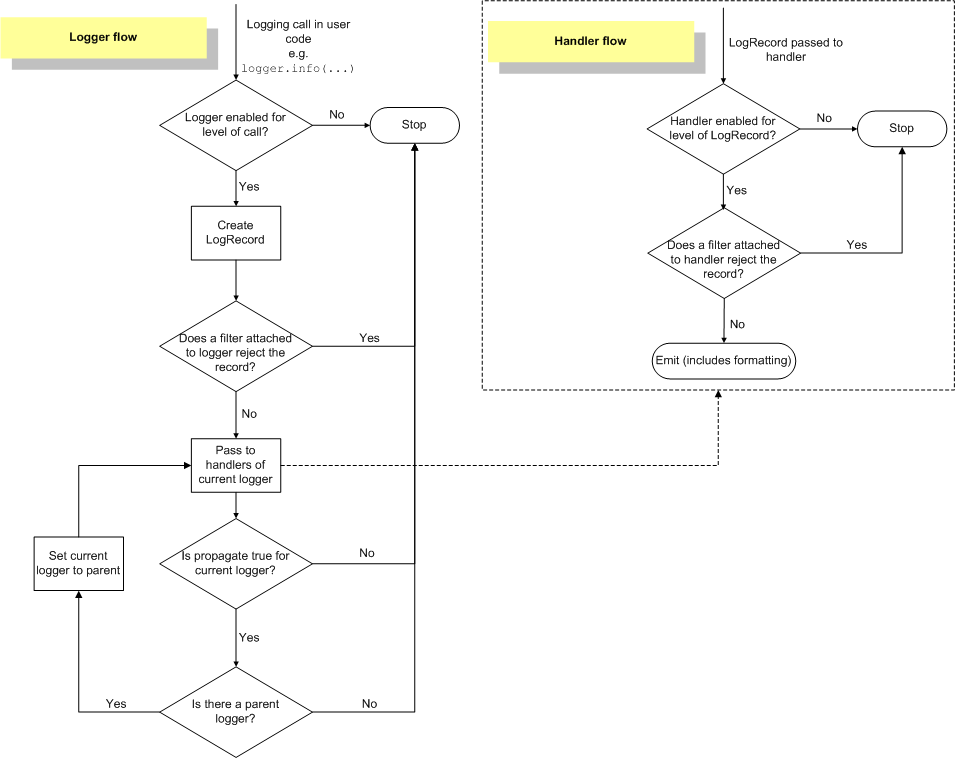

2.2 Logging workflow

The picture is taken from the official website logging-basic-tutorial

2.3 Logging configuration workflow

There are four ways to configure logging

Method 1: logging basicConfig()

"""

logging.basicConfig(

filename,

filemode,

format,

datefmt,

style,

level,

stream,

handlers

)

"""

import logging

_format = ('%(asctime)s '

'%(name)s ' # the name of logger

'%(levelname)s '

'%(filename)s '

'%(threadName)s '

'%(lineno)d: '

'%(message)s')

logging.basicConfig(

level=logging.DEBUG, # The default level is WARNING

filename='test.log',

format=_format,

)

logging.debug('logging debug')

logging.info('logging info')

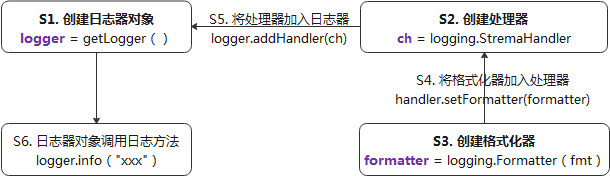

Method 2: call the configuration method directly from Python code

import logging

# create logger

logger = logging.getLogger('simple_example')

logger.setLevel(logging.DEBUG)

# create console handler and set level to debug

ch = logging.StreamHandler()

ch.setLevel(logging.DEBUG)

# create formatter

formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')

# add formatter to ch

ch.setFormatter(formatter)

# add ch to logger

logger.addHandler(ch)

# 'application' code

logger.debug('debug message')

logger.info('info message')

logger.warning('warn message')

logger.error('error message')

logger.critical('critical message')

Method 3: create a configuration file and read the configuration content from the file( fileConfig() )

import logging

import logging.config

logging.config.fileConfig('logging.conf')

# create logger

logger = logging.getLogger('simpleExample')

# 'application' code

logger.debug('debug message')

logger.info('info message')

logger.warning('warn message')

logger.error('error message')

logger.critical('critical message')

logging.conf (comments need to be removed when using the configuration file)

[loggers] keys=root,simpleExample [handlers] keys=consoleHandler [formatters] keys=simpleFormatter ########################################################## [logger_root] # Define the logger of root_ key1 level=DEBUG handlers=consoleHandler # Inherited from key in [handlers] [logger_simpleExample] # Define the logger of simpleExample_ key2 level=DEBUG handlers=consoleHandler qualname=simpleExample # Inherited from key in [loggers] propagate=0 [handler_consoleHandler] # Define the processor for window output, handlers_key1 class=StreamHandler level=DEBUG formatter=simpleFormatter # Inherit key from [formatters] args=(sys.stdout,) [formatter_simpleFormatter] # formatters_key1 format=%(asctime)s - %(name)s - %(levelname)s - %(message)s

Method 4: create a configuration file dictionary (YAML, JSON) and read the configuration content from the dictionary( dictConfig())

def setup_logging(default_path = "config/logging.json", default_level = logging.INFO,env_key = "LOG_CFG"):

path = default_path

value = os.getenv(env_key,None)

if value:

path = value

if os.path.exists(path):

with open(path,"r") as f:

config = json.load(f)

logging.config.dictConfig(config)

else:

logging.basicConfig(level = default_level)

if __name__ == '__main__':

setup_logging(default_path = "config/logging.json")

main()

Configuration file: YAML format dictionary

version: 1formatters: simple: format: '%(asctime)s - %(name)s - %(levelname)s - %(message)s'handlers: console: class: logging.StreamHandler level: DEBUG formatter: simple stream: ext://sys.stdoutloggers: simpleExample: level: DEBUG handlers: [console] propagate: noroot: level: DEBUG handlers: [console]

Configuration file: JSON format dictionary (can only be used after removing # the content)

{ "version":1, "disable_existing_loggers":false, "formatters":{ "simple":{ "format":"%(asctime)s - %(name)s - %(filename)s - %(funcName)s - %(lineno)d- %(levelname)s - %(message)s" } }, "handlers":{ "console":{ "class":"logging.StreamHandler", "level":"DEBUG", # set up handlers Output level of, next level "formatter":"simple", "stream":"ext://sys. stdout" }, "info_ file_ Handler ": {" class ":" logging. Handlers. Rotatingfilehandler "," level ":" info ", # set the output level of handlers, the next level" formatter ":" simple "," filename ":" log / info. Log "," maxbytes ": 40960," backupcount ": 20," encoding ":" utf8 "}," error_ file_ Handler ": {" class ":" logging. Handlers. Rotatingfilehandler "," level ":" error ", # set the output level of handlers, next level" formatter ":" simple "," filename ":" log / errors. Log "," maxbytes ": 40960, # single file size 1024 * 40" backupcount ": 20, # number of backup files: 20 Encoding ":" utf8 "}}," loggers ": {" my_module ": {" level ":" error ", # set the output level of the Logger" handlers ": [" info_file_handler "]," propagate ":" no "}}," root ": {" level ":" info "," handlers ": [" console "," info_file_handler "," error_file_handler "]}}

2.4 simple examples

import loggingimport logging.handlerslogger = logging.getLogger('test')logger.setLevel(level=logging.DEBUG) # Level of logging"""%(name)s: Logger Name of%(levelno)s: Print log level values%(levelname)s: Name of the print log level%(pathname)s: Print the path of the current executing program, which is actually sys.argv[0]%(filename)s: Print the name of the currently executing program%(funcName)s: Current function for printing logs%(lineno)d: Print the current line number of the log%(asctime)s: Time to print the log%(thread)d: Print thread ID%(threadName)s: Print thread name%(process)d: Printing process ID%(message)s: Print log information"""formatter = logging.Formatter('%(asctime)s - %(filename)s[line:%(lineno)d] - %(levelname)s: %(message)s')"""logging.StreamHandler -> console output logging.FileHandler -> File output logging.handlers.RotatingFileHandler -> Automatically split the log file according to the size, and regenerate the file once it reaches the specified size logging.handlers.TimedRotatingFileHandler -> Automatically split log files by time"""file_handler = logging.FileHandler('log/test.log') # Output the location of the file log and add the file location file_handler.setLevel(level=logging.INFO) # Level of output file log file_handler.setFormatter(formatter) # Format of output file log stream_handler = logging.StreamHandler() # Location and mode of output screen log stream_handler.setLevel(logging.DEBUG) # Level of output screen log stream_handler.setFormatter(formatter) # Format of output screen log# time_rotating_file_handler = logging.handlers.TimedRotatingFileHandler(filename='rotating_test.log', when='D')# time_rotating_file_handler.setLevel(logging.DEBUG)# time_rotating_file_handler.setFormatter(formatter)logger.addHandler(file_handler)logger.addHandler(stream_handler)# logger.addHandler(time_rotating_file_handler)# Run the test logger Debug ('debug level, generally used to print some debugging information, the lowest level ') logger Info ('info level, generally used to print some normal operation information ') logger Warning ('warning level, generally used to print warning information ') logger Error ('error level, usually used to print some error messages') logger Critical ('critical level, generally used to print some fatal error messages, with the highest level ')

3. Usage of logging module

3.1 log writing to file and screen

Output to file: set logging, create a FileHandler, set the format of the output message, add it to the logger, and then write the log to the specified file

Output to screen: add StreamHandler to logger to output logs to screen

Other different Handler methods

StreamHandler: logging.StreamHandler;Log output to stream, which can be sys.stderr,sys.stdout Or file FileHandler: logging.FileHandler;Log output to file BaseRotatingHandler: logging.handlers.BaseRotatingHandler;Basic log rollback method RotatingHandler: logging.handlers.RotatingHandler;Log rollback mode, which supports the maximum number of log files and log file rollback TimeRotatingHandler: logging.handlers.TimeRotatingHandler;Log rollback method: rollback log files within a certain time area SocketHandler: logging.handlers.SocketHandler;Remote output log to TCP/IP socketsDatagramHandler: logging.handlers.DatagramHandler;Remote output log to UDP socketsSMTPHandler: logging.handlers.SMTPHandler;Remote output log to email address SysLogHandler: logging.handlers.SysLogHandler;Log output to syslogNTEventLogHandler: logging.handlers.NTEventLogHandler;Remote output log to Windows NT/2000/XP Event log for MemoryHandler: logging.handlers.MemoryHandler;Log output to specified in memory bufferHTTPHandler: logging.handlers.HTTPHandler;adopt"GET"perhaps"POST"Remote output to HTTP The server

See the next section for the output of screens and documents at the same time.

3.2 multi module output( Logging from multiple modules)

Example 1: the simplest example explains the usage pattern

# myapp.pyimport loggingimport mylibdef main(): logging.basicConfig(filename='myapp.log', level=logging.INFO) logging.info('Started') mylib.do_something() logging.info('Finished')if __name__ == '__main__': main()

# mylib.pyimport loggingdef do_something(): logging.info('Doing something')

output>>> INFO:root:StartedINFO:root:Doing somethingINFO:root:Finished

Example 2: multi module tracking information source 4

# mainModule.pyimport loggingimport subModulelogger = logging.getLogger("mainModule") # The main module defines the logger'mainModule', which can be used in other modules of the python interpreter process. There is no need to repeatedly define and configure the loggerlogger of each module setLevel(level = logging.INFO)handler = logging. FileHandler("log.txt")handler. setLevel(logging.INFO)formatter = logging. Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')handler. setFormatter(formatter) console = logging. StreamHandler()console. setLevel(logging.INFO)console. setFormatter(formatter) logger. addHandler(handler)logger. addHandler(console) logger. info("creating an instance of subModule.subModuleClass")a = subModule. SubModuleClass()logger. info("calling subModule.subModuleClass.doSomething")a.doSomething()logger. info("done with subModule.subModuleClass.doSomething")logger. info("calling subModule.some_function")subModule. som_ function()logger. info("done with subModule.some_function")

import logging module_logger = logging.getLogger("mainModule.sub") # Any logger starting with 'mainmodule' is its child loggerclass submoduleclass (object): def__ init__ (self): self. logger = logging. getLogger("mainModule.sub.module") self. logger. info("creating an instance in SubModuleClass") def doSomething(self): self. logger. info("do something in SubModule") a = [] a.append(1) self. logger. debug("list a = " + str(a)) self. logger. info("finish something in SubModuleClass") def som_ function(): module_ logger. info("call function some_function")

output>>>2016-10-09 20:25:42,276 - mainModule - INFO - creating an instance of subModule.subModuleClass2016-10-09 20:25:42,279 - mainModule.sub.module - INFO - creating an instance in SubModuleClass2016-10-09 20:25:42,279 - mainModule - INFO - calling subModule.subModuleClass.doSomething2016-10-09 20:25:42,279 - mainModule.sub.module - INFO - do something in SubModule2016-10-09 20:25:42,279 - mainModule.sub.module - INFO - finish something in SubModuleClass2016-10-09 20:25:42,279 - mainModule - INFO - done with subModule.subModuleClass.doSomething2016-10-09 20:25:42,279 - mainModule - INFO - calling subModule.some_function2016-10-09 20:25:42,279 - mainModule.sub - INFO - call function some_function2016-10-09 20:25:42,279 - mainModule - INFO - done with subModule.some_function

3.3 Logging records Traceback information

The traceback module in Python is used to track the exception return information. You can record the traceback in logging The revised part is taken from the reference article 3.

import logginglogger = logging.getLogger(__name__)logger.setLevel(level = logging.INFO)handler = logging.FileHandler("log.txt")handler.setLevel(logging.INFO)formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')handler.setFormatter(formatter) console = logging.StreamHandler()console.setLevel(logging.INFO) logger.addHandler(handler)logger.addHandler(console) logger.info("Start print log")logger.debug("Do something")logger.warning("Something maybe fail.")try: open("sklearn.txt","rb")except (SystemExit,KeyboardInterrupt): raiseexcept Exception: logger.error("Faild to open sklearn.txt from logger.error",exc_info = True) # Method 1 logger.exception("Failed to open sklearn.txt from logger.exception") # Method 2 logger info("Finish")

Start print logSomething maybe fail.Faild to open sklearn.txt from logger.errorTraceback (most recent call last): File "G:\zhb7627\Code\Eclipse WorkSpace\PythonTest\test.py", line 23, in <module> open("sklearn.txt","rb")IOError: [Errno 2] No such file or directory: 'sklearn.txt'Finish

3.4 output progress information

logging.info("Operating progress: {:.1f}%, {} ".format(num/cycle_No*100, ">" * (num // 2)))

https://zhuanlan.zhihu.com/p/56095714