Analysis of login process

Over the past few days I have been studying Baidu's login flow and the Tieba (post bar) check-in. Baidu is an Internet giant, and its login process is extremely complicated and nasty; after several days I still had not figured it out. So I decided to pick a soft persimmon to squeeze first, that is, an easier target, and chose CSDN.

The process is simple, so I will skip the screenshots. Open the browser, start Fiddler, and log in to CSDN. Fiddler then shows the browser sending a POST request to https://passport.csdn.net/account/login?ref=toolbar. The request carries the login form, and the form fields themselves are not encrypted. CSDN does use HTTPS, though, so the credentials are still protected in transit.

The request body is shown below. The username and password fields are, of course, the account name and password.

username=XXXXX&password=XXXXXX&rememberMe=true&lt=LT-461600-wEKpWAqbfZoULXmFmDIulKPbL44hAu&execution=e4s1&_eventId=submit
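To see at a glance which fields the form carries, the body can be decoded with the standard library. This is only a small sketch based on the masked sample above; the variable names are my own.

```python
from urllib.parse import parse_qs

# Masked request body as captured in Fiddler (values taken from the sample above).
body = ("username=XXXXX&password=XXXXXX&rememberMe=true"
        "&lt=LT-461600-wEKpWAqbfZoULXmFmDIulKPbL44hAu"
        "&execution=e4s1&_eventId=submit")

# parse_qs maps each field name to a list of values; keep the first value of each.
fields = {name: values[0] for name, values in parse_qs(body).items()}

for name, value in fields.items():
    print(f"{name} = {value}")
```

Besides username and password, the body carries rememberMe, lt, execution and _eventId, so those are the values a script has to supply as well.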

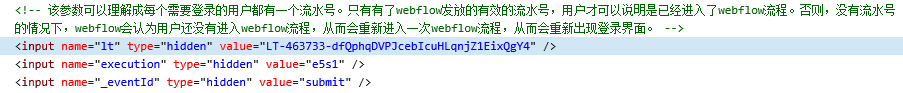

I did not know what the lt parameter was at first, but it turns out that it, like the other extra parameters, sits right in the login form on the page, so everything we need is accounted for. CSDN is thoughtful enough to have annotated these fields with comments. As an aside, if you open Baidu's home page, you will find that the browser console also prints Baidu's recruitment information.
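The hidden fields can be read out of the login form programmatically. The sketch below uses only the standard library's html.parser on a hypothetical, trimmed-down copy of the form. The class name HiddenInputCollector and the sample markup are my own assumptions, not CSDN's actual page; the full script further down uses BeautifulSoup for the same job.

```python
from html.parser import HTMLParser


class HiddenInputCollector(HTMLParser):
    """Collect name/value pairs of <input type="hidden"> elements."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attr = dict(attrs)
        if attr.get("type") == "hidden" and "name" in attr:
            self.fields[attr["name"]] = attr.get("value", "")


# Hypothetical, simplified login form; the real page contains far more markup.
sample_form = """
<form id="fm1" method="post">
  <input type="text" name="username">
  <input type="password" name="password">
  <input type="hidden" name="lt" value="LT-461600-wEKpWAqbfZoULXmFmDIulKPbL44hAu">
  <input type="hidden" name="execution" value="e4s1">
  <input type="hidden" name="_eventId" value="submit">
</form>
"""

collector = HiddenInputCollector()
collector.feed(sample_form)
print(collector.fields)
```

Collecting the fields by name in a single pass also avoids repeating several near-identical lookup lines by hand.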

Login Code

With all this information we can log in. Enough talk, let's go straight to the code. First, though, a few pitfalls I ran into.

The first was a parameter error. The logic was fine, but after copying and pasting a line of code I forgot to change the names, so the form I submitted had all three hidden parameters set to the value of lt. As a result, the login returned an error page. I stared at the requests for most of a day without seeing anything wrong, and only found the mistake after debugging with Fiddler many times.

The second problem is CSDN's sneaky redirect. A browser has its own JS engine, so when we enter an address in the browser, reaching the page does not necessarily take a single request. JS code may send the browser to an intermediate page first and only then to the actual page. This is what the _validate_redirect_url(self) function in the code handles. The first request after logging in returns an intermediate page that contains a chunk of JS code, including a redirect address. We have to request that address once ourselves; after it returns 200 OK, subsequent requests get the actual page.
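The extraction step can be tried in isolation. In the sketch below only the `var redirect = "...";` pattern comes from the script; the intermediate page, the example.com address and the helper name extract_redirect_url are made up for illustration.

```python
import re

# Hypothetical intermediate page. Only the `var redirect = "...";` line follows
# the pattern used in the script; everything else is invented.
intermediate_page = """
<html><head><script type="text/javascript">
    var redirect = "http://example.com/postlist?ticket=ST-0000";
    window.location.href = redirect;
</script></head><body></body></html>
"""

REDIRECT_PATTERN = re.compile(r'var redirect = "(\S+)";')


def extract_redirect_url(html):
    """Return the redirect target embedded in the page, or None if absent."""
    match = REDIRECT_PATTERN.search(html)
    return match.group(1) if match else None


print(extract_redirect_url(intermediate_page))
print(extract_redirect_url("<html>no redirect here</html>"))
```

Returning None when nothing matches lets the caller skip the extra request instead of failing with an IndexError when the page is already the real one.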

The third problem is matching whitespace on the page with regular expressions. Before fetching the articles we need to know how many there are. That is easy, because the page shows the count in a form like 100条 共20页 (100 articles, 20 pages in total). So how do we extract it? At first I used (\d+)条 共(\d+)页, but it did not match. Looking at the page carefully, I found that the two parts are separated by two spaces, not one. This is easy to deal with: change the pattern to (\d+)条\s*共(\d+)页. So whenever whitespace is involved, match it with \s and stop worrying about whether there is one space or two.
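The difference between the two patterns is easy to check on sample strings. The strings below are assumptions modelled on the pager text described above (条 marks the article count, 共...页 the total number of pages), not text copied from the live page.

```python
import re

# Assumed pager strings: one with a single space, one with two spaces.
one_space = "100条 共20页"
two_spaces = "100条  共20页"

strict = re.compile(r"(\d+)条 共(\d+)页")      # expects exactly one space
tolerant = re.compile(r"(\d+)条\s*共(\d+)页")  # accepts any amount of whitespace

print(strict.findall(one_space))     # [('100', '20')]
print(strict.findall(two_spaces))    # []
print(tolerant.findall(two_spaces))  # [('100', '20')]
```

The strict pattern silently returns an empty list on the two-space string, which is why the failure is easy to miss until result[0] raises an IndexError.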

    import re
    import urllib.parse as parse

    import requests
    from bs4 import BeautifulSoup


    class CsdnHelper:
        """Log in to CSDN and list all articles."""

        csdn_login_url = 'https://passport.csdn.net/account/login?ref=toolbar'
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/56.0.2924.87 Safari/537.36',
        }
        blog_url = 'http://write.blog.csdn.net/postlist/'

        def __init__(self):
            self._session = requests.session()
            # Update instead of replacing, so the session keeps its default headers.
            self._session.headers.update(CsdnHelper.headers)

        def login(self, username, password):
            """Main login function."""
            form_data = self._prepare_login_form_data(username, password)
            response = self._session.post(CsdnHelper.csdn_login_url, data=form_data)
            # The UserNick cookie is taken as the sign of a successful login.
            if 'UserNick' in response.cookies:
                nick = response.cookies['UserNick']
                print(parse.unquote(nick))
            else:
                raise Exception('Login failed')

        def _prepare_login_form_data(self, username, password):
            """Read the hidden parameters from the login page and build the form."""
            response = self._session.get(CsdnHelper.csdn_login_url)
            login_page = BeautifulSoup(response.text, 'lxml')
            login_form = login_page.find('form', id='fm1')

            lt = login_form.find('input', attrs={'name': 'lt'})['value']
            execution = login_form.find('input', attrs={'name': 'execution'})['value']
            event_id = login_form.find('input', attrs={'name': '_eventId'})['value']
            return {
                'username': username,
                'password': password,
                'lt': lt,
                'execution': execution,
                '_eventId': event_id,
            }

        def _get_blog_count(self):
            """Get the number of articles and the number of pages."""
            self._validate_redirect_url()
            response = self._session.get(CsdnHelper.blog_url)
            blog_page = BeautifulSoup(response.text, 'lxml')
            span = blog_page.find('div', class_='page_nav').span
            print(span.string)
            # Pager text looks like "100条  共20页"; \s* tolerates any number of spaces.
            pattern = re.compile(r'(\d+)条\s*共(\d+)页')
            result = pattern.findall(span.string)
            blog_count = int(result[0][0])
            page_count = int(result[0][1])
            return (blog_count, page_count)

        def _validate_redirect_url(self):
            """Request the JS redirect address found on the intermediate page."""
            response = self._session.get(CsdnHelper.blog_url)
            redirect_url = re.findall(r'var redirect = "(\S+)";', response.text)[0]
            self._session.get(redirect_url)

        def print_blogs(self):
            """Print the title and link of every article."""
            blog_count, page_count = self._get_blog_count()
            for index in range(1, page_count + 1):
                url = f'http://write.blog.csdn.net/postlist/0/0/enabled/{index}'
                response = self._session.get(url)
                page = BeautifulSoup(response.text, 'lxml')
                links = page.find_all('a', href=re.compile(r'http://blog.csdn.net/u011054333/article/details/(\d+)'))
                print(f'----------Page {index}----------')
                for link in links:
                    blog_name = link.string
                    blog_url = link['href']
                    print(f'Article title: <{blog_name}> Article link: {blog_url}')


    if __name__ == '__main__':
        csdn_helper = CsdnHelper()
        username = input('Enter your username: ')
        password = input('Enter your password: ')
        csdn_helper.login(username, password)
        csdn_helper.print_blogs()

Of course, the most important part here is the login process; once logged in, we can do other things. For example, the next step could be a backup tool that downloads all the articles and images of a CSDN blog to local disk. Interested readers can give it a try.