PyTorch learning notes (5): dimension transformations in PyTorch basics

view/reshape

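view and reshape are used in essentially the same way. view is the API from PyTorch 0.3; reshape was added in PyTorch 0.4 so that the reshaping function has the same name as in NumPy. The practical difference is that view never copies data and fails if the tensor's memory layout is incompatible with the new shape, whereas reshape returns a view when it can and falls back to a copy otherwise.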

In [1]: import torch

In [2]: a = torch.rand(4,1,28,28)  # random 4-D tensor standing in for a batch of grayscale images: [batch, channel, height, width]

In [3]: a.shape
Out[3]: torch.Size([4, 1, 28, 28])

In [4]: a.view(4,28*28)  # no error as long as the total number of elements is unchanged; this merges channel, height and width
Out[4]:
tensor([[0.3060, 0.2680, 0.3763, ..., 0.6596, 0.5645, 0.8772],
        [0.7751, 0.9969, 0.0864, ..., 0.7230, 0.4374, 0.1659],
        [0.5146, 0.5350, 0.1214, ..., 0.2056, 0.2646, 0.8539],
        [0.5737, 0.5637, 0.2420, ..., 0.7731, 0.6198, 0.6113]])

In [5]: a.view(4,28*28).shape
Out[5]: torch.Size([4, 784])

In [6]: a.view(4*28,28).shape  # merges batch, channel and height
Out[6]: torch.Size([112, 28])

In [7]: a.view(4*1,28,28).shape  # merges batch and channel
Out[7]: torch.Size([4, 28, 28])

In [8]: b = a.view(4,784)

In [9]: b.view(4,28,28,1)  # runs without error, but the dimension order no longer matches the original [b, c, h, w]
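As a quick check of the rule that only the total number of elements matters, here is a small sketch. It also passes `-1`, which asks view to infer that one dimension from the element count; the notes above do not use it, but it is part of the standard view/reshape API.

```python
import torch

# Same shape as the session above: [batch, channel, height, width]
a = torch.rand(4, 1, 28, 28)

# -1 lets view infer the remaining size: 1*28*28 = 784
flat = a.view(4, -1)
print(flat.shape)  # torch.Size([4, 784])

# view shares storage with `a`; no data is copied
print(flat.data_ptr() == a.data_ptr())  # True

# A target shape with a different element count is rejected
try:
    a.view(4, 700)
    view_error = None
except RuntimeError as err:
    view_error = err
    print("view failed:", err)
```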

Drawback: to restore the data after a view, you must know the order in which it is stored. Restoring it with the dimensions in the wrong order scrambles the data ("data pollution"), as in the last two lines of the code above.
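A minimal sketch of this pitfall, using deterministic values from `torch.arange` (an illustrative choice) and three channels so that the wrong order is actually visible. Flattening and viewing back with the same dimension order restores the tensor exactly; viewing it as `[b, h, w, c]` is accepted but does not move the channel axis, which only `permute` does.

```python
import torch

# Values 0..95 laid out as [b, c, h, w] = [2, 3, 4, 4]
a = torch.arange(2 * 3 * 4 * 4).view(2, 3, 4, 4)
flat = a.view(2, -1)  # [b, c*h*w]

# Same dimension order as the original: exact round trip
restored = flat.view(2, 3, 4, 4)
print(torch.equal(restored, a))  # True

# Same element count, so view accepts it, but it only regroups
# the numbers in memory order instead of moving the channel axis
wrong = flat.view(2, 4, 4, 3)
correct_hwc = a.permute(0, 2, 3, 1)  # the real [b, h, w, c] layout
print(torch.equal(wrong, correct_hwc))  # False

# Channel values of pixel (0, 0) in the first image
print(wrong[0, 0, 0].tolist())        # [0, 1, 2]
print(correct_hwc[0, 0, 0].tolist())  # [0, 16, 32]
```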

Adding/removing dimensions: squeeze/unsqueeze

Add dimensions using unsqueeze

In [12]: a.unsqueeze(0).shape  # the argument is the index at which the new dimension is inserted; 0 inserts it in front
Out[12]: torch.Size([1, 4, 1, 28, 28])

In [13]: a.shape
Out[13]: torch.Size([4, 1, 28, 28])

In [15]: a.unsqueeze(-1).shape  # negative indices count from the end: -1 is the last position, -2 the second to last
Out[15]: torch.Size([4, 1, 28, 28, 1])

In [16]: a.unsqueeze(4).shape  # index 4 is the fifth position, since indices start at 0
Out[16]: torch.Size([4, 1, 28, 28, 1])

In [17]: a.unsqueeze(-4).shape  # the result has five dimensions, and the fourth from the end is the second position
Out[17]: torch.Size([4, 1, 1, 28, 28])

In [18]: a.unsqueeze(-5).shape  # the fifth from the end of the 5-D result is the first position
Out[18]: torch.Size([1, 4, 1, 28, 28])

In [19]: a.unsqueeze(5).shape  # the result has only five dimensions (indices 0 to 4), so 5 is out of range
---------------------------------------------------------------------------
RuntimeError                              Traceback (most recent call last)
<ipython-input-19-b54eab361a50> in <module>
----> 1 a.unsqueeze(5).shape

RuntimeError: Dimension out of range (expected to be in range of [-5, 4], but got 5)

In [20]: a = torch.tensor([1.2,2.3])

In [21]: a.unsqueeze(-1).shape
Out[21]: torch.Size([2, 1])

In [22]: a.shape
Out[22]: torch.Size([2])

In [23]: a.unsqueeze(-1)
Out[23]:
tensor([[1.2000],
        [2.3000]])

In [24]: a
Out[24]: tensor([1.2000, 2.3000])

In [25]: a.unsqueeze(0)
Out[25]: tensor([[1.2000, 2.3000]])

In [26]: a.unsqueeze(0).shape
Out[26]: torch.Size([1, 2])
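The index rule from the session above can be checked mechanically: for an n-dimensional tensor the valid positions are -(n+1) to n, and a negative position d is the same as d + n + 1. The sketch below verifies this for the 4-D example, then shows one typical use; the per-channel `bias` of size 32 is a hypothetical example, not something from the notes.

```python
import torch

a = torch.rand(4, 1, 28, 28)
n = a.dim()  # 4, so unsqueeze produces 5 dimensions

# Valid positions are -(n + 1) .. n, i.e. [-5, 4] here
for d in range(-(n + 1), n + 1):
    same = d if d >= 0 else d + n + 1  # negative d maps to d + n + 1
    assert a.unsqueeze(d).shape == a.unsqueeze(same).shape
    print(d, tuple(a.unsqueeze(d).shape))

# Hypothetical use: turn a per-channel bias [32] into [1, 32, 1, 1]
bias = torch.rand(32)
bias4d = bias.unsqueeze(1).unsqueeze(2).unsqueeze(0)  # [32,1] -> [32,1,1] -> [1,32,1,1]
print(bias4d.shape)  # torch.Size([1, 32, 1, 1])
```

A tensor of shape `[1, 32, 1, 1]` can then be added to a `[4, 32, 14, 14]` feature map through broadcasting, or expanded explicitly as described in the next section.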

Delete dimensions using squeeze

If the dimension you ask squeeze to remove does not have size 1, the tensor is returned unchanged. Called with no argument, squeeze removes every dimension of size 1.

In [27]: b = torch.rand(1,32,1,1)

In [28]: b.shape
Out[28]: torch.Size([1, 32, 1, 1])

In [29]: b.squeeze().shape  # no argument: remove every dimension of size 1
Out[29]: torch.Size([32])

In [30]: b.squeeze(0).shape  # remove the first dimension
Out[30]: torch.Size([32, 1, 1])

In [31]: b.squeeze(-1).shape  # remove the last dimension
Out[31]: torch.Size([1, 32, 1])

In [32]: b.squeeze(1).shape  # the second dimension has size 32, not 1, so nothing changes
Out[32]: torch.Size([1, 32, 1, 1])

In [34]: b.squeeze(-4).shape  # -4 is the fourth dimension from the end, i.e. the first dimension
Out[34]: torch.Size([32, 1, 1])
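Because squeeze without an argument removes every size-1 dimension, it also drops a batch dimension that happens to be 1. A small sketch, assuming a single-sample batch of shape `[1, 32, 1, 1]`, of squeezing only the dimensions you mean to remove:

```python
import torch

# A batch that happens to contain one sample: [1, 32, 1, 1]
x = torch.rand(1, 32, 1, 1)

# No argument: the batch axis disappears too
print(x.squeeze().shape)  # torch.Size([32])

# Naming the dimensions keeps the batch axis
print(x.squeeze(-1).squeeze(-1).shape)  # torch.Size([1, 32])

# Squeezing a dimension whose size is not 1 is a no-op, not an error
print(x.squeeze(1).shape)  # torch.Size([1, 32, 1, 1])
```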

Dimension expansion: expand/repeat

Difference: expand does not copy any data. It only changes how the existing data is viewed, so it saves memory (the recommended operation).

repeat actually copies the data into newly allocated memory.

Expand dimensions with expand

There are two conditions for using expand:

1. The target shape must have the same number of dimensions as the original tensor (expand can also add new leading dimensions, but it cannot insert new dimensions anywhere else).

2. Only dimensions of size 1 can be expanded; every other dimension must keep its original size.

In [35]: a = torch.rand(4,32,14,14)

In [36]: b = torch.rand(1,32,1,1)  # expand b to the same shape as a

In [37]: a.shape
Out[37]: torch.Size([4, 32, 14, 14])

In [38]: b.shape
Out[38]: torch.Size([1, 32, 1, 1])

In [39]: b.expand(4,32,14,14).shape  # every expanded dimension has size 1 in b
Out[39]: torch.Size([4, 32, 14, 14])

In [40]: b.expand(-1,32,-1,-1).shape  # -1 keeps the original size of that dimension
Out[40]: torch.Size([1, 32, 1, 1])

In [41]: b.expand(-1,32,-1,-4).shape  # the version used here raised no error, but this is a bug: only -1 has a defined meaning, so never pass other negative sizes
Out[41]: torch.Size([1, 32, 1, -4])
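To see why expand saves memory, the sketch below inspects the expanded tensor's storage pointer and strides: the expanded dimensions get stride 0, so every index along them reads the same stored value. It also checks the two conditions listed above, including the case of adding a leading dimension.

```python
import torch

b = torch.rand(1, 32, 1, 1)
e = b.expand(4, 32, 14, 14)

# Same storage as b: nothing was copied
print(e.data_ptr() == b.data_ptr())  # True

# Expanded dimensions have stride 0; dim1 keeps its original stride
print(e.stride())  # (0, 1, 0, 0)

# A dimension whose size is not 1 (here 32) cannot be expanded
try:
    b.expand(4, 64, 14, 14)
    expand_error = None
except RuntimeError as err:
    expand_error = err
    print("expand failed:", err)

# New dimensions may be added in front: [3] -> [2, 3]
v = torch.tensor([1.0, 2.0, 3.0])
print(v.expand(2, 3).shape)  # torch.Size([2, 3])
```

Since the expanded elements share memory, it is safest to treat an expanded tensor as read-only.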

Use repeat to extend dimensions (not recommended)

The arguments differ from expand: expand takes the size of each dimension after expansion, while repeat takes the number of times each dimension is copied.

In [42]: b.shape
Out[42]: torch.Size([1, 32, 1, 1])

In [43]: b.repeat(4,1,1,1).shape  # dim0 is repeated 4 times, the other dimensions once
Out[43]: torch.Size([4, 32, 1, 1])

In [44]: b.repeat(4,32,1,1).shape  # dim0 repeated 4 times, dim1 32 times
Out[44]: torch.Size([4, 1024, 1, 1])

In [45]: b.repeat(4,1,32,32).shape  # dim0 repeated 4 times, dim2 and dim3 32 times each
Out[45]: torch.Size([4, 32, 32, 32])
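A sketch comparing the two approaches on the same input: repeating `b` with counts `(4, 1, 14, 14)` produces the same values as expanding it to `(4, 32, 14, 14)`, but repeat allocates a new, contiguous tensor while expand returns a non-contiguous view of the original storage.

```python
import torch

b = torch.rand(1, 32, 1, 1)

r = b.repeat(4, 1, 14, 14)   # copy counts -> [4, 32, 14, 14]
e = b.expand(4, 32, 14, 14)  # target sizes -> [4, 32, 14, 14]

print(torch.equal(r, e))              # True: identical values
print(r.data_ptr() == b.data_ptr())   # False: repeat allocated new memory
print(r.is_contiguous(), e.is_contiguous())  # True False
```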

Transposing dimensions: transpose/t/permute

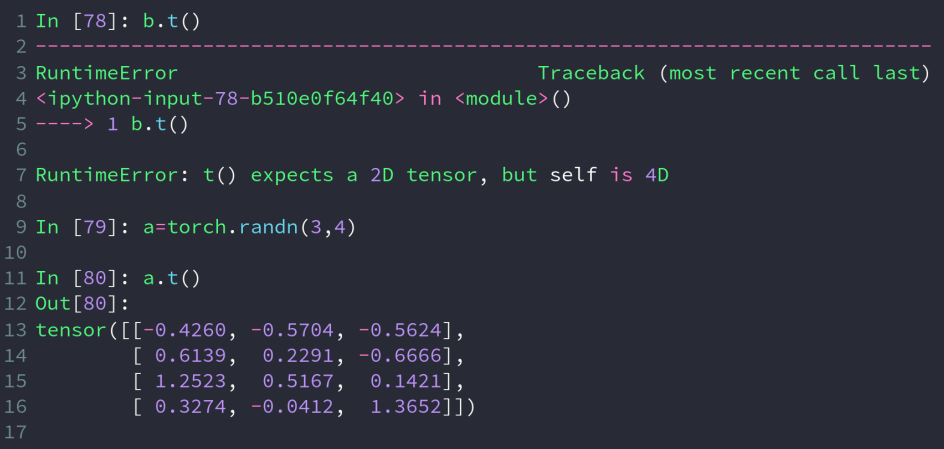

Use t for dimension transpose

t only works on tensors with at most two dimensions (matrices; 0-D and 1-D tensors are returned as-is). Calling it on a tensor with more than two dimensions raises an error.
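A short sketch of both cases: on a matrix, `t()` gives the same result as `transpose(0, 1)`, and on a 3-D tensor it raises a `RuntimeError`.

```python
import torch

m = torch.rand(2, 3)
print(m.t().shape)  # torch.Size([3, 2])

# For a matrix, t() is the same as swapping dims 0 and 1
print(torch.equal(m.t(), m.transpose(0, 1)))  # True

x = torch.rand(2, 3, 4)
try:
    x.t()
    t_error = None
except RuntimeError as err:
    t_error = err
    print("t() failed:", err)
```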

Transposing with transpose

The code calls contiguous() because transposing does not move any data: it only changes the strides, so the elements are no longer stored contiguously in the new dimension order. view cannot merge dimensions of such a tensor, so contiguous() first copies the data into a contiguous block. This comes from the way PyTorch stores tensor data; please refer to the link.
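The sketch below reproduces the situation with `is_contiguous()`: after `transpose(1, 3)` the tensor is no longer contiguous, `view` refuses to flatten it, and both `contiguous().view(...)` and `reshape(...)` succeed with identical results (reshape copies only when it has to).

```python
import torch

a = torch.rand(4, 3, 32, 32)   # [b, c, h, w]
at = a.transpose(1, 3)         # [b, w, h, c], same storage, new strides
print(a.is_contiguous(), at.is_contiguous())  # True False

# view cannot merge dimensions whose strides no longer line up
try:
    at.view(4, 3 * 32 * 32)
    view_error = None
except RuntimeError as err:
    view_error = err

# contiguous() copies the data into [b, w, h, c] order, then view works
v = at.contiguous().view(4, 3 * 32 * 32)

# reshape does the same in one call
r = at.reshape(4, 3 * 32 * 32)
print(torch.equal(v, r))  # True
```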

In [15]: a.shape
Out[15]: torch.Size([4, 3, 32, 32])  # [b, c, h, w]

In [16]: a1 = a.transpose(1,3).view(4,3*32*32).view(4,3,32,32)  # without contiguous(), view raises an error
---------------------------------------------------------------------------
RuntimeError                              Traceback (most recent call last)
<ipython-input-16-262e84a7fdcb> in <module>
----> 1 a1 = a.transpose(1,3).view(4,3*32*32).view(4,3,32,32)

RuntimeError: invalid argument 2: view size is not compatible with input tensor's size and stride (at least one dimension spans across two contiguous subspaces). Call .contiguous() before .view(). at c:\a\w\1\s\windows\pytorch\aten\src\th\generic/THTensor.cpp:213

In [17]: a1 = a.transpose(1,3).contiguous().view(4,3*32*32).view(4,3,32,32)
# transpose(1,3) swaps dim1 and dim3: [b, c, h, w] ==> [b, w, h, c] = [4, 32, 32, 3]
# view(4,3*32*32) flattens it to [b, w*h*c]
# view(4,3,32,32) restores the shape of [b, c, h, w], but the elements are still in w-h-c order, so the data is scrambled

In [18]: a2 = a.transpose(1,3).contiguous().view(4,3*32*32).view(4,32,32,3).transpose(1,3)
# transpose(1,3) and view(4,3*32*32) as above give [b, w*h*c]
# view(4,32,32,3) restores [b, w, h, c]
# transpose(1,3) swaps back to [b, c, h, w], in the same order as the source data

In [19]: a1.shape, a2.shape
Out[19]: (torch.Size([4, 3, 32, 32]), torch.Size([4, 3, 32, 32]))

In [20]: torch.all(torch.eq(a,a1))  # 1 if every element is equal, otherwise 0 (newer PyTorch versions return a bool tensor instead)
Out[20]: tensor(0, dtype=torch.uint8)

In [21]: torch.all(torch.eq(a,a2))
Out[21]: tensor(1, dtype=torch.uint8)

Transposing with permute

# Convert [b, c, h, w] ==> [b, h, w, c] using transpose and permute respectively
In [22]: a = torch.rand(4,3,28,28)

In [23]: a.transpose(1,3).shape  # with h == w, the shape cannot show whether h and w ended up in the right order
Out[23]: torch.Size([4, 28, 28, 3])

In [24]: b = torch.rand(4,3,28,32)

In [25]: b.transpose(1,3).shape  # [b,c,h,w] ==> [b,w,h,c]
Out[25]: torch.Size([4, 32, 28, 3])

In [26]: b.transpose(1,3).transpose(1,2).shape  # [b,c,h,w] ==> [b,w,h,c] ==> [b,h,w,c]
Out[26]: torch.Size([4, 28, 32, 3])

In [27]: b.permute(0,2,3,1).shape  # [b,c,h,w] ==> [b,h,w,c] in one step: the arguments are the old dimension indices in their new order
Out[27]: torch.Size([4, 28, 32, 3])
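A sketch that checks the last two results agree element by element, not just in shape, and shows one way to undo a permute. Using `torch.argsort` to compute the inverse order is an illustrative choice, not something from the notes: for a permutation `(0, 2, 3, 1)`, argsort returns `(0, 3, 1, 2)`, which maps `[b, h, w, c]` back to `[b, c, h, w]`.

```python
import torch

b = torch.rand(4, 3, 28, 32)  # [b, c, h, w]

# Two transposes and one permute reach the same [b, h, w, c] tensor
via_transpose = b.transpose(1, 3).transpose(1, 2)
via_permute = b.permute(0, 2, 3, 1)
print(torch.equal(via_transpose, via_permute))  # True

# The inverse of a permutation is its argsort
perm = torch.tensor([0, 2, 3, 1])
inverse = torch.argsort(perm).tolist()
print(inverse)  # [0, 3, 1, 2]

# Applying the inverse restores the original layout exactly
print(torch.equal(via_permute.permute(*inverse), b))  # True
```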