Use QAudioOutput to play the audio decoded by ffmpeg

A note up front: QAudioOutput is not recommended for playing media audio, because it is limited in features and difficult to control. SDL is recommended instead.

Audio data format

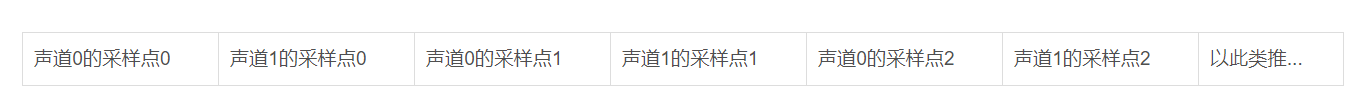

To play a raw audio stream correctly, you need to know the format of the data in addition to the data itself. The number of channels, the sampling rate and the sample data type are the most basic parts of that format. For example, a raw audio stream with 2 channels, a 48000 Hz sampling rate and an 8-bit unsigned integer sample type is stored as shown in the figure above.

In the figure, each cell is one sampling point of one channel. 48000 Hz means 48000 sampling points per channel per second, so two channels give 2 * 48000 = 96000 sampling points per second. Each sampling point is one 8-bit unsigned integer. As long as the number of channels, the sampling rate and the sample data type of data arranged in this way are known, the audio can be played.
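The arithmetic above can be sketched in a few lines of C++. This is only an illustration of the interleaved layout described here; the function names `bytesPerSecond` and `sampleOffset` are made up for this example and are not part of ffmpeg or Qt. A "frame" here means one sampling point for every channel at the same instant.

```cpp
#include <cassert>
#include <cstddef>

// Bytes occupied by one second of interleaved raw audio.
// (Hypothetical helper, for illustration only.)
std::size_t bytesPerSecond(int channels, int sampleRate, int bytesPerSample) {
    assert(channels > 0 && sampleRate > 0 && bytesPerSample > 0);
    return static_cast<std::size_t>(channels) * sampleRate * bytesPerSample;
}

// Byte offset of one sampling point in an interleaved stream:
// sampling points are stored frame by frame, and channel by channel
// inside each frame.
std::size_t sampleOffset(std::size_t frameIndex, int channel,
                         int channels, int bytesPerSample) {
    assert(channel >= 0 && channel < channels && bytesPerSample > 0);
    return (frameIndex * channels + channel) * bytesPerSample;
}
```

For the 2-channel, 48000 Hz, 8-bit example, `bytesPerSecond(2, 48000, 1)` gives the 96000 sampling points (one byte each) mentioned above.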

Audio data format in ffmpeg

The audio data format can be read from the decoding context, the AVCodecContext structure:

/* audio only */

int sample_rate; ///< samples per second

int channels; ///< number of audio channels

/**

* audio sample format

* - encoding: Set by user.

* - decoding: Set by libavcodec.

*/

enum AVSampleFormat sample_fmt; ///< sample format

These are the sampling rate, the number of channels and the sample data format, respectively.

The data format AVSampleFormat is as follows:

enum AVSampleFormat {

AV_SAMPLE_FMT_NONE = -1,

AV_SAMPLE_FMT_U8, ///< unsigned 8 bits

AV_SAMPLE_FMT_S16, ///< signed 16 bits

AV_SAMPLE_FMT_S32, ///< signed 32 bits

AV_SAMPLE_FMT_FLT, ///< float

AV_SAMPLE_FMT_DBL, ///< double

AV_SAMPLE_FMT_U8P, ///< unsigned 8 bits, planar

AV_SAMPLE_FMT_S16P, ///< signed 16 bits, planar

AV_SAMPLE_FMT_S32P, ///< signed 32 bits, planar

AV_SAMPLE_FMT_FLTP, ///< float, planar

AV_SAMPLE_FMT_DBLP, ///< double, planar

AV_SAMPLE_FMT_S64, ///< signed 64 bits

AV_SAMPLE_FMT_S64P, ///< signed 64 bits, planar

AV_SAMPLE_FMT_NB ///< Number of sample formats. DO NOT USE if linking dynamically

};

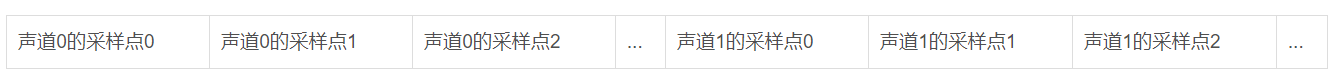

As you can see, many sample types come in two variants. For example, AV_SAMPLE_FMT_U8 and AV_SAMPLE_FMT_U8P are both 8-bit unsigned integers, but the latter has an extra P suffix, which the comment describes as planar. What does this mean?

Planar is a different memory layout. The data that ffmpeg has just decoded is often not arranged as we saw before. Instead it is grouped by channel: all samples of the first channel are stored together, then all samples of the second channel, and so on.

Such data cannot be played directly. The samples must be arranged in sampling order as before, so planar data has to be converted to non-planar (packed) form before playback.
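To make the difference between the two layouts concrete, here is a minimal sketch of a planar-to-packed conversion for 16-bit samples. It uses plain standard-library containers rather than ffmpeg frames, and `planarToPacked` is a name invented for this example. In a real player the conversion is done by libswresample, as described later in this article.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Interleave planar 16-bit audio. planes[c][i] is sample i of channel c.
// Output order: ch0[0], ch1[0], ..., ch0[1], ch1[1], ...
// (Hypothetical helper, for illustration only.)
std::vector<std::int16_t> planarToPacked(
        const std::vector<std::vector<std::int16_t>>& planes) {
    if (planes.empty()) return {};
    const std::size_t channels = planes.size();
    const std::size_t samples = planes[0].size();
    std::vector<std::int16_t> packed(channels * samples);
    for (std::size_t c = 0; c < channels; ++c) {
        assert(planes[c].size() == samples);  // every plane has the same length
        for (std::size_t i = 0; i < samples; ++i)
            packed[i * channels + c] = planes[c][i];
    }
    return packed;
}
```

With two planes holding the left and right channel, the result alternates left and right samples, which is the arrangement a playback device expects.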

Audio format in QAudioOutput

To initialize a QAudioOutput, you must first set up a QAudioFormat. With the Qt 5 API used here, it needs the following properties:

setSampleRate(int); // Set sampling rate

setChannelCount(int); // Set the number of channels

setSampleSize(int); // Set the sample size (in bits)

setSampleType(QAudioFormat::SampleType); // Set the sample data type

setCodec(QString); // Set the codec; use "audio/pcm" for a raw stream

QAudioFormat::SampleType sampling data formats are as follows:

enum SampleType { Unknown, SignedInt, UnSignedInt, Float };

The useful values are SignedInt (signed integer), UnSignedInt (unsigned integer) and Float (floating point). As you can see, sampleSize and sampleType together determine the sample data type. For example, setSampleSize(8) and setSampleType(QAudioFormat::UnSignedInt) correspond to AV_SAMPLE_FMT_U8 in ffmpeg.

The ffmpeg function av_get_bytes_per_sample returns the number of bytes of the sample data type that corresponds to an AVSampleFormat. Multiply the result by 8 to get the sampleSize parameter of QAudioFormat.
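The correspondence between the two APIs can be written down as a small lookup. The sketch below is deliberately standalone: `SampleFmt` and `SampleType` are stand-in enums that mirror a subset of ffmpeg's AVSampleFormat and Qt's QAudioFormat::SampleType, and `QtSampleSpec`, `bitsFromBytes` and `toQtSampleSpec` are names invented for this example. Real code would use the library types and av_get_bytes_per_sample instead.

```cpp
#include <cassert>

// Stand-ins for illustration only, not the real library enums.
enum class SampleFmt { U8, S16, S32, FLT, U8P, S16P, S32P, FLTP };
enum class SampleType { Unknown, SignedInt, UnSignedInt, Float };

struct QtSampleSpec {
    int sizeBits;     // value that would go to setSampleSize()
    SampleType type;  // value that would go to setSampleType()
    bool planar;      // true: must be converted to packed form first
};

// Bytes per sample times 8 gives the sample size in bits.
int bitsFromBytes(int bytes) {
    assert(bytes > 0);
    return bytes * 8;
}

QtSampleSpec toQtSampleSpec(SampleFmt fmt) {
    switch (fmt) {
    case SampleFmt::U8:   return {bitsFromBytes(1), SampleType::UnSignedInt, false};
    case SampleFmt::S16:  return {bitsFromBytes(2), SampleType::SignedInt,   false};
    case SampleFmt::S32:  return {bitsFromBytes(4), SampleType::SignedInt,   false};
    case SampleFmt::FLT:  return {bitsFromBytes(4), SampleType::Float,       false};
    case SampleFmt::U8P:  return {bitsFromBytes(1), SampleType::UnSignedInt, true};
    case SampleFmt::S16P: return {bitsFromBytes(2), SampleType::SignedInt,   true};
    case SampleFmt::S32P: return {bitsFromBytes(4), SampleType::SignedInt,   true};
    case SampleFmt::FLTP: return {bitsFromBytes(4), SampleType::Float,       true};
    }
    return {0, SampleType::Unknown, false};
}
```

The `planar` flag is kept separate because the size and type alone do not say whether the data can be handed to the playback device as it is.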

Audio format conversion in FFmpeg

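The sample format produced by the decoder cannot be chosen directly and may be planar, so a conversion context is needed. First create it from the parameters in the decoding context. Here the output is packed signed 16-bit audio, with the channel layout and sampling rate left unchanged: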

swrCtx = swr_alloc_set_opts(nullptr,

audioCodecCtx->channel_layout, AV_SAMPLE_FMT_S16, audioCodecCtx->sample_rate,

audioCodecCtx->channel_layout, audioCodecCtx->sample_fmt, audioCodecCtx->sample_rate,

0, nullptr);

swr_init(swrCtx);

After decoding a frame of audio, first calculate the required buffer size, then allocate the buffer and perform the format conversion:

int bufsize = av_samples_get_buffer_size(nullptr, frame->channels, frame->nb_samples,

AV_SAMPLE_FMT_S16, 1); // align = 1: exact size; 0 would round nb_samples up and pad the buffer

uint8_t *buf = new uint8_t[bufsize];

swr_convert(swrCtx, &buf, frame->nb_samples, (const uint8_t**)(frame->data), frame->nb_samples);

The audio data in buf can now be used for playback. Don't forget to delete[] buf after use.

When decoding is finished, remember to release the conversion context:

swr_free(&swrCtx);

Playing audio with QAudioOutput

First set QAudioFormat, then initialize QAudioOutput and turn on the audio device:

QAudioFormat audioFormat;

audioFormat.setSampleRate(audioCodecCtx->sample_rate);

audioFormat.setChannelCount(audioCodecCtx->channels);

audioFormat.setSampleSize(8*av_get_bytes_per_sample(AV_SAMPLE_FMT_S16));

audioFormat.setSampleType(QAudioFormat::SignedInt);

audioFormat.setCodec("audio/pcm");

QAudioOutput *audioOutput = new QAudioOutput(audioFormat);

QIODevice *audioDevice = audioOutput->start();

Audio data written to this device with the QIODevice::write() method is played back. Play the data converted in the previous section with the following code:

audioDevice->write((const char*)buf, bufsize); delete[] buf;
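One detail worth keeping in mind: QIODevice::write() returns the number of bytes actually written (or -1 on error), and that can be less than the number requested when the device buffer is nearly full. The sketch below shows one way to handle partial writes. It is not Qt code: `WriteFn` is a hypothetical stand-in for the device's write call, and `writeChunks` is a name invented for this example.

```cpp
#include <cassert>
#include <functional>

// Hypothetical stand-in for a device write call: takes a pointer and a
// length, returns the number of bytes accepted, or -1 on error.
using WriteFn = std::function<long long(const char*, long long)>;

// Push data until everything is written, the device accepts nothing
// more (returns 0), or an error occurs. Returns the bytes written so
// that the caller can retry the remainder later.
long long writeChunks(const WriteFn& write, const char* data, long long size) {
    assert(data != nullptr && size >= 0);
    long long done = 0;
    while (done < size) {
        const long long n = write(data + done, size - done);
        if (n <= 0) break;  // buffer full or error: stop and report progress
        done += n;
    }
    return done;
}
```

In a real player the remainder would have to be kept and written again once the device has room, which is part of what makes QAudioOutput awkward to control for media playback.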

After the playback stops, don't forget to stop and release QAudioOutput:

audioOutput->stop(); delete audioOutput;