CLS (Cloud Log Service) can trigger cloud functions directly for near-real-time processing, but this produces a lot of invocations. For example, if the logs being processed are themselves generated by a cloud function, the number of function invocations doubles. If the logs do not need to be processed promptly, you can let them accumulate in CLS for a minute, trigger a cloud function with a timer, and use the log service's SearchLog query API to process them in batches.
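A minimal sketch of the bookkeeping a once-a-minute timer needs: work out the bounds of the last full minute so that consecutive runs neither overlap nor leave gaps. The helper name `previousMinuteWindow` is hypothetical, and using the result as the time range of a SearchLog request is an assumption; check the API documentation for the exact parameter names and units.

```javascript
'use strict';

// Returns the [from, to) bounds, in milliseconds since the epoch, of the last
// full minute before `now`. Hypothetical helper, not part of any SDK.
function previousMinuteWindow(now = Date.now()) {
    const minute = 60 * 1000;
    const to = Math.floor(now / minute) * minute; // start of the current minute
    return { from: to - minute, to };
}

const w = previousMinuteWindow(Date.UTC(2020, 0, 1, 12, 34, 56));
console.log(new Date(w.from).toISOString(), new Date(w.to).toISOString());
// prints "2020-01-01T12:33:00.000Z 2020-01-01T12:34:00.000Z"
```

Aligning to minute boundaries, rather than using "now minus 60 seconds", keeps the windows stable even if the timer fires a little late.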

But there is a pitfall in this approach. If there are more than 100 logs in that minute, we can raise Limit to its maximum of 1000. If there are more than 1000 logs, the documentation says to page through the results by passing Context, which can reach up to 10000 logs. However, the procedure described in the documentation simply does not work: if you pass the Query parameter when requesting the next page, the request is treated as a new query and returns the first page again, even if Query is identical to the previous request. If you omit Query and pass only Context, you just get an error saying that Query is missing, because Query is a required parameter.

Even if you use SQL queries and SQL paging (by passing offset and limit in the SQL), you hit the same wall once there are more than 10,000 logs in a minute. So a better way might be to call the log download API every minute to download the logs for a specified time range. This is cumbersome, though. For example, generating the download file has no notification mechanism such as a callback or webhook, so you would have to write a polling task that repeatedly calls the "get log download task list" API to find out whether the file has been generated, and you don't know how long you will have to poll. On top of that, the generated log files can be downloaded over the public network without authorization, which adds a risk of leaking information.
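To make the polling burden concrete, here is a minimal sketch of such a polling loop with a timeout. `pollUntil` and the `check` function passed to it are hypothetical; in practice `check` would query the download task list and return something truthy (for example the file URL) once the file has been generated. No real CLS API is called here.

```javascript
'use strict';

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Calls `check` repeatedly until it resolves to a truthy value, or gives up
// once another wait would exceed `timeoutMs`. `check` is a hypothetical async
// function supplied by the caller.
async function pollUntil(check, { intervalMs = 2000, timeoutMs = 60000 } = {}) {
    const deadline = Date.now() + timeoutMs;
    for (;;) {
        const result = await check();
        if (result) return result;
        if (Date.now() + intervalMs > deadline) {
            throw new Error('gave up waiting after ' + timeoutMs + ' ms');
        }
        await sleep(intervalMs);
    }
}

// Demo with a fake check that succeeds on the third call.
let calls = 0;
pollUntil(async () => (++calls >= 3 ? 'ready' : null), { intervalMs: 10, timeoutMs: 1000 })
    .then((r) => console.log(r, 'after', calls, 'calls')); // prints "ready after 3 calls"
```

The timeout is the awkward part: inside a cloud function it has to stay below the function's own execution limit, and there is no way to know in advance how long file generation will take.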

Ultimately, I decided to live with batch processing at a five-minute granularity: CLS ships the logs to COS automatically every five minutes, the COS file-creation event triggers the cloud function, and the function downloads the log file and processes it. Because the COS SDK can read a file as a stream, even a very large file can be decompressed on the fly (shipped logs are always compressed) and parsed as a stream:

'use strict';

const zlib = require("zlib"),

readLine = require('readline'),

COS = require('cos-nodejs-sdk-v5'),

cos = new COS({

SecretId: process.env.SECRET_ID,

SecretKey: process.env.SECRET_KEY

});

exports.main_handler = (event, context,callback) => {

let gunzip = zlib.createGunzip();//zlib is responsible for unzipping gz files

let jsonCount=0,invalidLines=0;

let rl = readLine.createInterface(gunzip);//readLine is responsible for parsing lines of data from the extracted text

rl.on('line', function (line) {//Processing a row of data

if(/^\s*\{.*\}\s*$/.test(line)){ //Here's the simplest thing to do

jsonCount++;//This row of data appears to be JSON data

}else{

invalidLines++;//This row does not appear to be JSON data

}

})

gunzip.on('finish', () => {//File decompression complete

callback(null,"Number of valid rows: "+jsonCount+" ,Number of invalid rows:"+invalidLines)

});

var records = event.Records;

for(var i=0;i<records.length;i++){

var c = records[i].cos;

console.log("Download and analyze log files "+c.cosObject.key)

cos.getObject({

Bucket: c.cosBucket.name+"-"+c.cosBucket.appid,

Region: c.cosBucket.cosRegion,

Key: "/"+c.cosObject.key.split("/").slice(3).join("/"),

Output: gunzip

// Output: "/tmp/test.gz"

}, function(err, data) {

// console.log(err || data);

});

}

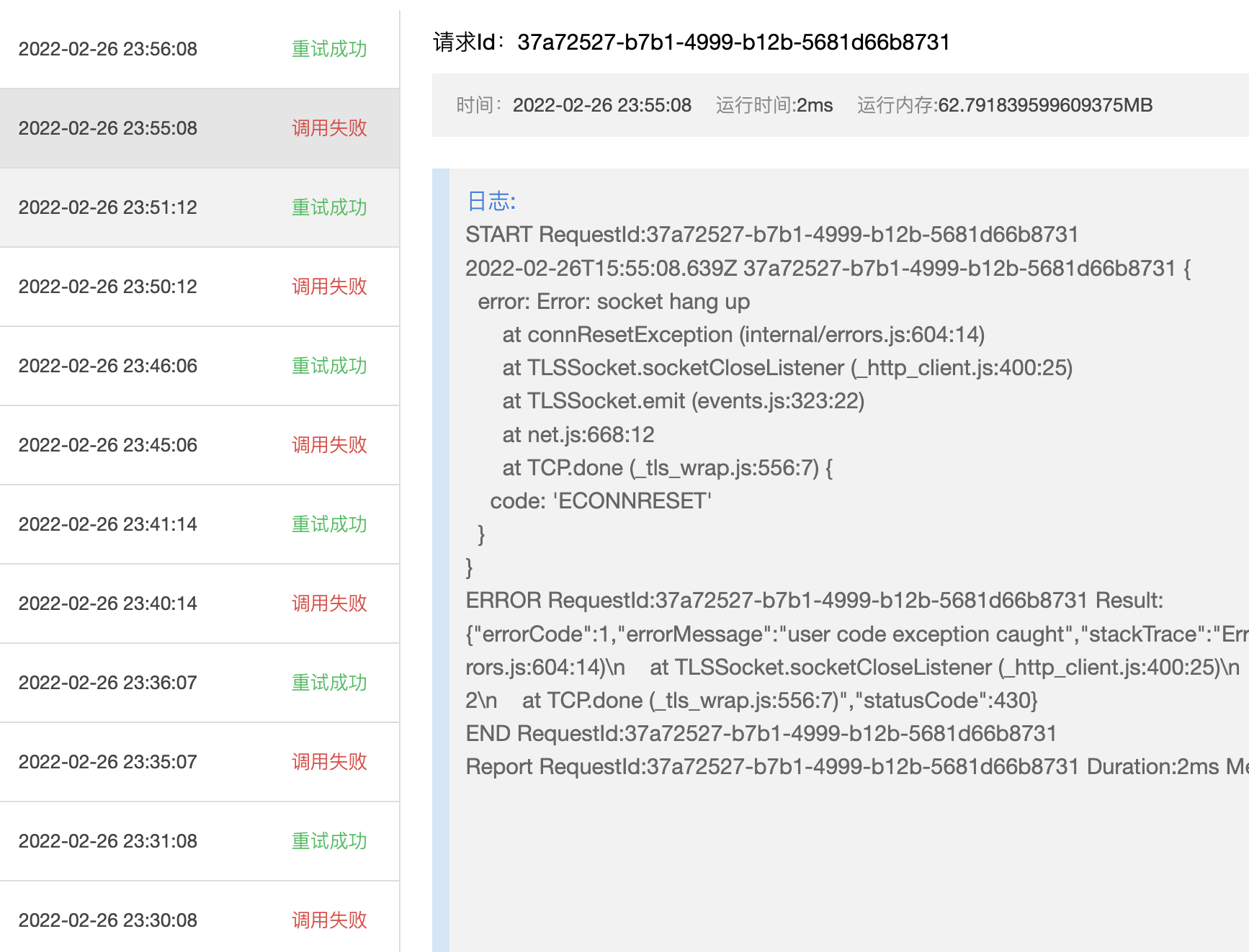

};Continue to slot this COS SDK here, very often triggering ECONNRESET errors that cause file processing to fail

    {
      error: Error: socket hang up
          at connResetException (internal/errors.js:604:14)
          at TLSSocket.socketCloseListener (_http_client.js:400:25)
          at TLSSocket.emit (events.js:323:22)
          at net.js:668:12
          at TCP.done (_tls_wrap.js:556:7) {
        code: 'ECONNRESET'
      }
    }

The failures are also very regular: the first read after the COS trigger fires reliably fails, and reading again succeeds. Is it because the file in COS was not ready yet when the trigger fired?
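Since a second read succeeds, one possible workaround is to retry the download after a short delay. The sketch below is only an illustration under that assumption: `withRetry` is a hypothetical helper, and the check on `err.error.code` is inferred from the shape of the error object logged above, not from the SDK documentation.

```javascript
'use strict';

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// True for a connection reset, either as a plain Error or wrapped in an
// `error` property as in the log output above (assumed shape).
function isConnReset(err) {
    return Boolean(err) &&
        (err.code === 'ECONNRESET' || Boolean(err.error && err.error.code === 'ECONNRESET'));
}

// Runs the async function `task`, retrying on connection resets and waiting a
// little longer before each new attempt. Other errors are rethrown at once.
async function withRetry(task, { attempts = 3, delayMs = 500 } = {}) {
    for (let attempt = 1; ; attempt++) {
        try {
            return await task();
        } catch (err) {
            if (!isConnReset(err) || attempt >= attempts) throw err;
            await sleep(delayMs * attempt);
        }
    }
}

// Demo with a fake task that fails once with ECONNRESET, then succeeds.
let tries = 0;
withRetry(async () => {
    if (++tries === 1) throw { error: Object.assign(new Error('socket hang up'), { code: 'ECONNRESET' }) };
    return 'downloaded';
}, { delayMs: 10 }).then((r) => console.log(r, 'on attempt', tries)); // prints "downloaded on attempt 2"
```

When the download is piped into a stream, each attempt would need a fresh gunzip and readline pair, because a failed attempt may already have written part of the file into the old stream.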