R329-opencv4_contrib-wechat_qrcode

The WeChat CV team has open-sourced its QR code scanning engine and contributed it to the opencv_contrib module of OpenCV 4. Let's try it today.

Install opencv_contrib

In the previous tutorial we installed only the Python version of OpenCV. Strictly speaking it can be used without installing anything, because the R329 image already ships compiled C++ and Python OpenCV libraries, although that version appears to be OpenCV 3. Plain OpenCV is not enough for today's experiment, so we need to uninstall it first and then install opencv_contrib.

First uninstall any opencv-python you installed earlier. If it is not installed, skip this step. To check, run "pip3 list" and look for opencv-python in the output.

pip3 uninstall opencv-python

Then download the whl package

After downloading, transfer it to the R329 (see the previous blog post for the method), then cd to the folder containing the whl file and run the following command to install it:

pip3 install opencv_contrib_python-4.5.5.62-cp36-abi3-manylinux_2_17_aarch64.manylinux2014_aarch64.whl
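To confirm that the contrib build replaced the old one, a quick check like the following can help. This is only a sketch: the helper names `has_wechat_qrcode` and `check_install` are made up for illustration, and the check simply looks for the `wechat_qrcode_WeChatQRCode` attribute that the script later in this post relies on.

```python
import importlib
import importlib.util


def has_wechat_qrcode(cv2_module):
    """Return True if the given cv2 module exposes the WeChat QR code detector."""
    return hasattr(cv2_module, "wechat_qrcode_WeChatQRCode")


def check_install():
    """Return a one-line status string describing the installed OpenCV build."""
    if importlib.util.find_spec("cv2") is None:
        return "cv2 is not installed"
    try:
        cv2 = importlib.import_module("cv2")
    except ImportError as err:
        return "cv2 is installed but failed to import: {}".format(err)
    version = getattr(cv2, "__version__", "unknown")
    if has_wechat_qrcode(cv2):
        return "cv2 {} with wechat_qrcode".format(version)
    return "cv2 {} without wechat_qrcode (contrib build missing?)".format(version)


if __name__ == "__main__":
    print(check_install())
```

If the output says wechat_qrcode is missing, the plain opencv-python package is probably still the one being imported.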

Model Download:

Specific implementation code

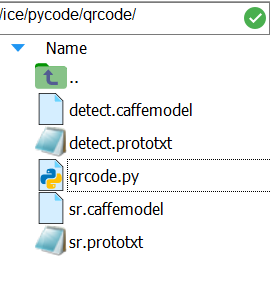

First create a new folder named qrcode, then create a new qrcode.py file in it with the following content:

#-*- coding: utf-8 -*-
import time

import cv2

cap = cv2.VideoCapture(0)
# Property 3 is the frame width. 240 is requested here, but the camera actually delivers 320
cap.set(3, 240)
# Property 4 is the frame height
cap.set(4, 240)

# Open the LCD framebuffer device for writing
f = open('/dev/fb0', 'wb')

try:
    detector = cv2.wechat_qrcode_WeChatQRCode(
        "detect.prototxt", "detect.caffemodel", "sr.prototxt", "sr.caffemodel")
except Exception:
    print("---------------------------------------------------------------")
    print("Failed to initialize WeChatQRCode.")
    print("Please, download 'detect.*' and 'sr.*' from")
    print("https://github.com/WeChatCV/opencv_3rdparty/tree/wechat_qrcode")
    print("and put them into the current directory.")
    print("---------------------------------------------------------------")
    exit(0)

prevstr = ""
while True:
    st = time.time()
    ret, img = cap.read()
    if ret:
        # Crop to 240 x 240; the LCD can display at most 240 x 240 16-bit pixels
        img = img[:, 0:240]
        # Rotate the image 180 degrees
        img = cv2.rotate(img, cv2.ROTATE_180)
        res, points = detector.detectAndDecode(img)
        for t in res:
            # Print a decoded string only when it differs from the previous one
            if t != prevstr:
                print(t)
                prevstr = t
        # Convert to 16-bit color (the LCD is a 16-bit display)
        img = cv2.cvtColor(img, cv2.COLOR_BGR2BGR565)
        # Draw the instantaneous frame rate in the top-left corner
        cv2.putText(img, "{0}".format(str(1 / (time.time() - st))), (0, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 0, 255), 1)
        # Write the raw pixel data of the frame to the start of the framebuffer
        f.seek(0)
        f.write(bytearray(img))
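The 16-bit conversion before writing to /dev/fb0 is easier to follow with a worked example. The sketch below packs one pixel by hand, assuming the usual 5-6-5 layout (red in the top five bits, green in the middle six, blue in the bottom five) stored low byte first. That is my understanding of what `COLOR_BGR2BGR565` produces; the post does not confirm the exact layout the R329 framebuffer expects. The helper names are made up for illustration.

```python
def pack_bgr565(b, g, r):
    """Pack 8-bit B, G, R values into one 16-bit 5-6-5 pixel value."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def pixel_bytes(b, g, r):
    """Return the two bytes of one pixel, low byte first (little-endian, assumed)."""
    return pack_bgr565(b, g, r).to_bytes(2, "little")


# Size of one full 240 x 240 frame at 2 bytes per pixel
FRAME_BYTES = 240 * 240 * 2

if __name__ == "__main__":
    print(hex(pack_bgr565(0, 0, 255)))  # pure red
    print(FRAME_BYTES)
```

Under these assumptions each frame written to the framebuffer is 115200 bytes, which is why the image is cropped to 240 x 240 before the conversion.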

Upload the four downloaded model files to the qrcode folder.

Then cd to the qrcode folder and run:
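Since the detector fails to initialize when any model file is missing, it can be worth checking the folder before running the script. A minimal sketch, using the four file names passed to the constructor in qrcode.py; the helper `missing_model_files` is a hypothetical name, not part of OpenCV.

```python
import os

# File names taken from the WeChatQRCode constructor call in qrcode.py
MODEL_FILES = ("detect.prototxt", "detect.caffemodel", "sr.prototxt", "sr.caffemodel")


def missing_model_files(directory="."):
    """Return the model file names that are not present in the directory."""
    return [name for name in MODEL_FILES
            if not os.path.isfile(os.path.join(directory, name))]


if __name__ == "__main__":
    missing = missing_model_files(".")
    if missing:
        print("Missing model files:", ", ".join(missing))
    else:
        print("All four model files found.")
```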

python3 qrcode.py

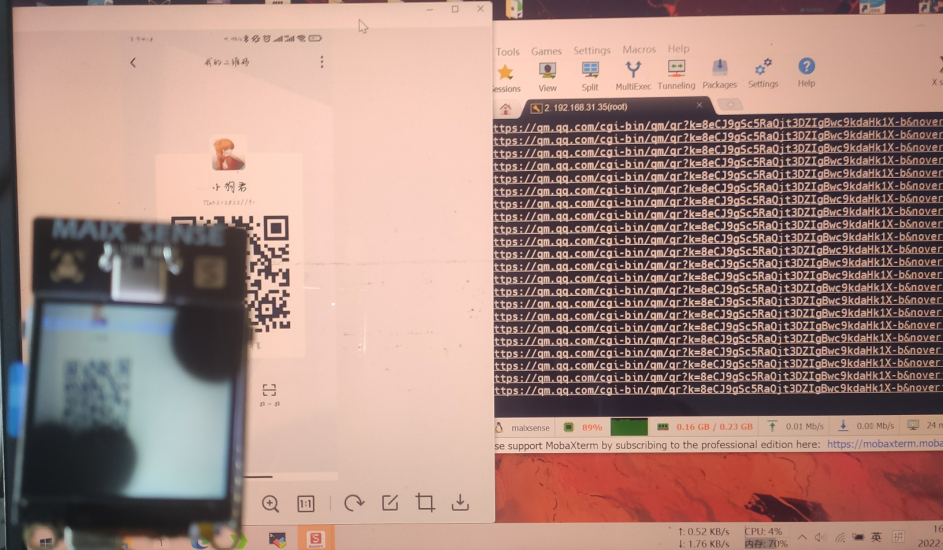

The result is shown in the figure. With no QR code in view the script runs at about 7-8 frames per second, and while a QR code is being recognized it drops to about 2-3 frames per second. That feels acceptable, and it is much better than the pure-software recognition on the K210. People in the group chat said that converting the caffemodel to an NCNN model would be more efficient. Someone has already done this and open-sourced it on GitHub, but it is written in C++, and my C++ stops at hello world. I am considering converting the model and then rewriting the code in C++, which should improve the efficiency a lot, but I don't know when I will get to it.
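The frame rate drawn by the script is computed from a single frame, so the number jumps around. For a steadier reading when comparing the two cases, one option is to average over the last few frames. This is a sketch only; `FpsMeter` and its `window` parameter are hypothetical names and not part of the original script.

```python
import time
from collections import deque


class FpsMeter:
    """Average frame rate over the last `window` frame intervals."""

    def __init__(self, window=10):
        # Keep one more timestamp than the number of intervals averaged
        self.stamps = deque(maxlen=window + 1)

    def tick(self, now=None):
        """Record a frame timestamp and return the smoothed FPS (0.0 until two frames)."""
        self.stamps.append(time.time() if now is None else now)
        if len(self.stamps) < 2:
            return 0.0
        elapsed = self.stamps[-1] - self.stamps[0]
        if elapsed <= 0:
            return 0.0
        return (len(self.stamps) - 1) / elapsed
```

In the main loop this would be used by creating one `FpsMeter()` before `while True:` and calling `tick()` once per successfully read frame, then drawing the returned value instead of the per-frame one.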
