1, Background

- Requirements:

Redis serves clients over TCP. A client opens a socket connection and sends its requests through it. After issuing a command, the client blocks until the Redis server has processed the command and returned the result.

In this respect Redis resembles an HTTP server: one request, one response. Leaving aside commands that are themselves expensive for Redis to execute, most of the time goes to network transmission, because every command costs one send and one receive. If one round trip takes 0.1 seconds, a client can complete only 10 requests per second over that connection, which severely limits the throughput it can get from Redis.
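The arithmetic above can be sketched as follows. This is a minimal model that assumes each command blocks for exactly one round trip and ignores server-side execution time; the class and method names are made up for illustration.

```java
/** Back-of-the-envelope model: every command waits for its own round trip. */
final class RoundTripMath {

    /** Upper bound on commands per second over one blocking connection. */
    static long commandsPerSecond(long roundTripMillis) {
        return 1000 / roundTripMillis;
    }

    /** Time spent on the network alone for a run of consecutive commands. */
    static long networkMillis(int commands, long roundTripMillis) {
        return commands * roundTripMillis;
    }

    public static void main(String[] args) {
        System.out.println(commandsPerSecond(100)); // 10
        System.out.println(networkMillis(3, 100));  // 300
    }
}
```

With a 100 ms round trip, a business operation made of three consecutive commands spends 300 ms waiting on the network, however fast Redis executes each command.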

In many scenarios, a single business operation requires several consecutive Redis operations, such as decrementing inventory, incrementing an order count, and deducting a balance. These steps have to be performed one after another.

- Potential hazard: in such scenarios, network round-trip time becomes the main bottleneck limiting how much work Redis can do. Fetching values one key at a time in a loop may also increase the number of connections in the connection pool, and creating and destroying those connections costs performance.

- Solution:

Pipelining can be introduced. A pipeline is a technique for reducing the latency that accumulates from the many round trips made when executing a large number of commands.

The principle is simple: a pipeline sends all the commands at once, avoiding the network overhead of sending and receiving each one separately. After receiving the batch, Redis executes the commands in order and returns the results to the client together.

Choose according to the cache data structure used in the project: if the data is of the String type, use the multiGet method of RedisTemplate; if it is a hash, use a pipeline to combine the commands and operate on Redis in batches.

2, Operation

1. multiGet operation of RedisTemplate

- Use this when the cached data is of the String type.

Sample code:

    List<String> keys = new ArrayList<>();
    for (Book e : booklist) {
        String key = generateKey.getKey(e);
        keys.add(key);
    }
    // A single multiGet call fetches all values; a missing key yields a null entry.
    List<Serializable> resultStr = redisTemplate.opsForValue().multiGet(keys);

2. Pipeline usage of RedisTemplate

Why is Pipelining so fast?

Let's first look at how multiple commands are executed without pipelining:

Redis client -> Redis server: send the first command

Redis server -> Redis client: respond to the first command

Redis client -> Redis server: send the second command

Redis server -> Redis client: respond to the second command

Redis client -> Redis server: send the nth command

Redis server -> Redis client: respond to the nth command

How does the pipelining mechanism work?

Redis client -> Redis server: send the first command (buffered in the Redis client, not sent immediately)

Redis client -> Redis server: send the second command (buffered in the Redis client, not sent immediately)

Redis client -> Redis server: send the nth command (buffered in the Redis client, not sent immediately)

Redis client -> Redis server: send all the accumulated commands

Redis server -> Redis client: respond to the 1st, 2nd and nth commands
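The two sequences above can be compared with a toy in-memory model. This is only a sketch that counts round trips: `PipelineModel` and its methods are hypothetical names, no real Redis client is involved, and a real client may split a large pipeline across several network writes.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of a client and server; it only counts round trips. */
final class PipelineModel {
    private final Map<String, String> store = new HashMap<>(); // stands in for the server's data
    private final List<String> buffer = new ArrayList<>();      // GET keys queued on the client
    int roundTrips = 0;

    /** Test setup only; not counted as a round trip. */
    void put(String key, String value) {
        store.put(key, value);
    }

    /** Without pipelining: each GET costs one round trip. */
    String get(String key) {
        roundTrips++;
        return store.get(key);
    }

    /** With pipelining: queue the command locally; nothing is sent yet. */
    void queueGet(String key) {
        buffer.add(key);
    }

    /** Send everything queued in one round trip; replies come back in command order. */
    List<String> flush() {
        if (buffer.isEmpty()) {
            return new ArrayList<>();
        }
        roundTrips++;
        List<String> replies = new ArrayList<>(buffer.size());
        for (String key : buffer) {
            replies.add(store.get(key)); // null for a key that does not exist
        }
        buffer.clear();
        return replies;
    }
}
```

In this model, n calls to `get` cost n round trips, while n calls to `queueGet` followed by one `flush` cost a single round trip. No reply is available until `flush` returns.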

Sample code:

    package cn.chinotan.controller;

    import cn.chinotan.service.RedisService;
    import lombok.extern.java.Log;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.data.redis.core.StringRedisTemplate;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.PathVariable;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    import java.util.ArrayList;
    import java.util.HashMap;
    import java.util.List;
    import java.util.Map;
    import java.util.concurrent.TimeUnit;

    /**
     * @program: test
     * @description: Redis batch data test
     * @author: xingcheng
     * @create: 2019-03-16 16:26
     **/
    @RestController
    @RequestMapping("/redisBatch")
    @Log
    public class RedisBatchController {

        @Autowired
        StringRedisTemplate redisTemplate;

        /** Service implementations keyed by bean name ("pipe" or "generic"). */
        @Autowired
        Map<String, RedisService> redisServiceMap;

        /**
         * Cache time for each value, in minutes
         */
        public static final Integer VALUE_TIME = 1;

        /**
         * Test list length
         */
        public static final Integer SIZE = 100000;

        @GetMapping(value = "/test/{model}")
        public Object hello(@PathVariable("model") String model) {
            List<Map<String, String>> saveList = new ArrayList<>(SIZE);
            List<String> keyList = new ArrayList<>(SIZE);
            for (int i = 0; i < SIZE; i++) {
                Map<String, String> objectObjectMap = new HashMap<>();
                String key = String.valueOf(i);
                objectObjectMap.put("key", key);
                objectObjectMap.put("value", "value" + i);
                saveList.add(objectObjectMap);
                // Record every key for the batch read below
                keyList.add(key);
            }

            // Get the implementation selected by the path variable
            RedisService redisService = redisServiceMap.get(model);

            // Batch insertion
            long saveStart = System.currentTimeMillis();
            redisService.batchInsert(saveList, TimeUnit.MINUTES, VALUE_TIME);
            long saveEnd = System.currentTimeMillis();
            log.info("Insertion time:" + (saveEnd - saveStart) + " ms");

            // Batch acquisition
            long getStart = System.currentTimeMillis();
            List<String> valueList = redisService.batchGet(keyList);
            long getEnd = System.currentTimeMillis();
            log.info("Acquisition time:" + (getEnd - getStart) + " ms");

            return valueList;
        }
    }

    package cn.chinotan.service;

    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.dao.DataAccessException;
    import org.springframework.data.redis.connection.RedisConnection;
    import org.springframework.data.redis.connection.StringRedisConnection;
    import org.springframework.data.redis.core.RedisCallback;
    import org.springframework.data.redis.core.RedisOperations;
    import org.springframework.data.redis.core.SessionCallback;
    import org.springframework.data.redis.core.StringRedisTemplate;
    import org.springframework.stereotype.Service;

    import java.util.*;
    import java.util.concurrent.TimeUnit;
    import java.util.stream.Collectors;

    /**
     * @program: test
     * @description: Redis pipeline operation
     * @author: xingcheng
     * @create: 2019-03-16 16:47
     **/
    @Service("pipe")
    public class RedisPipelineService implements RedisService {

        @Autowired
        StringRedisTemplate redisTemplate;

        @Override
        public void batchInsert(List<Map<String, String>> saveList, TimeUnit unit, int timeout) {
            /* Insert multiple pieces of data */
            redisTemplate.executePipelined(new SessionCallback<Object>() {
                @Override
                public <K, V> Object execute(RedisOperations<K, V> redisOperations) throws DataAccessException {
                    // Commands issued inside the callback are queued on the pipeline
                    for (Map<String, String> needSave : saveList) {
                        redisTemplate.opsForValue().set(needSave.get("key"), needSave.get("value"), timeout, unit);
                    }
                    // The callback must return null; executePipelined returns the replies
                    return null;
                }
            });
        }

        @Override
        public List<String> batchGet(List<String> keyList) {
            /* Batch acquisition of multiple pieces of data */
            List<Object> objects = redisTemplate.executePipelined(new RedisCallback<String>() {
                @Override
                public String doInRedis(RedisConnection redisConnection) throws DataAccessException {
                    StringRedisConnection stringRedisConnection = (StringRedisConnection) redisConnection;
                    for (String key : keyList) {
                        // The value returned here is ignored; replies arrive when the pipeline closes
                        stringRedisConnection.get(key);
                    }
                    return null;
                }
            });

            // Keep null for missing keys instead of turning it into the string "null"
            return objects.stream()
                    .map(val -> val == null ? null : String.valueOf(val))
                    .collect(Collectors.toList());
        }
    }

    package cn.chinotan.service;

    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.data.redis.core.StringRedisTemplate;
    import org.springframework.stereotype.Service;

    import java.util.ArrayList;
    import java.util.List;
    import java.util.Map;
    import java.util.concurrent.TimeUnit;

    /**
     * @program: test
     * @description: Redis general traversal operation
     * @author: xingcheng
     * @create: 2019-03-16 16:47
     **/
    @Service("generic")
    public class RedisGenericService implements RedisService {

        @Autowired
        StringRedisTemplate redisTemplate;

        @Override
        public void batchInsert(List<Map<String, String>> saveList, TimeUnit unit, int timeout) {
            // One command, and therefore one round trip, per entry
            for (Map<String, String> needSave : saveList) {
                redisTemplate.opsForValue().set(needSave.get("key"), needSave.get("value"), timeout, unit);
            }
        }

        @Override
        public List<String> batchGet(List<String> keyList) {
            List<String> values = new ArrayList<>(keyList.size());
            for (String key : keyList) {
                String value = redisTemplate.opsForValue().get(key);
                values.add(value);
            }
            return values;
        }
    }

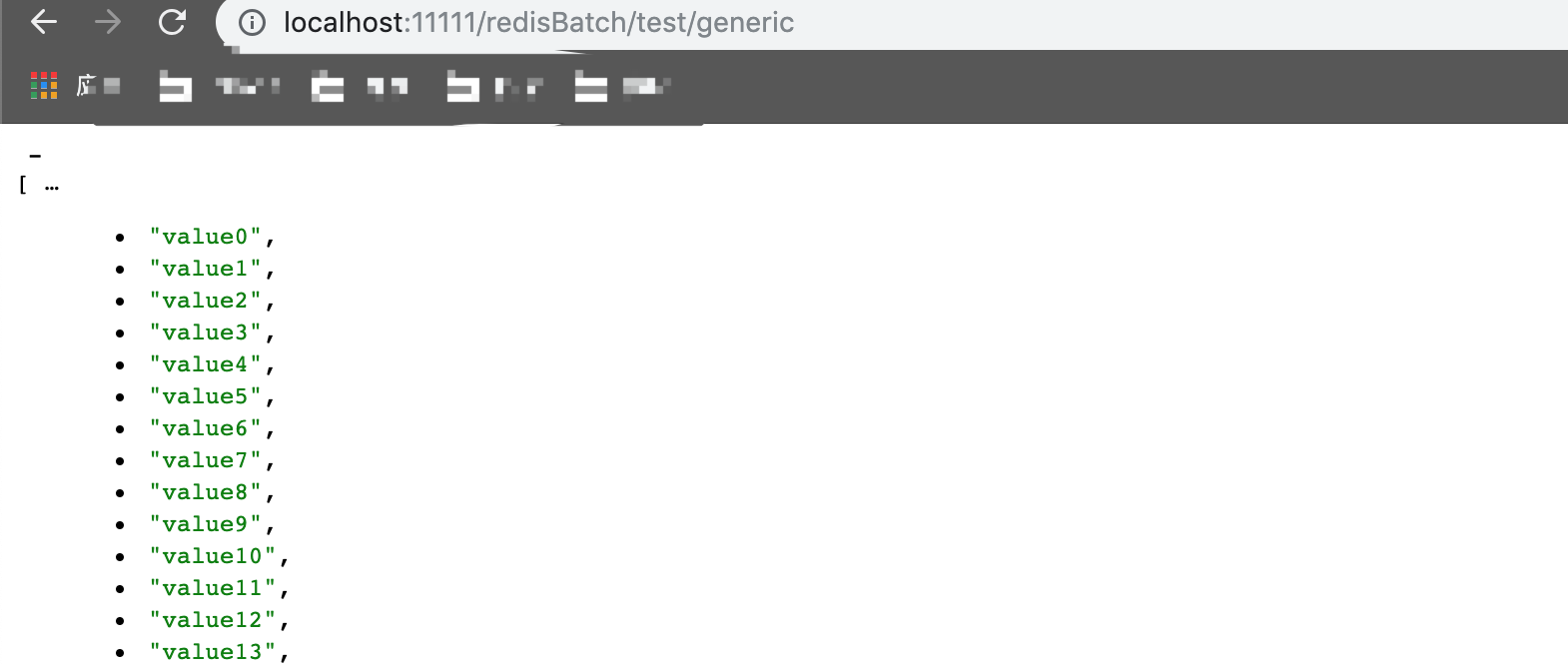

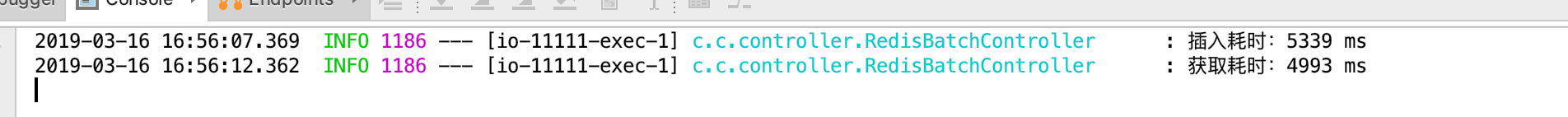

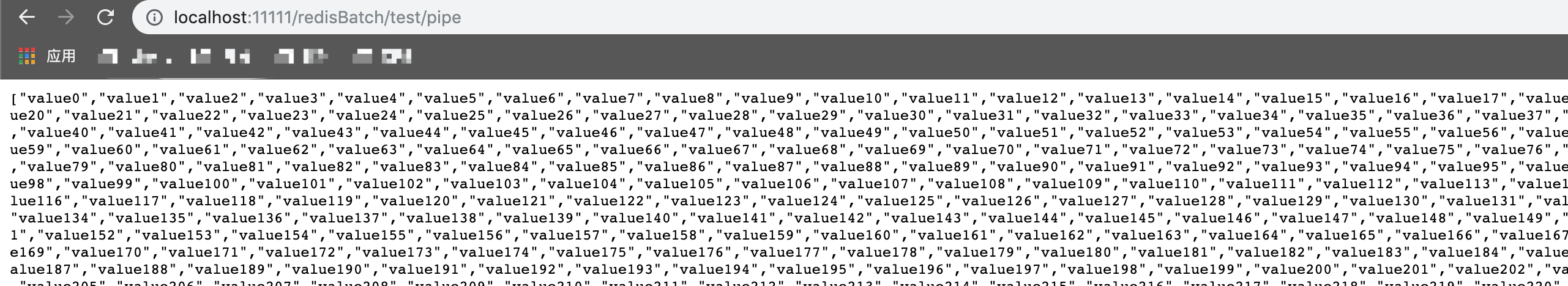

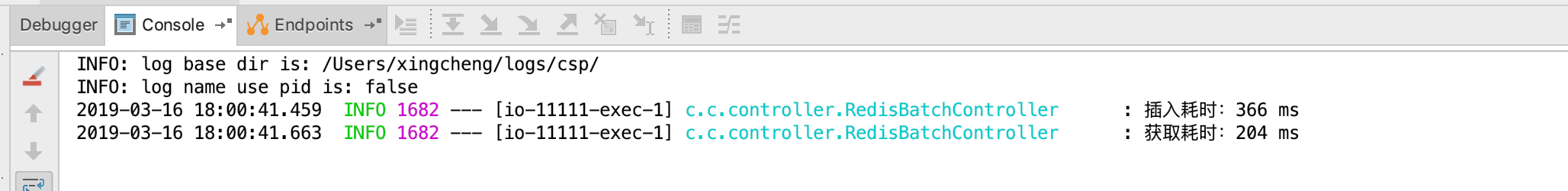

Test results:

As the results show, pipelining improved performance roughly 20-fold in this test.

Because of how it works, pipelining has two obvious limitations:

- Because of the way a pipeline sends commands, the Redis server has to queue the commands to be executed, and that queue lives in limited memory, so it is not a good idea to send too many commands at once. If a large number of commands is needed, send them in batches. The efficiency will not differ much, and it is far better than running out of memory.

- A pipeline collects the commands and executes them together at the end, so intermediate results are not available while the commands are being queued; they can be read only after the pipeline has completed. This makes it impossible to branch on a result midway, for example to decide whether to continue inserting records.
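For the first limitation, one way to send commands in batches is to split the key list into fixed-size chunks and run one pipeline per chunk. The helper below is a minimal sketch; `Batches.partition` is a hypothetical name, and the right batch size is an assumption that depends on command and reply sizes.

```java
import java.util.ArrayList;
import java.util.List;

/** Splits a list into consecutive chunks so each chunk can go into its own pipeline. */
final class Batches {

    static <T> List<List<T>> partition(List<T> items, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int start = 0; start < items.size(); start += batchSize) {
            int end = Math.min(start + batchSize, items.size());
            // Copy the view so each batch is independent of the source list
            batches.add(new ArrayList<>(items.subList(start, end)));
        }
        return batches;
    }
}
```

Each chunk could then be passed to a pipelined call such as `batchGet` from the sample code, with the per-chunk results appended in order. The second limitation still applies within a chunk, but results from one chunk can be inspected before the next is sent.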