brief introduction

I previously wrote an article, "An implementation of like function".

Some problems were raised at that time, and today we will solve some of them.

start

Let's cover the background first, so that readers who have not read the previous article are not confused.

The topic is the like function, and the main goal here is to solve the big key problem that existed in the earlier design.

Big key problem

Because Redis executes commands on a single main thread, large keys cause problems such as:

1. In cluster mode, even when the slots are divided evenly, data and queries become skewed: the Redis nodes that hold large keys use more memory and see higher QPS.

2. When a large key is deleted or expires automatically, QPS can suddenly drop or spike. In extreme cases master-slave replication becomes abnormal, and the Redis service blocks and cannot respond to requests.

strategy

The previous design is improved here.

Because liking is a frequent operation, likes are recorded in Redis first to avoid hitting the database on every request. The Redis structures are:

| Redis type | Key | Field | Value |
|---|---|---|---|
| string | news:like:count:%s (news likes string prefix + newsId) | | count (number of modifications) |
| hash | user:like:news:%s (user likes news hash prefix + hashCode modulus) | %s:%s (userId:newsId) | 0 (not liked) / 1 (liked) |
| set | user:like:news:set (set of hash keys touched by user likes) | | user:like:news:%s (hashCode modulus) |
| set | news:like:count:set (set of newsIds with like operations) | | %s (newsId) |
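To make the key layout concrete, here is a minimal sketch that builds the string key, the hash key, and the hash field from the patterns in the table. The class name `LikeKeyLayout` and the constant `BUCKETS` are hypothetical, and the bucket count of 256 is taken from the utility class shown later in this article.

```java
// Hypothetical helper that mirrors the key patterns in the table above.
final class LikeKeyLayout {
    // Number of hash buckets (assumed to be 256, matching KEY_MOLD in RedisUtils).
    static final int BUCKETS = 256;

    // string key: news:like:count:{newsId}
    static String newsLikeCountKey(long newsId) {
        return "news:like:count:" + newsId;
    }

    // hash key: user:like:news:{bucket}, where bucket = hashCode masked to 0..255
    static String userLikeNewsKey(long userId) {
        int bucket = Long.hashCode(userId) & (BUCKETS - 1);
        return "user:like:news:" + bucket;
    }

    // hash field: {userId}:{newsId}
    static String userLikeNewsField(long userId, long newsId) {
        return userId + ":" + newsId;
    }

    public static void main(String[] args) {
        System.out.println(newsLikeCountKey(42L));
        System.out.println(userLikeNewsKey(1001L));
        System.out.println(userLikeNewsField(1001L, 42L));
    }
}
```

Because all users are spread over a fixed number of hashes, no single hash grows without bound, which is the point of the change.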

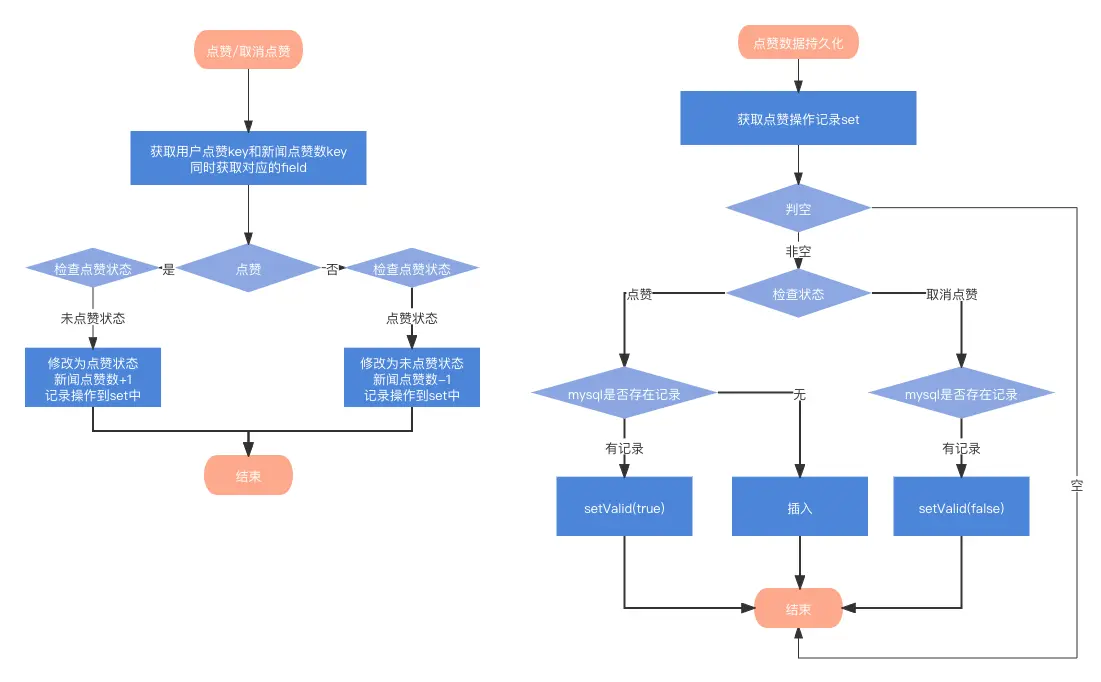

flow chart

The Redis data is persisted to MySQL by a scheduled task. I first used the ScheduledExecutorService provided by the JDK, but it threw a null pointer exception, most likely because Spring components were being injected into a class that Spring does not manage. In the end I used Spring Boot's scheduled tasks, which are very simple to use and were also covered in the previous article on timed tasks.
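For readers unfamiliar with the JDK scheduler mentioned above, here is a minimal sketch of a fixed-delay task built on ScheduledExecutorService. It is not the Spring Boot approach this article ends up using; the class name `PersistScheduleSketch`, the method `runFixedDelay`, and the short millisecond delays are assumptions chosen only so the example finishes quickly.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch of a fixed-delay job using only the JDK.
final class PersistScheduleSketch {

    // Runs `task` after an initial delay, then again `delayMillis` after each run ends.
    // Returns true once the task has run `runs` times, false on timeout or interrupt.
    static boolean runFixedDelay(Runnable task, long initialDelayMillis, long delayMillis, int runs) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        CountDownLatch latch = new CountDownLatch(runs);
        try {
            // If the task throws, later executions are suppressed by the executor.
            scheduler.scheduleWithFixedDelay(() -> {
                task.run();
                latch.countDown();
            }, initialDelayMillis, delayMillis, TimeUnit.MILLISECONDS);
            return latch.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            scheduler.shutdownNow();
        }
    }

    public static void main(String[] args) {
        boolean finished = runFixedDelay(() -> System.out.println("persist likes"), 10, 20, 3);
        System.out.println("finished: " + finished);
    }
}
```

A task scheduled this way is a plain object, so any Spring beans it needs have to be passed in explicitly rather than injected.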

Liking generates a lot of data. To keep the number of rows down during persistence, each record has a valid field: an unlike marks the existing row as invalid instead of adding or deleting a row.
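The effect of the valid field can be sketched with an in-memory map standing in for the MySQL table. The class `ValidFlagSketch` and its methods are hypothetical; the rule it follows (insert or re-validate on like, mark invalid on unlike) is the one used by the persistence code later in this article.

```java
import java.util.HashMap;
import java.util.Map;

// In-memory stand-in for the like table: "userId:newsId" -> valid flag.
final class ValidFlagSketch {
    private final Map<String, Boolean> rows = new HashMap<>();

    // Applies one persisted state for a user/news pair.
    void persist(String userNewsField, boolean liked) {
        if (liked) {
            // Insert a new row, or flip an existing row back to valid.
            rows.put(userNewsField, true);
        } else if (rows.containsKey(userNewsField)) {
            // Unlike: keep the row and mark it invalid.
            rows.put(userNewsField, false);
        }
        // Unlike with no existing row: nothing to write.
    }

    int rowCount() {
        return rows.size();
    }

    boolean isValid(String userNewsField) {
        return Boolean.TRUE.equals(rows.get(userNewsField));
    }

    public static void main(String[] args) {
        ValidFlagSketch table = new ValidFlagSketch();
        table.persist("1001:42", true);
        table.persist("1001:42", false);
        table.persist("1001:42", true);
        System.out.println(table.rowCount() + " row, valid=" + table.isValid("1001:42"));
    }
}
```

However many times the same user toggles the same like, the table holds at most one row for that pair.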

code

Part of the code follows for reference.

The Redis utility class mainly defines some constants and key-assembly helpers:

    public class RedisUtils {

        /** Default key expiration time (seconds) */
        public static final Integer DEFAULT_TTL = 300;

        /** Default key expiration time (minutes) */
        public static final Integer DEFAULT_TTL_MINUTES = 30;

        /** Default key expiration time (days) */
        public static final Integer DEFAULT_TTL_DAYS = 7;

        /** Modulus 256: number of hash buckets (must be a power of two for the bit mask below) */
        public static final Integer KEY_MOLD = 1 << 8;

        /** COUNT hint passed to scan */
        public static final Integer SCAN_COUNT = 3000;

        /** Concatenates a key prefix and an id. */
        public static <P, T> String getKey(P keyPrefix, T id) {
            return new StringBuilder().append(keyPrefix).append(id).toString();
        }

        /** Builds the hash key for a user's likes: prefix + bucket index in 0..255. */
        public static String getUserLikeNewsKey(Long userId) {
            // Masking with (KEY_MOLD - 1) is always non-negative, so Math.abs is not needed.
            int bucket = userId.hashCode() & (KEY_MOLD - 1);
            return new StringBuilder()
                    .append(RedisKeyConstants.USER_LIKE_NEWS)
                    .append(bucket)
                    .toString();
        }

        /** Builds the hash field: userId + splitter + newsId. */
        public static String getUserLikeNewsField(Long userId, Long newsId) {
            return new StringBuilder()
                    .append(userId)
                    .append(RedisKeyConstants.SPLITTER)
                    .append(newsId)
                    .toString();
        }
    }
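The bucket calculation in getUserLikeNewsKey relies on a bit mask rather than the % operator. The sketch below (the class name `BucketIndexSketch` is hypothetical) shows why this matters for negative hash codes: the mask always lands in 0..255, matching Math.floorMod, while % can return a negative remainder.

```java
// Hypothetical demo of the bucket index used for the user-like hash keys.
final class BucketIndexSketch {
    static final int KEY_MOLD = 1 << 8; // 256 buckets, as in RedisUtils

    // Same expression as in getUserLikeNewsKey, with explicit parentheses.
    static int bucket(Long userId) {
        return userId.hashCode() & (KEY_MOLD - 1);
    }

    public static void main(String[] args) {
        Long negativeHashId = 4294967295L; // Long.hashCode is -1 for this value
        System.out.println(negativeHashId.hashCode());                            // -1
        System.out.println(negativeHashId.hashCode() % KEY_MOLD);                 // -1
        System.out.println(Math.floorMod(negativeHashId.hashCode(), KEY_MOLD));   // 255
        System.out.println(bucket(negativeHashId));                               // 255
        System.out.println(bucket(1001L));                                        // 233
    }
}
```

The mask only equals a true modulus because 256 is a power of two; with a different bucket count, Math.floorMod would be the safer choice.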

The like and unlike operations are implemented as follows:

    import java.util.Objects;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.data.redis.core.StringRedisTemplate;
    import org.springframework.stereotype.Service;

    @Service("userLikeService")
    public class UserLikeServiceImpl implements UserLikeService {

        public static final Logger LOGGER = LoggerFactory.getLogger(UserLikeServiceImpl.class);

        private static final String LIKE_STATE = "1";
        private static final String UNLIKE_STATE = "0";

        @Autowired
        StringRedisTemplate stringRedisTemplate;

        @Autowired
        UserLikeNewsMapper userLikeNewsMapper;

        @Override
        public void like(Long userId, Long newsId) {
            String userLikeNewsKey = RedisUtils.getUserLikeNewsKey(userId);
            String userLikeNewsField = RedisUtils.getUserLikeNewsField(userId, newsId);
            String newsLikeCountKey = RedisUtils.getKey(RedisKeyConstants.NEWS_LIKE_COUNT, newsId);
            // Big key problem: the field lives in one of 256 bucketed hashes, not one huge hash
            String recordState = (String) stringRedisTemplate.opsForHash().get(userLikeNewsKey, userLikeNewsField);
            // Proceed unless Redis already records a like (a missing field counts as not liked)
            if (!LIKE_STATE.equals(recordState)) {
                LOGGER.info("Not liked yet, liking");
                stringRedisTemplate.opsForHash().put(userLikeNewsKey, userLikeNewsField, LIKE_STATE);
                // Record the touched hash key so the scheduled task knows what to persist
                stringRedisTemplate.opsForSet().add(RedisKeyConstants.USER_LIKE_NEWS_KEY_SET, userLikeNewsKey);
                // News likes + 1
                stringRedisTemplate.opsForValue().increment(newsLikeCountKey);
                // Record the touched newsId so the scheduled task knows what to persist
                stringRedisTemplate.opsForSet().add(RedisKeyConstants.NEWS_LIKE_COUNT_KEY_SET, String.valueOf(newsId));
            }
        }

        @Override
        public void unlike(Long userId, Long newsId) {
            String userLikeNewsKey = RedisUtils.getUserLikeNewsKey(userId);
            String userLikeNewsField = RedisUtils.getUserLikeNewsField(userId, newsId);
            String newsLikeCountKey = RedisUtils.getKey(RedisKeyConstants.NEWS_LIKE_COUNT, newsId);
            String recordState = (String) stringRedisTemplate.opsForHash().get(userLikeNewsKey, userLikeNewsField);
            // Proceed unless Redis already records an unlike (a missing field is treated as liked)
            if (!UNLIKE_STATE.equals(recordState)) {
                LOGGER.info("Liked, cancelling the like");
                stringRedisTemplate.opsForHash().put(userLikeNewsKey, userLikeNewsField, UNLIKE_STATE);
                // Record the touched hash key so the scheduled task knows what to persist
                stringRedisTemplate.opsForSet().add(RedisKeyConstants.USER_LIKE_NEWS_KEY_SET, userLikeNewsKey);
                // News likes - 1
                stringRedisTemplate.opsForValue().decrement(newsLikeCountKey);
                // Record the touched newsId so the scheduled task knows what to persist
                stringRedisTemplate.opsForSet().add(RedisKeyConstants.NEWS_LIKE_COUNT_KEY_SET, String.valueOf(newsId));
            }
        }

        /**
         * Checks whether a user has liked a news item.
         * Not called yet.
         *
         * @param userId id of the user
         * @param newsId id of the news item
         * @return true if the user currently likes the news item
         */
        @Override
        public boolean liked(Long userId, Long newsId) {
            String userLikeNewsKey = RedisUtils.getUserLikeNewsKey(userId);
            String userLikeNewsField = RedisUtils.getUserLikeNewsField(userId, newsId);
            String recordState = (String) stringRedisTemplate.opsForHash().get(userLikeNewsKey, userLikeNewsField);
            if (Objects.nonNull(recordState)) {
                return LIKE_STATE.equals(recordState);
            }
            // Not in Redis: fall back to the valid record in MySQL
            UserLikeNews userLikeNews = userLikeNewsMapper.selectValidByUserIdAndNewsId(userId, newsId);
            return Objects.nonNull(userLikeNews);
        }
    }
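The state transitions in like and unlike can be traced without Redis by replacing the hash, the counter, and the tracking set with plain collections. This is only a sketch: the class `LikeFlowSketch` and its fields are hypothetical stand-ins, not Redis calls, but the conditions are the same as in the service above.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// In-memory stand-in for the Redis hash, counter, and "touched newsId" set.
final class LikeFlowSketch {
    final Map<String, String> likeState = new HashMap<>(); // "userId:newsId" -> "1" / "0"
    final Map<Long, Long> likeCount = new HashMap<>();     // newsId -> running count
    final Set<Long> changedNews = new HashSet<>();         // newsIds waiting to be persisted

    void like(long userId, long newsId) {
        String field = userId + ":" + newsId;
        // Same guard as the service: skip only if a like is already recorded.
        if (!"1".equals(likeState.get(field))) {
            likeState.put(field, "1");
            likeCount.merge(newsId, 1L, Long::sum);
            changedNews.add(newsId);
        }
    }

    void unlike(long userId, long newsId) {
        String field = userId + ":" + newsId;
        // Same guard as the service: skip only if an unlike is already recorded.
        if (!"0".equals(likeState.get(field))) {
            likeState.put(field, "0");
            likeCount.merge(newsId, -1L, Long::sum);
            changedNews.add(newsId);
        }
    }

    long count(long newsId) {
        return likeCount.getOrDefault(newsId, 0L);
    }

    public static void main(String[] args) {
        LikeFlowSketch flow = new LikeFlowSketch();
        flow.like(1L, 42L);
        flow.like(1L, 42L);   // repeated like is ignored
        System.out.println(flow.count(42L)); // 1
        flow.unlike(1L, 42L);
        System.out.println(flow.count(42L)); // 0
    }
}
```

Repeated likes do not double count. Note, however, that with these guards an unlike for a pair that has no field at all still decrements the counter, so callers probably need to make sure an unlike is only sent for something the user has liked.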

scan

Finally, on persistence. Read the following article first:

https://aijishu.com/a/1060000000007477

It covers using scan instead of keys.

Then see:

https://cloud.tencent.com/developer/article/1650002

https://redis.io/commands/scan
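The cursor loop that scan requires can be sketched without a Redis server. In the simulation below, the class `ScanLoopSketch`, the `Page` type, and the use of a list index as the cursor are all assumptions made for illustration; a real Redis cursor is an opaque value, COUNT is only a hint, and an element may be returned more than once. What carries over is the shape of the loop: start at cursor 0, fetch a batch, and stop when the returned cursor is 0 again.

```java
import java.util.ArrayList;
import java.util.List;

// Simulated cursor iteration: batches of at most `count`, finished when the cursor returns to 0.
final class ScanLoopSketch {

    // One simulated reply: the next cursor plus a batch of elements.
    static final class Page {
        final int nextCursor;
        final List<String> items;

        Page(int nextCursor, List<String> items) {
            this.nextCursor = nextCursor;
            this.items = items;
        }
    }

    // Simulated server side: returns up to `count` items starting at `cursor`.
    static Page scan(List<String> data, int cursor, int count) {
        int end = Math.min(cursor + count, data.size());
        int next = end >= data.size() ? 0 : end; // 0 signals the end of the iteration
        return new Page(next, new ArrayList<>(data.subList(cursor, end)));
    }

    // Client side: keeps calling scan until the returned cursor is 0.
    static List<String> scanAll(List<String> data, int count) {
        List<String> out = new ArrayList<>();
        int cursor = 0;
        do {
            Page page = scan(data, cursor, count);
            out.addAll(page.items);
            cursor = page.nextCursor;
        } while (cursor != 0);
        return out;
    }

    public static void main(String[] args) {
        List<String> fields = new ArrayList<>();
        for (int i = 0; i < 10; i++) {
            fields.add("1001:" + i);
        }
        System.out.println(scanAll(fields, 3).size()); // 10
    }
}
```

Each call handles only a small batch, which is why scan does not block the server for long the way keys can on a large keyspace.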

The persistence code below uses scan and still has an open cursor question (see the TODO):

    @Override
    @Transactional(rollbackFor = Exception.class)
    @Scheduled(initialDelay = 60 * 1000, fixedDelay = 5 * 60 * 1000)
    public void persistUserLikeNews() {
        // Scheduled persistence: walk every hash key touched since the last run
        Set<String> keys = stringRedisTemplate.opsForSet().members(RedisKeyConstants.USER_LIKE_NEWS_KEY_SET);
        if (Objects.isNull(keys)) {
            return;
        }
        for (String key : keys) {
            // TODO cursor problem
            // try-with-resources closes the cursor even if persistence throws
            try (Cursor<Map.Entry<Object, Object>> cursor = stringRedisTemplate.opsForHash().scan(key,
                    ScanOptions.scanOptions().match("*").count(RedisUtils.SCAN_COUNT).build())) {
                while (cursor.hasNext()) {
                    Map.Entry<Object, Object> entry = cursor.next();
                    String likeRecordField = (String) entry.getKey();
                    UserLikeNews userLikeRecord = getUserLikeNews(likeRecordField);
                    // ??? Should also check whether the user and the news still exist
                    UserLikeNews userLikeNews = userLikeNewsMapper.selectByUserIdAndNewsId(
                            userLikeRecord.getUserId(), userLikeRecord.getNewsId());
                    boolean haveRecord = Objects.nonNull(userLikeNews);
                    String state = (String) entry.getValue();
                    if (LikeConstants.LIKE.equals(state)) {
                        // Liked: re-validate the existing row, or insert a new one
                        if (haveRecord) {
                            userLikeNews.setValid(true);
                            userLikeNewsMapper.updateByPrimaryKeySelective(userLikeNews);
                        } else {
                            userLikeNewsMapper.insertSelective(userLikeRecord);
                        }
                    } else if (LikeConstants.UNLIKE.equals(state)) {
                        // Unliked: mark the existing row invalid; nothing to do if there is no row
                        if (haveRecord) {
                            userLikeNews.setValid(false);
                            userLikeNewsMapper.updateByPrimaryKeySelective(userLikeNews);
                        }
                    }
                    // Delete the persisted field. On an exception MySQL rolls back with the
                    // transaction, but this Redis delete is not rolled back.
                    stringRedisTemplate.opsForHash().delete(key, likeRecordField);
                }
            } catch (IOException e) {
                // Thrown by the implicit cursor.close()
                LOGGER.error("Failed to close cursor", e);
            }
            // If the hash has no fields left to persist, remove its key from the tracking set
            if (stringRedisTemplate.opsForHash().size(key) <= 0) {
                stringRedisTemplate.opsForSet().remove(RedisKeyConstants.USER_LIKE_NEWS_KEY_SET, key);
            }
        }
    }

The rest of the scheduled task code is not posted here; there are many ways to implement it.

summary

Often, only practice reveals the truth.

At first I thought the implementation would be very simple. Once I started, I kept running into problems and it did not go smoothly. Practice, practice.