1. Redis Cluster

Early distributed Redis deployment schemes:

- Client-side partitioning: the client program decides which Redis node each key is written to. However, the client must itself handle write distribution, high-availability management, failover, and so on.

- Proxy scheme: a Redis proxy is built with third-party software. The client connects to the proxy layer, and the proxy layer distributes keys to the Redis nodes. This is simple for the client, but adding or removing cluster nodes is cumbersome. The proxy itself is also a single point of failure and a performance bottleneck.

The Sentinel mechanism solves Redis high availability. When the master fails, Sentinel automatically promotes a slave to master, so the Redis service keeps working. It does not, however, remove the single-machine write bottleneck: write performance on one machine is limited by its memory size, concurrency, and NIC bandwidth. Therefore, starting with Redis 3.0, Redis officially introduced Redis Cluster, a decentralized architecture with no central node. In Redis Cluster, each node stores its own data and the state of the entire cluster, and each node is connected to all other nodes. Its characteristics are as follows:

- All Redis nodes are interconnected and check each other with PING/PONG messages

- A node is judged to have failed only when more than half of the master nodes in the cluster detect its failure

- Clients connect to Redis directly, without a proxy; the application must be configured with the IP addresses of all the Redis servers

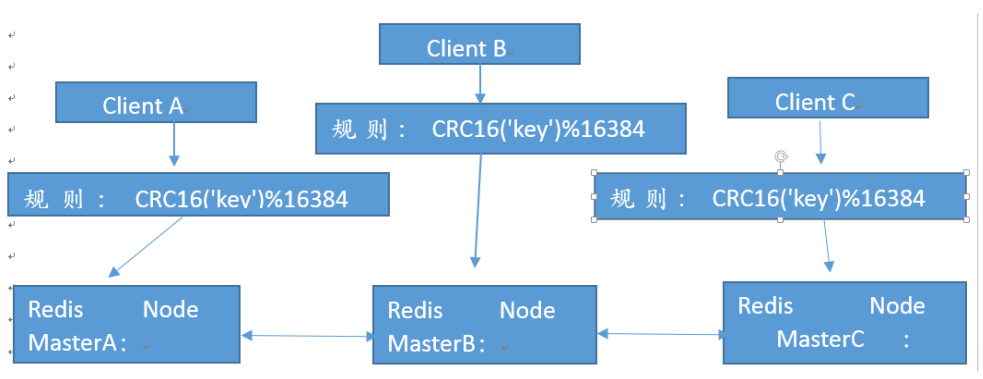

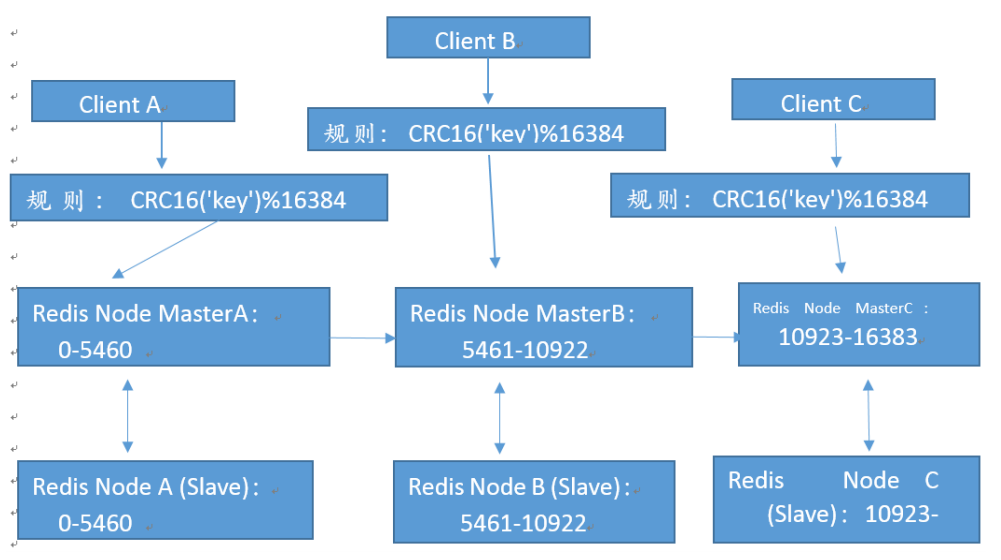

- Redis Cluster maps all Redis nodes onto slots 0-16383. Each read or write must be performed on the node that owns the key's slot. As a result, concurrent capacity grows with the number of Redis nodes: N master nodes give roughly N times the capacity of a single node

- Redis Cluster allocates the 16384 slots in advance. When a key is written to the cluster, the value of CRC16(key) mod 16384 determines which slot the key belongs to, and therefore which Redis node it is written to. This effectively removes the single-machine bottleneck
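The slot calculation can be made concrete with a minimal Python sketch of CRC16(key) mod 16384. It assumes the CRC16 variant named in the Redis Cluster specification (CCITT/XMODEM: polynomial 0x1021, initial value 0). Real Redis also honors hash tags (a `{...}` section in the key), and this sketch deliberately omits them. `crc16_xmodem` and `key_slot` are hypothetical helper names.

```python
def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC16-CCITT (XMODEM variant): polynomial 0x1021, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8  # feed the next byte into the high 8 bits
        for _ in range(8):
            if crc & 0x8000:  # top bit set: shift and apply the polynomial
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Slot = CRC16(key) mod 16384. Hash tags ({...}) are NOT handled here."""
    return crc16_xmodem(key.encode("utf-8")) % 16384


if __name__ == "__main__":
    print(key_slot("key1"))  # 9189
```

The value 9189 for `key1` agrees with the `MOVED 9189` reply in section 1.2.5.4, which is a useful sanity check for the sketch.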

1.1 Redis cluster architecture

1.1.1 Basic architecture of Redis Cluster

Suppose there are three master nodes, A, B, and C, and the 16384 hash slots are divided among them. The slot ranges served by the three nodes are then:

Node A covers 0-5460

Node B covers 5461-10922

Node C covers 10923-16383
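These ranges come from dividing 16384 as evenly as possible into contiguous blocks, one per master. The Python sketch below reproduces them. The rounding rule (round half up, with the last master absorbing any remainder) is an assumption chosen to match these numbers, not something quoted from the Redis source. `split_slots` is a hypothetical helper.

```python
import math

TOTAL_SLOTS = 16384


def split_slots(num_masters: int, total: int = TOTAL_SLOTS) -> list[tuple[int, int]]:
    """Divide slots 0..total-1 into contiguous (first, last) ranges, one per master."""
    per_node = total / num_masters
    ranges = []
    first = 0
    cursor = 0.0
    for i in range(num_masters):
        # Round half up via floor(x + 0.5); Python's round() uses banker's rounding.
        last = math.floor(cursor + per_node - 1 + 0.5)
        if i == num_masters - 1 or last > total - 1:
            last = total - 1  # the last master absorbs any remainder
        last = max(last, first)
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


if __name__ == "__main__":
    for name, (lo, hi) in zip("ABC", split_slots(3)):
        print(f"Node {name} covers {lo}-{hi}")
```

With four masters, the same function gives four blocks of exactly 4096 slots each.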

1.1.2 Redis Cluster master-slave architecture

Although Redis Cluster solves the concurrency problem, it introduces a new one: how to make each Redis master highly available. The solution is to give every master at least one slave, which is promoted automatically if its master fails.

1.2 Deploy Redis Cluster

1.2.1 Environment planning

| IP | Hostname | Role |
|---|---|---|
| 10.10.100.130 | node1 | Master |
| 10.10.100.131 | node2 | Master |
| 10.10.100.132 | node3 | Master |
| 10.10.100.133 | node4 | Slave |
| 10.10.100.134 | node5 | Slave |
| 10.10.100.135 | node6 | Slave |

In addition, two servers are reserved for testing node addition:

| IP | Hostname | Role |
|---|---|---|
| 10.10.100.136 | node7 | Spare |
| 10.10.100.137 | node8 | Spare |

The Redis installation directory is /apps/redis. For installation and configuration, see "Redis I - installation, configuration and persistence".

1.2.2 Prerequisites for creating a Redis cluster

- Every Redis node uses the same hardware configuration, the same password, and the same Redis version

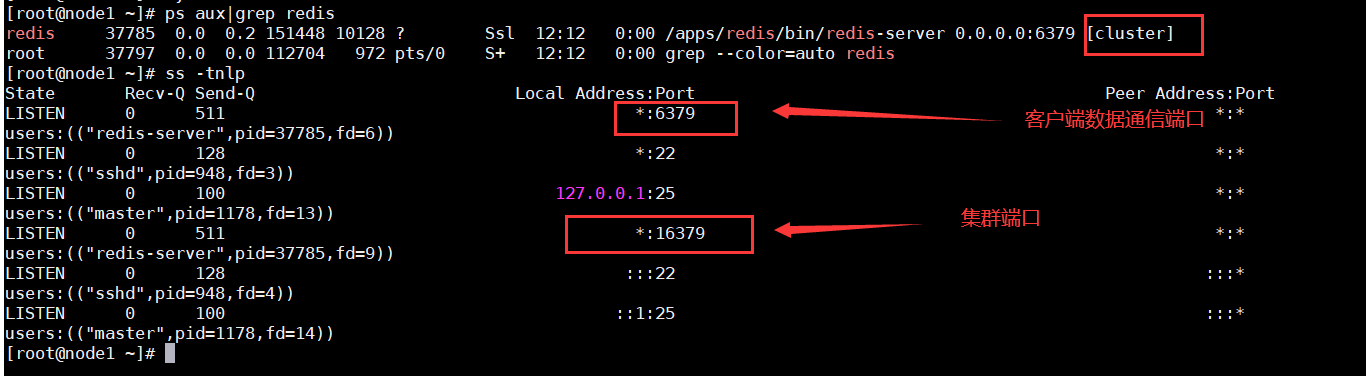

- Parameters that must be enabled on every node:

cluster-enabled yes # enables cluster mode; once it is enabled, the redis process name shows [cluster]

cluster-config-file nodes-6380.conf # this file is created and maintained automatically by Redis Cluster; no manual editing is needed

- All Redis servers must hold no data

- Each node must be started as a standalone Redis instance without any keys

1.2.3 Start Redis on all nodes

#Enable cluster mode in the configuration file; every node needs this

vim /apps/redis/etc/redis.conf

#Add the following configuration

cluster-enabled yes

cluster-config-file nodes-6379.conf

#Start Redis on all nodes

systemctl start redis

Check the Redis service status

1.2.4 Create the cluster

1.2.4.1 Redis 3 and 4 versions:

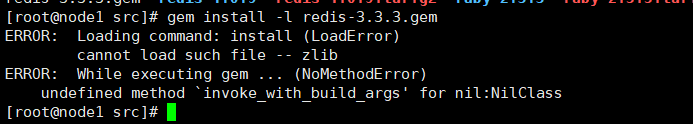

Redis 3 and 4 require the cluster management tool redis-trib.rb. It is officially provided by Redis and ships in the src directory of the Redis source tree. It is a simple, practical tool built on the cluster commands that Redis provides. redis-trib.rb was written in Ruby by the Redis author, and the Ruby version that yum installs on CentOS is too old, as shown below:

[root@node1 ~]# yum install ruby rubygems -y

[root@node1 ~]# find / -name redis-trib.rb

/usr/local/src/redis-4.0.9/src/redis-trib.rb

[root@node1 ~]# cp /usr/local/src/redis-4.0.9/src/redis-trib.rb /usr/bin/

[root@node1 ~]# gem install redis

Fetching: redis-4.4.0.gem (100%)

ERROR: Error installing redis:

redis requires Ruby version >= 2.3.0.

Fix the outdated Ruby version

Ruby download address: https://cache.ruby-lang.org/pub/ruby/

[root@node1 ~]# yum remove ruby rubygems -y

[root@node1 ~]# cd /usr/local/src

[root@node1 src]# wget https://cache.ruby-lang.org/pub/ruby/2.5/ruby-2.5.5.tar.gz

[root@node1 src]# tar xf ruby-2.5.5.tar.gz

[root@node1 src]# cd ruby-2.5.5

[root@node1 ruby-2.5.5]# ./configure

[root@node1 ruby-2.5.5]# make && make install

[root@node1 ruby-2.5.5]# ln -sv /usr/local/bin/gem /usr/bin/

#Install the redis gem offline; download page: https://rubygems.org/gems/redis

[root@node1 src]# wget https://rubygems.org/downloads/redis-4.1.0.gem

[root@node1 src]# gem install -l redis-4.1.0.gem

If the installation reports errors, see https://blog.csdn.net/xiaocong66666/article/details/82892808

Verify the redis-trib.rb command

[root@node1 ~]# redis-trib.rb

Usage: redis-trib <command> <options> <arguments ...>

create host1:port1 ... hostN:portN #Create cluster

--replicas <arg> #Specifies the number of replicas of the master

check host:port #Check cluster information

info host:port #View cluster host information

fix host:port #Repair cluster

--timeout <arg>

reshard host:port #Migrate slots of the specified host online (hot resharding)

--from <arg>

--to <arg>

--slots <arg>

--yes

--timeout <arg>

--pipeline <arg>

rebalance host:port #Balance the number of slots across the hosts in the cluster

--weight <arg>

--auto-weights

--use-empty-masters

--timeout <arg>

--simulate

--pipeline <arg>

--threshold <arg>

add-node new_host:new_port existing_host:existing_port #Add host to cluster

--slave

--master-id <arg>

del-node host:port node_id #Delete host

set-timeout host:port milliseconds #Set the timeout for the node

call host:port command arg arg .. arg #Execute commands on all nodes on the cluster

import host:port #Import the data from the external redis server to the current cluster

--from <arg>

--copy

--replace

help (show this help)

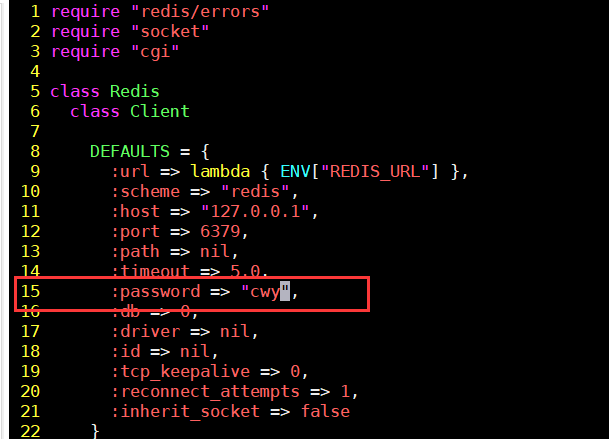

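1.2.4.1.1 Set the Redis password used by redis-trib.rb

redis-trib.rb connects to the nodes through the Ruby redis gem, so the Redis login password has to be set in the gem's client.rb: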

[root@node1 ~]# vim /usr/local/lib/ruby/gems/2.5.0/gems/redis-3.3.3/lib/redis/client.rb

1.2.4.1.2 Create the Redis cluster

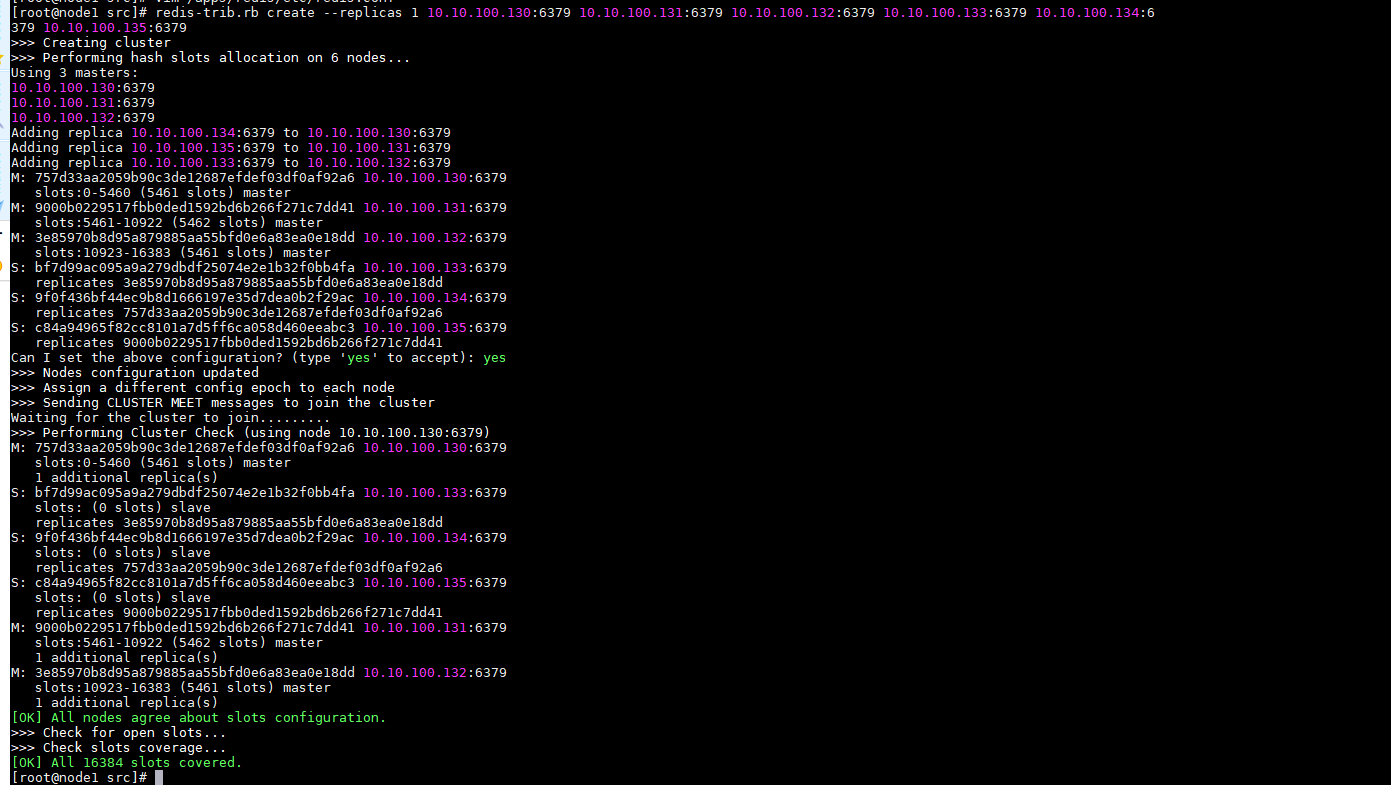

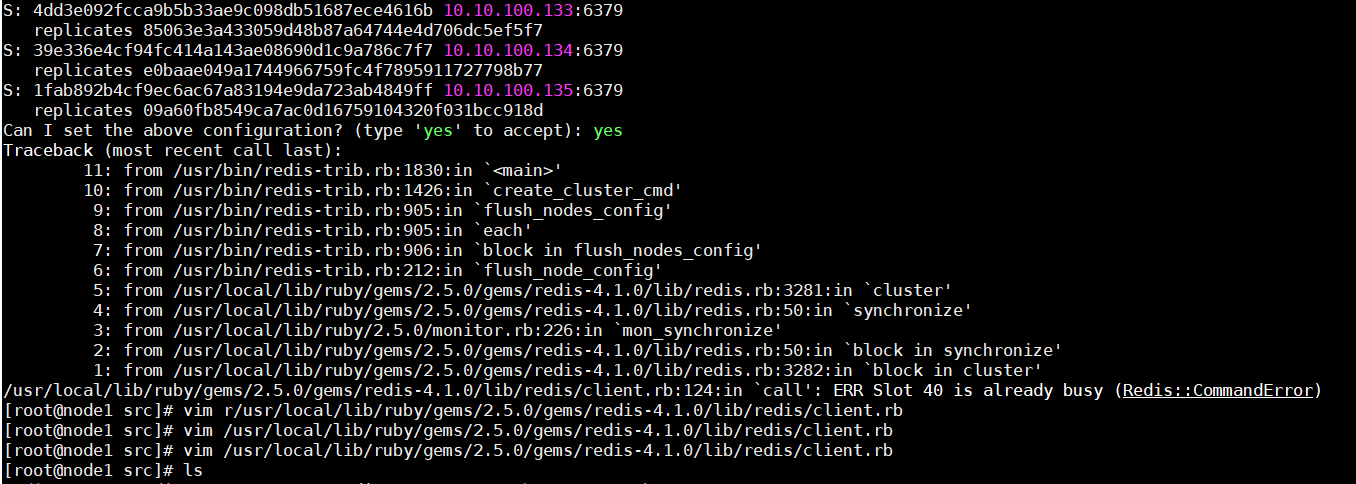

[root@node1 src]# redis-trib.rb create --replicas 1 10.10.100.130:6379 10.10.100.131:6379 10.10.100.132:6379 10.10.100.133:6379 10.10.100.134:6379 10.10.100.135:6379

If creation fails with an error saying that a node is not empty, that Redis node still contains data.

In that case, clear the Redis data, delete the persistence files, and restart Redis:

[root@node1 ~]# redis-cli

127.0.0.1:6379> AUTH cwy

OK

127.0.0.1:6379> FLUSHALL

OK

127.0.0.1:6379> cluster reset

OK

[root@node1 ~]# rm -f /apps/redis/data/*

[root@node1 ~]# systemctl restart redis

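1.2.4.2 Redis 5:

In Redis 5, the functionality of redis-trib.rb is built into redis-cli (the --cluster option), so Ruby is no longer required: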

[root@node1 ~]# redis-cli -a cwy --cluster create 10.10.100.130:6379 10.10.100.131:6379 10.10.100.132:6379 10.10.100.133:6379 10.10.100.134:6379 10.10.100.135:6379 --cluster-replicas 1
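`--replicas 1` (Redis 3/4) and `--cluster-replicas 1` (Redis 5) both mean one slave per master, so the six nodes become three masters and three slaves. The Python sketch below shows only that arithmetic together with the three-master minimum. It is simplified: the real tools also try to place each slave on a different host than its master, so their pairing differs. In the CLUSTER NODES output in 1.2.5.3, for example, 10.10.100.133 replicates 10.10.100.132, not 10.10.100.130. `plan_cluster` is a hypothetical helper.

```python
def plan_cluster(nodes: list[str], replicas: int) -> dict[str, list[str]]:
    """Simplified create plan: the first N nodes become masters, the rest slaves."""
    masters_count = len(nodes) // (replicas + 1)
    if masters_count < 3:
        raise ValueError("Redis Cluster requires at least 3 master nodes")
    masters = nodes[:masters_count]
    plan: dict[str, list[str]] = {m: [] for m in masters}
    # Remaining nodes are handed out round-robin, one slave per master per pass.
    for i, node in enumerate(nodes[masters_count:]):
        plan[masters[i % masters_count]].append(node)
    return plan


if __name__ == "__main__":
    hosts = [f"10.10.100.{n}:6379" for n in range(130, 136)]
    for master, slaves in plan_cluster(hosts, replicas=1).items():
        print(master, "->", slaves)
```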

1.2.5 Verify Redis cluster status

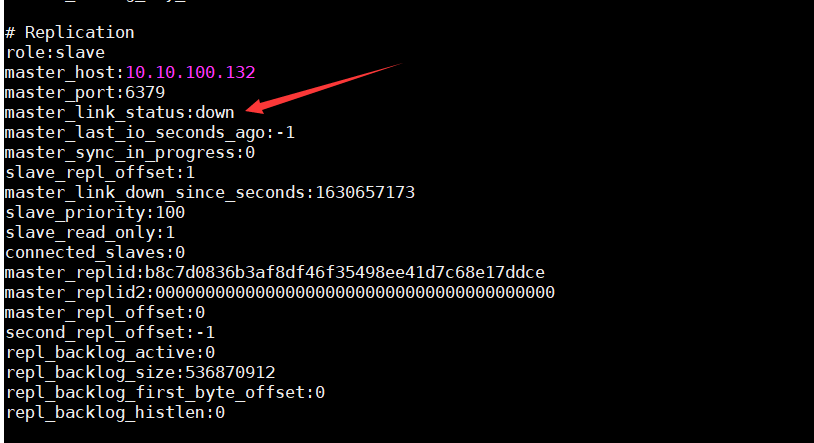

Because masterauth (the password a slave uses to authenticate to its master) was not set, the slaves have never connected to their masters, even though the cluster is already running. Therefore, set masterauth on each slave with CONFIG SET from the console, or write it into each Redis configuration file. Ideally, do both: set it at runtime from the console, then persist it in the configuration file.

#Set masterauth on each slave node

10.10.100.133:6379> CONFIG SET masterauth cwy

OK

10.10.100.134:6379> CONFIG SET masterauth cwy

OK

10.10.100.135:6379> CONFIG SET masterauth cwy

OK

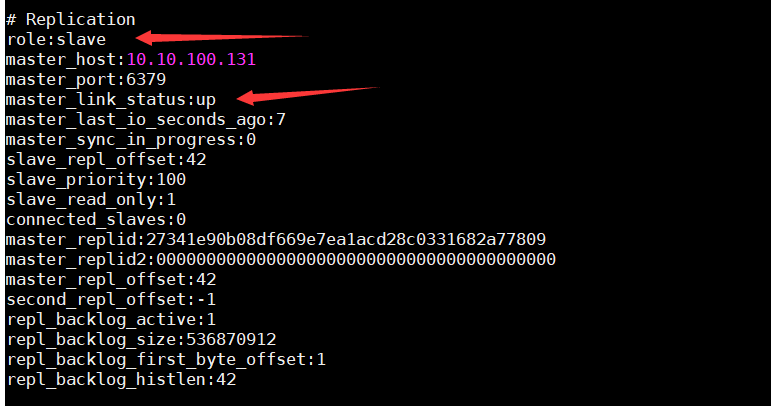

Confirm slave status

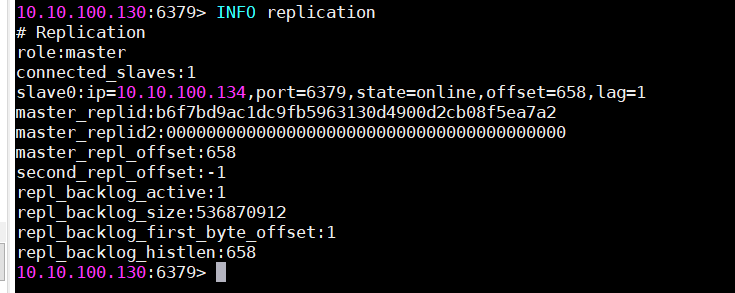

1.2.5.1 Verify master status

1.2.5.2 Verify cluster status

10.10.100.130:6379> CLUSTER INFO

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:2534

cluster_stats_messages_pong_sent:2500

cluster_stats_messages_sent:5034

cluster_stats_messages_ping_received:2495

cluster_stats_messages_pong_received:2534

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:5034
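For scripted monitoring, the field:value output of CLUSTER INFO can be parsed and checked. The Python sketch below does that. Which fields count as "healthy" is an assumption for illustration, and fetching the text (for example with redis-cli) is left out. The helper names are hypothetical.

```python
def parse_cluster_info(text: str) -> dict[str, str]:
    """Parse CLUSTER INFO output ("field:value" tokens) into a dict."""
    info: dict[str, str] = {}
    for token in text.split():  # works for one-per-line or space-separated output
        field, sep, value = token.partition(":")
        if sep:
            info[field] = value
    return info


def cluster_is_healthy(info: dict[str, str]) -> bool:
    """Assumed health rule: state ok and all 16384 slots assigned and ok."""
    return (
        info.get("cluster_state") == "ok"
        and info.get("cluster_slots_assigned") == "16384"
        and info.get("cluster_slots_ok") == "16384"
        and info.get("cluster_slots_fail", "0") == "0"
    )


if __name__ == "__main__":
    sample = """cluster_state:ok
cluster_slots_assigned:16384
cluster_slots_ok:16384
cluster_slots_pfail:0
cluster_slots_fail:0
cluster_known_nodes:6
cluster_size:3"""
    info = parse_cluster_info(sample)
    print(info["cluster_size"], cluster_is_healthy(info))  # 3 True
```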

1.2.5.3 View the node mapping (masters and their slaves)

10.10.100.130:6379> CLUSTER NODES

bf7d99ac095a9a279dbdf25074e2e1b32f0bb4fa 10.10.100.133:6379@16379 slave 3e85970b8d95a879885aa55bfd0e6a83ea0e18dd 0 1630658036192 4 connected

9f0f436bf44ec9b8d1666197e35d7dea0b2f29ac 10.10.100.134:6379@16379 slave 757d33aa2059b90c3de12687efdef03df0af92a6 0 1630658037200 5 connected

c84a94965f82cc8101a7d5ff6ca058d460eeabc3 10.10.100.135:6379@16379 slave 9000b0229517fbb0ded1592bd6b266f271c7dd41 0 1630658038207 6 connected

9000b0229517fbb0ded1592bd6b266f271c7dd41 10.10.100.131:6379@16379 master - 0 1630658036000 2 connected 5461-10922

3e85970b8d95a879885aa55bfd0e6a83ea0e18dd 10.10.100.132:6379@16379 master - 0 1630658036000 3 connected 10923-16383

757d33aa2059b90c3de12687efdef03df0af92a6 10.10.100.130:6379@16379 myself,master - 0 1630658036000 1 connected 0-5460
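Each CLUSTER NODES line has space-separated fields: node ID, ip:port@bus-port, flags, master ID ("-" for masters), ping-sent, pong-received, config epoch, link state, and then any slot ranges. The Python sketch below parses that layout and rebuilds the master-to-slave mapping shown above. The class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterNode:
    node_id: str
    addr: str                # "ip:port" with the "@cluster-bus-port" suffix removed
    flags: list[str]         # e.g. ["myself", "master"] or ["slave"]
    master_id: str           # "-" for a master
    slots: list[str] = field(default_factory=list)

    @property
    def is_master(self) -> bool:
        return "master" in self.flags


def parse_cluster_nodes(text: str) -> list[ClusterNode]:
    """Parse CLUSTER NODES output: one node per line, space-separated fields."""
    nodes = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 8:
            continue  # skip blank or incomplete lines
        # parts[4:8] are ping-sent, pong-recv, config-epoch and link-state
        nodes.append(ClusterNode(
            node_id=parts[0],
            addr=parts[1].split("@")[0],
            flags=parts[2].split(","),
            master_id=parts[3],
            slots=parts[8:],
        ))
    return nodes


def replication_map(nodes: list[ClusterNode]) -> dict[str, list[str]]:
    """Map each master's address to the addresses of the slaves replicating it."""
    by_id = {n.node_id: n for n in nodes}
    result: dict[str, list[str]] = {n.addr: [] for n in nodes if n.is_master}
    for n in nodes:
        if not n.is_master and n.master_id in by_id:
            result.setdefault(by_id[n.master_id].addr, []).append(n.addr)
    return result


if __name__ == "__main__":
    sample = (
        "bf7d99ac095a9a279dbdf25074e2e1b32f0bb4fa 10.10.100.133:6379@16379 slave "
        "3e85970b8d95a879885aa55bfd0e6a83ea0e18dd 0 1630658036192 4 connected\n"
        "3e85970b8d95a879885aa55bfd0e6a83ea0e18dd 10.10.100.132:6379@16379 master "
        "- 0 1630658036000 3 connected 10923-16383\n"
    )
    print(replication_map(parse_cluster_nodes(sample)))
    # {'10.10.100.132:6379': ['10.10.100.133:6379']}
```

The same parsed list also answers "what is the ID of the master at this address?" (`{n.addr: n.node_id for n in nodes}`). That ID is what CLUSTER REPLICATE needs when a slave is attached later in this article.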

1.2.5.4 Verify writing keys to the cluster

10.10.100.130:6379> set key1 v1 #The key's slot is computed, and the key must be written to the node that owns that slot

(error) MOVED 9189 10.10.100.131:6379 #Slot 9189 is not on the current node, so the write is rejected

10.10.100.131:6379> set key1 v1 #Writing on the node that owns the slot succeeds

OK

10.10.100.131:6379> KEYS *

1) "key1"

10.10.100.130:6379> KEYS * #The key does not exist on the other master nodes

(empty list or set)
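The MOVED error carries everything a client needs to retry: the slot number and the ip:port of the owning node. The Python sketch below parses that reply and retries on the named node. `send` is a hypothetical stand-in for a real client call (here a fake that returns reply text), and the helper names are hypothetical too.

```python
from typing import Callable, Optional


def parse_moved(reply: str) -> Optional[tuple[int, str]]:
    """Return (slot, "ip:port") for a MOVED error reply, otherwise None."""
    reply = reply.removeprefix("(error)").strip()  # redis-cli prints "(error)" first
    parts = reply.split()
    if len(parts) == 3 and parts[0] == "MOVED":
        return int(parts[1]), parts[2]
    return None


def send_with_redirects(
    node: str, send: Callable[[str], str], max_hops: int = 5
) -> tuple[str, str]:
    """Send a command to `node`, following MOVED replies to the owning node."""
    for _ in range(max_hops):
        reply = send(node)
        moved = parse_moved(reply)
        if moved is None:
            return node, reply
        node = moved[1]  # retry on the node that owns the slot
    raise RuntimeError("too many MOVED redirects")


if __name__ == "__main__":
    # Fake cluster: only 10.10.100.131 owns slot 9189 (where key1 lives).
    def fake_send(node: str) -> str:
        return "OK" if node == "10.10.100.131:6379" else "MOVED 9189 10.10.100.131:6379"

    print(send_with_redirects("10.10.100.130:6379", fake_send))
    # ('10.10.100.131:6379', 'OK')
```

In practice, `redis-cli -c` enables cluster mode and follows MOVED and ASK redirections automatically, so the manual reconnect above is not needed when it is used.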

1.2.5.5 Cluster status verification and monitoring

redis 3|4

[root@node1 ~]# redis-trib.rb check 10.10.100.130:6379

>>> Performing Cluster Check (using node 10.10.100.130:6379)

M: 757d33aa2059b90c3de12687efdef03df0af92a6 10.10.100.130:6379

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: bf7d99ac095a9a279dbdf25074e2e1b32f0bb4fa 10.10.100.133:6379

slots: (0 slots) slave

replicates 3e85970b8d95a879885aa55bfd0e6a83ea0e18dd

S: 9f0f436bf44ec9b8d1666197e35d7dea0b2f29ac 10.10.100.134:6379

slots: (0 slots) slave

replicates 757d33aa2059b90c3de12687efdef03df0af92a6

S: c84a94965f82cc8101a7d5ff6ca058d460eeabc3 10.10.100.135:6379

slots: (0 slots) slave

replicates 9000b0229517fbb0ded1592bd6b266f271c7dd41

M: 9000b0229517fbb0ded1592bd6b266f271c7dd41 10.10.100.131:6379

slots:5461-10922 (5462 slots) master

1 additional replica(s)

M: 3e85970b8d95a879885aa55bfd0e6a83ea0e18dd 10.10.100.132:6379

slots:10923-16383 (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@node1 ~]# redis-trib.rb info 10.10.100.130:6379

10.10.100.130:6379 (757d33aa...) -> 0 keys | 5461 slots | 1 slaves.

10.10.100.131:6379 (9000b022...) -> 1 keys | 5462 slots | 1 slaves.

10.10.100.132:6379 (3e85970b...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 1 keys in 3 masters.

0.00 keys per slot on average.

redis 5

[root@node1 ~]# redis-cli -a cwy --cluster check 10.10.100.130:6379
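The key result of the check is "All 16384 slots covered": every slot has exactly one owning master. The Python sketch below applies the same idea to the slot-range strings shown in the output. The helper names are hypothetical, and this is not how redis-trib.rb or redis-cli implement their check.

```python
TOTAL_SLOTS = 16384


def parse_ranges(spec: str) -> set[int]:
    """Turn "0-5460" or "1365-5460,12288-13652" into a set of slot numbers."""
    slots: set[int] = set()
    for part in spec.replace(",", " ").split():
        lo, _, hi = part.partition("-")
        slots.update(range(int(lo), int(hi or lo) + 1))  # a single slot has no "-"
    return slots


def check_coverage(assignment: dict[str, str]) -> list[str]:
    """Return problems found; an empty list means every slot has exactly one owner."""
    problems: list[str] = []
    owner: dict[int, str] = {}
    for node, spec in assignment.items():
        for slot in sorted(parse_ranges(spec)):
            if slot in owner:
                problems.append(f"slot {slot} owned by {owner[slot]} and {node}")
            owner[slot] = node
    missing = [s for s in range(TOTAL_SLOTS) if s not in owner]
    if missing:
        problems.append(f"{len(missing)} slot(s) not covered")
    return problems


if __name__ == "__main__":
    layout = {
        "10.10.100.130:6379": "0-5460",
        "10.10.100.131:6379": "5461-10922",
        "10.10.100.132:6379": "10923-16383",
    }
    print(check_coverage(layout))  # []
```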

1.3 Redis cluster node maintenance

After a cluster has been running for a long time, adjustments become unavoidable because of hardware failures, network re-planning, business growth, and similar reasons. Typical adjustments are adding Redis nodes, removing nodes, migrating nodes, and replacing servers.

Adding and removing nodes involves reallocating existing slots and migrating data.

1.3.1 Cluster maintenance: adding nodes dynamically

A new Redis node must run the same version and configuration as the existing Redis nodes. Here two Redis nodes are started: one becomes the new master and the other becomes its slave.

10.10.100.136 is added to the cluster dynamically, without affecting the business or losing data.

Copy the configuration file of an existing Redis node to the Redis installation directory on 10.10.100.136 (and 10.10.100.137). Make sure to adjust the listening IP in the configuration file.

#Copy the configuration file to the new nodes

[root@node1 ~]# scp redis.conf 10.10.100.136:/usr/local/redis/etc/

[root@node1 ~]# scp redis.conf 10.10.100.137:/usr/local/redis/etc/

#Start Redis

[root@node7 ~]# systemctl start redis

[root@node8 ~]# systemctl start redis

1.3.1.1 Add nodes to the cluster

add-node new_host:new_port existing_host:existing_port

The first argument is the IP:port of the new Redis node, and the second is the IP:port of an existing master in the cluster. A newly added node is a master by default, but it owns no slots, so slots must be reassigned to it.

Redis 3|4 addition method

[root@node1 ~]# redis-trib.rb add-node 10.10.100.136:6379 10.10.100.130:6379

[root@node1 ~]# redis-trib.rb add-node 10.10.100.137:6379 10.10.100.130:6379

Redis 5 addition method

redis-cli -a cwy --cluster add-node 10.10.100.137:6379 10.10.100.130:6379

redis-cli -a cwy --cluster add-node 10.10.100.136:6379 10.10.100.130:6379

1.3.1.2 Add a slave for the new master

Configure 10.10.100.137 as the slave of 10.10.100.136.

#Log in to 137

[root@node1 ~]# redis-cli -h 10.10.100.137

10.10.100.137:6379> AUTH cwy

OK

#View the current cluster nodes and find the ID of the target master

10.10.100.137:6379> CLUSTER NODES

0b8713d80eb27b6e530aa4af95c2180937673b63 10.10.100.134:6379@16379 slave be89d12fa71c151f495793d8faa8239ff0286796 0 1630663540819 1 connected

be89d12fa71c151f495793d8faa8239ff0286796 10.10.100.130:6379@16379 master - 0 1630663538000 1 connected 0-5460

ef7e819c7002befad0821eaeb32c0f08b23dbc4f 10.10.100.136:6379@16379 master - 0 1630663538806 0 connected

1cf7cebb9d522c697315fd9bab16e56e9dcb26b0 10.10.100.133:6379@16379 slave 280d04878bc488fbe97bde45c1414bbf11ea2701 0 1630663539000 3 connected

7669a2a1b392fb71e2dc61b6e07d6e1b38cc7e14 10.10.100.131:6379@16379 master - 0 1630663539811 2 connected 5461-10922

df05ae6038d1e1732b9692fcdfb0f7b57abce067 10.10.100.135:6379@16379 slave 7669a2a1b392fb71e2dc61b6e07d6e1b38cc7e14 0 1630663541824 2 connected

55ab5a70e4450ef5143cf0cde072b27d4c539291 10.10.100.137:6379@16379 myself,master - 0 1630663541000 7 connected

280d04878bc488fbe97bde45c1414bbf11ea2701 10.10.100.132:6379@16379 master - 0 1630663540000 3 connected 10923-16383

#Make it a slave; the command format is CLUSTER REPLICATE <master-node-id>

10.10.100.137:6379> CLUSTER REPLICATE ef7e819c7002befad0821eaeb32c0f08b23dbc4f

1.3.1.3 Reassign slots

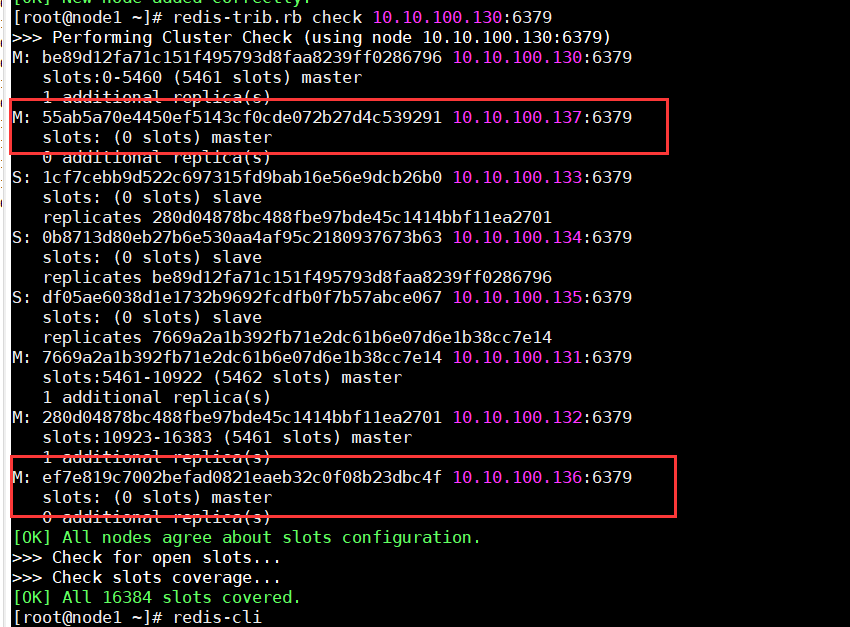

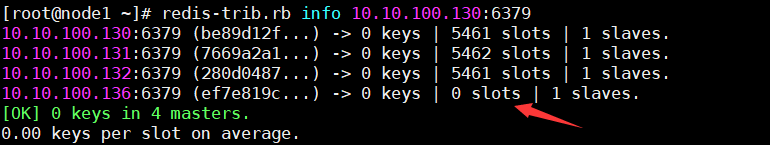

Verify that the newly added master node has no slots

redis 3|4 command

[root@node1 ~]# redis-trib.rb reshard 10.10.100.136:6379

>>> Performing Cluster Check (using node 10.10.100.136:6379)

M: ef7e819c7002befad0821eaeb32c0f08b23dbc4f 10.10.100.136:6379

slots: (0 slots) master

1 additional replica(s)

S: 1cf7cebb9d522c697315fd9bab16e56e9dcb26b0 10.10.100.133:6379

slots: (0 slots) slave

replicates 280d04878bc488fbe97bde45c1414bbf11ea2701

S: 0b8713d80eb27b6e530aa4af95c2180937673b63 10.10.100.134:6379

slots: (0 slots) slave

replicates be89d12fa71c151f495793d8faa8239ff0286796

M: 7669a2a1b392fb71e2dc61b6e07d6e1b38cc7e14 10.10.100.131:6379

slots:5461-10922 (5462 slots) master

1 additional replica(s)

S: df05ae6038d1e1732b9692fcdfb0f7b57abce067 10.10.100.135:6379

slots: (0 slots) slave

replicates 7669a2a1b392fb71e2dc61b6e07d6e1b38cc7e14

M: be89d12fa71c151f495793d8faa8239ff0286796 10.10.100.130:6379

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: 55ab5a70e4450ef5143cf0cde072b27d4c539291 10.10.100.137:6379

slots: (0 slots) slave

replicates ef7e819c7002befad0821eaeb32c0f08b23dbc4f

M: 280d04878bc488fbe97bde45c1414bbf11ea2701 10.10.100.132:6379

slots:10923-16383 (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 4096 #Number of slots to move to the new node

What is the receiving node ID? ef7e819c7002befad0821eaeb32c0f08b23dbc4f

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1:all

#Source nodes whose slots are moved to 10.10.100.136:6379; 'all' takes slots from all Redis master nodes automatically

#When a host is removed from the Redis cluster, the same method can move all of its slots to other Redis hosts

Ready to move 4096 slots.

Source nodes:

M: 7669a2a1b392fb71e2dc61b6e07d6e1b38cc7e14 10.10.100.131:6379

slots:5461-10922 (5462 slots) master

1 additional replica(s)

M: be89d12fa71c151f495793d8faa8239ff0286796 10.10.100.130:6379

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: 280d04878bc488fbe97bde45c1414bbf11ea2701 10.10.100.132:6379

slots:10923-16383 (5461 slots) master

1 additional replica(s)

Destination node:

M: ef7e819c7002befad0821eaeb32c0f08b23dbc4f 10.10.100.136:6379

slots: (0 slots) master

1 additional replica(s)

.........................

Moving slot 12286 from 280d04878bc488fbe97bde45c1414bbf11ea2701

Moving slot 12287 from 280d04878bc488fbe97bde45c1414bbf11ea2701

Do you want to proceed with the proposed reshard plan (yes/no)? yes #Confirm allocation

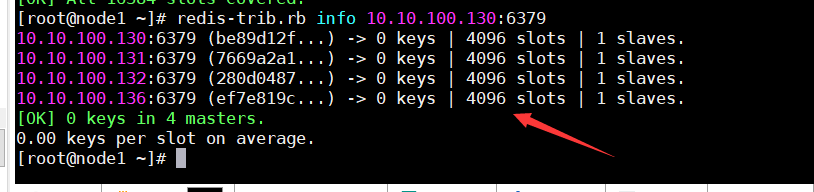
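With `all` as the source, the 4096 slots are taken from the three masters roughly in proportion to how many each one holds. The check output in 1.3.3.1 shows the result: 10.10.100.136 ends up with 0-1364, 5461-6826, and 10923-12287. That is 1365, 1366, and 1365 slots, taken from the lowest-numbered slots of each master. The Python sketch below reproduces that split. The rounding rule (the largest source rounds up, the others round down) is an assumption inferred from those numbers, not quoted from redis-trib.rb. `plan_reshard` is a hypothetical helper.

```python
import math


def plan_reshard(sources: dict[str, list[int]], num_slots: int) -> dict[str, list[int]]:
    """Choose which slots each source master gives up, proportionally to its size.

    Sources are visited from largest to smallest; the first rounds its share up,
    the rest round down, and each gives away its lowest-numbered slots. Because
    of rounding, the total can differ slightly from num_slots.
    """
    total = sum(len(slots) for slots in sources.values())
    order = sorted(sources, key=lambda name: len(sources[name]), reverse=True)
    plan: dict[str, list[int]] = {}
    for i, name in enumerate(order):
        share = num_slots / total * len(sources[name])
        count = math.ceil(share) if i == 0 else math.floor(share)
        plan[name] = sorted(sources[name])[:count]
    return plan


if __name__ == "__main__":
    sources = {
        "10.10.100.130:6379": list(range(0, 5461)),
        "10.10.100.131:6379": list(range(5461, 10923)),
        "10.10.100.132:6379": list(range(10923, 16384)),
    }
    for name, slots in plan_reshard(sources, 4096).items():
        print(name, f"{slots[0]}-{slots[-1]}", len(slots))
```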

1.3.1.4 Verify slot information

1.3.2 Cluster maintenance: removing nodes dynamically

When adding a node, the node is first added to the cluster and then slots are allocated to it. Removing a node is the reverse: first migrate the slots of the node being removed to other Redis nodes in the cluster, then delete the node. If a node's slots have not all been migrated, deleting it fails with a message that the node still holds data.

In this test, the 10.10.100.132 master node is removed from the cluster.

1.3.2.1 Migrate the master's slots to other masters

The source Redis master being migrated must hold no data; otherwise the migration reports an error and is forcibly interrupted.

Redis 3|4 command

[root@node1 ~]# redis-trib.rb reshard 10.10.100.130:6379

#If the migration fails, use this command to repair the cluster

[root@node1 ~]# redis-trib.rb fix 10.10.100.130:6379

Redis 5 command

redis-cli -a cwy --cluster reshard 10.10.100.130:6379

[root@node1 ~]# redis-trib.rb reshard 10.10.100.130:6379

>>> Performing Cluster Check (using node 10.10.100.130:6379)

........

#Number of slots to migrate from the master; here the 4096 slots of node 132 are divided manually and evenly among the other nodes

How many slots do you want to move (from 1 to 16384)? 1364

What is the receiving node ID? 7669a2a1b392fb71e2dc61b6e07d6e1b38cc7e14 #ID of the server receiving the slots

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1:280d04878bc488fbe97bde45c1414bbf11ea2701 #Server the slots are migrated from

Source node #2:done #Enter done when there are no other source masters

......

Do you want to proceed with the proposed reshard plan (yes/no)? yes #Continue

.........

#The remaining slots are allocated to the other nodes in the same way
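4096 is not divisible by 3, so one receiving master gets one extra slot. In the layout shown in 1.3.3.1, 10.10.100.136 received 1366 of node 132's slots (13653-15018), while 10.10.100.130 (12288-13652) and 10.10.100.131 (15019-16383) received 1365 each. Whatever amounts are entered in the reshard passes, they must add up to 4096 so that node 132 is left with no slots. A hypothetical Python helper that computes such a split:

```python
def even_split(count: int, receivers: list[str]) -> dict[str, int]:
    """Split `count` slots as evenly as possible; the first receivers get the extras."""
    base, extra = divmod(count, len(receivers))
    return {r: base + (1 if i < extra else 0) for i, r in enumerate(receivers)}


if __name__ == "__main__":
    plan = even_split(4096, ["10.10.100.136:6379", "10.10.100.130:6379", "10.10.100.131:6379"])
    print(plan)
```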

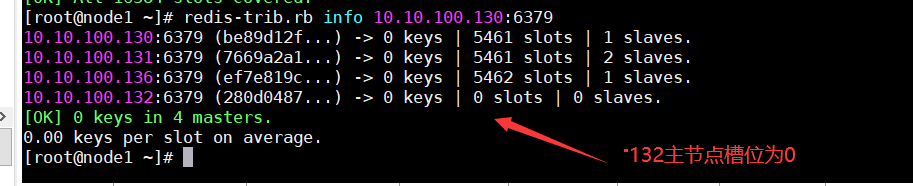

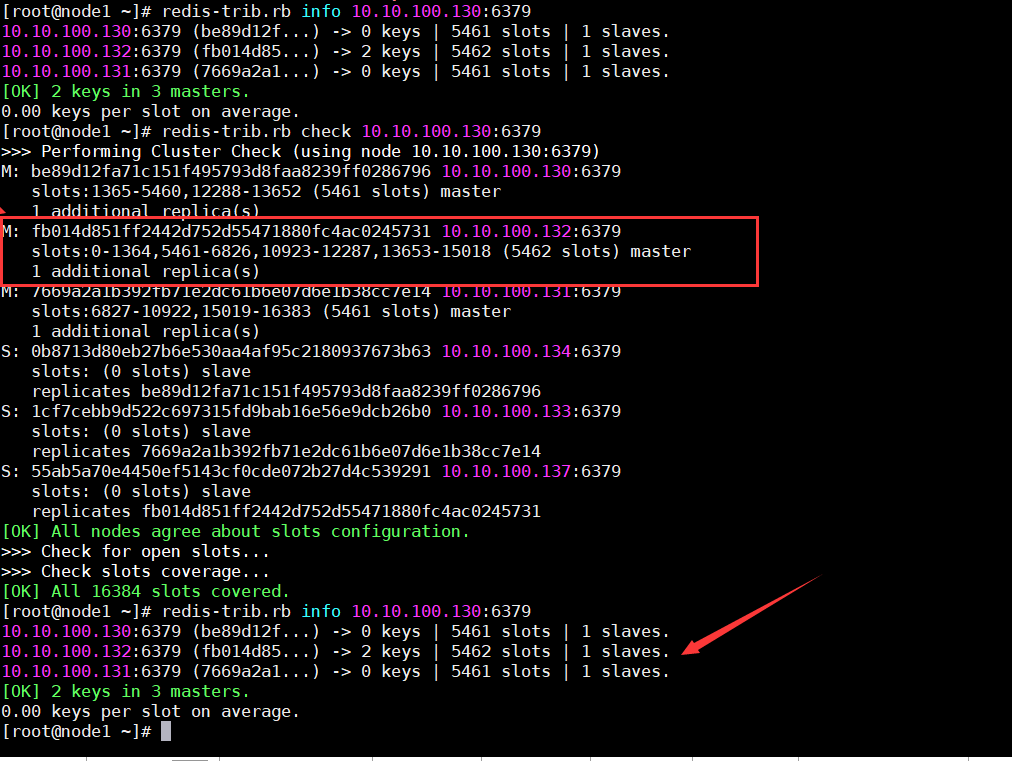

Verify that slot migration is complete

1.3.2.2 Delete the server from the cluster

[root@node1 ~]# redis-trib.rb del-node 10.10.100.132:6379 280d04878bc488fbe97bde45c1414bbf11ea2701

>>> Removing node 280d04878bc488fbe97bde45c1414bbf11ea2701 from cluster 10.10.100.132:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

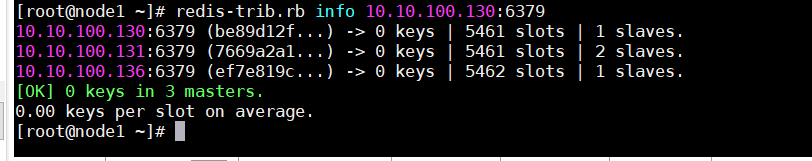

Verify that the node is deleted

Confirm that 10.10.100.132 has been removed from the Redis cluster.

**Note: after 10.10.100.132 is deleted, its former slave automatically becomes a slave of another master in the Redis cluster. That node can also be deleted if it is no longer needed.**

1.3.3 Cluster maintenance: migrating nodes dynamically

Suppose one of the master servers has a hardware problem and must be taken offline, so its data must be migrated to a new server. There are two migration schemes:

- Start Redis on the new server and add it to the cluster, then manually migrate the slots to the new Redis node. This scheme requires exporting and backing up the Redis data in advance, emptying Redis, and importing the data again after the migration is complete.

- Start Redis on the new server, add it to the cluster, and make it a slave of the master that is going offline. After data synchronization completes, stop that master manually; the master role then fails over to the slave automatically.

Both schemes can be carried out with the add-node, slot-migration, and delete-node steps described above. Here the second scheme is tested directly.

1.3.3.1 Add the node to the cluster

#First write some data on node 136 so the migration can be verified later

10.10.100.136:6379> SET key3 v3

OK

10.10.100.136:6379> SET key7 v7

OK

10.10.100.136:6379> KEYS *

1) "key7"

2) "key3"

Here, node 136 is migrated to node 132, which was just deleted from the cluster.

[root@node1 ~]# redis-trib.rb add-node 10.10.100.132:6379 10.10.100.130:6379

>>> Adding node 10.10.100.132:6379 to cluster 10.10.100.130:6379

>>> Performing Cluster Check (using node 10.10.100.130:6379)

M: be89d12fa71c151f495793d8faa8239ff0286796 10.10.100.130:6379

slots:1365-5460,12288-13652 (5461 slots) master

1 additional replica(s)

M: 7669a2a1b392fb71e2dc61b6e07d6e1b38cc7e14 10.10.100.131:6379

slots:6827-10922,15019-16383 (5461 slots) master

1 additional replica(s)

M: ef7e819c7002befad0821eaeb32c0f08b23dbc4f 10.10.100.136:6379

slots:0-1364,5461-6826,10923-12287,13653-15018 (5462 slots) master

1 additional replica(s)

S: 0b8713d80eb27b6e530aa4af95c2180937673b63 10.10.100.134:6379

slots: (0 slots) slave

replicates be89d12fa71c151f495793d8faa8239ff0286796

S: 1cf7cebb9d522c697315fd9bab16e56e9dcb26b0 10.10.100.133:6379

slots: (0 slots) slave

replicates 7669a2a1b392fb71e2dc61b6e07d6e1b38cc7e14

S: 55ab5a70e4450ef5143cf0cde072b27d4c539291 10.10.100.137:6379

slots: (0 slots) slave

replicates ef7e819c7002befad0821eaeb32c0f08b23dbc4f

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 10.10.100.132:6379 to make it join the cluster.

[OK] New node added correctly.

1.3.3.2 Make the node a slave of 136

[root@node1 ~]# redis-cli -h 10.10.100.132

10.10.100.132:6379> AUTH cwy

OK

10.10.100.132:6379> CLUSTER NODES

fb014d851ff2442d752d55471880fc4ac0245731 10.10.100.132:6379@16379 myself,master - 0 1630910247000 0 connected

be89d12fa71c151f495793d8faa8239ff0286796 10.10.100.130:6379@16379 master - 0 1630910245000 9 connected 1365-5460 12288-13652

7669a2a1b392fb71e2dc61b6e07d6e1b38cc7e14 10.10.100.131:6379@16379 master - 0 1630910246000 12 connected 6827-10922 15019-16383

ef7e819c7002befad0821eaeb32c0f08b23dbc4f 10.10.100.136:6379@16379 master - 0 1630910245602 11 connected 0-1364 5461-6826 10923-12287 13653-15018

0b8713d80eb27b6e530aa4af95c2180937673b63 10.10.100.134:6379@16379 slave be89d12fa71c151f495793d8faa8239ff0286796 0 1630910248000 9 connected

55ab5a70e4450ef5143cf0cde072b27d4c539291 10.10.100.137:6379@16379 slave ef7e819c7002befad0821eaeb32c0f08b23dbc4f 0 1630910248621 11 connected

1cf7cebb9d522c697315fd9bab16e56e9dcb26b0 10.10.100.133:6379@16379 slave 7669a2a1b392fb71e2dc61b6e07d6e1b38cc7e14 0 1630910249628 12 connected

#Make 132 a slave of 136

10.10.100.132:6379> CLUSTER REPLICATE ef7e819c7002befad0821eaeb32c0f08b23dbc4f

OK

#Set the master connection password

10.10.100.132:6379> CONFIG set masterauth cwy

OK

10.10.100.132:6379> INFO Replication

\# Replication

role:slave

master_host:10.10.100.136

master_port:6379

master_link_status:up

......

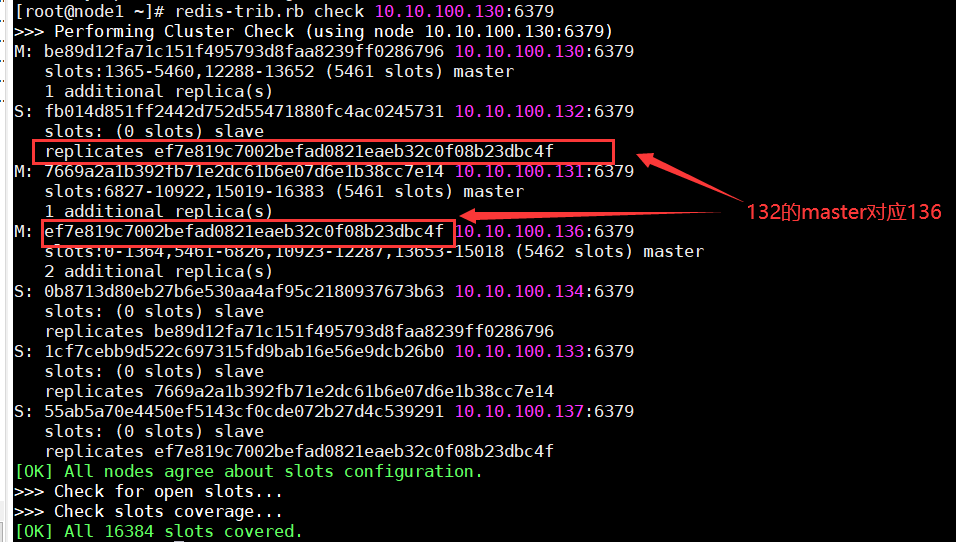

Running redis-trib.rb check 10.10.100.130:6379 shows that 132 is now a slave of 136.
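Before stopping 136, it is worth confirming that 132 really is a connected slave of 136. The Python sketch below checks the INFO replication fields shown above (role, master_host, master_link_status). It also checks master_sync_in_progress, an INFO field not visible in the truncated output here. What counts as "ready" is an assumption, and the helper names are hypothetical.

```python
def parse_info(text: str) -> dict[str, str]:
    """Parse INFO output into a dict; "# Section" headers and blank lines are skipped."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition(":")
        if sep:
            fields[key] = value
    return fields


def ready_to_stop_master(info: dict[str, str], master_host: str) -> bool:
    """Assumed rule: this node is a slave of master_host, linked up, not mid-sync."""
    return (
        info.get("role") == "slave"
        and info.get("master_host") == master_host
        and info.get("master_link_status") == "up"
        and info.get("master_sync_in_progress", "0") == "0"
    )


if __name__ == "__main__":
    sample = "# Replication\nrole:slave\nmaster_host:10.10.100.136\nmaster_port:6379\nmaster_link_status:up\n"
    print(ready_to_stop_master(parse_info(sample), "10.10.100.136"))  # True
```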

1.3.3.3 Stop node 136 manually

Manually stop Redis on 136:

[root@node7 ~]# systemctl stop redis

Checking the cluster shows that 136 is down and 132 has automatically been promoted to master.

1.3.3.4 Log in and verify the data

#Connect to Redis on 132

[root@node1 ~]# redis-cli -h 10.10.100.132

10.10.100.132:6379> AUTH cwy

OK

#The data written earlier is present

10.10.100.132:6379> KEYS *

1) "key3"

2) "key7"

10.10.100.132:6379> GET key3

"v3"

#This write fails because the key's slot is not on 132

10.10.100.132:6379> set key8 v8

(error) MOVED 13004 10.10.100.130:6379

#Writing a key whose slot is on 132 succeeds

10.10.100.132:6379> set key10 v10

OK

10.10.100.132:6379> KEYS *

1) "key3"

2) "key7"

3) "key10"
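The `MOVED 13004` reply follows directly from the slot layout. After the failover, 132 holds the ranges 136 used to own (0-1364, 5461-6826, 10923-12287, 13653-15018), and slot 13004 falls into 12288-13652 on 130. The Python sketch below performs that lookup with a binary search over sorted ranges. The layout is copied from the CLUSTER NODES output in 1.3.3.2, with 136's ranges reassigned to 132. The helper names are hypothetical.

```python
import bisect
from typing import Optional

# Layout after the failover (assumption: 132 inherited exactly 136's ranges).
LAYOUT_AFTER_FAILOVER = {
    "10.10.100.130:6379": "1365-5460,12288-13652",
    "10.10.100.131:6379": "6827-10922,15019-16383",
    "10.10.100.132:6379": "0-1364,5461-6826,10923-12287,13653-15018",
}


def build_index(layout: dict[str, str]) -> list[tuple[int, int, str]]:
    """Flatten {node: "a-b,c-d"} into (start, end, node) tuples sorted by start."""
    ranges = []
    for node, spec in layout.items():
        for part in spec.split(","):
            lo, _, hi = part.partition("-")
            ranges.append((int(lo), int(hi or lo), node))
    ranges.sort()
    return ranges


def owner_of(slot: int, ranges: list[tuple[int, int, str]]) -> Optional[str]:
    """Binary-search for the last range starting at or before `slot`, then check its end."""
    starts = [start for start, _, _ in ranges]  # rebuilt per call; fine for a sketch
    i = bisect.bisect_right(starts, slot) - 1
    if i >= 0 and ranges[i][0] <= slot <= ranges[i][1]:
        return ranges[i][2]
    return None  # slot not assigned to any node


if __name__ == "__main__":
    print(owner_of(13004, build_index(LAYOUT_AFTER_FAILOVER)))  # 10.10.100.130:6379
```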