Project background

The cloud platform project of a national ministry began building its cloud platform in mid-2020, with the plan to later migrate all VMware hosts to a domestic cloud platform. However, because of the large number of virtual machines, the business system survey could not be completed, and the project fell seriously behind schedule. We joined the project in June 2021 and used the automated survey tool "Prophet" to help users quickly complete the business system survey. The concept of "disaster-recovery-style, gradual" migration also dispelled the users' concerns about migrating, and we completed the migration of several business systems, involving more than 100 virtual machines, in less than one month. This article focuses on how to use the Prophet survey tool to automate the survey and organize the system information.

User environment

The business systems migrated for the customer this time run on a virtualization platform that is mainly VMware. The ESXi hosts mostly run versions 5.1, 5.5 and 6.5. There are 20 ESXi servers and more than 1,000 virtual machines in total, divided into a business zone and a DMZ.

Survey environment preparation

Software installation

1. Prepare the operating environment

Create a virtual machine on ESXi with the following configuration:

- Operating system: CentOS 7.3
- Specification: 2 CPUs, 2 GB memory, 40 GB disk
- Network: able to reach vCenter and ESXi on ports 443 and 902 (TCP)
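Before installing anything, it can help to confirm the network requirement from the new virtual machine. The following is a minimal sketch, not part of Prophet: the function names and the example host names are hypothetical, and it assumes bash and the coreutils `timeout` command are available, as they are on a default CentOS 7 installation.

```shell
# check_port HOST PORT: return 0 if a TCP connection succeeds within 3 seconds.
# Uses bash's /dev/tcp redirection, so bash is invoked explicitly.
check_port() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# check_targets HOST...: report TCP 443 and 902 for every host given.
check_targets() {
    for host in "$@"; do
        for port in 443 902; do
            if check_port "$host" "$port"; then
                echo "$host:$port reachable"
            else
                echo "$host:$port NOT reachable"
            fi
        done
    done
}

# Example (hypothetical names, replace with your vCenter and ESXi addresses):
# check_targets vcenter.example.local esxi01.example.local
```

Any line reported as NOT reachable points to a firewall or routing problem that is worth fixing before the collection step.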

2. Install Docker

Log in to the system as the root user and download the Docker static binaries:

wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.15.tgz

Configure Docker

setenforce 0
tar -zxvf docker-19.03.15.tgz
mv docker/* /usr/bin/ && rm -rf docker
# The quoted 'EOF' keeps the shell from expanding $MAINPID while writing the file
cat > /etc/systemd/system/docker.service <<'EOF'
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
# the default is not to use systemd for cgroups because the delegate issues still
# exists and systemd currently does not support the cgroup feature set required
# for containers run by docker
# NOTE: tcp://0.0.0.0:2375 exposes the Docker API without TLS; keep it only on a trusted network
ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock
ExecReload=/bin/kill -s HUP $MAINPID
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
# Uncomment TasksMax if your systemd version supports it.
# Only systemd 226 and above support this option.
#TasksMax=infinity
TimeoutStartSec=0
# set delegate yes so that systemd does not reset the cgroups of docker containers
Delegate=yes
# kill only the docker process, not all processes in the cgroup
KillMode=process
# restart the docker process if it exits prematurely
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF

Start the Docker service

systemctl daemon-reload
systemctl start docker

3. Install the Prophet survey tool

docker pull registry.cn-beijing.aliyuncs.com/oneprocloud-opensource/cloud-discovery-prophet:latest

4. Run the Prophet container

We map the host's $HOME directory (/root when logged in as root) to /root inside the container, so files written there are visible on both sides.

docker run \
  --net host \
  --privileged=true \
  --name prophet \
  -v $HOME:/root \
  -dit \
  registry.cn-beijing.aliyuncs.com/oneprocloud-opensource/cloud-discovery-prophet:latest

System survey

System information collection

1. Enter the "Prophet" container

docker exec -ti prophet bash

2. Scan active hosts in the whole network

Scan the hosts in the specified network address range and record them. The result serves as input for the more detailed information collection that follows. When the scan completes, a CSV file is generated automatically under the specified output path, /root.

prophet-cli scan --host 10.1.0.43-62 --output-path /root

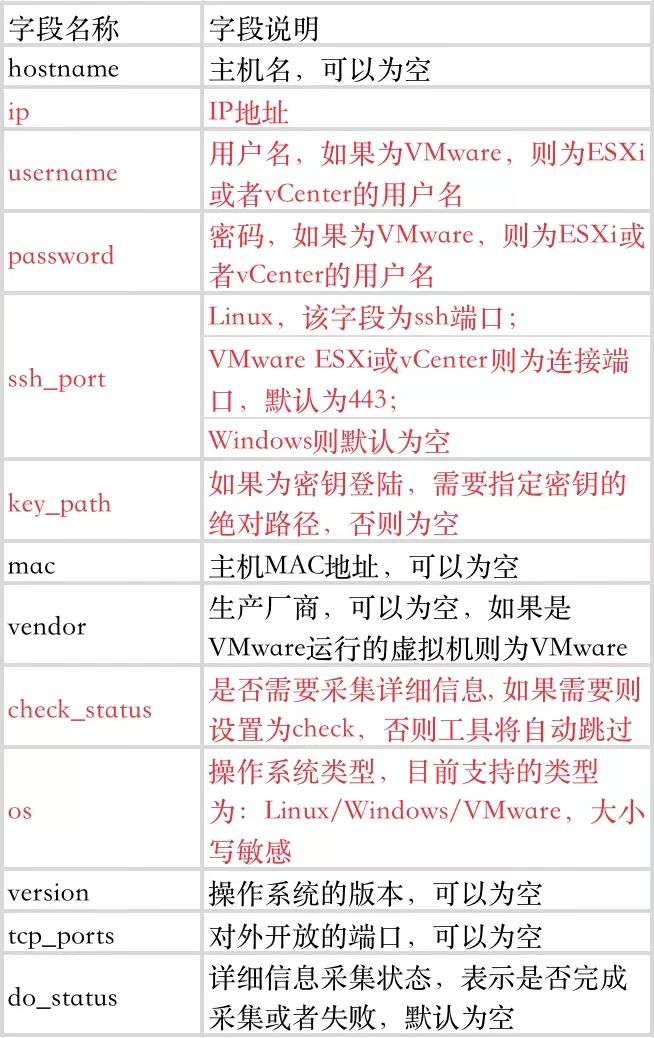

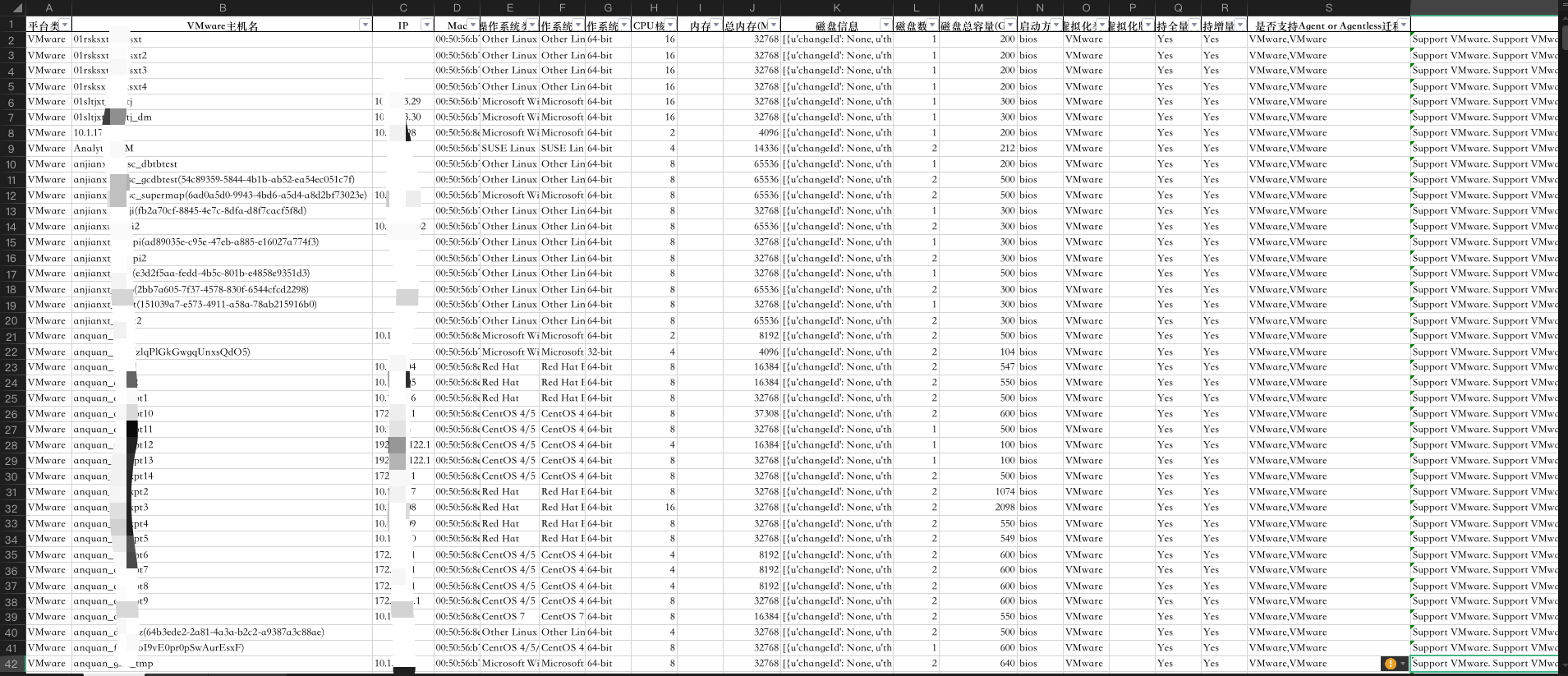
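To cross-check the scan result against the addresses you expected to cover, the range notation can be expanded into a plain list. This is a small sketch with a hypothetical helper name, and it assumes the range applies to the last octet only, which is how the example 10.1.0.43-62 reads.

```shell
# expand_range A.B.C.X-Y: print every address from A.B.C.X to A.B.C.Y.
# Assumption: the range covers the last octet only.
expand_range() {
    prefix=${1%.*}      # network part, e.g. "10.1.0"
    last=${1##*.}       # last octet range, e.g. "43-62"
    start=${last%-*}
    end=${last#*-}
    i=$start
    while [ "$i" -le "$end" ]; do
        echo "$prefix.$i"
        i=$((i + 1))
    done
}

# Count the addresses covered by the scan range used above
expand_range 10.1.0.43-62 | wc -l
```

For the range used in this article the list contains 20 addresses, from 10.1.0.43 to 10.1.0.62.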

3. Fill in user name / password

Download the generated CSV file to your local machine, open it in Excel, and fill in the user name and password for each host.

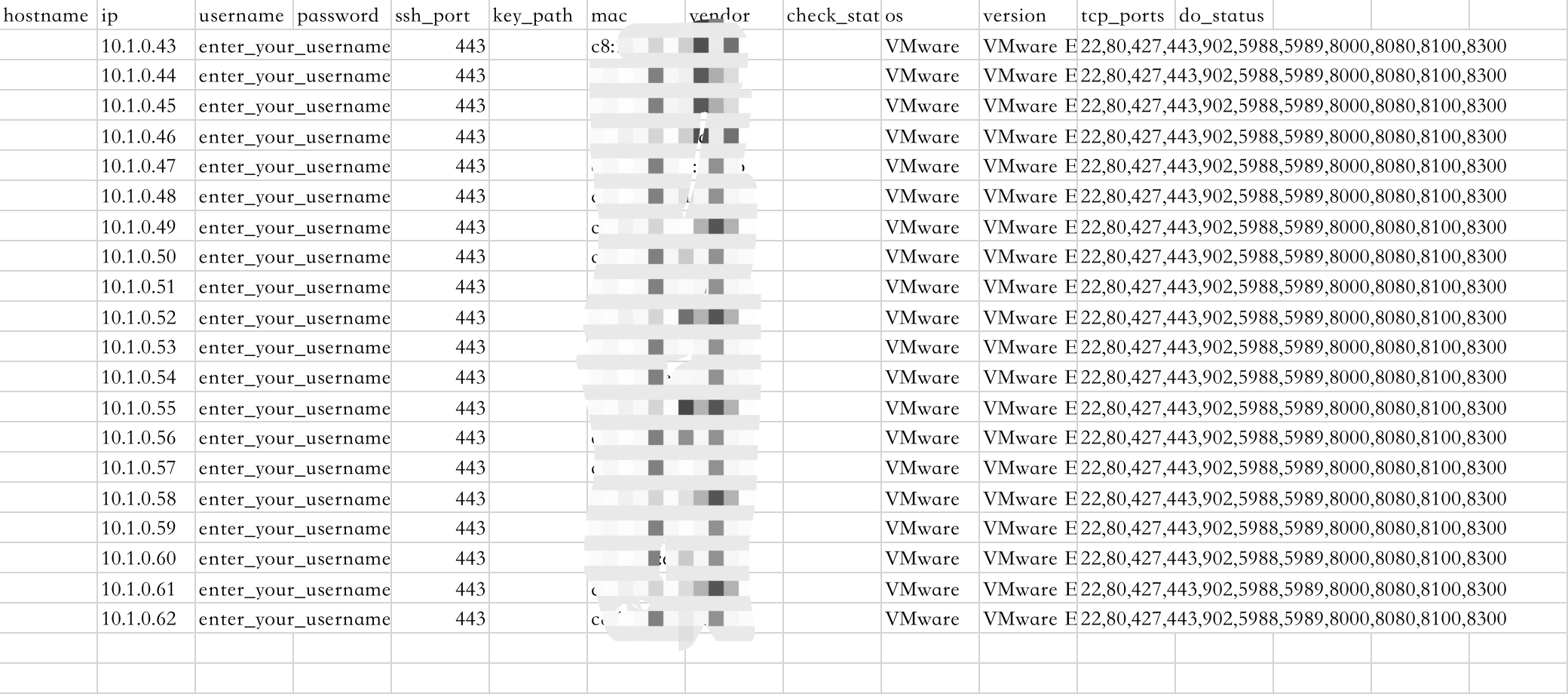

CSV structure (the fields marked in red are required)
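When many hosts share the same account, filling the column by script can be quicker than editing in Excel. The sketch below is only an illustration: `fill_column` is a hypothetical helper, the column name must be copied from the header row of your own file, and it assumes a simple comma-separated file without quoted commas.

```shell
# fill_column FILE COLUMN VALUE: print FILE with VALUE written into COLUMN
# for every data row where that column is still empty.
# Assumption: plain comma-separated data with a header row, no quoted commas.
fill_column() {
    awk -F',' -v OFS=',' -v col="$2" -v val="$3" '
        NR == 1 {
            for (i = 1; i <= NF; i++) if ($i == col) idx = i
            if (!idx) { print "column not found: " col > "/dev/stderr"; exit 1 }
            print; next
        }
        { if ($idx == "") $idx = val; print }
    ' "$1"
}

# Example (the column name "username" is an assumption, check your header row):
# fill_column /root/scan_hosts.csv username root > /root/scan_hosts.filled.csv
```

Rows that already contain a value are left unchanged, so host-specific accounts entered by hand are preserved.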

4. Run the collection

After you have filled in scan_hosts.csv and transferred it back to the /root directory on the host, you can collect the detailed information as follows:

prophet-cli collect --host-file /root/scan_hosts.csv --output-path /root/

When the command finishes, it prints a summary. If collection failed for any host, look up the cause of the error in the log.

===========Summary==========
Total 73 host(s) in list, Need to check 2 host(s), success 0 hosts, failed 2 hosts.
Failed hosts: ['[WINDOWS]xxx', '[LINUX]xxx']
============================

After execution, a host_collection_xxx.zip file is generated in the output path. This is the packaged result of the collection.

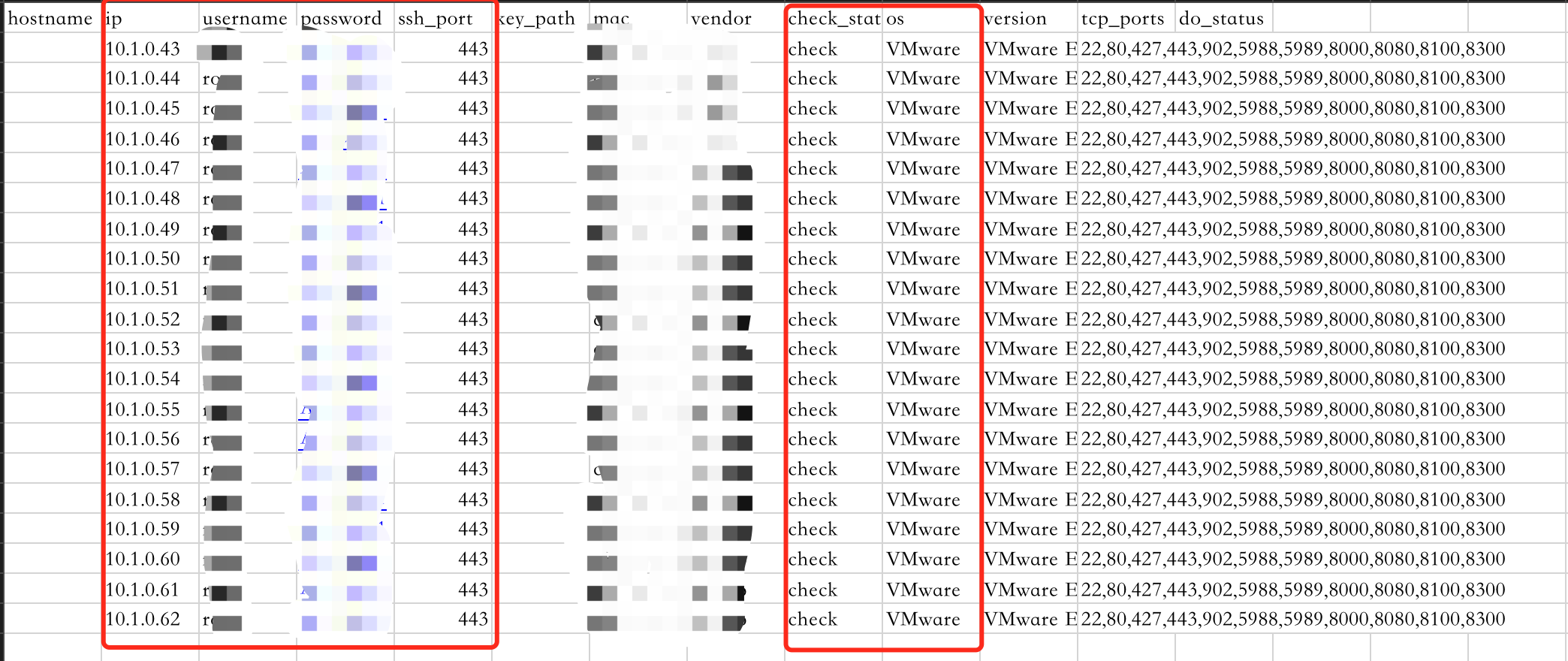
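With dozens of hosts, it is convenient to pull the failed entries out of the summary as a plain list for follow-up. The sketch below assumes the summary format shown above and that the output of the collect command was saved to a file. The helper name and the log path are hypothetical.

```shell
# failed_hosts: read the collect output on stdin and print one failed host per line.
# Assumption: entries look like '[WINDOWS]name' or '[LINUX]name' after "Failed hosts:".
failed_hosts() {
    sed -n '/Failed hosts:/,$p' | grep -o "'\[[A-Z]*][^']*'" | tr -d "'"
}

# Example (the log path is hypothetical):
# prophet-cli collect --host-file /root/scan_hosts.csv --output-path /root/ 2>&1 | tee /root/collect.log
# failed_hosts < /root/collect.log
```

The resulting list can be used to recheck credentials for those hosts in the CSV before running the collection again.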

System information analysis

Analyze the package collected in the previous step:

prophet-cli report \
  --package-file /root/host_collection_20210804091400.zip \
  --output-path /root/

The generated analysis_report.csv is the analysis result, which can be downloaded to your local machine for further analysis. At this point, all the information collection work is complete.
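A quick tally of the report, for example by operating system, can be produced on the host before downloading the file. This is a sketch only: `count_by` is a hypothetical helper, the column name has to be taken from the header row of your own analysis_report.csv, and fields are assumed not to contain quoted commas.

```shell
# count_by FILE COLUMN: print "count value" for each distinct value of COLUMN,
# most frequent first. Prints nothing if the column is not in the header row.
count_by() {
    awk -F',' -v col="$2" '
        NR == 1 { for (i = 1; i <= NF; i++) if ($i == col) idx = i; next }
        idx     { n[$idx]++ }
        END     { for (v in n) print n[v], v }
    ' "$1" | sort -rn
}

# Example (the column name "os" is an assumption, check your header row):
# count_by /root/analysis_report.csv os
```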

Summary

In this survey, the scripts ran for less than 30 minutes in total and covered 1,049 virtual machines: 582 Windows and 467 Linux, with 7,734 CPU cores, 19 TB of memory and 60 TB of storage capacity.

In large-scale system surveys, automation is essential. Few tools currently exist for migration surveys, so Prophet is a strong guarantee for the accuracy of the survey results.

Contact us

Prophet is now open source. It is hosted on GitHub and mirrored to Gitee in China.

- GitHub address: https://github.com/Cloud-Discovery/prophet
- Gitee address: https://gitee.com/cloud-discovery/prophet

We welcome reports of problems and new requirements that come up during use. You can submit an issue on GitHub or join our developer discussion group to build Prophet with us.

"Know Prophet"

This tool set for automated collection and analysis currently supports physical machines and VMware environments, and will be extended to cloud platform resources, storage, network and other resources in the future.