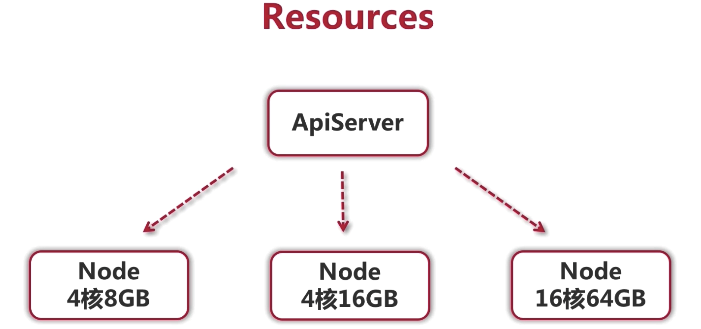

Kubernetes (k8s) Resource Management: Resources (Part 1)

A First Look

Hardware is the foundation of everything. Like most technologies, Kubernetes needs:

- CPU

- Memory

- GPU

- Persistent Storage

GPUs are rarely used here, so they are not covered further.

Consider the following scenario:

A cluster has many nodes. The kubelet on each node reports that node's information, including memory and CPU. Now you need to run an application on the cluster that takes up 20G of memory. It has to be scheduled onto a machine that can satisfy this requirement, so Kubernetes first gathers the node information and then picks a suitable node. If a bug then makes the application consume memory uncontrollably, resource limits are there to contain it.

Core Design

Two parameters can be set for both CPU and memory:

- Requests

The amount of resources the container asks for and is fully guaranteed to receive.

Purpose: this value is given to the scheduler, which uses it in its scheduling policy calculations to find the most suitable node.

- Limits

The upper bound on the resources a container can use.

When resources run short and containers compete for them, this value is used to decide what happens next, including which application to kill. It is the resource-limiting policy.
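The role of requests in scheduling can be sketched in a few lines of Python. This is a simplified illustration, not the real scheduler: the `Node` class, the `feasible_nodes` function, and the node sizes are all hypothetical, and only memory is considered. It shows that a node is a candidate only if its allocatable memory minus the requests already placed on it covers the new request. Limits play no part in this check.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_mem_mi: int  # memory the node can hand out, in MiB
    requested_mem_mi: int    # sum of memory requests already placed on it


def feasible_nodes(nodes, request_mem_mi):
    """Return the names of nodes whose unrequested memory covers the request."""
    return [
        n.name
        for n in nodes
        if n.allocatable_mem_mi - n.requested_mem_mi >= request_mem_mi
    ]


# Hypothetical cluster: three nodes with 32 GiB allocatable each.
nodes = [
    Node("node-a", 32 * 1024, 20 * 1024),  # 12 GiB unrequested
    Node("node-b", 32 * 1024, 8 * 1024),   # 24 GiB unrequested
    Node("node-c", 32 * 1024, 30 * 1024),  # 2 GiB unrequested
]

# The 20 GiB application from the scenario only fits on node-b.
print(feasible_nodes(nodes, 20 * 1024))  # ['node-b']
```

The real scheduler applies the same kind of check to CPU and other resources, and then scores the remaining candidates to pick one.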

How to use

Here we set resource constraints for pods. Add the resources keyword to the YAML configuration file to set the requests and limits parameters.

The example below requests 100 MiB of memory and 100m of CPU, and caps usage at 100 MiB of memory and 200m of CPU. Whether the machine has 10 or 20 cores available, the container can use at most 0.2 cores.

    resources:
      requests:
        memory: 100Mi
        cpu: 100m
      limits:
        memory: 100Mi
        cpu: 200m

Always include a unit when setting these values; a memory value without one is interpreted as bytes.

Units

- Memory

Memory is typically specified in Mi or Gi.

- CPU

Use a lowercase m (millicores). A value with no unit counts whole CPUs, so cpu: 100 means 100 CPUs.

1 CPU core = 1000m, so 100m = 0.1 core.
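The unit rules above can be made concrete with a small converter. This is a minimal sketch: the function names are made up for illustration, and only the suffixes mentioned in this article (m for CPU; Ki, Mi, Gi for memory) are handled, while Kubernetes accepts more.

```python
def cpu_to_millicores(value: str) -> int:
    """Convert a CPU quantity such as '100m' or '2' to millicores."""
    if value.endswith("m"):
        return int(value[:-1])
    # No suffix: the number counts whole CPUs (1 CPU = 1000m).
    return round(float(value) * 1000)


MEMORY_SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def memory_to_bytes(value: str) -> int:
    """Convert a memory quantity such as '100Mi' to bytes."""
    for suffix, factor in MEMORY_SUFFIXES.items():
        if value.endswith(suffix):
            return int(value[: -len(suffix)]) * factor
    # No suffix: the number is taken as plain bytes.
    return int(value)


print(cpu_to_millicores("100m"))  # 100
print(cpu_to_millicores("100"))   # 100000, i.e. 100 whole CPUs
print(memory_to_bytes("100Mi"))   # 104857600
print(memory_to_bytes("100"))     # 100, i.e. only 100 bytes
```

The last two lines of each pair show why a forgotten unit is dangerous: cpu: 100 asks for 100 CPUs, and memory: 100 asks for 100 bytes.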

To test this with a demo, create a web project with the following configuration:

web-dev.yaml

    #deploy
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-demo
      namespace: dev
    spec:
      selector:
        matchLabels:
          app: web-demo
      replicas: 1
      template:
        metadata:
          labels:
            app: web-demo
        spec:
          containers:
          - name: web-demo
            image: hub.zhang.com/kubernetes/demo:2020011512381579063123
            ports:
            - containerPort: 8080
            resources:
              requests:
                memory: 100Mi
                cpu: 100m
              limits:
                memory: 100Mi
                cpu: 200m
    ---
    #service
    apiVersion: v1
    kind: Service
    metadata:
      name: web-demo
      namespace: dev
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 8080
      selector:
        app: web-demo
      type: ClusterIP
    ---
    #ingress
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: web-demo
      namespace: dev
    spec:
      rules:
      - host: web.demo.com
        http:
          paths:
          - path: /
            backend:
              serviceName: web-demo
              servicePort: 80

Create a project:

[root@master-001 ~]# kubectl apply -f web-dev.yaml

View usage

    [root@master-001 ~]# kubectl describe node node-001
    Name:               node-001
    Roles:              <none>
    Labels:             app=ingress
                        beta.kubernetes.io/arch=amd64
                        beta.kubernetes.io/os=linux
                        kubernetes.io/arch=amd64
                        kubernetes.io/hostname=node-001
                        kubernetes.io/os=linux
    Annotations:        kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock
                        node.alpha.kubernetes.io/ttl: 0
                        projectcalico.org/IPv4Address: 172.16.126.137/24
                        projectcalico.org/IPv4IPIPTunnelAddr: 192.168.93.64
                        volumes.kubernetes.io/controller-managed-attach-detach: true
    CreationTimestamp:  Thu, 02 Jan 2020 13:53:26 +0800
    Taints:             <none>
    Unschedulable:      false
    Conditions:
      Type                 Status  LastHeartbeatTime                 LastTransitionTime                Reason                       Message
      ----                 ------  -----------------                 ------------------                ------                       -------
      NetworkUnavailable   False   Thu, 16 Jan 2020 11:00:52 +0800   Thu, 16 Jan 2020 11:00:52 +0800   CalicoIsUp                   Calico is running on this node
      MemoryPressure       False   Thu, 16 Jan 2020 17:30:45 +0800   Sat, 04 Jan 2020 12:11:53 +0800   KubeletHasSufficientMemory   kubelet has sufficient memory available
      DiskPressure         False   Thu, 16 Jan 2020 17:30:45 +0800   Sat, 04 Jan 2020 12:11:53 +0800   KubeletHasNoDiskPressure     kubelet has no disk pressure
      PIDPressure          False   Thu, 16 Jan 2020 17:30:45 +0800   Sat, 04 Jan 2020 12:11:53 +0800   KubeletHasSufficientPID      kubelet has sufficient PID available
      Ready                True    Thu, 16 Jan 2020 17:30:45 +0800   Sat, 04 Jan 2020 12:11:53 +0800   KubeletReady                 kubelet is posting ready status
    Addresses:
      InternalIP:  172.16.126.137
      Hostname:    node-001
    Capacity:
      cpu:                2
      ephemeral-storage:  17394Mi
      hugepages-1Gi:      0
      hugepages-2Mi:      0
      memory:             1863104Ki
      pods:               110
    Allocatable:
      cpu:                2
      ephemeral-storage:  16415037823
      hugepages-1Gi:      0
      hugepages-2Mi:      0
      memory:             1760704Ki
      pods:               110
    System Info:
      Machine ID:                 ee5a9a60acff444b920cec37aadf35d0
      System UUID:                A5074D56-3849-E5CC-C84D-898BBFD19AFD
      Boot ID:                    44c86e13-aea4-4a7e-8f5e-3e5434357035
      Kernel Version:             3.10.0-1062.el7.x86_64
      OS Image:                   CentOS Linux 7 (Core)
      Operating System:           linux
      Architecture:               amd64
      Container Runtime Version:  docker://19.3.5
      Kubelet Version:            v1.16.3
      Kube-Proxy Version:         v1.16.3
    PodCIDR:                      10.244.1.0/24
    PodCIDRs:                     10.244.1.0/24
    Non-terminated Pods:          (9 in total)
      Namespace      Name                                       CPU Requests  CPU Limits  Memory Requests  Memory Limits  AGE
      ---------      ----                                       ------------  ----------  ---------------  -------------  ---
      default        rntibp-deployment-84d77f8f78-f99pp         0 (0%)        0 (0%)      0 (0%)           0 (0%)         12d
      default        tomcat-demo-5f4b587679-7mpz9               0 (0%)        0 (0%)      0 (0%)           0 (0%)         13d
      dev            web-demo-74d747dbb5-z85fj                  100m (5%)     200m (10%)  100Mi (5%)       100Mi (5%)     3m1s   # Here you can see the memory of the project you just deployed
      ingress-nginx  nginx-ingress-controller-646bdb48f6-nrj59  100m (5%)     0 (0%)      90Mi (5%)        0 (0%)         13d
      kube-system    calico-kube-controllers-648f4868b8-q7tvb   0 (0%)        0 (0%)      0 (0%)           0 (0%)         14d
      kube-system    calico-node-6sljh                          250m (12%)    0 (0%)      0 (0%)           0 (0%)         14d
      kube-system    coredns-58cc8c89f4-lhwp2                   100m (5%)     0 (0%)      70Mi (4%)        170Mi (9%)     14d
      kube-system    coredns-58cc8c89f4-mq4jm                   100m (5%)     0 (0%)      70Mi (4%)        170Mi (9%)     14d
      kube-system    kube-proxy-s25k5                           0 (0%)        0 (0%)      0 (0%)           0 (0%)         14d
    Allocated resources:
      (Total limits may be over 100 percent, i.e., overcommitted.)
      Resource           Requests     Limits
      --------           --------     ------
      cpu                650m (32%)   200m (10%)    # 650m cpu usage
      memory             330Mi (19%)  440Mi (25%)   # Memory 330Mi
      ephemeral-storage  0 (0%)       0 (0%)
    Events:              <none>
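The percentages in this output can be reproduced from the node's Allocatable values (cpu: 2, memory: 1760704Ki). The sketch below is only a cross-check of the arithmetic: the helper name `percent_of` is made up, and the truncation to a whole percent is inferred from the numbers shown here rather than taken from the kubectl source.

```python
ALLOCATABLE_CPU_M = 2 * 1000   # "cpu: 2" expressed in millicores
ALLOCATABLE_MEM_KI = 1760704   # "memory: 1760704Ki"


def percent_of(amount: int, allocatable: int) -> int:
    """Share of the allocatable amount as a whole percent, truncated."""
    return amount * 100 // allocatable


def mi_to_ki(mi: int) -> int:
    """Convert MiB to KiB so memory values share one unit."""
    return mi * 1024


# web-demo pod: 100m / 200m CPU, 100Mi memory
print(percent_of(100, ALLOCATABLE_CPU_M))             # 5
print(percent_of(200, ALLOCATABLE_CPU_M))             # 10
print(percent_of(mi_to_ki(100), ALLOCATABLE_MEM_KI))  # 5

# Node totals: 650m CPU requested, 330Mi / 440Mi memory
print(percent_of(650, ALLOCATABLE_CPU_M))             # 32
print(percent_of(mi_to_ki(330), ALLOCATABLE_MEM_KI))  # 19
print(percent_of(mi_to_ki(440), ALLOCATABLE_MEM_KI))  # 25
```

Note that the percentages are relative to Allocatable, not Capacity, which is why 100Mi on a node with about 1.8 GiB of memory shows as 5%.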

Kubernetes resource limits are built on Docker's isolation mechanisms, so a new container has to be started before the corresponding isolation settings are applied.

Let's go to the node and view it with the docker command

    [root@node-001 ~]# docker ps | grep web-demo
    7945a3283097   c2c8abe7e363   "sh /usr/local/tomca..."   12 minutes ago   Up 12 minutes   k8s_web-demo_web-demo-74d747dbb5-z85fj_dev_08cc53f8-ea1a-4ed5-a3bb-9f6727f826ee_0
    [root@node-001 ~]# docker inspect 7945a3283097

View the application's parameter settings.

The other parameters are omitted here. We only look at the four values that were passed to Docker: the memory and CPU requests, and the memory and CPU limits.

CpuShares: the CPU request in the YAML resources section is 100m (0.1 core). Multiplying 0.1 by 1024 gives 102.4, and the value 102 is passed to Docker. For Docker this is a relative weight: it determines the proportion of CPU each container receives when containers compete for it. For example, if two containers request 1 and 2 CPUs, their CpuShares values are 1024 and 2048, and under contention Docker tries to split CPU time between them in a 1:2 ratio. The value is purely relative and only matters when there is competition for CPU.

Memory: 104857600 / 1024 / 1024 = 100 MiB, the memory limit set in the YAML configuration (here the same 100Mi as the request). Docker runs the container with its memory capped at this value.
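The CpuShares arithmetic described above can be checked with a short sketch. The function names are made up for illustration, and the integer truncation is an assumption that matches the value 102 seen in the docker inspect output; the second function shows how the relative weights turn into CPU fractions when containers compete.

```python
from fractions import Fraction

SHARES_PER_CPU = 1024
MILLICORES_PER_CPU = 1000


def cpu_shares(request_millicores: int) -> int:
    """Convert a CPU request in millicores to a CpuShares weight (truncated)."""
    return request_millicores * SHARES_PER_CPU // MILLICORES_PER_CPU


def contended_fraction(own_shares: int, all_shares: list) -> Fraction:
    """Fraction of CPU a container gets when every container is busy."""
    return Fraction(own_shares, sum(all_shares))


print(cpu_shares(100))   # 102, from 0.1 * 1024 = 102.4
print(cpu_shares(1000))  # 1024
print(cpu_shares(2000))  # 2048

# Two containers requesting 1 and 2 CPUs split a contended CPU 1:2.
weights = [cpu_shares(1000), cpu_shares(2000)]
print(contended_fraction(weights[0], weights))  # 1/3
print(contended_fraction(weights[1], weights))  # 2/3
```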

"CpuShares": 102,

"Memory": 104857600,

CpuPeriod: Docker's default value, 100,000 microseconds, which is 100 milliseconds.

CpuQuota: the CPU limit set in the YAML configuration is 200m = 0.2 cores, and 0.2 × 100000 = 20000.

Together, these two values mean that in every 100-millisecond period the container may use at most 20,000 microseconds (20 milliseconds) of CPU time.
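The relationship between the CPU limit, CpuPeriod, and CpuQuota is simple enough to verify in code. This is a minimal sketch of the arithmetic only, with made-up function names; it assumes the default period of 100,000 microseconds mentioned above.

```python
DEFAULT_CPU_PERIOD_US = 100_000  # default CpuPeriod, in microseconds


def cpu_quota(limit_millicores: int, period_us: int = DEFAULT_CPU_PERIOD_US) -> int:
    """CPU time, in microseconds, the container may use in each period."""
    return limit_millicores * period_us // 1000


def cores_from_quota(quota_us: int, period_us: int = DEFAULT_CPU_PERIOD_US) -> float:
    """Inverse direction: the number of cores a quota corresponds to."""
    return quota_us / period_us


print(cpu_quota(200))           # 20000, the CpuQuota for a 200m limit
print(cores_from_quota(20000))  # 0.2
print(cpu_quota(2000))          # 200000, a 2-core limit exceeds one period
```

A quota larger than the period is normal: it simply means the container may run on more than one core at the same time.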

"CpuPeriod": 100000,

"CpuQuota": 20000,

Limit test

What happens if memory is too small?

Set a smaller memory value in the demo container's resources and run a very memory-hungry script inside the container. The steps are not reproduced here.

Result: the memory-hungry script's process is killed shortly after it starts, but the container does not stop. This shows that Kubernetes kills the process that consumes the most memory and does not necessarily restart the container.

What if limits are raised beyond the machine's memory/CPU?

Change the memory and CPU limits in the YAML to values beyond the current machine's capacity.

Result: the pod still starts normally. Scheduling does not depend on the limits settings.

What if requests are raised beyond the machine's memory/CPU?

    resources:
      requests:
        memory: 20000Mi #20G
        cpu: 10000m
      limits:
        memory: 100Gi
        cpu: 20000m

Then start

Result: the container cannot start, because no node satisfies the requested memory.

Change the memory request to 10 GiB and start three replicas.

Result: only two replicas start. Each replica must have its full 10 GiB reserved, and the remaining memory is not enough for a third.
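Why only two replicas start can be illustrated with a small calculation. The article does not give the node sizes used in this test, so the numbers below are hypothetical, as is the function name. The point of the sketch is that requests are reserved per node: leftover memory on different nodes cannot be combined to host one more replica.

```python
def max_replicas(free_mem_gi_per_node: list, request_gi: int) -> int:
    """Count how many replicas fit when each needs request_gi on a single node."""
    return sum(free // request_gi for free in free_mem_gi_per_node)


# Hypothetical cluster: unrequested memory per node, in GiB.
free_memory = [14, 14, 8]

print(sum(free_memory))               # 36 GiB free in total, more than 3 x 10
print(max_replicas(free_memory, 10))  # 2, since no node has room for a second replica
```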

A container that exceeds its CPU limit is not killed, however. CPU is a compressible resource and memory is not; that is the difference.