Resource scheduling (nodeSelector, nodeAffinity, Taints, Tolerations)

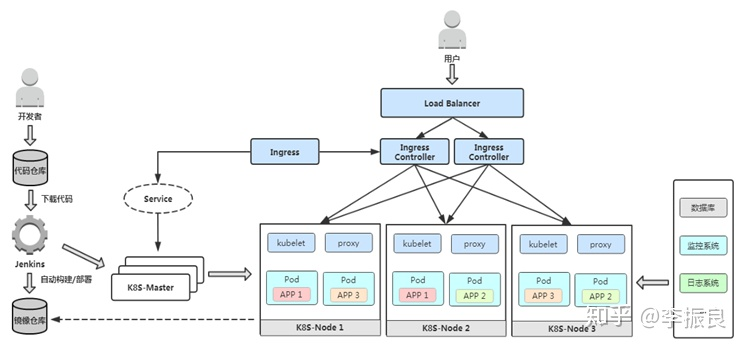

Kubernetes uses a master-worker distributed architecture consisting mainly of Master Nodes and Worker Nodes, together with the client command-line tool kubectl and other add-ons.

- Master Node: the control node, responsible for scheduling and managing the cluster; a Master Node consists of the API Server, Scheduler, Cluster State Store (etcd), and Controller Manager.

- Worker Node: the node that does the actual work by running the containers of business applications; a Worker Node contains kubelet, kube-proxy, and a Container Runtime.

- kubectl: used to interact with the API Server from the command line and operate on Kubernetes, for example to add, delete, change, and query the resources in the cluster.

- Add-on: an extension to the core functionality of Kubernetes, for example adding networking and network policy capabilities.

- Replication: scales the number of Pod copies (replicas)

- Endpoint: used to manage network requests

- Scheduler: schedules Pods onto Nodes

The overall architecture of Kubernetes is as follows:

Scheduling process for Kubernetes:

- Users submit a request to create Pods, either through the REST API of the API Server or through the kubectl command-line tool; both JSON and YAML formats are supported.

- The API Server handles the request and stores the Pod data in etcd.

- The Scheduler notices the new Pod through the watch mechanism of the API Server and tries to bind a Node to it.

- Filter hosts: the scheduler applies a set of rules to filter out hosts that do not meet the requirements. For example, if the Pod specifies the resources it needs, hosts without enough free resources are filtered out.

- Score hosts: the hosts that passed the filtering step are scored. During this stage the scheduler applies some overall optimization strategies, such as spreading the replicas of a Replication Controller across different hosts or preferring the least loaded host.

- Select host: the host with the highest score is selected, the Pod is bound to it, and the result is stored in etcd.

- kubelet creates the Pod based on the scheduling result: after a successful binding, the scheduler calls the API Server to create a bound Pod object in etcd, which describes the Pods assigned to a worker node. The kubelet running on each worker node periodically synchronizes this bound Pod information through the API Server, and once it finds a bound Pod that should be running on its node but has not been started yet, it calls the Docker API to create and start the containers in the Pod.
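The filter, score, and select steps above can be sketched in a few lines of Python. This is only a toy model under stated assumptions: the `Node` and `PodRequest` classes, their fields, and the "most free CPU wins" score are hypothetical illustrations, not the real scheduler's data structures or scoring plugins.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu: float  # free CPU cores (hypothetical field)
    free_mem: int    # free memory in MiB (hypothetical field)


@dataclass
class PodRequest:
    name: str
    cpu: float  # requested CPU cores
    mem: int    # requested memory in MiB


def filter_nodes(pod, nodes):
    """Filtering step: keep only nodes with enough free resources."""
    return [n for n in nodes if n.free_cpu >= pod.cpu and n.free_mem >= pod.mem]


def score_node(pod, node):
    """Scoring step: toy 'least loaded' score, more CPU left over is better."""
    return node.free_cpu - pod.cpu


def schedule(pod, nodes):
    """Return the name of the selected node, or None if no node fits."""
    candidates = filter_nodes(pod, nodes)
    if not candidates:
        return None  # in a real cluster the Pod would stay Pending
    best = max(candidates, key=lambda n: score_node(pod, n))
    return best.name
```

Calling `schedule(PodRequest("test", 0.5, 512), nodes)` with a list of `Node` objects returns the name of the node with the most spare CPU among those that can fit the request. The real scheduler combines many filters and weighted scores, so treat this only as a picture of the control flow.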

1. nodeSelector

nodeSelector is the simplest form of node selection constraint. It is a field of the Pod spec (pod.spec.nodeSelector).

The labels of a given node can be viewed with the --show-labels option:

    [root@master ~]# kubectl get node node1 --show-labels
    NAME    STATUS   ROLES    AGE     VERSION   LABELS
    node1   Ready    <none>   4d18h   v1.20.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1,kubernetes.io/os=linux

If no additional labels have been added to a node, only the default labels shown above are present. We can add a label to a given node with the kubectl label node command (for example, kubectl label node node1 disktype=ssd) and then view the labels again:

    [root@master mainfest]# kubectl get node node1 --show-labels
    NAME    STATUS   ROLES    AGE     VERSION   LABELS
    node1   Ready    <none>   4d18h   v1.20.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1,kubernetes.io/os=linux

You can also delete a label with kubectl label node by appending a minus sign to the label key:

    [root@master mainfest]# kubectl label node node1 disktype-
    node/node1 labeled
    [root@master haproxy]# kubectl get node node1 --show-labels
    NAME    STATUS   ROLES    AGE     VERSION   LABELS
    node1   Ready    <none>   4d18h   v1.20.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1,kubernetes.io/os=linux

Label the node, then create a test pod that uses the nodeSelector option to bind it to that node:

    [root@master mainfest]# kubectl label node node1.example.com disktype=ssd
    node/node1.example.com labeled
    [root@master mainfest]# kubectl get node node1.example.com --show-labels
    NAME                STATUS   ROLES    AGE     VERSION   LABELS
    node1.example.com   Ready    <none>   4d18h   v1.20.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1.example.com,kubernetes.io/os=linux
    [root@master mainfest]# vi test.yml
    [root@master mainfest]# cat test.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: test
      labels:
        env: test
    spec:
      containers:
      - name: test
        image: nginx
        imagePullPolicy: IfNotPresent
      nodeSelector:
        disktype: ssd

    //View the node the pod was scheduled to
    NAME   READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
    test   1/1     Running   0          6s    10.244.1.92   node1   <none>           <none>
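The matching rule behind nodeSelector is simple enough to sketch: a node qualifies only if every key/value pair in the selector appears among the node's labels. The helper name `matches_node_selector` is a hypothetical illustration, not a Kubernetes API.

```python
def matches_node_selector(node_selector, node_labels):
    """Return True if every selector key/value pair is present in the node labels."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


# Labels loosely modeled on the node1 output above.
node1_labels = {
    "kubernetes.io/hostname": "node1",
    "kubernetes.io/os": "linux",
    "disktype": "ssd",
}

print(matches_node_selector({"disktype": "ssd"}, node1_labels))
print(matches_node_selector({"disktype": "hdd"}, node1_labels))
```

An empty selector matches every node, which corresponds to a pod that sets no nodeSelector at all.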

2. nodeAffinity

nodeAffinity is the node affinity scheduling policy, a newer and more expressive policy intended to replace nodeSelector. There are currently two types of node affinity expression:

- requiredDuringSchedulingIgnoredDuringExecution: the rules you specify must be met for a pod to be scheduled onto a Node. This is equivalent to a hard limit.

- preferredDuringSchedulingIgnoredDuringExecution: the scheduler gives priority to Nodes that satisfy the rules and tries to place the pod on one of them, but does not require it. This is equivalent to a soft limit. Multiple preference rules can each carry a weight value that defines their relative priority.

IgnoredDuringExecution means: if the labels of the node a pod is running on change while the pod is running, so that the node no longer meets the pod's node affinity requirements, the system ignores the label change and the pod keeps running on that node.

Operators supported by the NodeAffinity syntax include:

- In: the label value is in a given list

- NotIn: the label value is not in a given list

- Exists: the label exists

- DoesNotExist: the label does not exist

- Gt: the label value is greater than a given value (compared as integers)

- Lt: the label value is less than a given value (compared as integers)
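To make the operator semantics concrete, here is a minimal Python sketch that evaluates one match expression against a node's labels. The function name `match_expression` and the dict layout are assumptions chosen to mirror the YAML fields (`key`, `operator`, `values`); this is not the scheduler's own code.

```python
def match_expression(expr, labels):
    """Evaluate one {key, operator, values} expression against node labels."""
    key = expr["key"]
    op = expr["operator"]
    values = expr.get("values", [])

    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        # A node without the label also satisfies NotIn.
        return labels.get(key) not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    if op in ("Gt", "Lt"):
        # Gt/Lt take exactly one value and compare integers.
        if key not in labels or len(values) != 1:
            return False
        try:
            actual, bound = int(labels[key]), int(values[0])
        except ValueError:
            return False
        return actual > bound if op == "Gt" else actual < bound
    raise ValueError(f"unsupported operator: {op}")
```

For example, `match_expression({"key": "disktype", "operator": "In", "values": ["ssd"]}, {"disktype": "ssd"})` is true, while the same expression against a node without a `disktype` label is false.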

Notes for setting the nodeAffinity rule are as follows:

- If both nodeSelector and nodeAffinity are defined, both must be satisfied for the pod to be scheduled onto a node.

- If nodeAffinity specifies more than one entry in nodeSelectorTerms, a node only needs to match one of them.

- If one entry of nodeSelectorTerms contains multiple matchExpressions, a node must satisfy all of them to run the pod.
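The three notes above amount to "AND across nodeSelector and nodeAffinity, OR across nodeSelectorTerms, AND across matchExpressions". The sketch below models that logic, plus the weight sum used for preferred rules. All function names are hypothetical, and only four operators are handled to keep the example short.

```python
def expr_matches(expr, labels):
    """Evaluate a single match expression (In, NotIn, Exists, DoesNotExist only)."""
    key, op, values = expr["key"], expr["operator"], expr.get("values", [])
    if op == "In":
        return labels.get(key) in values
    if op == "NotIn":
        return labels.get(key) not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not covered by this sketch: {op}")


def term_matches(term, labels):
    """All matchExpressions in one term must match (AND); an empty term matches nothing."""
    exprs = term.get("matchExpressions", [])
    return bool(exprs) and all(expr_matches(e, labels) for e in exprs)


def required_matches(terms, labels):
    """At least one nodeSelectorTerm must match (OR)."""
    return any(term_matches(t, labels) for t in terms)


def node_is_eligible(node_selector, required_terms, labels):
    """nodeSelector and required node affinity must both be satisfied."""
    selector_ok = all(labels.get(k) == v for k, v in node_selector.items())
    affinity_ok = not required_terms or required_matches(required_terms, labels)
    return selector_ok and affinity_ok


def preference_score(preferred, labels):
    """Sum the weights of all preferred rules that the node satisfies."""
    return sum(p["weight"] for p in preferred if term_matches(p["preference"], labels))
```

With a required rule of `disktype In [ssd]` and a preferred rule of `name In [test]` at weight 10, two nodes labeled `disktype=ssd` are both eligible, but the one that also carries `name=test` scores 10 against 0 and is favored.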

    [root@master mainfest]# cat test1.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: test1
      labels:
        app: nginx
    spec:
      containers:
      - name: test1
        image: nginx
        imagePullPolicy: IfNotPresent
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: disktype
                values:
                - ssd
                operator: In
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 10
            preference:
              matchExpressions:
              - key: name
                values:
                - test
                operator: In

    //Label the node2 host with disktype=ssd as well
    [root@master mainfest]# kubectl label node node2 disktype=ssd
    node/node2 labeled
    [root@master mainfest]# kubectl get node node2 --show-labels
    NAME    STATUS   ROLES    AGE     VERSION   LABELS
    node2   Ready    <none>   4d22h   v1.20.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/os=linux

Test: label node1 with name=test

    [root@master ~]# kubectl label node node1 name=test
    node/node1 labeled
    [root@master ~]# kubectl get node node1 --show-labels
    NAME    STATUS   ROLES    AGE     VERSION   LABELS
    node1   Ready    <none>   4d18h   v1.20.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1,kubernetes.io/os=linux,name=test

With node1 labeled name=test, create the pod and check which node it is scheduled to. Deleting the name=test label and repeating the test lets you compare the results:

    [root@master ~]# kubectl label node node1 name=test
    node/node1 labeled
    [root@master ~]# kubectl get node node1 --show-labels
    NAME    STATUS   ROLES    AGE     VERSION   LABELS
    node1   Ready    <none>   4d22h   v1.20.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1,kubernetes.io/os=linux,name=test
    [root@master mainfest]# kubectl apply -f test1.yaml
    pod/test1 created
    [root@master mainfest]# kubectl get pod -o wide
    NAME    READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
    test    1/1     Running   0          46m   10.244.1.92   node1   <none>           <none>
    test1   1/1     Running   0          28s   10.244.1.93   node1   <none>           <none>

3. Taint and Tolerations

Taints: keep Pods from being scheduled onto a specific Node

Tolerations: allow a Pod to be scheduled onto a Node that has Taints

Scenarios:

- Dedicated Nodes: Nodes are managed in groups by line of business. Pods are not scheduled onto them by default and are allowed only if a matching toleration is configured.

- Nodes with special hardware: some Nodes are equipped with SSDs or GPUs, and you want Pods kept off them by default. Scheduling is allowed only if a matching toleration is configured.

- Taint-based eviction

effect description

The following is a brief explanation of the value of effect:

- NoSchedule: if a pod does not declare a toleration for this taint, the system will not schedule the pod onto the node that carries the taint.

- PreferNoSchedule: the soft version of NoSchedule. If a pod does not declare a toleration for this taint, the system tries to avoid scheduling the pod onto the node, but this is not mandatory.

- NoExecute: defines the pod's eviction behavior, for example in response to node failure. A NoExecute taint affects pods that are already running on the node as follows:

  - Pods without a matching toleration are evicted immediately

  - Pods with a matching toleration that does not set tolerationSeconds stay on the node

  - Pods with a matching toleration that sets tolerationSeconds are evicted after the specified time

- Since Kubernetes 1.6, an alpha feature marks node failures as taints (currently only for node unreachable and node not ready, which correspond to NodeCondition "Ready" values of Unknown and False). When the TaintBasedEvictions feature is enabled (TaintBasedEvictions=true is added to the feature-gates parameter), the NodeController automatically sets taints on the Node, and the regular eviction logic previously driven by the node's "Ready" status is disabled. Note that in case of node failure, in order to keep the existing rate limit on pod eviction, the system sets taints on nodes gradually in a rate-limited way. This prevents large numbers of pods from being evicted in certain situations, such as temporary loss of contact with the master. The feature is compatible with tolerationSeconds, which lets a pod define how long a node failure may last before the pod is evicted.
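The NoExecute rules above can be expressed as a small decision function. The sketch below assumes taints and tolerations are plain dicts that mirror the YAML fields, and that the first matching toleration decides the outcome. `tolerates` and `eviction_delay` are hypothetical helper names, not Kubernetes APIs.

```python
def tolerates(toleration, taint):
    """Return True if one toleration matches one taint."""
    effect = toleration.get("effect")
    if effect and effect != taint["effect"]:
        return False  # an empty effect in the toleration matches any effect
    key = toleration.get("key")
    if key and key != taint["key"]:
        return False  # an empty key (with Exists) matches any key
    operator = toleration.get("operator") or "Equal"
    if operator == "Exists":
        return True
    return operator == "Equal" and toleration.get("value", "") == taint.get("value", "")


def eviction_delay(taint, tolerations):
    """Seconds until a running pod is evicted because of `taint`.

    Returns 0 for immediate eviction and None if the pod is not evicted.
    """
    if taint["effect"] != "NoExecute":
        return None  # only NoExecute evicts pods that are already running
    for toleration in tolerations:
        if tolerates(toleration, taint):
            # No tolerationSeconds means the pod stays on the node.
            return toleration.get("tolerationSeconds")
    return 0  # no matching toleration: evicted immediately
```

For a node tainted with `node.kubernetes.io/unreachable` and effect NoExecute, a pod whose toleration sets `tolerationSeconds: 300` gets a delay of 300 seconds, a pod whose toleration omits that field stays, and a pod with no toleration is evicted at once.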

Using the kubectl taint command, you can set taints on a node. Once a node is tainted, it repels pods: the node can refuse to have pods scheduled onto it, or even evict pods that are already running on it.

A taint is composed as follows (key=value:effect):

Each taint has a key and a value that act as its label, where the value can be empty, and an effect that describes what the taint does.

    [root@master mainfest]# kubectl taint node node1 name=test:NoSchedule
    node/node1 tainted
    [root@master mainfest]# kubectl describe node node1|grep -i taint
    Taints:             name=test:NoSchedule
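As an illustration of the key=value:effect format and of how a taint blocks scheduling, the following sketch parses a taint string like the one passed to kubectl taint above and checks whether a pod's tolerations allow it onto the node. `parse_taint`, `tolerates`, and `can_schedule` are hypothetical helper names, and the toleration dict mirrors the fields of a pod's spec.tolerations entry.

```python
def parse_taint(spec):
    """Split 'key=value:effect' (or 'key:effect') into its three parts."""
    body, sep, effect = spec.rpartition(":")
    if not sep or not body or not effect:
        raise ValueError(f"expected key[=value]:effect, got {spec!r}")
    key, _, value = body.partition("=")
    return {"key": key, "value": value, "effect": effect}


def tolerates(toleration, taint):
    """Return True if one toleration matches one taint."""
    effect = toleration.get("effect")
    if effect and effect != taint["effect"]:
        return False
    key = toleration.get("key")
    if key and key != taint["key"]:
        return False
    operator = toleration.get("operator") or "Equal"
    if operator == "Exists":
        return True
    return operator == "Equal" and toleration.get("value", "") == taint.get("value", "")


def can_schedule(node_taints, tolerations):
    """A pod may be scheduled only if every hard taint is tolerated."""
    for taint in node_taints:
        if taint["effect"] == "PreferNoSchedule":
            continue  # soft taint: affects preference, not eligibility
        if not any(tolerates(t, taint) for t in tolerations):
            return False
    return True


node1_taints = [parse_taint("name=test:NoSchedule")]
toleration = {"key": "name", "operator": "Equal", "value": "test", "effect": "NoSchedule"}
print(can_schedule(node1_taints, []))
print(can_schedule(node1_taints, [toleration]))
```

A pod without tolerations is rejected by the tainted node, while a pod carrying the matching toleration is allowed. The toleration only permits scheduling onto the node; it does not attract the pod to it.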

Create a test pod named test2:

    apiVersion: v1
    kind: Pod
    metadata:
      name: test2
      labels:
        app: nginx
    spec:
      containers:
      - name: test2
        image: nginx
        imagePullPolicy: IfNotPresent
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: disktype
                values:
                - ssd
                operator: In
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            preference:
              matchExpressions:
              - key: name
                values:
                - test
                operator: In

    [root@master mainfest]# kubectl apply -f test2.yaml
    pod/test2 created
    [root@master mainfest]# kubectl get pod -o wide
    NAME    READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES

Normally, test2 would be created on node1, but because we set a taint on node1, it is not created there. Once we remove the taint, the pod can be created on node1 again.

    [root@master mainfest]# kubectl taint node node1 name-
    node/node1 untainted
    [root@master mainfest]# kubectl describe node node1|grep -i taint
    Taints:             <none>
    [root@master mainfest]# kubectl apply -f test2.yaml
    pod/test2 created
    [root@master mainfest]# kubectl get pod -o wide
    NAME    READY   STATUS    RESTARTS   AGE      IP             NODE    NOMINATED NODE   READINESS GATES
    test    1/1     Running   0          58m      10.244.1.92    node1   <none>           <none>
    test1   1/1     Running   0          5m12s    10.244.2.93    node2   <none>           <none>
    test2   1/1     Running   0          12m38s   10.244.2.132   node2   <none>           <none>