Resource scheduling of K8s

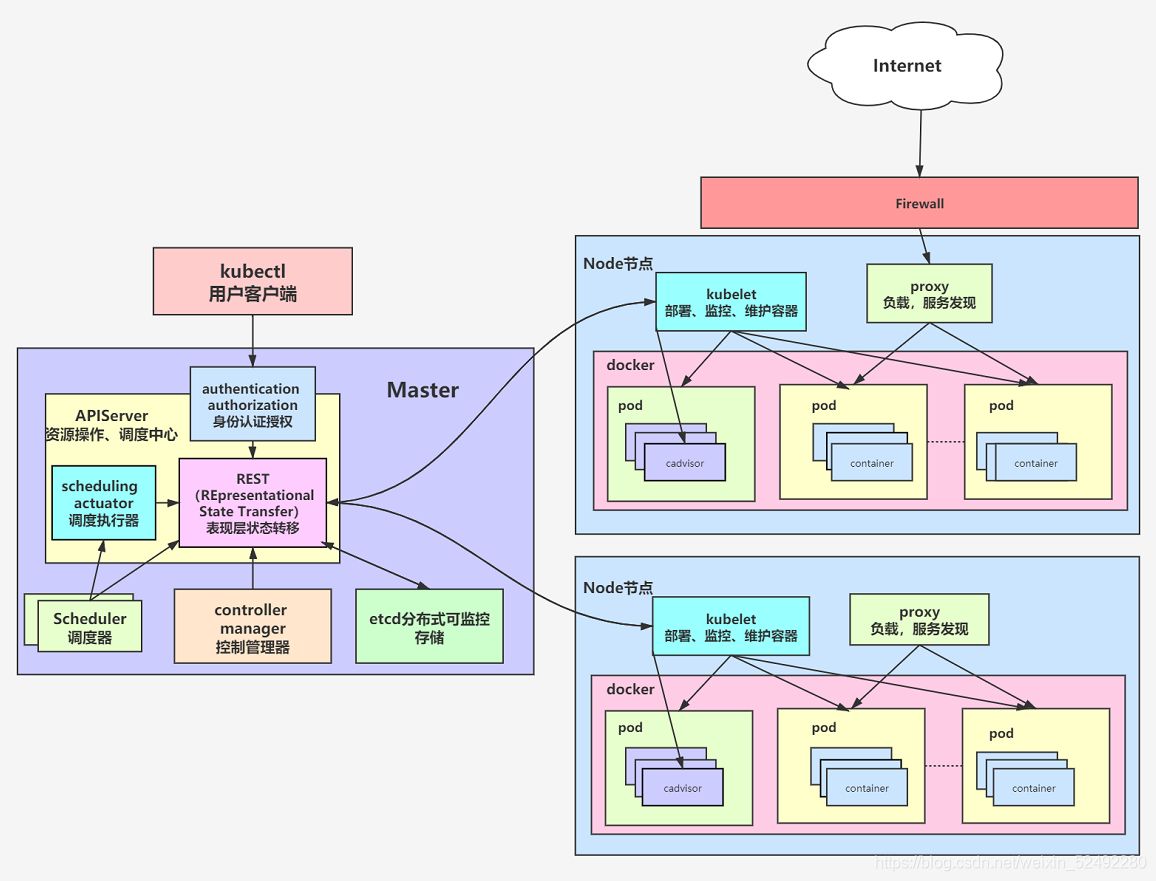

Overall architecture of K8s

Kubernetes follows a master-worker distributed architecture. A cluster consists mainly of Master Nodes and Worker Nodes, together with the command-line client kubectl and optional add-ons.

- Master Node: the control node, which schedules and manages the cluster. It consists of the API Server, the Scheduler, the Cluster State Store (etcd) and the Controller Manager Server;

- Worker Node: the node that does the actual work and hosts the containers that run business applications. It includes kubelet, kube-proxy and a Container Runtime;

- kubectl: the command-line tool used to talk to the API Server and operate Kubernetes, that is, to add, delete, modify and query the resources in the cluster;

- Add-ons: extensions of the core functions of Kubernetes, for example networking and network policy capabilities.

- Replication: used to scale the number of replicas

- Endpoint: used to manage network requests

- Scheduler: binds Pods to Nodes

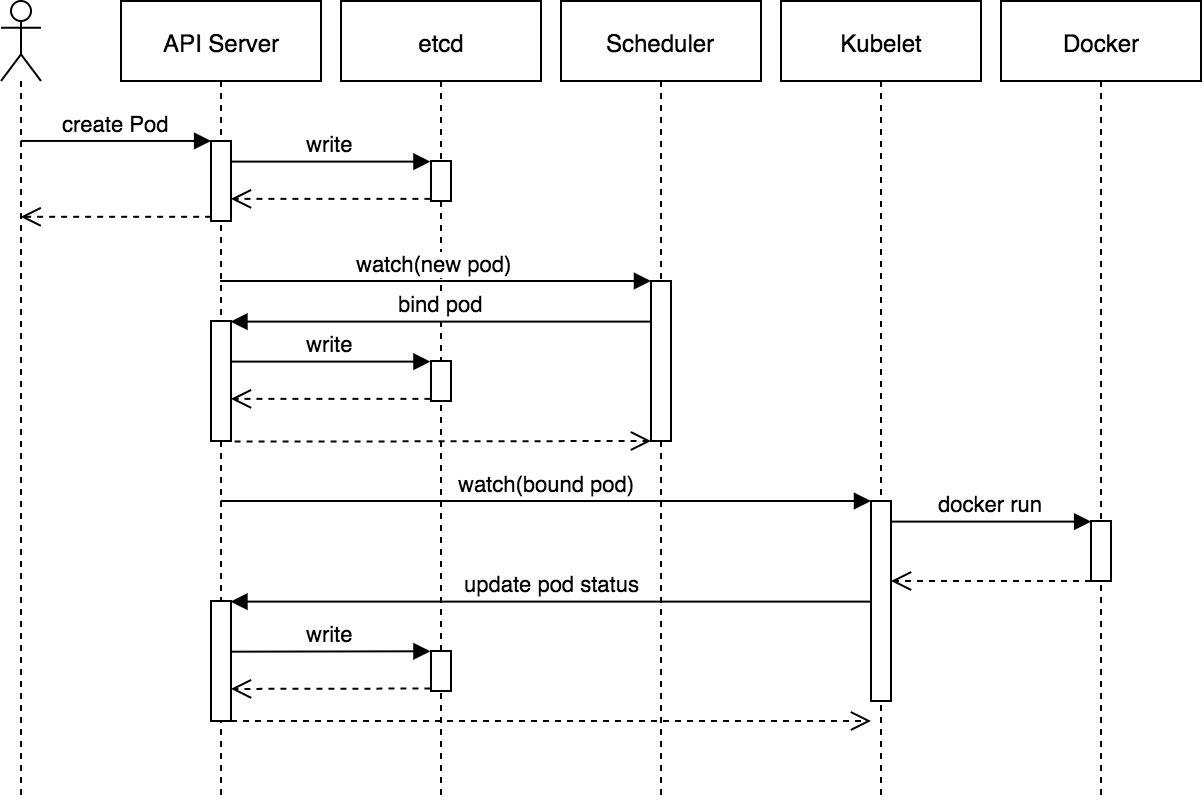

Typical process

The whole process of creating a Pod is as follows:

- The user submits a request to create a Pod through the REST API of the API Server or through the kubectl command-line tool. Both JSON and YAML formats are supported;

- The API Server processes the request and stores the Pod data in etcd;

- The Scheduler notices the new Pod through the watch mechanism of the API Server and tries to bind it to a Node;

- Filter hosts: the scheduler applies a set of rules to filter out unqualified hosts. For example, if the Pod specifies required resources, hosts with insufficient resources are filtered out;

- Score hosts: the scheduler scores the hosts that passed the first step. At this stage it considers overall optimization strategies, such as spreading the replicas of a Replication Controller across different hosts, or preferring the host with the lowest load;

- Select host: the scheduler selects the host with the highest score, performs the binding, and stores the result in etcd;

- kubelet creates the Pod according to the scheduling result: once the binding succeeds, the scheduler calls the API Server to create a binding object in etcd that records which Pods are bound to which worker node. The kubelet running on each worker node periodically synchronizes its bound Pod information through the API Server. When it finds a bound Pod that should run on its node but has not been started yet, it calls the Docker API to create and start the containers in the Pod.
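The filter, score and select steps above can be sketched in a few lines of Python. This is only a toy model under stated assumptions, not the real kube-scheduler: the `Node` and `PodRequest` types, their field names, and the "fewer pods means lower load" scoring rule are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_millis: int  # unallocated CPU in millicores (hypothetical field)
    free_mem_mib: int     # unallocated memory in MiB (hypothetical field)
    pod_count: int        # crude stand-in for "load" in this sketch


@dataclass
class PodRequest:
    cpu_millis: int
    mem_mib: int


def filter_nodes(pod, nodes):
    """Filtering step: keep only nodes that can fit the requested resources."""
    return [
        n for n in nodes
        if n.free_cpu_millis >= pod.cpu_millis and n.free_mem_mib >= pod.mem_mib
    ]


def score_node(node):
    """Scoring step: toy rule where fewer running pods gives a higher score."""
    return -node.pod_count


def select_node(pod, nodes):
    """Selection step: return the best node name, or None if nothing qualifies."""
    candidates = filter_nodes(pod, nodes)
    if not candidates:
        return None  # no host passed filtering, so nothing can be bound
    return max(candidates, key=score_node).name


if __name__ == "__main__":
    cluster = [
        Node("node1", 500, 1024, 3),
        Node("node2", 2000, 4096, 5),
        Node("node3", 2000, 4096, 1),
    ]
    print(select_node(PodRequest(cpu_millis=1000, mem_mib=512), cluster))
```

In this sketch node1 is dropped during filtering because it lacks CPU, and node3 wins the scoring step because it runs fewer pods than node2.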

nodeSelector

nodeSelector is the simplest way to constrain which nodes a pod can run on. It is a field of the pod spec.

Use --show-labels to view the labels of the specified node:

```
[root@master ~]# kubectl get node node1.example.com --show-labels
NAME                STATUS   ROLES    AGE    VERSION   LABELS
node1.example.com   Ready    <none>   4d6h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1.example.com,kubernetes.io/os=linux
```

If no additional labels have been added to the node, you will see only the default labels shown above. You can add a label to a node with the kubectl label node command:

```
[root@master ~]# kubectl label node node1.example.com disktype=ssd
node/node1.example.com labeled
[root@master ~]# kubectl get node node1.example.com --show-labels
NAME                STATUS   ROLES    AGE    VERSION   LABELS
node1.example.com   Ready    <none>   4d6h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1.example.com,kubernetes.io/os=linux
```

You can also delete a label with kubectl label node, by appending a minus sign to the key:

```
[root@master ~]# kubectl label node node1.example.com disktype-
node/node1.example.com unlabeled
[root@master ~]# kubectl get node node1.example.com --show-labels
NAME                STATUS   ROLES    AGE    VERSION   LABELS
node1.example.com   Ready    <none>   4d6h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1.example.com,kubernetes.io/os=linux
```

Create a test pod and specify the nodeSelector option to bind nodes:

```
[root@master ~]# kubectl label node node1.example.com disktype=ssd
node/node1.example.com labeled
[root@master ~]# kubectl get node node1.example.com --show-labels
NAME                STATUS   ROLES    AGE    VERSION   LABELS
node1.example.com   Ready    <none>   4d6h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1.example.com,kubernetes.io/os=linux
[root@master ~]# vi test.yml
[root@master ~]# cat test.yml
apiVersion: v1
kind: Pod
metadata:
  name: test
  labels:
    env: test
spec:
  containers:
  - name: test
    image: nginx
    imagePullPolicy: IfNotPresent
  nodeSelector:
    disktype: ssd
```

Check which node each pod is scheduled to. With this nodeSelector, the test pod can only be scheduled to a node that carries the label disktype=ssd.

```
[root@master ~]# kubectl get pod -o wide
NAME                      READY   STATUS    RESTARTS       AGE     IP            NODE                NOMINATED NODE   READINESS GATES
httpd1-57c7b6f7cb-sk86h   1/1     Running   4 (173m ago)   2d      10.244.1.93   node2.example.com   <none>           <none>
nginx1-7cf8bc594f-8j8tv   1/1     Running   1 (173m ago)   3h40m   10.244.1.94   node2.example.com   <none>           <none>
```
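The matching rule behind nodeSelector is simple enough to express directly: every key/value pair in the selector must appear among the node's labels. The following is a minimal sketch of that rule; the function name `node_selector_matches` is hypothetical and only the label values come from the example above.

```python
def node_selector_matches(node_labels, node_selector):
    """Return True if every selector key/value pair is present on the node."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


if __name__ == "__main__":
    # Labels taken from the node1 example, before and after adding disktype=ssd.
    default_labels = {
        "kubernetes.io/arch": "amd64",
        "kubernetes.io/hostname": "node1.example.com",
        "kubernetes.io/os": "linux",
    }
    labeled = dict(default_labels, disktype="ssd")

    selector = {"disktype": "ssd"}
    print(node_selector_matches(default_labels, selector))
    print(node_selector_matches(labeled, selector))
```

An empty selector matches every node in this sketch, which mirrors a pod that does not set nodeSelector at all.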

nodeAffinity

nodeAffinity is the node affinity scheduling policy, a newer and more expressive policy intended to replace nodeSelector. At present there are two kinds of node affinity expression:

requiredDuringSchedulingIgnoredDuringExecution:

The rules must be met before the pod can be scheduled to the Node. This is equivalent to a hard limit.

preferredDuringSchedulingIgnoredDuringExecution:

The scheduler gives priority to nodes that meet the rules and tries to schedule the pod to such a Node, but this is not mandatory, which makes it a soft restriction. Each preferred rule can also carry a weight value to express its relative priority.

IgnoredDuringExecution means:

If the labels of the node where a pod is running change while the pod is running, so that the node no longer meets the pod's node affinity requirements, the system ignores the label change and the pod continues to run on that node.

The operators supported by NodeAffinity syntax include:

- In: the value of label is in a list

- NotIn: the value of label is not in a list

- Exists: a label exists

- DoesNotExist: a label does not exist

- Gt: the value of label is greater than a certain value

- Lt: the value of label is less than a certain value

Precautions for nodeAffinity rule setting are as follows:

If both nodeSelector and nodeAffinity are defined, a node must meet both conditions before the pod can run on it.

If nodeAffinity specifies multiple nodeSelectorTerms, a node only needs to match one of them.

If there are multiple matchExpressions in one nodeSelectorTerms entry, a node must meet all of the matchExpressions to run the pod.

```
apiVersion: v1
kind: Pod
metadata:
  name: test1
  labels:
    app: nginx
spec:
  containers:
  - name: test1
    image: nginx
    imagePullPolicy: IfNotPresent
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:   # Hard strategy
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype
            values:
            - ssd
            operator: In
      preferredDuringSchedulingIgnoredDuringExecution:  # Soft strategy
      - weight: 10
        preference:
          matchExpressions:
          - key: name
            values:
            - test
            operator: In
```

Label node2 with disktype=ssd:

```
[root@master ~]# kubectl label node node2.example.com disktype=ssd
node/node2.example.com labeled
[root@master ~]# kubectl get node node2.example.com --show-labels
NAME                STATUS   ROLES    AGE    VERSION   LABELS
node2.example.com   Ready    <none>   4d7h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2.example.com,kubernetes.io/os=linux
```

Label node1 with name=test, apply the manifest, and check where the pod is scheduled:

```
[root@master ~]# kubectl label node node1.example.com name=test
node/node1.example.com labeled
[root@master ~]# kubectl get node node1.example.com --show-labels
NAME                STATUS   ROLES    AGE    VERSION   LABELS
node1.example.com   Ready    <none>   4d7h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1.example.com,kubernetes.io/os=linux,name=test
[root@master ~]# kubectl apply -f test.yml
pod/test created
[root@master ~]# kubectl get pod -o wide
NAME                      READY   STATUS    RESTARTS       AGE     IP            NODE                NOMINATED NODE   READINESS GATES
httpd1-57c7b6f7cb-sk86h   1/1     Running   4 (178m ago)   2d      10.244.1.93   node2.example.com   <none>           <none>
nginx1-7cf8bc594f-8j8tv   1/1     Running   1 (178m ago)   3h45m   10.244.1.94   node2.example.com   <none>           <none>
test                      1/1     Running   0              13s     10.244.1.95   node2.example.com   <none>           <none>
```

Delete the label name=test on node1:

```
[root@master ~]# kubectl label node node1.example.com name-
node/node1.example.com unlabeled
[root@master ~]# kubectl get pod -o wide
NAME                      READY   STATUS    RESTARTS       AGE     IP            NODE                NOMINATED NODE   READINESS GATES
httpd1-57c7b6f7cb-sk86h   1/1     Running   4 (179m ago)   2d1h    10.244.1.93   node2.example.com   <none>           <none>
nginx1-7cf8bc594f-8j8tv   1/1     Running   1 (179m ago)   3h46m   10.244.1.94   node2.example.com   <none>           <none>
test                      1/1     Running   0              44s     10.244.1.95   node2.example.com   <none>           <none>
```

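The pod above must run on a node labeled disktype=ssd. If several nodes carry this label, the scheduler prefers the one that is also labeled name=test. Because the preference is only a soft rule, the pod may still be placed on another qualifying node, as the output above shows with node2.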
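The rules for operators, nodeSelectorTerms and matchExpressions can be sketched as follows. This is an illustrative model, not the scheduler's actual code: the function names are hypothetical, labels are plain dictionaries, and Gt/Lt are assumed to compare the label value as an integer against a single value. The required terms act as a filter, while the preferred terms only add their weight to a node's score.

```python
def match_expression(labels, expr):
    """Evaluate one matchExpressions entry against a node's label dict."""
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return labels.get(key) not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    if op in ("Gt", "Lt"):
        try:
            actual, bound = int(labels[key]), int(values[0])
        except (KeyError, IndexError, ValueError):
            return False  # missing label or non-integer value never matches
        return actual > bound if op == "Gt" else actual < bound
    raise ValueError(f"unknown operator: {op}")


def match_term(labels, term):
    """All matchExpressions inside one term must hold (logical AND)."""
    exprs = term.get("matchExpressions", [])
    return bool(exprs) and all(match_expression(labels, e) for e in exprs)


def required_ok(labels, node_selector_terms):
    """Hard rule: one matching term is enough (logical OR across terms)."""
    return any(match_term(labels, t) for t in node_selector_terms)


def preferred_score(labels, preferred):
    """Soft rule: sum the weights of every preference the node satisfies."""
    return sum(p["weight"] for p in preferred if match_term(labels, p["preference"]))


if __name__ == "__main__":
    required = [{"matchExpressions": [
        {"key": "disktype", "operator": "In", "values": ["ssd"]}]}]
    preferred = [{"weight": 10, "preference": {"matchExpressions": [
        {"key": "name", "operator": "In", "values": ["test"]}]}}]

    node1 = {"disktype": "ssd", "name": "test"}
    node2 = {"disktype": "ssd"}
    for name, labels in (("node1", node1), ("node2", node2)):
        print(name, required_ok(labels, required), preferred_score(labels, preferred))
```

Both nodes pass the hard rule here, and only node1 earns the extra weight. That weight is one input to the overall node score rather than a guarantee.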

Taints and tolerations

Taints: keep Pods from being scheduled to a specific Node

Tolerations: allow a Pod to be scheduled to a Node that holds Taints

Application scenarios:

- Dedicated nodes: nodes are grouped and managed by business line. Pods should not be scheduled to such a Node by default; scheduling is allowed only when a toleration is configured

- Nodes with special hardware: some nodes are equipped with SSDs, hard disks or GPUs. Pods should not be scheduled to such a Node by default; scheduling is allowed only when a toleration is configured

- Taint-based eviction

```
# Taint
[root@master haproxy]# kubectl describe node master
Name:               master.example.com
Roles:              control-plane,master
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/arch=amd64
                    kubernetes.io/hostname=master.example.com
                    kubernetes.io/os=linux
                    node-role.kubernetes.io/control-plane=
                    node-role.kubernetes.io/master=
                    node.kubernetes.io/exclude-from-external-load-balancers=
Annotations:        flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"8e:50:ba:7a:30:2b"}
                    flannel.alpha.coreos.com/backend-type: vxlan
                    flannel.alpha.coreos.com/kube-subnet-manager: true
                    flannel.alpha.coreos.com/public-ip: 192.168.240.30
                    kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock
                    node.alpha.kubernetes.io/ttl: 0
                    volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp:  Sun, 19 Dec 2021 02:41:49 -0500
Taints:             node-role.kubernetes.io/master:NoSchedule   # Taints: keep Pods from being scheduled to specific nodes
Unschedulable:      false
```

```
# Tolerations
[root@master ~]# kubectl describe pod httpd1-57c7b6f7cb-sk86h
Name:         httpd1-57c7b6f7cb-sk86h
Namespace:    default
·····
Tolerations:  node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
              node.kubernetes.io/unreachable:NoExecute op=Exists for 300s   # Tolerations allow the Pod to be scheduled to nodes that hold Taints
Events:
  Type     Reason                  Age   From     Message
  ----     ------                  ----  ----     -------
  Warning  FailedCreatePodSandBox  12m   kubelet  Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "2126da4117ba6ce45ddff8a2e0b9de59bac65f05c7be343249d50edea2cacf37" network for pod "httpd1-57c7b6f7cb-sk86h": networkPlugin cni failed to set up pod "httpd1-57c7b6f7cb-sk86h_default" network: open /run/flannel/subnet.env: no such file or directory
  Warning  FailedCreatePodSandBox  12m   kubelet  Failed to create pod "best2001/httpd"
  Normal   Pulled                  11m   kubelet  Successfully pulled image "best2001/httpd" in 16.175310708s
  Normal   Created                 11m   kubelet  Created container httpd1
  Normal   Started                 11m   kubelet  Started container httpd1
```

Adding a taint to a node

Format: kubectl taint node [node] key=value:[effect]

For example: kubectl taint node k8s-node1 gpu=yes:NoSchedule

Verification: kubectl describe node k8s-node1 | grep Taint

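Where [effect] can take one of these values:

- NoSchedule: Pods must not be scheduled to the node unless a matching toleration is configured

- PreferNoSchedule: the scheduler tries not to schedule Pods to the node, but this is not guaranteed

- NoExecute: new Pods are not scheduled to the node, and existing Pods on the node that do not tolerate the taint are evicted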
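How a toleration is compared against a taint can be sketched as below. This is a simplified model under stated assumptions rather than the scheduler's own code: taints and tolerations are plain dictionaries with the field names used in Pod and Node specs (key, value, effect, operator), the function names are hypothetical, and timing aspects such as the "for 300s" seen in the pod description are left out.

```python
def tolerates(toleration, taint):
    """Return True if a single toleration matches a single taint."""
    # A toleration without an effect matches taints of any effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    operator = toleration.get("operator", "Equal")
    if operator == "Exists":
        # Exists ignores the value; an empty key tolerates every taint key.
        return not toleration.get("key") or toleration["key"] == taint["key"]
    # Equal: both key and value must be identical.
    return (toleration.get("key") == taint["key"]
            and toleration.get("value", "") == taint.get("value", ""))


def can_schedule(tolerations, taints):
    """A node is ruled out if any NoSchedule/NoExecute taint is not tolerated.

    PreferNoSchedule taints never rule a node out; they only make it less
    attractive, so they are skipped here.
    """
    for taint in taints:
        if taint["effect"] not in ("NoSchedule", "NoExecute"):
            continue
        if not any(tolerates(t, taint) for t in tolerations):
            return False
    return True


if __name__ == "__main__":
    gpu_taint = {"key": "gpu", "value": "yes", "effect": "NoSchedule"}
    gpu_toleration = {"key": "gpu", "operator": "Equal",
                      "value": "yes", "effect": "NoSchedule"}
    print(can_schedule([], [gpu_taint]))
    print(can_schedule([gpu_toleration], [gpu_taint]))
```

The taint in the demo mirrors the gpu=yes:NoSchedule example above: a Pod without tolerations is kept off the node, while a Pod carrying the matching toleration is allowed.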

Add the tolerations field to the Pod configuration

```
# Add a taint with the key disktype
[root@master haproxy]# kubectl taint node node1.example.com disktype:NoSchedule
node/node1.example.com tainted

# View the node
[root@master haproxy]# kubectl describe node node1.example.com
Name:               node1.example.com
Roles:              <none>
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/arch=amd64
                    kubernetes.io/hostname=node1.example.com
                    kubernetes.io/os=linux
Annotations:        flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"12:9e:43:99:21:bd"}
                    flannel.alpha.coreos.com/backend-type: vxlan
                    flannel.alpha.coreos.com/kube-subnet-manager: true
                    flannel.alpha.coreos.com/public-ip: 192.168.240.50
                    kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock
                    node.alpha.kubernetes.io/ttl: 0
                    volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp:  Sun, 19 Dec 2021 03:27:16 -0500
Taints:             disktype:NoSchedule   # Taint added successfully

# Test: create a pod
[root@master haproxy]# kubectl apply -f nginx.yml
deployment.apps/nginx1 created
service/nginx1 created
[root@master haproxy]# kubectl get pods -o wide
NAME                      READY   STATUS    RESTARTS   AGE   IP            NODE                NOMINATED NODE   READINESS GATES
nginx1-7cf8bc594f-8j8tv   1/1     Running   0          14s   10.244.1.92   node2.example.com   <none>           <none>   # Because node1 is tainted, the pod runs on node2
```

Remove a taint:

Format: kubectl taint node [node] key:[effect]-

```
[root@master haproxy]# kubectl taint node node1.example.com disktype-
node/node1.example.com untainted
[root@master haproxy]# kubectl describe node node1.example.com
Name:               node1.example.com
Roles:              <none>
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/arch=amd64
                    kubernetes.io/hostname=node1.example.com
                    kubernetes.io/os=linux
Annotations:        flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"12:9e:43:99:21:bd"}
                    flannel.alpha.coreos.com/backend-type: vxlan
                    flannel.alpha.coreos.com/kube-subnet-manager: true
                    flannel.alpha.coreos.com/public-ip: 192.168.240.50
                    kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock
                    node.alpha.kubernetes.io/ttl: 0
                    volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp:  Sun, 19 Dec 2021 03:27:16 -0500
Taints:             <none>   # The taint has been removed successfully
Unschedulable:      false
```