Equipment

Here, an RGBD camera mainly means a depth camera that images with Light Coding (structured light) or time of flight (TOF). Such cameras actively emit light, so they are mainly suited to indoor environments.

Common RGBD cameras include:

| Camera | Principle | SDK | Data |
|---|---|---|---|
| PrimeSense Carmine 1.08/1.09 (2012) | Light Coding | OpenNI | link |
| Xbox One Kinect (Kinect v2, 2013) | TOF | OpenNI/Kinect SDK | |
| ASUS Xtion 2/Xtion PRO LIVE (rebadged Carmine 1.08) | Light Coding | OpenNI | link |
| Intel RealSense (D435 etc.) | Active IR stereo (D400 series) | OpenNI/RealSense SDK | link |
| ZED (2016) | Stereo | | |

Data format

Depth data is usually stored as unsigned 16-bit integers (UINT16, i.e. unsigned short, 2 bytes), so values range from 0 to 65535.

The unit is usually the millimeter (mm), but some SDKs also support units of 100 micrometers (µm). For example, OpenNI supports depth pixel formats such as PIXEL_FORMAT_DEPTH_1_MM and PIXEL_FORMAT_DEPTH_100_UM. The resolution is usually 640x480, or in some cases 320x240 with interpolation.
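The two units differ only by a scale factor, so converting a raw sample to meters is a single multiplication. The following is a minimal sketch; the `DepthUnit` enum and the `depthToMeters` helper are hypothetical names introduced for illustration and are not part of OpenNI.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical enum standing in for the two depth units mentioned above
// (1 mm per step and 100 um per step). Not an OpenNI type.
enum class DepthUnit { Millimeter, HundredMicrometers };

// Convert one raw 16-bit depth sample to meters.
inline float depthToMeters(std::uint16_t raw, DepthUnit unit) {
    const float metersPerStep =
        (unit == DepthUnit::Millimeter) ? 1e-3f : 1e-4f;
    return static_cast<float>(raw) * metersPerStep;
}
```

With 16 bits, the 1 mm unit can express up to about 65.5 m, while the 100 µm unit tops out at about 6.55 m in exchange for finer steps.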

A depth sensor only works within a limited range. The PrimeSense Carmine 1.09, for example, works only from 0.35 m to 1.4 m, so its valid data lies between 350 and 1400 (in mm). A reserved value, such as 0 or 65535, usually marks invalid data.
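A validity check along these lines can be sketched as follows. It assumes millimeter units and that both 0 and 65535 are treated as invalid markers; a real device may use only one of them. `DepthRange`, `isValidDepth`, and `countValid` are illustrative names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Working range of a sensor in millimeters (illustrative type).
struct DepthRange {
    std::uint16_t minMm;
    std::uint16_t maxMm;
};

// A sample is valid if it is not a reserved marker (assumed here to be
// 0 or 65535) and lies inside the sensor's working range.
inline bool isValidDepth(std::uint16_t d, DepthRange range) {
    if (d == 0 || d == 65535) return false;
    return d >= range.minMm && d <= range.maxMm;
}

// Count the valid samples in a depth buffer.
inline std::size_t countValid(const std::vector<std::uint16_t>& depth,
                              DepthRange range) {
    std::size_t n = 0;
    for (std::uint16_t d : depth) {
        if (isValidDepth(d, range)) ++n;
    }
    return n;
}
```

For the Carmine 1.09 range quoted above, the range would be `DepthRange{350, 1400}`.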

The RGB sensor and the depth sensor usually have different fields of view (FOV), so registration is needed. Registration makes the RGB and depth pixels correspond one to one. Which image is registered to which matters little, because a depth map can be interpolated much like a color image.

OpenNI, for example, provides setImageRegistrationMode(IMAGE_REGISTRATION_DEPTH_TO_COLOR), which crops and scales the depth map so that its FOV matches the RGB image exactly. The edges are lost in the process: they are filled with invalid depth pixels.

Storage and reading

According to the previous description, the representation of depth maps is also simple, as follows:

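```cpp
// A 640x480 image stored row by row: [rows][columns]
unsigned char RGBData[480][640][3];       // 3 bytes per pixel
typedef unsigned short UINT16, *PUINT16;
UINT16 DepthData[480][640];               // 2 bytes per pixel
```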

We can save and read the data ourselves, or read and write it with a third-party library such as OpenCV.
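Saving and reading the data ourselves can be as simple as dumping the 16-bit samples to a binary file. The sketch below uses only the standard library and writes each sample as two little-endian bytes; the headerless raw layout and the function names `saveDepthRaw` and `loadDepthRaw` are assumptions made for illustration, not an established file format.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <fstream>
#include <string>
#include <vector>

// Write depth samples as raw little-endian 16-bit values (no header).
inline bool saveDepthRaw(const std::string& path,
                         const std::vector<std::uint16_t>& depth) {
    std::ofstream out(path, std::ios::binary);
    if (!out) return false;
    for (std::uint16_t d : depth) {
        const char bytes[2] = {static_cast<char>(d & 0xFF),
                               static_cast<char>(d >> 8)};
        out.write(bytes, 2);
    }
    out.flush();
    return static_cast<bool>(out);
}

// Read pixelCount samples back; the caller must know the image size,
// because the raw file stores no width or height.
inline bool loadDepthRaw(const std::string& path, std::size_t pixelCount,
                         std::vector<std::uint16_t>& depth) {
    std::ifstream in(path, std::ios::binary);
    if (!in) return false;
    depth.resize(pixelCount);
    for (std::size_t i = 0; i < pixelCount; ++i) {
        unsigned char bytes[2];
        if (!in.read(reinterpret_cast<char*>(bytes), 2)) return false;
        depth[i] = static_cast<std::uint16_t>(bytes[0] | (bytes[1] << 8));
    }
    return true;
}
```

Writing the bytes explicitly keeps the file independent of the byte order of the machine that produced it.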

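When handling depth maps with OpenCV, use the CV_16U format, for example:

```cpp
#include <cstring>             // memcpy
#include <opencv2/core.hpp>
#include <OpenNI.h>

using openni::VideoFrameRef;

// Copy an OpenNI frame into a cv::Mat.
// Depth map: PNG16 type (short), little-endian (low bits in the low byte).
// RGB image: BGR24 type.
// dataStride is the number of bytes per pixel; rows are assumed to be
// tightly packed in the frame buffer.
inline cv::Mat getMat(const VideoFrameRef &frame, int dataStride, int openCVFormat) {
    int h = frame.getHeight();
    int w = frame.getWidth();
    cv::Mat image = cv::Mat(h, w, openCVFormat);
    memcpy(image.data, frame.getData(), h * w * dataStride);
    // cv::flip(image, image, 1);
    return image;
}

// Usage:
getMat(frame, 2, CV_16U);    // For Depth
getMat(frame, 3, CV_8UC3);   // For RGB
```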

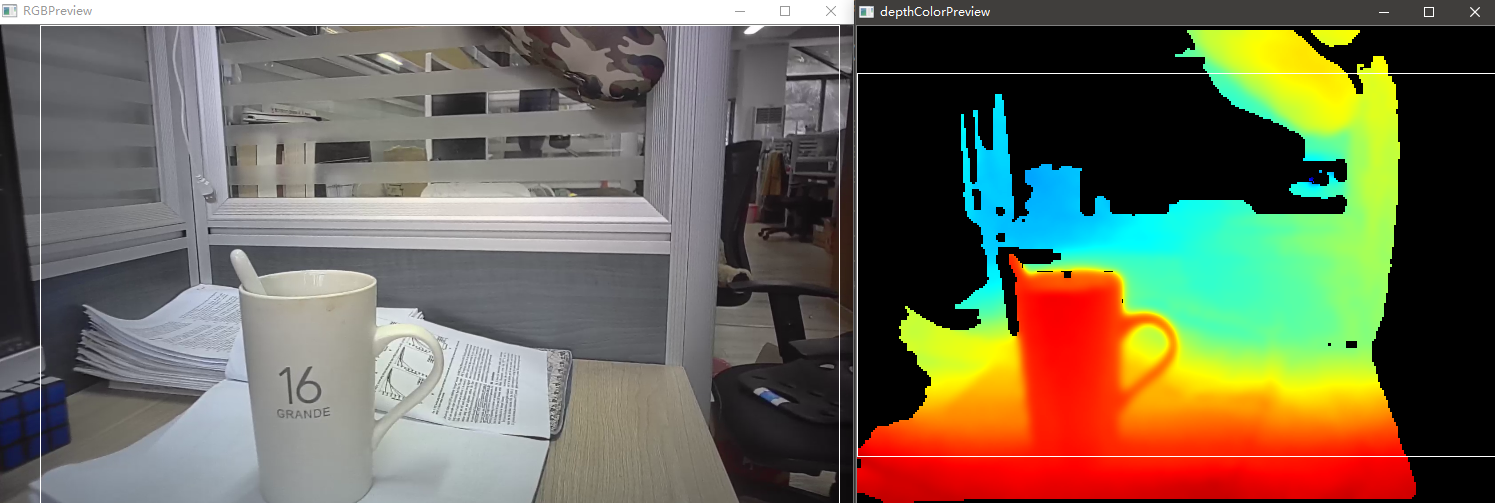

Visualization

Depth maps can be visualized in many ways, for example as a three-dimensional point cloud or a mesh. The acquired depth data is usually a two-dimensional image, however, so it must be converted to three-dimensional data before it can be shown that way. As a two-dimensional image, it is displayed with a color map.

Obtaining three-dimensional coordinates

In OpenNI, there is such an interface, as follows:

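```cpp
// depthImage is the CV_16U cv::Mat; depth is the openni::VideoStream it came from.
UINT16 deep = depthImage.at<UINT16>(row, col);   // depth value at pixel (col, row)
float fx, fy, fz;                                // resulting world coordinates
openni::CoordinateConverter::convertDepthToWorld(depth, col, row, deep, &fx, &fy, &fz);
```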

What does this mean? Let's take a look at the implementation.

```cpp
OniStatus VideoStream::convertDepthToWorldCoordinates(float depthX, float depthY, float depthZ,
                                                      float* pWorldX, float* pWorldY, float* pWorldZ)
{
    if (m_pSensorInfo->sensorType != ONI_SENSOR_DEPTH)
    {
        m_errorLogger.Append("convertDepthToWorldCoordinates: Stream is not from DEPTH\n");
        return ONI_STATUS_NOT_SUPPORTED;
    }

    float normalizedX = depthX / m_worldConvertCache.resolutionX - .5f;
    float normalizedY = .5f - depthY / m_worldConvertCache.resolutionY;

    *pWorldX = normalizedX * depthZ * m_worldConvertCache.xzFactor;
    *pWorldY = normalizedY * depthZ * m_worldConvertCache.yzFactor;
    *pWorldZ = depthZ;
    return ONI_STATUS_OK;
}

OniStatus VideoStream::convertWorldToDepthCoordinates(float worldX, float worldY, float worldZ,
                                                      float* pDepthX, float* pDepthY, float* pDepthZ)
{
    if (m_pSensorInfo->sensorType != ONI_SENSOR_DEPTH)
    {
        m_errorLogger.Append("convertWorldToDepthCoordinates: Stream is not from DEPTH\n");
        return ONI_STATUS_NOT_SUPPORTED;
    }

    *pDepthX = m_worldConvertCache.coeffX * worldX / worldZ + m_worldConvertCache.halfResX;
    *pDepthY = m_worldConvertCache.halfResY - m_worldConvertCache.coeffY * worldY / worldZ;
    *pDepthZ = worldZ;
    return ONI_STATUS_OK;
}
```

In general, the depth value is used directly as the Z coordinate, while X and Y are obtained by multiplying Z by a factor that depends on the pixel position and the camera's field of view. The data structure of m_worldConvertCache is shown below with one possible set of parameter values; they may differ from camera to camera:

```cpp
struct WorldConversionCache
{
    float xzFactor = 1.342392;
    float yzFactor = 1.006794;
    float coeffX = 476.760925;
    float coeffY = 476.760956;
    float distanceScale = 1.000000;
    int resolutionX = 640;
    int resolutionY = 480;
    int halfResX = 320;
    int halfResY = 240;
};
```

The specific calculation methods are as follows:

```cpp
m_worldConvertCache.xzFactor = tan(horizontalFov / 2) * 2;
m_worldConvertCache.yzFactor = tan(verticalFov / 2) * 2;
m_worldConvertCache.resolutionX = videoMode.resolutionX;
m_worldConvertCache.resolutionY = videoMode.resolutionY;
m_worldConvertCache.halfResX = m_worldConvertCache.resolutionX / 2;
m_worldConvertCache.halfResY = m_worldConvertCache.resolutionY / 2;
m_worldConvertCache.coeffX = m_worldConvertCache.resolutionX / m_worldConvertCache.xzFactor;
m_worldConvertCache.coeffY = m_worldConvertCache.resolutionY / m_worldConvertCache.yzFactor;
```
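The same calculation can be tried outside OpenNI. This is a minimal standalone sketch, assuming the FOV angles are given in radians; `ConversionFactors` and `makeFactors` are illustrative names, not OpenNI types.

```cpp
#include <cassert>
#include <cmath>

// Standalone stand-in for the FOV-dependent part of the cache (illustrative).
struct ConversionFactors {
    float xzFactor;  // full image width at unit depth:  2 * tan(hFov / 2)
    float yzFactor;  // full image height at unit depth: 2 * tan(vFov / 2)
    float coeffX;    // pixels per unit of X/Z
    float coeffY;    // pixels per unit of Y/Z
};

// Build the factors from the two FOV angles (radians) and the resolution.
inline ConversionFactors makeFactors(float hFovRad, float vFovRad,
                                     int resX, int resY) {
    ConversionFactors f;
    f.xzFactor = std::tan(hFovRad / 2) * 2;
    f.yzFactor = std::tan(vFovRad / 2) * 2;
    f.coeffX = resX / f.xzFactor;
    f.coeffY = resY / f.yzFactor;
    return f;
}
```

For a 640x480 sensor with square pixels, the two coefficients come out almost equal, which matches the example values listed earlier (476.760925 and 476.760956).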

To calculate these parameters, we need to know the field of view angle of the camera in both directions and the resolution of the image. A possible way to calculate the field of view angle is as follows:

```cpp
float focusLength = (m_stLensParam.stDepthLensParam.dFocalLengthX * m_stLensParam.stSensorParam.dPixelWidth / 1000 +
                     m_stLensParam.stDepthLensParam.dFocalLengthY * m_stLensParam.stSensorParam.dPixelHeight / 1000) / 2;
float fovH = 2 * atan(m_stLensParam.stSensorParam.dPixelWidth * m_worldConvertCache.resolutionX / 2 / 1000 / focusLength);
float fovV = 2 * atan(m_stLensParam.stSensorParam.dPixelHeight * m_worldConvertCache.resolutionY / 2 / 1000 / focusLength);
```
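When the pixels are square and the two focal lengths are equal, the pixel size cancels out of the expressions above, and the field of view depends only on the resolution and the focal length in pixels. The sketch below shows that simplified form; it is an assumption-based shortcut, not the code of any particular SDK, and `fovFromFocalPixels` is an illustrative name.

```cpp
#include <cassert>
#include <cmath>

// Field of view (radians) along one axis, from the resolution along that
// axis and the focal length expressed in pixels.
// Assumes square pixels and a principal point at the image center.
inline float fovFromFocalPixels(int resolution, float focalLengthPixels) {
    assert(focalLengthPixels > 0.0f);
    return 2.0f * std::atan(resolution / (2.0f * focalLengthPixels));
}
```

Under these assumptions, a horizontal resolution of 640 and a focal length of 476.760925 pixels give 2 * tan(fovH / 2) of about 1.3424, in line with the example xzFactor listed earlier. This suggests that coeffX and coeffY play the role of the focal length in pixels.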

With three-dimensional coordinates, we can draw a point cloud or a mesh and apply 2D texture mapping at the same time; that is not covered here.
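As a starting point for a point cloud, the per-pixel conversion can be applied to a whole depth image. The following sketch mirrors the normalization used in the OpenNI implementation quoted earlier; it assumes a row-major buffer, millimeter depth values, and 0 as the only invalid marker. `Point3f` and `depthToPointCloud` are illustrative names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Point3f {
    float x, y, z;
};

// Convert a row-major depth image into a list of 3D points.
// Pixels with depth 0 are treated as invalid and skipped (assumption).
inline std::vector<Point3f> depthToPointCloud(
        const std::vector<std::uint16_t>& depth, int width, int height,
        float xzFactor, float yzFactor) {
    assert(depth.size() == static_cast<std::size_t>(width) * height);
    std::vector<Point3f> cloud;
    cloud.reserve(depth.size());
    for (int row = 0; row < height; ++row) {
        for (int col = 0; col < width; ++col) {
            const std::uint16_t z = depth[static_cast<std::size_t>(row) * width + col];
            if (z == 0) continue;
            // Normalize pixel coordinates to [-0.5, 0.5), with Y pointing up.
            const float nx = static_cast<float>(col) / width - 0.5f;
            const float ny = 0.5f - static_cast<float>(row) / height;
            cloud.push_back({nx * z * xzFactor, ny * z * yzFactor,
                             static_cast<float>(z)});
        }
    }
    return cloud;
}
```

The resulting points are in the same unit as the depth values, with the origin at the camera and Z pointing along the viewing direction.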

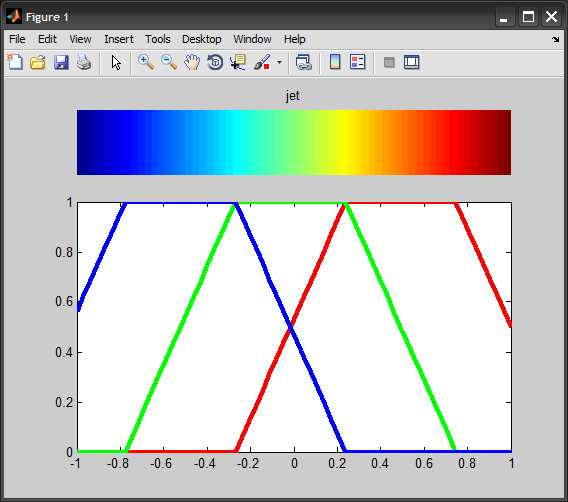

ColorMap

There are many kinds of color maps. The main idea is to represent different ranges of values with smoothly changing colors.

Taking the commonly used MATLAB Jet color map as an example, we can specify that the farther the depth, the bluer the color, and the nearer, the redder.

Referring to the code given in the link, we can visualize the image within a specified depth range:

```cpp
#include <cmath>   // std::abs

typedef struct {
    double r, g, b;
} COLOUR;

// Map v in [vmin, vmax] to a Jet-like color.
// clamp(x) is assumed to be a helper that limits x to [0, 1].
COLOUR CommonUtils::getColorMapJet(double v, double vmin, double vmax)
{
    COLOUR c = {1.0, 1.0, 1.0}; // white
    double dv;

    if (v < vmin)
        v = vmin;
    if (v > vmax)
        v = vmax;
    dv = vmax - vmin;           // must be non-zero

    // hot to cold
    // if (v < (vmin + 0.25 * dv)) {
    //     c.r = 0;
    //     c.g = 4 * (v - vmin) / dv;
    // } else if (v < (vmin + 0.75 * dv)) {
    //     c.r = 2 * (v - vmin - 0.25 * dv) / dv;
    //     c.b = 1 + 2 * (vmin + 0.25 * dv - v) / dv;
    // } else {
    //     c.g = 1 + 4 * (vmin + 0.75 * dv - v) / dv;
    //     c.b = 0;
    // }

    // colormap jet: t runs from -1 (v = vmin, blue) to +1 (v = vmax, red)
    double t = ((v - vmin) / dv) * 2 - 1.0;
    c.r = clamp(1.5 - std::abs(2.0 * t - 1.0));
    c.g = clamp(1.5 - std::abs(2.0 * t));
    c.b = clamp(1.5 - std::abs(2.0 * t + 1.0));

    return (c);
}
```
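To make near pixels red and far pixels blue, the normalized value can simply be flipped before the Jet formula is applied. The sketch below does this for a raw depth sample and returns 8-bit RGB. It is a self-contained variant under stated assumptions: depth 0 is treated as invalid and drawn black, and `clamp01` and `depthToJetRgb` are illustrative names rather than part of the code above.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// Limit x to the range [0, 1].
inline double clamp01(double x) {
    return std::min(1.0, std::max(0.0, x));
}

// Map a depth sample to an 8-bit Jet color: near is red, far is blue.
// Depth 0 (assumed invalid) and an empty range both yield black.
inline std::array<std::uint8_t, 3> depthToJetRgb(std::uint16_t depthMm,
                                                 std::uint16_t nearMm,
                                                 std::uint16_t farMm) {
    std::array<std::uint8_t, 3> rgb = {{0, 0, 0}};
    if (depthMm == 0 || farMm <= nearMm) return rgb;

    const double lo = nearMm;
    const double hi = farMm;
    const double v = std::min(std::max(static_cast<double>(depthMm), lo), hi);

    // t is +1 at the near limit and -1 at the far limit.
    const double t = 1.0 - 2.0 * (v - lo) / (hi - lo);
    const double r = clamp01(1.5 - std::abs(2.0 * t - 1.0));
    const double g = clamp01(1.5 - std::abs(2.0 * t));
    const double b = clamp01(1.5 - std::abs(2.0 * t + 1.0));

    rgb[0] = static_cast<std::uint8_t>(std::lround(r * 255.0));
    rgb[1] = static_cast<std::uint8_t>(std::lround(g * 255.0));
    rgb[2] = static_cast<std::uint8_t>(std::lround(b * 255.0));
    return rgb;
}
```

Calling `depthToJetRgb(d, 350, 1400)` for every pixel would color a Carmine 1.09 depth map over its full working range; samples outside the range are clamped to the end colors.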