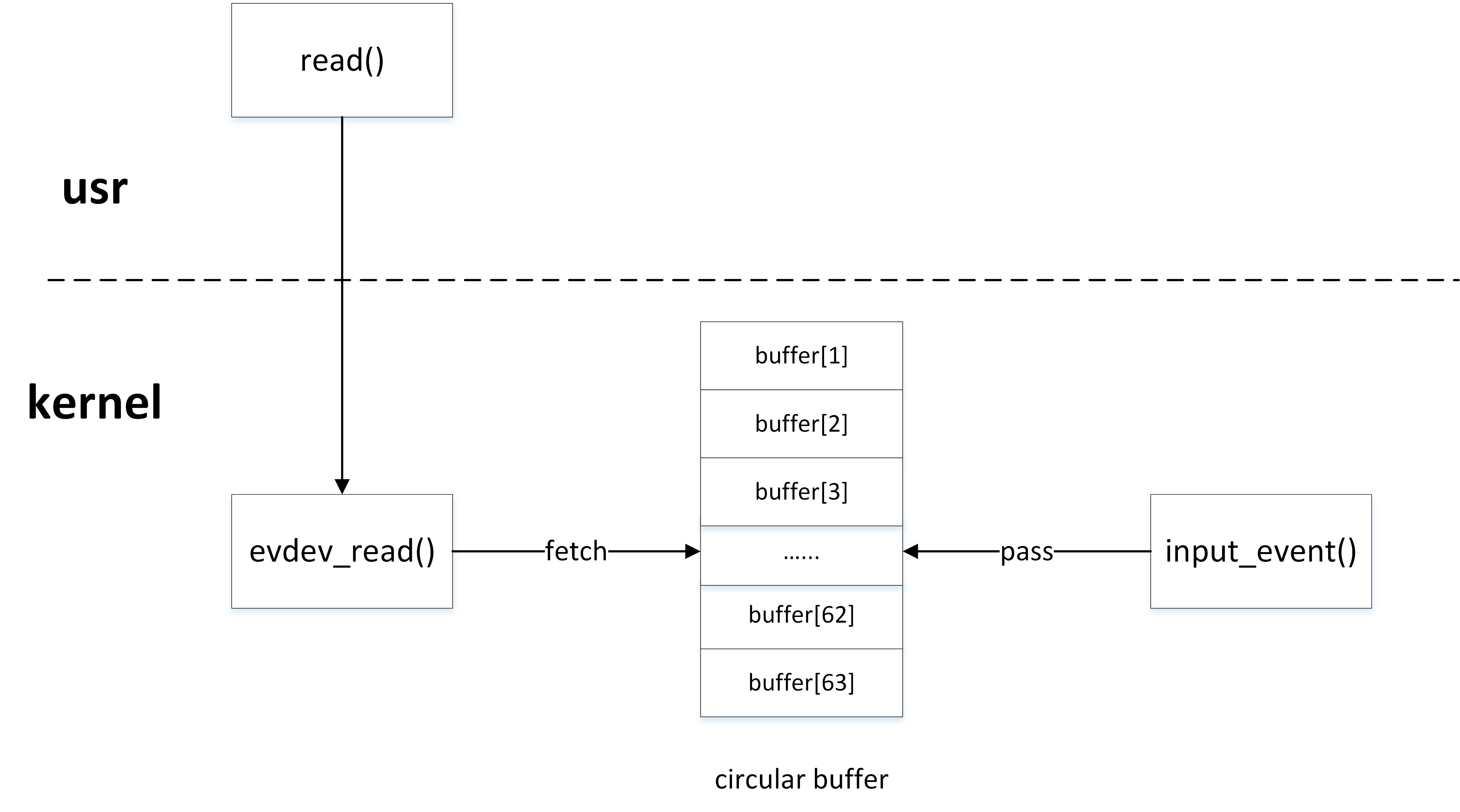

In the event processing layer, the evdev_client structure defines a ring buffer: a circular queue implemented as an array, which caches the input_event events that the kernel driver reports to user space.

struct evdev_client {

unsigned int head; // Head Pointer

unsigned int tail; // Tail pointer

unsigned int packet_head; // Packet pointer

spinlock_t buffer_lock;

struct fasync_struct *fasync;

struct evdev *evdev;

struct list_head node;

unsigned int clk_type;

bool revoked;

unsigned long *evmasks[EV_CNT];

unsigned int bufsize; // Circular Queue Size

struct input_event buffer[]; // Circular Queue Array

};

The evdev_client object maintains three offsets: head, tail, and packet_head. head and tail serve as the head and tail pointers of the circular queue and record the enqueue and dequeue offsets. So what does packet_head do?

packet_head

When a kernel driver handles one input, it may report one or more input_event events, followed by a synchronization event (EV_SYN/SYN_REPORT) to indicate that this input is complete. The head pointer records the enqueue offset of the buffer in units of single input_event events, while the packet pointer packet_head records the enqueue offset in units of "packets" (one or more input_event events).

The working mechanism of ring buffers

- Enqueue algorithm:

head++;

head &= bufsize - 1;

- Dequeue algorithm:

tail++;

tail &= bufsize - 1;

- Queue full condition:

head == tail

- Queue empty condition:

packet_head == tail
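
The index arithmetic above can be tried out in user space. The following is a minimal sketch, not kernel code: the struct ring type, the RING_SIZE constant, and the ring_push(), ring_commit(), and ring_pop() helpers are hypothetical names introduced for illustration, and the buffer holds plain ints instead of input_event structures.

```c
#include <stdbool.h>

#define RING_SIZE 8u /* hypothetical size; must be a power of 2 */

struct ring {
	unsigned int head;        /* next slot to write */
	unsigned int tail;        /* next slot to read */
	unsigned int packet_head; /* end of the last complete packet */
	int buffer[RING_SIZE];
};

/* Enqueue: store the value, then advance head with wrap-around. */
static void ring_push(struct ring *r, int value)
{
	r->buffer[r->head++] = value;
	r->head &= RING_SIZE - 1;
}

/* Mark everything written so far as one complete packet. */
static void ring_commit(struct ring *r)
{
	r->packet_head = r->head;
}

/* Dequeue: only data in front of packet_head is visible to the reader. */
static bool ring_pop(struct ring *r, int *value)
{
	if (r->packet_head == r->tail)
		return false; /* empty: no complete packet to read */

	*value = r->buffer[r->tail++];
	r->tail &= RING_SIZE - 1;
	return true;
}
```

Values pushed with ring_push() stay invisible to ring_pop() until ring_commit() moves packet_head, which is why the empty condition compares packet_head, not head, against tail.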

"Modulo" and "Bitwise AND"

To keep the head and tail pointers from running past either end of the array, and to make the queue's element slots reusable, the usual enqueue and dequeue algorithms of a circular queue use the modulo (remainder) operation:

head = (head + 1) % bufsize; // enqueue

tail = (tail + 1) % bufsize; // dequeue

To avoid the computationally expensive modulo operation and make the kernel run more efficiently, the ring buffer of the input subsystem uses a bitwise AND instead, which requires bufsize to be a power of 2. The value of bufsize comes from evdev_compute_buffer_size(), which takes the larger of 64 and 8 times the device's events-per-packet hint and rounds it up to a power of 2, so it meets the requirement of the bitwise AND operation.
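
The equivalence of the two operations for power-of-2 sizes can be checked in user space. This is a minimal sketch with hypothetical helper names: next_mod(), next_mask(), and round_up_pow2() are introduced here for illustration, and round_up_pow2() only mimics the idea of rounding a size up to a power of 2, it is not the kernel's implementation.

```c
/* Advance an index with the modulo operation (works for any size > 0). */
static unsigned int next_mod(unsigned int idx, unsigned int size)
{
	return (idx + 1) % size;
}

/* Advance an index with bitwise AND (valid only if size is a power of 2). */
static unsigned int next_mask(unsigned int idx, unsigned int size)
{
	return (idx + 1) & (size - 1);
}

/* Round n up to the nearest power of 2 (assumes 0 < n <= 2^31). */
static unsigned int round_up_pow2(unsigned int n)
{
	unsigned int p = 1;

	while (p < n)
		p <<= 1;
	return p;
}
```

With a size of 64 the mask is 63 (binary 111111), so (63 + 1) & 63 gives 0, the same as (63 + 1) % 64. With a size that is not a power of 2, such as 6, the two results differ, which is why the power-of-2 requirement matters.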

Construction and initialization of ring buffers

When the user layer opens the input device node through the open() function, the calling procedure is as follows:

open() -> sys_open() -> evdev_open()

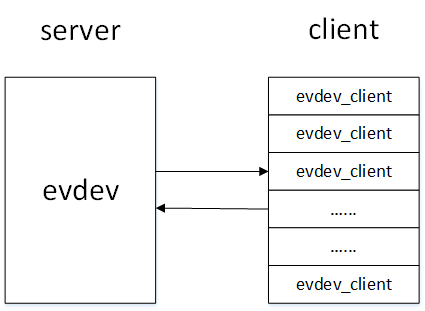

The evdev_client object is constructed and initialized in the evdev_open() function. Each open of an input device node gets its own evdev_client object in the kernel. These evdev_client objects are registered on the evdev object's kernel linked list through the evdev_attach_client() function [1].

Next, we specifically analyze the evdev_open() function:

static int evdev_open(struct inode *inode, struct file *file)

{

struct evdev *evdev = container_of(inode->i_cdev, struct evdev, cdev);

// 1. Calculate the ring buffer size bufsize and evdev_client object size

unsigned int bufsize = evdev_compute_buffer_size(evdev->handle.dev);

unsigned int size = sizeof(struct evdev_client) +

bufsize * sizeof(struct input_event);

struct evdev_client *client;

int error;

// 2. Allocate Kernel Space

client = kzalloc(size, GFP_KERNEL | __GFP_NOWARN);

if (!client)

client = vzalloc(size);

if (!client)

return -ENOMEM;

client->bufsize = bufsize;

spin_lock_init(&client->buffer_lock);

client->evdev = evdev;

// 3. Register on the kernel linked list

evdev_attach_client(evdev, client);

error = evdev_open_device(evdev);

if (error)

goto err_free_client;

file->private_data = client;

nonseekable_open(inode, file);

return 0;

err_free_client:

evdev_detach_client(evdev, client);

kvfree(client);

return error;

}

In evdev_open() we see the evdev_client object being constructed and then registered on the kernel linked list, but where is it initialized? kzalloc() actually zero-initializes the memory by passing the __GFP_ZERO flag while allocating space:

static inline void *kzalloc(size_t size, gfp_t flags)

{

return kmalloc(size, flags | __GFP_ZERO);

}
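
The same pattern, a single zero-filled allocation that holds both the header fields and the trailing array, can be sketched in user space with calloc(). The struct event and struct client types and the client_alloc() helper below are hypothetical, simplified stand-ins, not the kernel's definitions.

```c
#include <stdlib.h>

struct event { /* simplified stand-in for struct input_event */
	unsigned short type;
	unsigned short code;
	int value;
};

struct client {
	unsigned int head;
	unsigned int tail;
	unsigned int packet_head;
	unsigned int bufsize;
	struct event buffer[]; /* flexible array member */
};

/* Allocate the header plus bufsize events in one zero-filled block. */
static struct client *client_alloc(unsigned int bufsize)
{
	size_t size = sizeof(struct client) + bufsize * sizeof(struct event);
	struct client *c = calloc(1, size);

	if (!c)
		return NULL;
	c->bufsize = bufsize; /* every other field is already zero */
	return c;
}
```

Because the block is zero-filled, head, tail, and packet_head all start at 0, so the queue begins in the empty state without any explicit initialization.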

Producer/Consumer Model

The kernel driver and the user program form a typical producer/consumer model. The kernel driver generates input_event events and writes them to the ring buffer through the input_event() function. The user program obtains input_event events from the ring buffer through the read() function.
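
To see how the two sides interact, including what happens when the producer outruns the consumer, here is a user-space sketch modeled on the kernel functions discussed in the following sections. The struct sim_event and struct sim_client types, the SIM_ constants, and the produce() and consume() helpers are hypothetical names; locking, timestamps, and signal delivery are left out.

```c
#include <stdbool.h>

#define SIM_BUFSIZE     8u /* hypothetical size; must be a power of 2 */
#define SIM_EV_SYN      0  /* same value as EV_SYN */
#define SIM_EV_KEY      1  /* same value as EV_KEY */
#define SIM_SYN_REPORT  0  /* same value as SYN_REPORT */
#define SIM_SYN_DROPPED 3  /* same value as SYN_DROPPED */

struct sim_event {
	unsigned short type;
	unsigned short code;
	int value;
};

struct sim_client {
	unsigned int head, tail, packet_head;
	struct sim_event buffer[SIM_BUFSIZE];
};

/* Producer side: enqueue one event and handle overflow. */
static void produce(struct sim_client *c, const struct sim_event *ev)
{
	c->buffer[c->head++] = *ev;
	c->head &= SIM_BUFSIZE - 1;

	if (c->head == c->tail) {
		/* Full: keep only a SYN_DROPPED marker plus the newest event. */
		c->tail = (c->head - 2) & (SIM_BUFSIZE - 1);
		c->buffer[c->tail].type = SIM_EV_SYN;
		c->buffer[c->tail].code = SIM_SYN_DROPPED;
		c->buffer[c->tail].value = 0;
		c->packet_head = c->tail;
	}

	if (ev->type == SIM_EV_SYN && ev->code == SIM_SYN_REPORT)
		c->packet_head = c->head; /* packet complete, make it readable */
}

/* Consumer side: returns false when no complete packet is pending. */
static bool consume(struct sim_client *c, struct sim_event *out)
{
	if (c->packet_head == c->tail)
		return false;

	*out = c->buffer[c->tail++];
	c->tail &= SIM_BUFSIZE - 1;
	return true;
}
```

In this sketch, a reader that falls behind sees a SYN_DROPPED marker followed by the newest event once the next SYN_REPORT arrives, rather than a silently truncated stream.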

Producer of Ring Buffers

As the producer, the kernel driver reports an event through input_event(), which eventually calls the __pass_event() function to write the event to the ring buffer:

static void __pass_event(struct evdev_client *client,

const struct input_event *event)

{

// Store the input_event event in the buffer, then advance the queue head to the next element slot

client->buffer[client->head++] = *event;

client->head &= client->bufsize - 1;

// When the head and tail of the queue are equal, the buffer space is full

if (unlikely(client->head == client->tail)) {

/*

* This effectively "drops" all unconsumed events, leaving

* EV_SYN/SYN_DROPPED plus the newest event in the queue.

*/

client->tail = (client->head - 2) & (client->bufsize - 1);

client->buffer[client->tail].time = event->time;

client->buffer[client->tail].type = EV_SYN;

client->buffer[client->tail].code = SYN_DROPPED;

client->buffer[client->tail].value = 0;

client->packet_head = client->tail;

}

// When an EV_SYN/SYN_REPORT synchronization event is encountered, the packet_head moves to the queue head position

if (event->type == EV_SYN && event->code == SYN_REPORT) {

client->packet_head = client->head;

kill_fasync(&client->fasync, SIGIO, POLL_IN);

}

}

Consumer of Ring Buffers

When a user program, as the consumer, reads an input device node through the read() function, the kernel eventually calls the evdev_fetch_next_event() function to read an input_event event from the ring buffer:

static int evdev_fetch_next_event(struct evdev_client *client,

struct input_event *event)

{

int have_event;

spin_lock_irq(&client->buffer_lock);

// Check whether the buffer holds an unread input_event event from a complete packet

have_event = client->packet_head != client->tail;

if (have_event) {

// Read one input_event event from the buffer, then advance tail to the next element slot

*event = client->buffer[client->tail++];

client->tail &= client->bufsize - 1;

if (client->use_wake_lock &&

client->packet_head == client->tail)

wake_unlock(&client->wake_lock);

}

spin_unlock_irq(&client->buffer_lock);

return have_event;

}

[1] The client_list member of struct evdev is the head of a kernel linked list, through which all evdev_client objects attached to it can be accessed.